Contributing & Developer Notes

Pull Requests, Bug Reports, and all Contributions are welcome, encouraged, and appreciated! Please use the appropriate issue or pull request template when making a contribution to help the maintainers get it merged quickly.

We make use of the GitHub Discussions page to go over potential features to add. Please feel free to stop by if you are looking for something to develop or have an idea for a useful feature!

When submitting a PR, please mark your PR with the “PR Ready for Review” label when you are finished making changes so that the GitHub Actions bots can work their magic!

Developer Install

To contribute to the astartes source code, start by forking and then cloning the repository (i.e. git clone git@github.com:YourUsername/astartes.git), and then run pip install -e .[dev] inside the repository. This will set you up with all the dependencies required to run astartes and to conform to our formatting standards (black and isort), which you can configure to run automatically in VSCode.

Warning: Windows (PowerShell) and macOS Catalina or newer (zsh) require double quotes around the [] characters (i.e. pip install "astartes[dev]").

Version Checking

astartes uses pyproject.toml to specify all metadata, but the version is also specified in astartes/__init__.py (via __version__) for backwards compatibility with Python 3.7.

To check which version of astartes you have installed, you can run python -c "import astartes; print(astartes.__version__)" on Python 3.7 or python -c "from importlib.metadata import version; print(version('astartes'))" on Python 3.8 or newer.
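
The two one-liners above can be combined into a single check that works on either Python version. This is only a sketch: the helper name installed_version is hypothetical and not part of astartes.

```python
import importlib
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it cannot be found.

    Hypothetical helper for illustration; it is not part of astartes.
    """
    try:
        # Python 3.8+: read the version from the installed package metadata
        from importlib.metadata import PackageNotFoundError, version
    except ImportError:
        # Python 3.7: importlib.metadata does not exist, use __version__ below
        pass
    else:
        try:
            return version(package)
        except PackageNotFoundError:
            pass  # no metadata found, try the module attribute instead

    try:
        module = importlib.import_module(package)
    except ImportError:
        return None
    return getattr(module, "__version__", None)
```

Calling print(installed_version("astartes")) prints the version string when astartes is installed and None otherwise.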

Testing

All of the tests in astartes are written using the built-in Python unittest module (to allow running without pytest), but we highly recommend using pytest.

To execute the tests from the astartes repository, simply type pytest after running the developer install (or alternatively, pytest -v for more helpful output).

On GitHub, we use Actions to run the tests on every Pull Request and on a nightly basis (look in .github/workflows for more information).

These tests include unit tests, functional tests, and regression tests.
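
As a rough illustration of the style described above, a test written against unittest can be collected by pytest without any changes. The function split_indices below is a hypothetical stand-in written only for this sketch; it is not an astartes function.

```python
import unittest


def split_indices(n_samples: int, test_size: float):
    """Hypothetical helper (not part of astartes): split range(n_samples) in order."""
    n_test = round(n_samples * test_size)
    indices = list(range(n_samples))
    return indices[: n_samples - n_test], indices[n_samples - n_test :]


class TestSplitIndices(unittest.TestCase):
    """Plain unittest test case; pytest discovers and runs it as-is."""

    def test_sizes(self):
        train, test = split_indices(8, 0.25)
        self.assertEqual(len(train), 6)
        self.assertEqual(len(test), 2)

    def test_no_overlap(self):
        train, test = split_indices(8, 0.25)
        self.assertFalse(set(train) & set(test))
```

Saved in a file whose name starts with test_, this runs with either python -m unittest or pytest.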

Adding New Samplers

A new sampler should extend the abstract base class defined in abstract_sampler.py.

Each subclass should override the _sample method with its own algorithm for data partitioning and, optionally, the _before_sample method to perform any data validation.

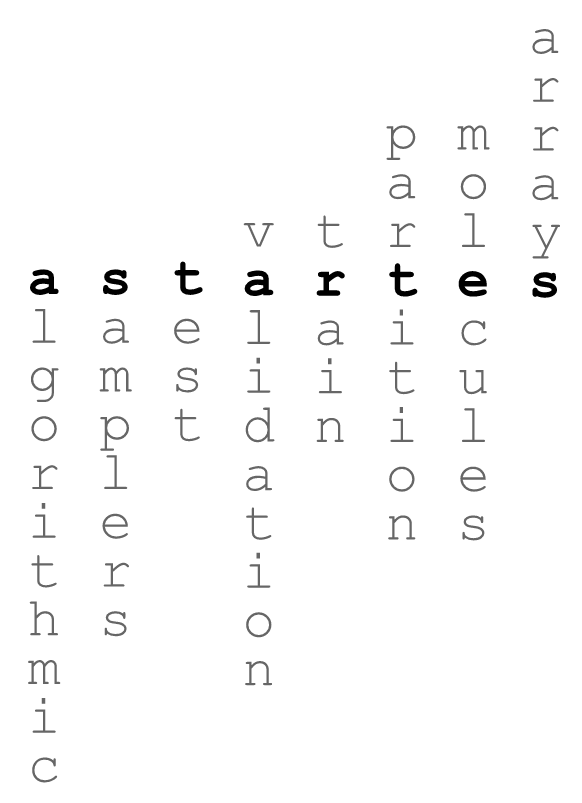

All samplers in astartes are classified as one of two types: extrapolative or interpolative.

Extrapolative samplers work by clustering data into groups (which are then partitioned into train/validation/test to enforce extrapolation), whereas interpolative samplers provide an exact order in which samples should be moved into the training set.

When actually implemented, this means that extrapolative samplers should set the self._samples_clusters attribute and interpolative samplers should set the self._samples_idxs attribute.
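
The sketch below illustrates the two sampler types with toy subclasses. SketchAbstractSampler is a simplified stand-in written for this example, and its constructor arguments and call order are assumptions; check the real class in abstract_sampler.py before subclassing it. ReverseOrder and SignClusters are likewise hypothetical.

```python
from abc import ABC, abstractmethod


class SketchAbstractSampler(ABC):
    """Simplified stand-in for the base class in abstract_sampler.py.

    The constructor signature and call order are assumptions for illustration.
    """

    def __init__(self, X, y=None, labels=None, configs=None):
        self.X = X
        self.y = y
        self.labels = labels
        self._configs = configs or {}
        self._samples_idxs = []  # set by interpolative samplers
        self._samples_clusters = []  # set by extrapolative samplers
        self._before_sample()
        self._sample()

    def _before_sample(self):
        """Optional hook for data validation; does nothing by default."""

    @abstractmethod
    def _sample(self):
        """Subclasses implement their partitioning algorithm here."""


class ReverseOrder(SketchAbstractSampler):
    """Toy interpolative sampler: samples enter the training set last-to-first."""

    def _before_sample(self):
        if len(self.X) == 0:
            raise ValueError("X must contain at least one sample")

    def _sample(self):
        self._samples_idxs = list(range(len(self.X) - 1, -1, -1))


class SignClusters(SketchAbstractSampler):
    """Toy extrapolative sampler: cluster samples by the sign of the first feature."""

    def _sample(self):
        self._samples_clusters = [0 if row[0] < 0 else 1 for row in self.X]
```

With X = [[-1.0], [2.0], [-3.0], [4.0]], ReverseOrder(X)._samples_idxs is [3, 2, 1, 0] and SignClusters(X)._samples_clusters is [0, 1, 0, 1].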

A new sampler can be as simple as a passthrough to another train_test_split, or it can be an original implementation that results in X and y being split into two lists. Take a look at astartes/samplers/interpolation/random_split.py for a basic example!

After the sampler has been implemented, add it to __init__.py in astartes/samplers and it will automatically be unit tested. Additional unit tests to verify that hyperparameters can be properly passed, etc. are also recommended.

For historical reasons, and as a guide for any developers who would like to add new samplers, below is a running list of samplers which have been considered for addition to astartes but ultimately not added for various reasons.

Not Implemented Sampling Algorithms

| Sampler Name | Reasoning | Relevant Link(s) |
|---|---|---|
| D-Optimal | Requires a-priori knowledge of the test and train size which does not fit in the | The Wikipedia article for optimal design does a good job explaining why this is difficult, and points at some potential alternatives. |
| Duplex | Requires knowing test and train size before execution, and can only partition data into two sets which would make it incompatible with | This implementation in R includes helpful references and a reference implementation. |

Adding New Featurization Schemes

All of the sampling methods implemented in astartes accept arbitrary arrays of numbers and return the sampled groups (with the exception of Scaffold.py). If you have an existing featurization scheme (i.e. take an arbitrary input and turn it into an array of numbers), we would be thrilled to include it in astartes.

Adding a new interface should take on this format:

    import numpy as np

    from astartes import train_test_split


    def train_test_split_INTERFACE(
        INTERFACE_input,
        INTERFACE_ARGS,
        y: np.array = None,
        labels: np.array = None,
        test_size: float = 0.25,
        train_size: float = 0.75,
        splitter: str = 'random',
        hopts: dict = {},
        INTERFACE_hopts: dict = {},
    ):
        # turn the INTERFACE_input into an input X
        # based on INTERFACE ARGS where INTERFACE_hopts
        # specifies additional behavior
        X = []

        # call train test split with this input
        return train_test_split(
            X,
            y=y,
            labels=labels,
            test_size=test_size,
            train_size=train_size,
            splitter=splitter,
            hopts=hopts,
        )
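
As one possible illustration of the "turn the INTERFACE_input into an input X" step, the sketch below converts strings into fixed-length count vectors. The function featurize_strings and its alphabet argument are hypothetical and exist only for this example; they are not part of astartes.

```python
from collections import Counter
from typing import List, Sequence


def featurize_strings(inputs: Sequence[str], alphabet: str = "ACGT") -> List[List[float]]:
    """Hypothetical featurizer: count how often each alphabet character occurs."""
    X = []
    for text in inputs:
        counts = Counter(text)  # characters missing from `text` count as 0
        X.append([float(counts[char]) for char in alphabet])
    return X
```

Here featurize_strings(["AAC", "GT"]) gives [[2.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]. Inside an interface function, rows like these (converted to an array if needed) would take the place of X = [] before the call to train_test_split.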

If possible, we would like to also add an example Jupyter Notebook with any new interface to demonstrate to new users how it functions. See our other examples in the examples directory.

Contact @JacksonBurns if you need assistance adding an existing workflow to astartes. If this featurization scheme requires additional dependencies to function, we may add it as an additional extra package in the same way that molecules is installed.

The train_val_test_split Function

train_val_test_split is the workhorse function of astartes.

It is responsible for instantiating the sampling algorithm, partitioning the data into training, validation, and testing, and then returning the requested results while also keeping an eye on data types.

Under the hood, train_test_split is just calling train_val_test_split with val_size set to 0.0.

For more information on how it works, check out the inline documentation in astartes/main.py.
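
The delegation described above can be sketched with simplified stand-ins. The functions below are hypothetical and only shuffle and slice a list; they are not the astartes implementation, which also instantiates a sampler and handles data types.

```python
import random


def sketch_train_val_test_split(
    X, train_size=0.8, val_size=0.1, test_size=0.1, random_state=0
):
    """Simplified stand-in: shuffle the indices, then cut them into three groups."""
    if abs(train_size + val_size + test_size - 1.0) > 1e-9:
        raise ValueError("train_size, val_size, and test_size must sum to 1.0")
    idxs = list(range(len(X)))
    random.Random(random_state).shuffle(idxs)
    n_train = round(len(X) * train_size)
    n_val = round(len(X) * val_size)
    train = [X[i] for i in idxs[:n_train]]
    val = [X[i] for i in idxs[n_train : n_train + n_val]]
    test = [X[i] for i in idxs[n_train + n_val :]]
    return train, val, test


def sketch_train_test_split(X, train_size=0.75, test_size=0.25, random_state=0):
    """Two-way split expressed as a three-way split with an empty validation set."""
    train, _empty_val, test = sketch_train_val_test_split(
        X,
        train_size=train_size,
        val_size=0.0,
        test_size=test_size,
        random_state=random_state,
    )
    return train, test
```

Keeping the two-way split as a thin wrapper means the partitioning logic lives in one place, which fits the DRY approach of the project.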

Development Philosophy

The developers of astartes prioritize (1) reproducibility, (2) flexibility, and (3) maintainability.

- All versions of astartes 1.x should produce the same results across all platforms, so we have thorough unit and regression testing run on a continuous basis.
- We specify as few dependencies as possible with the loosest possible dependency requirements, which allows integrating astartes with other tools more easily.
  - Dependencies which introduce a lot of requirements and/or specific versions of requirements are shuffled into the extras_require to avoid weighing down the main package.
  - Compatibility with all versions of modern Python is achieved by not tightly specifying version numbers as well as by regression testing across all versions.
- We follow DRY (Don’t Repeat Yourself) principles to avoid code duplication and decrease maintenance burden, have near-perfect test coverage, and enforce consistent formatting style in the source code.
  - Inline comments are critical for maintainability: at the time of writing, astartes has 1 comment line for every 2 lines of source code.

JOSS Branch

The JOSS paper corresponding to astartes is stored in this repository on a separate branch. You can find paper.md on the aptly named joss-paper branch.

Note for Maintainers: To push changes from the main branch into the joss-paper branch, run the Update JOSS Branch workflow.
