+

+

\ No newline at end of file

diff --git a/docs/header.html b/docs/header.html

index be1ffa28b..019931324 100644

--- a/docs/header.html

+++ b/docs/header.html

@@ -1,9 +1,9 @@

diff --git a/docs/index.md b/docs/index.md

index 3d2824f13..21ca27339 100644

--- a/docs/index.md

+++ b/docs/index.md

@@ -1,7 +1,7 @@

---

layout: landing

title: 'LangTest Deliver Safe & Effective Language Models'

-excerpt: 60+ Test Types for Comparing LLM & NLP Models on Accuracy, Bias, Fairness, Robustness & More

+excerpt: 100+ Test Types for Comparing LLM & NLP Models on Accuracy, Bias, Fairness, Robustness & More

permalink: /

header: true

article_header:

@@ -11,7 +11,7 @@ article_header:

url: /docs/pages/docs/install

- text: 'Star on GitHub'

type: full_white

- url: https://github.com/JohnSnowLabs/langtest

+ url: https://github.com/Pacific-AI-Corp/langtest

- text: ' Slack'

type: trans

url: https://spark-nlp.slack.com/archives/C051A5XG6CU

diff --git a/docs/pages/docs/contribute.md b/docs/pages/docs/contribute.md

index 1dd748fae..88e81b50c 100644

--- a/docs/pages/docs/contribute.md

+++ b/docs/pages/docs/contribute.md

@@ -8,10 +8,10 @@ permalink: /docs/pages/docs/contribute

modify_date: "2019-05-16"

---

-We're thrilled that you're interested in contributing to our project. Your contributions can make a significant impact, and we appreciate your support in making [LangTest](https://github.com/JohnSnowLabs/langtest) even better.

+We're thrilled that you're interested in contributing to our project. Your contributions can make a significant impact, and we appreciate your support in making [LangTest](https://github.com/Pacific-AI-Corp/langtest) even better.

Imagine you've identified an area in LangTest that could use improvement or a new feature. Here's a step-by-step guide on how to make a valid contribution:

-Prior to proceeding, ensure that you have meticulously reviewed each step outlined in the [Contribution file](https://github.com/JohnSnowLabs/langtest/blob/add-onboarding-materials/CONTRIBUTING.md). This preparation will equip you with the necessary knowledge to confidently make changes within your designated branch.

+Prior to proceeding, ensure that you have meticulously reviewed each step outlined in the [Contribution file](https://github.com/Pacific-AI-Corp/langtest/blob/add-onboarding-materials/CONTRIBUTING.md). This preparation will equip you with the necessary knowledge to confidently make changes within your designated branch.

> Let's suppose you're eager to add a robustness test.

diff --git a/docs/pages/docs/getting_started.md b/docs/pages/docs/getting_started.md

index 7a3fc5d05..b920d2452 100644

--- a/docs/pages/docs/getting_started.md

+++ b/docs/pages/docs/getting_started.md

@@ -39,7 +39,7 @@ LangTest, an open-source Python library, addresses this by automatically generat

Show your support by giving us a star on GitHub, it's the fuel that keeps our journey of innovation and progress in motion.

{:.btn-block}

- [⭐ Star on GitHub](https://github.com/JohnSnowLabs/langtest){:.button.button--info.button--rounded.button--md}

+ [⭐ Star on GitHub](https://github.com/Pacific-AI-Corp/langtest){:.button.button--info.button--rounded.button--md}

@@ -49,7 +49,7 @@ LangTest, an open-source Python library, addresses this by automatically generat

If you thrive on interactive education, our blogs are your gateway to immersive knowledge.

{:.btn-block}

- [📃 Blogs](https://www.johnsnowlabs.com/responsible-ai-blog/){:.button.button--info.button--rounded.button--md}

+ [📃 Blogs](https://pacific.ai/healthcare-ai-governace-library/){:.button.button--info.button--rounded.button--md}

diff --git a/docs/pages/docs/langtest_versions/latest_release.md b/docs/pages/docs/langtest_versions/latest_release.md

index 10b8cf05f..601a6f2eb 100644

--- a/docs/pages/docs/langtest_versions/latest_release.md

+++ b/docs/pages/docs/langtest_versions/latest_release.md

@@ -490,32 +490,32 @@ The website has been updated to feature new content emphasizing Databricks integ

## What's Changed

-* Websites Changes in v2.1.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1006

-* updates web pages by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1032

-* adding workflow for github pages by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1051

-* websites updates with fixes by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1079

-* Website Updates for 2.4.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1126

-* Fix/basic setup within datrabricks using azure openai by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1128

-* Feature/implement accuracy drop tests on robustness and bias by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1129

-* Feature/add support for chat and instruct model types by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1131

-* updated: model_kwargs handling for evaluation model by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1133

-* updated: acclerate and spacy packages by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1135

-* Feature/enhance harness report to include detailed score counts and grouped results by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1132

-* Feature/random masking on images tests by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1138

-* Unit testing/add new unit tests to enhance test coverage and reliability by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1140

-* added new overlay classes for enhanced image robustness by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1141

-* Annotations/improve the type annotation for config by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1143

-* fix: enhance model loading logic and update dependencies for by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1145

-* fix: improve model_report function to handle numeric values and initi… by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1146

-* Feature/support for loading datasets from dlt within databricks by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1148

-* feat: update dependency version constraints in pyproject.toml by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1149

-* feat: enhance DegradationAnalysis to support question-answering task by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1153

-* Chore/final website updates for 2.5.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1150

-* Chore/final website updates by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1155

-* Release/2.5.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1144

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.4.0...2.5.0

+* Websites Changes in v2.1.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1006

+* updates web pages by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1032

+* adding workflow for github pages by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1051

+* websites updates with fixes by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1079

+* Website Updates for 2.4.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1126

+* Fix/basic setup within datrabricks using azure openai by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1128

+* Feature/implement accuracy drop tests on robustness and bias by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1129

+* Feature/add support for chat and instruct model types by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1131

+* updated: model_kwargs handling for evaluation model by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1133

+* updated: acclerate and spacy packages by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1135

+* Feature/enhance harness report to include detailed score counts and grouped results by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1132

+* Feature/random masking on images tests by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1138

+* Unit testing/add new unit tests to enhance test coverage and reliability by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1140

+* added new overlay classes for enhanced image robustness by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1141

+* Annotations/improve the type annotation for config by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1143

+* fix: enhance model loading logic and update dependencies for by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1145

+* fix: improve model_report function to handle numeric values and initi… by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1146

+* Feature/support for loading datasets from dlt within databricks by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1148

+* feat: update dependency version constraints in pyproject.toml by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1149

+* feat: enhance DegradationAnalysis to support question-answering task by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1153

+* Chore/final website updates for 2.5.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1150

+* Chore/final website updates by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1155

+* Release/2.5.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1144

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.4.0...2.5.0

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_0_0.md b/docs/pages/docs/langtest_versions/release_notes_1_0_0.md

index 44a384306..d32d50a2e 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_0_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_0_0.md

@@ -50,14 +50,14 @@ h.generate().run().report()

* [LangTest: Documentation](https://langtest.org/docs/pages/docs/install)

* [LangTest: Notebooks](https://langtest.org/docs/pages/tutorials/tutorials)

* [LangTest: Test Types](https://langtest.org/docs/pages/tests/test)

-* [LangTest: GitHub Repo](https://github.com/JohnSnowLabs/langtest)

+* [LangTest: GitHub Repo](https://github.com/Pacific-AI-Corp/langtest)

## ❤️ Community support

* [Slack](https://www.johnsnowlabs.com/slack-redirect/) For live discussion with the LangTest community, join the `#langtest` channel

-* [GitHub](https://github.com/JohnSnowLabs/langtest/tree/main) For bug reports, feature requests, and contributions

-* [Discussions](https://github.com/JohnSnowLabs/langtest/discussions) To engage with other community members, share ideas, and show off how you use NLP Test!

+* [GitHub](https://github.com/Pacific-AI-Corp/langtest/tree/main) For bug reports, feature requests, and contributions

+* [Discussions](https://github.com/Pacific-AI-Corp/langtest/discussions) To engage with other community members, share ideas, and show off how you use NLP Test!

We would love to have you join the mission :point_right: open an issue, a PR, or give us some feedback on features you'd like to see! :raised_hands:

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_10_0.md b/docs/pages/docs/langtest_versions/release_notes_1_10_0.md

index 81f1f78e4..d7534473d 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_10_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_10_0.md

@@ -37,7 +37,7 @@ We're thrilled to announce the latest release of LangTest, introducing remarkabl

## 🔥 Key Enhancements:

### 🚀Implementing and Evaluating RAG with LlamaIndex and Langtest

- [](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/RAG/RAG_OpenAI.ipynb)

+ [](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/RAG/RAG_OpenAI.ipynb)

LangTest seamlessly integrates LlamaIndex, focusing on two main aspects: constructing the RAG with LlamaIndex and evaluating its performance. The integration involves utilizing LlamaIndex's generate_question_context_pairs module to create relevant question and context pairs, forming the foundation for retrieval and response evaluation in the RAG system.

@@ -60,7 +60,7 @@ retriever_evaluator.display_results()

```

### 📚Grammar Testing in Evaluating and Enhancing NLP Models

- [](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/test-specific-notebooks/Grammar_Demo.ipynb)

+ [](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/test-specific-notebooks/Grammar_Demo.ipynb)

Grammar Testing is a key feature in LangTest's suite of evaluation strategies, emphasizing the assessment of a language model's proficiency in contextual understanding and nuance interpretation. By creating test cases that paraphrase original sentences, the goal is to gauge the model's ability to comprehend and interpret text, thereby enriching insights into its contextual mastery.

@@ -121,7 +121,7 @@ HealthSearchQA, a new free-response dataset of medical questions sought online,

Users can effortlessly pass any Hugging Face model object into the LangTest harness and run a variety of tasks. This feature streamlines the process of evaluating and comparing different models, making it easier for users to leverage LangTest's comprehensive suite of tools with the wide array of models available on Hugging Face.

-

+

## 🚀 New LangTest Blogs:

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_1_0.md b/docs/pages/docs/langtest_versions/release_notes_1_1_0.md

index 51a509a0c..e7a8da900 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_1_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_1_0.md

@@ -44,7 +44,7 @@ modify_date: 2023-08-11

* [LangTest: Documentation](https://langtest.org/docs/pages/docs/install)

* [LangTest: Notebooks](https://langtest.org/docs/pages/tutorials/tutorials)

* [LangTest: Test Types](https://langtest.org/docs/pages/tests/test)

-* [LangTest: GitHub Repo](https://github.com/JohnSnowLabs/langtest)

+* [LangTest: GitHub Repo](https://github.com/Pacific-AI-Corp/langtest)

## ⚒️ Previous Versions

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_2_0.md b/docs/pages/docs/langtest_versions/release_notes_1_2_0.md

index 6bf11d8f2..bdc9649ad 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_2_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_2_0.md

@@ -33,7 +33,7 @@ modify_date: 2023-08-11

* [LangTest: Documentation](https://langtest.org/docs/pages/docs/install)

* [LangTest: Notebooks](https://langtest.org/docs/pages/tutorials/tutorials)

* [LangTest: Test Types](https://langtest.org/docs/pages/tests/test)

-* [LangTest: GitHub Repo](https://github.com/JohnSnowLabs/langtest)

+* [LangTest: GitHub Repo](https://github.com/Pacific-AI-Corp/langtest)

## ⚒️ Previous Versions

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_4_0.md b/docs/pages/docs/langtest_versions/release_notes_1_4_0.md

index 122d85987..75250cb36 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_4_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_4_0.md

@@ -37,21 +37,21 @@ We added some of the subsets to our library:

4. Casual Judgement

➤ Notebook Links:

-- [BigBench](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/Bigbench_dataset.ipynb)

-- [LogiQA](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/LogiQA_dataset.ipynb)

-- [asdiv](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/ASDiv_dataset.ipynb)

+- [BigBench](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/Bigbench_dataset.ipynb)

+- [LogiQA](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/LogiQA_dataset.ipynb)

+- [asdiv](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/ASDiv_dataset.ipynb)

➤ How the test looks ?

#### LogiQA

-

+

#### ASDiv

-

+

#### BigBench

-

+

@@ -75,7 +75,7 @@ harness = Harness(

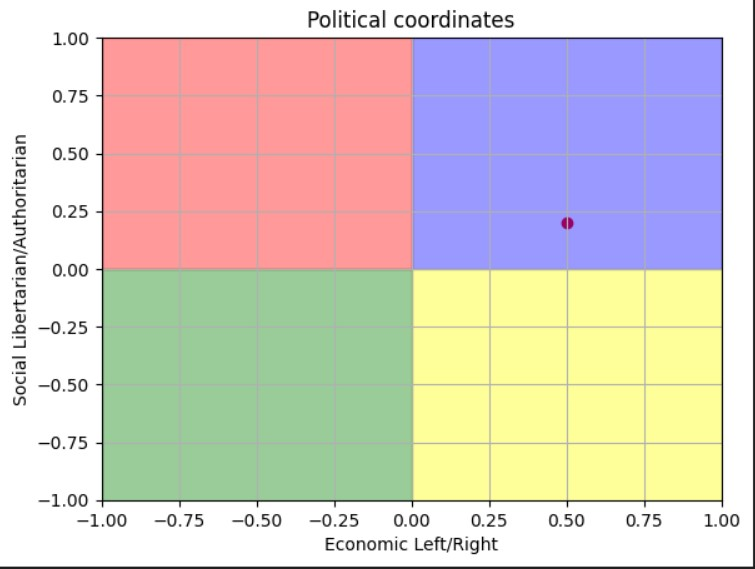

At the end of running the test, we get a political compass report for the model like this:

-

+

The test presents a grid with two axes, typically labeled as follows:

@@ -95,7 +95,7 @@ The primary objective of this test is to assess the model's capability to genera

- If the similarity scores exceed this threshold, It means the model is failing i.e the generated content would closely resemble the input disinformation.

Tutorial Notebook:

-[Disinformation NB](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/Disinformation_Test.ipynb)

+[Disinformation NB](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/Disinformation_Test.ipynb)

#### Usage

```

@@ -110,7 +110,7 @@ harness.generate().run().report()

➤ How the test looks ?

-

+

### Adding support for text generation HF models

@@ -121,11 +121,11 @@ It is intended to add the capability to locally deploy and assess text generatio

#### Usage

You can set the hub parameter to huggingface and choose any model from [HF model hub](https://huggingface.co/models?pipeline_tag=text-generation).

-

+

➤ How the test looks ?

-

+

Tutorial Notebook:

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_5_0.md b/docs/pages/docs/langtest_versions/release_notes_1_5_0.md

index 1af8bbb7f..596ed4ac0 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_5_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_5_0.md

@@ -28,7 +28,7 @@ This test is specifically designed for Hugging Face fill-mask models like BERT,

➤ How the test looks ?

-

+

@@ -41,7 +41,7 @@ The LegalSupport dataset evaluates fine-grained reverse entailment. Each sample

➤ How the test looks ?

-

+

### Adding support for factuality test

@@ -75,11 +75,11 @@ Accuracy is assessed by examining the "pass" column. If "pass" is marked as **Tr

➤ Notebook Link:

-- [Factuality Test](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/Factuality_Test.ipynb)

+- [Factuality Test](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/Factuality_Test.ipynb)

➤ How the test looks ?

-

+

@@ -108,7 +108,7 @@ By following these steps, we can gauge the model's sensitivity to negations and

We have used threshold of (-0.1,0.1) . If the eval_score falls within this threshold range, it indicates that the model is failing to properly handle negations, implying insensitivity to linguistic nuances introduced by negation words.

-

+

### Adding support for legal-summarization test

@@ -121,13 +121,13 @@ We have used threshold of (-0.1,0.1) . If the eval_score falls within this thres

The Multi-LexSum dataset consists of legal case summaries. The aim is for the model to thoroughly examine the given context and, upon understanding its content, produce a concise summary that captures the essential themes and key details.

➤ Notebook Link:

-- [Legal Summarization](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/MultiLexSum_dataset.ipynb)

+- [Legal Summarization](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/MultiLexSum_dataset.ipynb)

➤ How the test looks ?

The default threshold value is 0.50. If the eval_score is higher than threshold, then the "pass" will be as true.

-

+

## 🐛 Bug Fixes

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_6_0.md b/docs/pages/docs/langtest_versions/release_notes_1_6_0.md

index b881b7de3..e5b8a42ad 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_6_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_6_0.md

@@ -34,23 +34,23 @@ A heartfelt thank you to our unwavering community for consistently fueling our j

- [PIQA](https://arxiv.org/abs/1911.11641) - The PIQA dataset is designed to address the challenging task of reasoning about physical commonsense in natural language. It presents a collection of multiple-choice questions in English, where each question involves everyday situations and requires selecting the most appropriate solution from two choices.

➤ Notebook Link:

-- [CommonSenseQA](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/CommonsenseQA_dataset.ipynb)

+- [CommonSenseQA](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/CommonsenseQA_dataset.ipynb)

-- [SIQA](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/SIQA_dataset.ipynb)

+- [SIQA](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/SIQA_dataset.ipynb)

-- [PIQA](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/PIQA_dataset.ipynb)

+- [PIQA](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/dataset-notebooks/PIQA_dataset.ipynb)

➤ How the test looks ?

- CommonsenseQA

-

+

- SIQA

-

+

- PIQA

-

+

### Adding support for toxicity sensitivity

@@ -90,10 +90,10 @@ If the evaluation score is greater than 0, the test result is `False`, indicatin

By following these steps, we can gauge the model's sensitivity to toxic words and assess whether it refrain itself to provide toxic words in the output.

➤ Notebook Link:

-- [Tutorial](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/llm_notebooks/Sensitivity_Test.ipynb)

+- [Tutorial](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/llm_notebooks/Sensitivity_Test.ipynb)

➤ How the test looks ?

-

+

### Adding support for legal-qa datasets (Consumer Contracts, Privacy-Policy, Contracts-QA)

@@ -108,18 +108,18 @@ Adding 3 legal-QA-datasets from the [legalbench ](https://github.com/HazyResearc

➤ Notebook Link:

-- [LegalQA_Datasets](https://github.com/JohnSnowLabs/langtest/blob/release/1.6.0/demo/tutorials/llm_notebooks/dataset-notebooks/LegalQA_Datasets.ipynb)

+- [LegalQA_Datasets](https://github.com/Pacific-AI-Corp/langtest/blob/release/1.6.0/demo/tutorials/llm_notebooks/dataset-notebooks/LegalQA_Datasets.ipynb)

➤ How the test looks ?

#### Consumer Contracts:

-

+

#### Privacy Policy:

-

+

#### Contracts-QA:

-

+

### Adding support for Crows-Pairs common stereotype test

@@ -127,12 +127,12 @@ Adding 3 legal-QA-datasets from the [legalbench ](https://github.com/HazyResearc

This test uses a masked sentence and two tokens to decide whether the model has bias for/against one choice.

➤ Notebook Link:

-- [Crows-Pairs](https://github.com/JohnSnowLabs/langtest/blob/main/demo/tutorials/task-specific-notebooks/Crows_Pairs_Notebook.ipynb)

+- [Crows-Pairs](https://github.com/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/task-specific-notebooks/Crows_Pairs_Notebook.ipynb)

➤ How the test looks ?

-

+

@@ -201,24 +201,24 @@ The evaluation process for synthetic NLP data involves comparing the model's res

➤ Notebook Link:

-- [Sycophancy ](https://github.com/JohnSnowLabs/langtest/blob/aa91cd93dbf30f68af38abe926d66a5bc87d541b/demo/tutorials/llm_notebooks/Sycophancy_test.ipynb)

+- [Sycophancy ](https://github.com/Pacific-AI-Corp/langtest/blob/aa91cd93dbf30f68af38abe926d66a5bc87d541b/demo/tutorials/llm_notebooks/Sycophancy_test.ipynb)

➤ How the test looks ?

#### Synthetic Math Data (Evaluation with Ground Truth)

-

+

#### Synthetic Math Data (Evaluation without Ground Truth)

-

+

#### Synthetic nlp Data (Evaluation with Ground Truth)

-

+

#### Synthetic nlp Data (Evaluation without Ground Truth)

-

+

## 📝 BlogPosts

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_8_0.md b/docs/pages/docs/langtest_versions/release_notes_1_8_0.md

index 5c950f35f..04aead96e 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_8_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_8_0.md

@@ -49,10 +49,10 @@ Our team has published three enlightening blogs on Hugging Face's community plat

----------------

## 🐛 Bug Fixes

-- Fixed templatic augmentations [PR #851](https://github.com/JohnSnowLabs/langtest/pull/851)

-- Resolved a bug in default configurations [PR #880](https://github.com/JohnSnowLabs/langtest/pull/880)

-- Addressed compatibility issues between OpenAI (version 1.1.1) and Langchain [PR #877](https://github.com/JohnSnowLabs/langtest/pull/877)

-- Fixed errors in sycophancy-test, factuality-test, and augmentation [PR #869](https://github.com/JohnSnowLabs/langtest/pull/869)

+- Fixed templatic augmentations [PR #851](https://github.com/Pacific-AI-Corp/langtest/pull/851)

+- Resolved a bug in default configurations [PR #880](https://github.com/Pacific-AI-Corp/langtest/pull/880)

+- Addressed compatibility issues between OpenAI (version 1.1.1) and Langchain [PR #877](https://github.com/Pacific-AI-Corp/langtest/pull/877)

+- Fixed errors in sycophancy-test, factuality-test, and augmentation [PR #869](https://github.com/Pacific-AI-Corp/langtest/pull/869)

## ⚒️ Previous Versions

diff --git a/docs/pages/docs/langtest_versions/release_notes_1_9_0.md b/docs/pages/docs/langtest_versions/release_notes_1_9_0.md

index 00e5e7f07..69d3d7ca0 100644

--- a/docs/pages/docs/langtest_versions/release_notes_1_9_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_1_9_0.md

@@ -59,7 +59,7 @@ trainer = Trainer(..., callbacks=[my_callback])

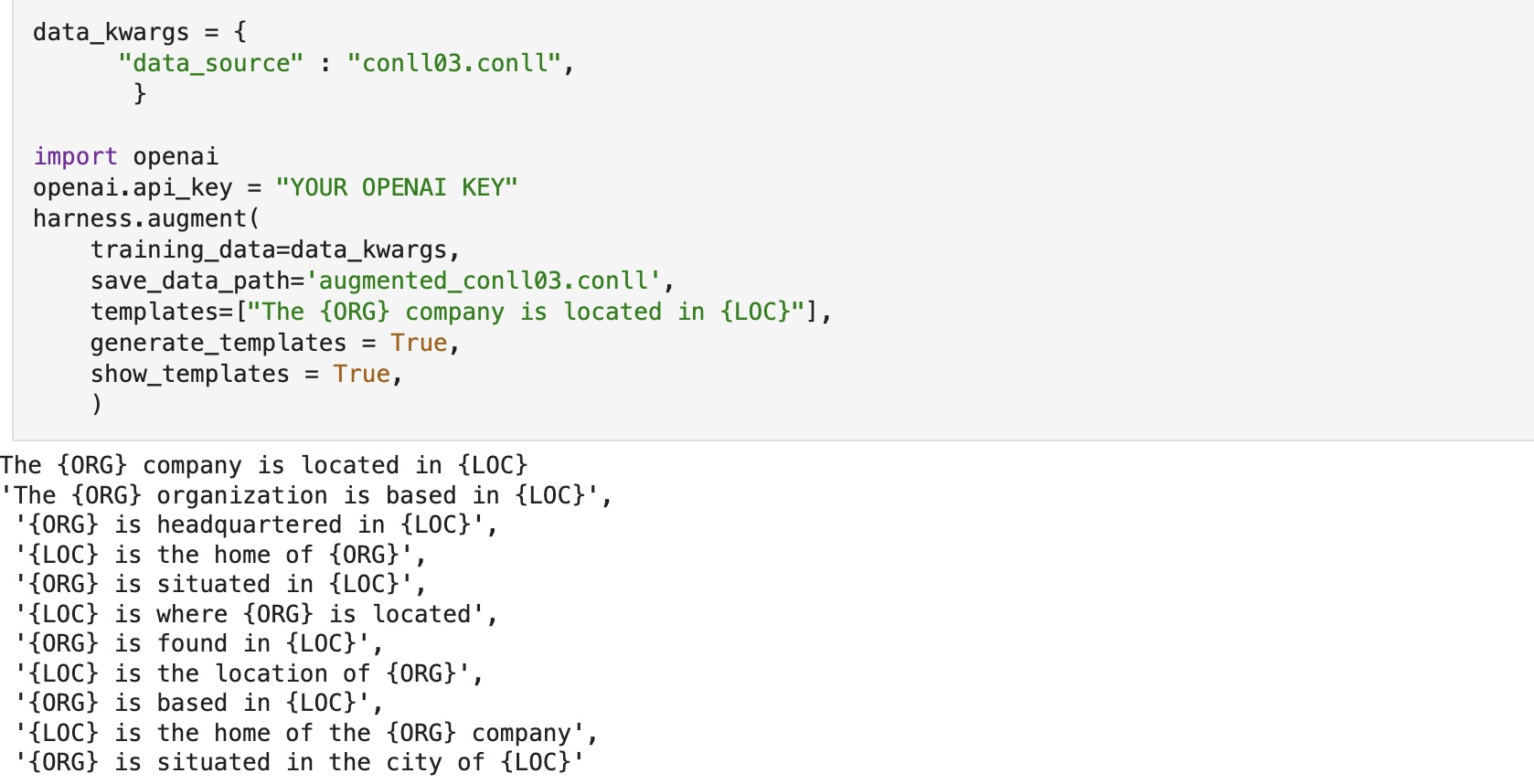

Users can now enable the automatic generation of sample templates by setting generate_templates to True. This feature utilizes the advanced capabilities of LLMs to create structured templates that can be used for templatic augmentation.To ensure quality and relevance, users can review the generated templates by setting show_templates to True.

-

+

### 🚀 Benchmarking Different Models

@@ -76,7 +76,7 @@ We focused on extracting clinical subsets from the MMLU dataset, creating a spec

|------------|-----------|------------------------------------------------------|----------------------------------------------------|------------------|---------------|------|

| robustness | uppercase | Fatty acids are transported into the mitochondria bound to:\nA. thiokinase. B. coenzyme A (CoA). C. acetyl-CoA. D. carnitine. | FATTY ACIDS ARE TRANSPORTED INTO THE MITOCHONDRIA BOUND TO: A. THIOKINASE. B. COENZYME A (COA). C. ACETYL-COA. D. CARNITINE. | D. carnitine. | B. COENZYME A (COA). | False |

-

+

#### OpenBookQA

@@ -93,7 +93,7 @@ each with four answer choices and one correct answer. The questions cover variou

-

+

#### MedMCQA

@@ -106,7 +106,7 @@ The MedMCQA is a large-scale benchmark dataset of Multiple-Choice Question Answe

|------------|-----------|------------------------------------------------------|----------------------------------------------------|------------------|---------------|------|

| robustness | uppercase | Most common site of direct hernia\nA. Hesselbach's triangle\nB. Femoral gland\nC. No site predilection\nD. nan | MOST COMMON SITE OF DIRECT HERNIA A. HESSELBACH'S TRIANGLE B. FEMORAL GLAND C. NO SITE PREDILECTION D. NAN | A | A | True |

-

+

**Dataset info:**

- subset: MedMCQA-Test

- Split: Medicine, Anatomy, Forensic_Medicine, Microbiology, Pathology, Anaesthesia, Pediatrics, Physiology, Biochemistry, Gynaecology_Obstetrics, Skin, Surgery, Radiology

@@ -126,7 +126,7 @@ The MedQA is a benchmark dataset of Multiple choice question answering based on

-

+

## 🚀 Community Contributions:

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_0_0.md b/docs/pages/docs/langtest_versions/release_notes_2_0_0.md

index 48a086295..f676eed4d 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_0_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_0_0.md

@@ -86,7 +86,7 @@ The integration of [LM Studio](https://lmstudio.ai/) with LangTest enables offl

Simply integrate LM Studio with LangTest to unlock offline utilization of Hugging Face quantized models for your NLP testing needs., below is the demo video for help.

-https://github.com/JohnSnowLabs/langtest/assets/101416953/d1f288d4-1d96-4d9c-9db2-4f87a9e69019

+https://github.com/Pacific-AI-Corp/langtest/assets/101416953/d1f288d4-1d96-4d9c-9db2-4f87a9e69019

### 🚀Text Embedding Benchmark Pipelines with CLI (LangTest + LlamaIndex)

[](https://colab.research.google.com/github/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/benchmarks/Benchmarking_Embeddings(Llama_Index%2BLangtest).ipynb)

@@ -240,29 +240,29 @@ harness.generate().run().report()

## What's Changed

-* Fix accuracy and bugs by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/945

-* Lm studio by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/955

-* Remove unused variable and update reference to global_service_context by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/956

-* Display model response for accuracy by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/958

-* Update display import with try_import_lib by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/961

-* Feature/run embedding benchmark pipelines CLI by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/960

-* Fix llm eval for transformers and lm studio and Code Refactoring by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/963

-* Feature/add feature to compare models on different benchmark datasets by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/964

-* Fix/religion bias space issue by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/966

-* Fixes by @RakshitKhajuria in https://github.com/JohnSnowLabs/langtest/pull/967

-* Renaming sub task by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/970

-* Fixes/cli issues by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/972

-* website updates by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/962

-* Feature/Updated_toxicity_Test by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/979

-* Fix/datasets by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/975

-* Fix: CSVDataset and HuggingFaceDataset class by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/976

-* Llm eval in fairness by @Prikshit7766 in https://github.com/JohnSnowLabs/langtest/pull/974

-* Enhancement/sycophancy math by @RakshitKhajuria in https://github.com/JohnSnowLabs/langtest/pull/977

-* Update dependencies in setup.py and pyproject.toml by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/981

-* Chore/final website updates by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/980

-* Release/2.0.0 by @ArshaanNazir in https://github.com/JohnSnowLabs/langtest/pull/983

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/1.10.0...2.0.0

+* Fix accuracy and bugs by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/945

+* Lm studio by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/955

+* Remove unused variable and update reference to global_service_context by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/956

+* Display model response for accuracy by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/958

+* Update display import with try_import_lib by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/961

+* Feature/run embedding benchmark pipelines CLI by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/960

+* Fix llm eval for transformers and lm studio and Code Refactoring by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/963

+* Feature/add feature to compare models on different benchmark datasets by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/964

+* Fix/religion bias space issue by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/966

+* Fixes by @RakshitKhajuria in https://github.com/Pacific-AI-Corp/langtest/pull/967

+* Renaming sub task by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/970

+* Fixes/cli issues by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/972

+* website updates by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/962

+* Feature/Updated_toxicity_Test by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/979

+* Fix/datasets by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/975

+* Fix: CSVDataset and HuggingFaceDataset class by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/976

+* Llm eval in fairness by @Prikshit7766 in https://github.com/Pacific-AI-Corp/langtest/pull/974

+* Enhancement/sycophancy math by @RakshitKhajuria in https://github.com/Pacific-AI-Corp/langtest/pull/977

+* Update dependencies in setup.py and pyproject.toml by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/981

+* Chore/final website updates by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/980

+* Release/2.0.0 by @ArshaanNazir in https://github.com/Pacific-AI-Corp/langtest/pull/983

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/1.10.0...2.0.0

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_1_0.md b/docs/pages/docs/langtest_versions/release_notes_2_1_0.md

index 4663461e7..a4e5a036f 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_1_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_1_0.md

@@ -101,7 +101,7 @@ harness = Harness(

# Generate, Run and get Report

harness.generate().run().report()

```

-

+

### 📂 Streamlined Data Handling and Evaluation

@@ -183,7 +183,7 @@ harness.generate() generates testcases, .run() executes them, and .report() comp

```python

harness.generate().run().report()

```

-

+

### 🖥️ Streamlined Evaluation Workflows with Enhanced CLI Commands

[](https://colab.research.google.com/github/Pacific-AI-Corp/langtest/blob/main/demo/tutorials/benchmarks/Langtest_Cli_Eval_Command.ipynb)

@@ -272,7 +272,7 @@ langtest eval --model \

-c < your configuration file like parameter.json or parameter.yaml>

```

Finally, we can know the leaderboard and rank of the model.

-

+

----

@@ -280,7 +280,7 @@ To visualize the leaderboard anytime using the CLI command

```bash

langtest show-leaderboard

```

-

+

## 📒 New Notebooks

@@ -306,21 +306,21 @@ langtest show-leaderboard

## What's Changed

-* Feautre/integration with web api by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/986

-* Refactor TestFactory class to handle exceptions in async tests by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/990

-* data augmentation support for question-answering task by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/991

-* Updated dependencies by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/992

-* Fix/implement the multiple dataset support for accuracy tests by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/998

-* Feature/add support for other file formats by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/993

-* Bug Fix: Generated results are none by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1000

-* Feature/implement load & save for benchmark reports by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/999

-* Fix/bug fixes langtest 2 1 0 rc1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1003

-* website updates by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1001

-* Fix/bug fixes langtest 2 1 0 rc1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1004

-* Release/2.0.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1005

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.0.0...2.1.0

+* Feautre/integration with web api by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/986

+* Refactor TestFactory class to handle exceptions in async tests by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/990

+* data augmentation support for question-answering task by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/991

+* Updated dependencies by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/992

+* Fix/implement the multiple dataset support for accuracy tests by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/998

+* Feature/add support for other file formats by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/993

+* Bug Fix: Generated results are none by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1000

+* Feature/implement load & save for benchmark reports by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/999

+* Fix/bug fixes langtest 2 1 0 rc1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1003

+* website updates by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1001

+* Fix/bug fixes langtest 2 1 0 rc1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1004

+* Release/2.0.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1005

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.0.0...2.1.0

## ⚒️ Previous Versions

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_2_0.md b/docs/pages/docs/langtest_versions/release_notes_2_2_0.md

index 9391b9b43..29e6f24e6 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_2_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_2_0.md

@@ -93,7 +93,7 @@ harness = Harness(

```python

harness.generate().run().report()

```

-

+

**3**. Similarly, do the same steps for the `google/flan-t5-large` model with the same `save_dir` path for benchmarking and the same `config.yaml`

@@ -101,7 +101,7 @@ harness.generate().run().report()

```python

harness.get_leaderboard()

```

-

+

**Conclusion:**

The Model Ranking & Leaderboard system provides a robust and structured method for evaluating and comparing models across multiple datasets, enabling users to make data-driven decisions and continuously improve model performance.

@@ -182,7 +182,7 @@ harness = Harness(

```python

harness.generate().run().report()

```

-

+

**Conclusion:**

Few-Shot Model Evaluation provides valuable insights into model capabilities with minimal data, allowing for rapid and effective performance optimization. This feature ensures that models can be assessed and improved efficiently, even with limited examples.

@@ -234,10 +234,10 @@ harness = Harness(task="ner",

```python

harness.generate().run().report()

```

-

+

Examples:

-

+

**Conclusion:**

Evaluating NER in LLMs allows for accurate entity extraction and performance assessment using LangTest's comprehensive evaluation methods. This feature ensures thorough and reliable evaluation of LLMs on Named Entity Recognition tasks.

@@ -344,7 +344,7 @@ harness.configure(

```python

harness.generate().run().report()

```

-

+

**Conclusion:**

Multi-dataset prompts in LangTest empower users to efficiently manage and test multiple data sources, resulting in more effective and comprehensive language model evaluations.

@@ -372,23 +372,23 @@ Multi-dataset prompts in LangTest empower users to efficiently manage and test m

- Code Organization and Readability in Augmentation Module [#1025]

## What's Changed

-* User prompt handling for multi-dataset testing by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1010

-* Bug fix/performance tests by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1015

-* NER task support for casuallm models from huggingface, web, and lm-studio by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1009

-* `random_age` Class not returning test cases by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1020

-* Feature/data augmentation allow access without harness testing by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1016

-* Improvements/load and save benchmark report by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1012

-* Refactor: Improved the `import_edited_testcases()` functionality in Harness. by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1022

-* Implementation of prompt techniques by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1018

-* Fix: Summary class to update summary dataframe and handle file path by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1024

-* Refactor: Improve Code Organization and Readability by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1025

-* Improved: `rank_by` argument add to `harness.get_leaderboard()` by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1027

-* website updates by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1023

-* updated: langtest version in pip by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1028

-* Release/2.2.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1029

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.1.0...2.2.0

+* User prompt handling for multi-dataset testing by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1010

+* Bug fix/performance tests by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1015

+* NER task support for casuallm models from huggingface, web, and lm-studio by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1009

+* `random_age` Class not returning test cases by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1020

+* Feature/data augmentation allow access without harness testing by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1016

+* Improvements/load and save benchmark report by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1012

+* Refactor: Improved the `import_edited_testcases()` functionality in Harness. by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1022

+* Implementation of prompt techniques by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1018

+* Fix: Summary class to update summary dataframe and handle file path by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1024

+* Refactor: Improve Code Organization and Readability by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1025

+* Improved: `rank_by` argument add to `harness.get_leaderboard()` by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1027

+* website updates by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1023

+* updated: langtest version in pip by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1028

+* Release/2.2.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1029

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.1.0...2.2.0

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_3_0.md b/docs/pages/docs/langtest_versions/release_notes_2_3_0.md

index 0b350701a..c8a1eff60 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_3_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_3_0.md

@@ -130,7 +130,7 @@ harness = Harness(

```python

harness.generate().run().report()

```

-

+

This enhancement allows for a more efficient and insightful evaluation process, ensuring that models are thoroughly tested and compared across a variety of scenarios.

@@ -194,7 +194,7 @@ harness.configure(

```python

harness.generate().run().report()

```

-

+

This enhancement ensures that medical and pharmaceutical models are evaluated with the highest accuracy and contextual relevance, considering the use of both generic and brand drug names.

@@ -254,7 +254,7 @@ harness.generate().run().report()

```

-

+

This integration ensures that model performance is assessed with a higher degree of accuracy and detail, leveraging the advanced capabilities of the Prometheus model to provide meaningful and actionable insights.

@@ -351,25 +351,25 @@ Significant enhancements to the logging functionalities provide more detailed an

- Adding notebooks and websites changes 2.3.0 [#1063]

## What's Changed

-* chore: update langtest version to 2.2.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1031

-* Enhancements/improve the logging and its functionalities by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1038

-* Refactor/improve the transform module by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1044

-* expand-entity-type-support-in-label-representation-tests by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1042

-* chore: Update GitHub Pages workflow for Jekyll site deployment by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1050

-* Feature/add support for multi model with multi dataset by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1039

-* Add support to the LLM eval class in Accuracy Category. by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1053

-* feat: Add SafetyTestFactory and Misuse class for safety testing by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1040

-* Fix/alignment issues in bias tests for ner task by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1060

-* Feature/integrate prometheus model for enhanced evaluation by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1055

-* chore: update dependencies by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1047

-* Feature/implement the generic to brand drug name swapping tests and vice versa by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1058

-* Fix/bugs from langtest 230rc1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1062

-* Fix/bugs from langtest 230rc2 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1064

-* chore: adding notebooks and websites changes - 2.3.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1063

-* Release/2.3.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1065

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.2.0...2.3.0

+* chore: update langtest version to 2.2.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1031

+* Enhancements/improve the logging and its functionalities by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1038

+* Refactor/improve the transform module by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1044

+* expand-entity-type-support-in-label-representation-tests by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1042

+* chore: Update GitHub Pages workflow for Jekyll site deployment by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1050

+* Feature/add support for multi model with multi dataset by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1039

+* Add support to the LLM eval class in Accuracy Category. by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1053

+* feat: Add SafetyTestFactory and Misuse class for safety testing by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1040

+* Fix/alignment issues in bias tests for ner task by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1060

+* Feature/integrate prometheus model for enhanced evaluation by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1055

+* chore: update dependencies by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1047

+* Feature/implement the generic to brand drug name swapping tests and vice versa by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1058

+* Fix/bugs from langtest 230rc1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1062

+* Fix/bugs from langtest 230rc2 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1064

+* chore: adding notebooks and websites changes - 2.3.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1063

+* Release/2.3.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1065

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.2.0...2.3.0

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_3_1.md b/docs/pages/docs/langtest_versions/release_notes_2_3_1.md

index e6002d1f0..c3aa925d0 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_3_1.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_3_1.md

@@ -42,26 +42,26 @@ In this patch version, we've resolved several critical issues to enhance the fun

- Add JSON Output for NER Sample to Support Generative AI Lab [#1099][#1100]

## What's Changed

-* chore: reapply transformations to NER task after importing test cases by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1076

-* updated the python api documentation with sphinx by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1077

-* Patch/2.3.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1078

-* Bug/ner evaluation fix in is_pass() by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1080

-* resolved: recovering the transformation object. by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1081

-* fixed: consistent issues in augmentation by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1085

-* Chore: Add Option to Configure Number of Generated Templates in Templatic Augmentation by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1089

-* resolved/augmentation errors by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1090

-* Fix/augmentations by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1091

-* Feature/add support for the multi label classification model by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1096

-* Patch/2.3.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1097

-* chore: update pyproject.toml version to 2.3.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1098

-* chore: update DataAugmenter to support generating JSON output in GEN AI LAB by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1100

-* Patch/2.3.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1101

-* implemented: basic version to handling document wise. by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1094

-* Fix/module error with openai package by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1102

-* Patch/2.3.1 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1103

+* chore: reapply transformations to NER task after importing test cases by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1076

+* updated the python api documentation with sphinx by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1077

+* Patch/2.3.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1078

+* Bug/ner evaluation fix in is_pass() by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1080

+* resolved: recovering the transformation object. by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1081

+* fixed: consistent issues in augmentation by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1085

+* Chore: Add Option to Configure Number of Generated Templates in Templatic Augmentation by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1089

+* resolved/augmentation errors by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1090

+* Fix/augmentations by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1091

+* Feature/add support for the multi label classification model by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1096

+* Patch/2.3.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1097

+* chore: update pyproject.toml version to 2.3.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1098

+* chore: update DataAugmenter to support generating JSON output in GEN AI LAB by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1100

+* Patch/2.3.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1101

+* implemented: basic version to handling document wise. by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1094

+* Fix/module error with openai package by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1102

+* Patch/2.3.1 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1103

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.3.0...2.3.1

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.3.0...2.3.1

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/docs/langtest_versions/release_notes_2_4_0.md b/docs/pages/docs/langtest_versions/release_notes_2_4_0.md

index 99de864fd..3e6dd4a10 100644

--- a/docs/pages/docs/langtest_versions/release_notes_2_4_0.md

+++ b/docs/pages/docs/langtest_versions/release_notes_2_4_0.md

@@ -244,19 +244,19 @@ harness.generate().run().report()

- Resolved the Security and Vulnerabilities Issues. [#1112]

## What's Changed

-* Added: implemeted the breaking sentence by newline in robustness. by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1109

-* Feature/implement the addtabs test in robustness category by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1110

-* Fix/error in accuracy tests for multi label classification by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1114

-* Fix/error in accuracy tests for ner task by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1116

-* Update transformers version to 4.44.2 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1112

-* Feature/implement the support for multimodal with new vqa task by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1111

-* Fix/AttributeError in accuracy tests for multi label classification by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1118

-* Refactor fairness test to handle multi-label classification by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1121

-* Feature/enhance safety tests with promptguard by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1119

-* Release/2.4.0 by @chakravarthik27 in https://github.com/JohnSnowLabs/langtest/pull/1122

-

-

-**Full Changelog**: https://github.com/JohnSnowLabs/langtest/compare/2.3.1...2.4.0

+* Added: implemeted the breaking sentence by newline in robustness. by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1109

+* Feature/implement the addtabs test in robustness category by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1110

+* Fix/error in accuracy tests for multi label classification by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1114

+* Fix/error in accuracy tests for ner task by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1116

+* Update transformers version to 4.44.2 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1112

+* Feature/implement the support for multimodal with new vqa task by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1111

+* Fix/AttributeError in accuracy tests for multi label classification by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1118

+* Refactor fairness test to handle multi-label classification by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1121

+* Feature/enhance safety tests with promptguard by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1119

+* Release/2.4.0 by @chakravarthik27 in https://github.com/Pacific-AI-Corp/langtest/pull/1122

+

+

+**Full Changelog**: https://github.com/Pacific-AI-Corp/langtest/compare/2.3.1...2.4.0

{%- include docs-langtest-pagination.html -%}

diff --git a/docs/pages/tests/bias/replace_to_asian_firstnames.md b/docs/pages/tests/bias/replace_to_asian_firstnames.md

index 7d2b40622..78f20d5aa 100644

--- a/docs/pages/tests/bias/replace_to_asian_firstnames.md

+++ b/docs/pages/tests/bias/replace_to_asian_firstnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has as

**alias_name:** `replace_to_asian_firstnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_asian_lastnames.md b/docs/pages/tests/bias/replace_to_asian_lastnames.md

index 9e65d3390..00b3fdb18 100644

--- a/docs/pages/tests/bias/replace_to_asian_lastnames.md

+++ b/docs/pages/tests/bias/replace_to_asian_lastnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has as

**alias_name:** `replace_to_asian_lastnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_black_firstnames.md b/docs/pages/tests/bias/replace_to_black_firstnames.md

index 3369b3f84..a316a8c2b 100644

--- a/docs/pages/tests/bias/replace_to_black_firstnames.md

+++ b/docs/pages/tests/bias/replace_to_black_firstnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has bl

**alias_name:** `replace_to_black_firstnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_black_lastnames.md b/docs/pages/tests/bias/replace_to_black_lastnames.md

index 8f8842cc1..78281a245 100644

--- a/docs/pages/tests/bias/replace_to_black_lastnames.md

+++ b/docs/pages/tests/bias/replace_to_black_lastnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has bl

**alias_name:** `replace_to_black_lastnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_buddhist_names.md b/docs/pages/tests/bias/replace_to_buddhist_names.md

index b39ee3e1b..139c774ef 100644

--- a/docs/pages/tests/bias/replace_to_buddhist_names.md

+++ b/docs/pages/tests/bias/replace_to_buddhist_names.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has Bu

**alias_name:** `replace_to_buddhist_names`

-This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.

+This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_christian_names.md b/docs/pages/tests/bias/replace_to_christian_names.md

index f4209e638..92e2b3f0d 100644

--- a/docs/pages/tests/bias/replace_to_christian_names.md

+++ b/docs/pages/tests/bias/replace_to_christian_names.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has Ch

**alias_name:** `replace_to_christian_names`

-This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.

+This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_female_pronouns.md b/docs/pages/tests/bias/replace_to_female_pronouns.md

index 13a9a3bc9..cf0e164e8 100644

--- a/docs/pages/tests/bias/replace_to_female_pronouns.md

+++ b/docs/pages/tests/bias/replace_to_female_pronouns.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has fe

**alias_name:** `replace_to_female_pronouns`

-This data was curated using publicly available records. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using publicly available records. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_high_income_country.md b/docs/pages/tests/bias/replace_to_high_income_country.md

index 2412218be..6dcaadab2 100644

--- a/docs/pages/tests/bias/replace_to_high_income_country.md

+++ b/docs/pages/tests/bias/replace_to_high_income_country.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has co

**alias_name:** `replace_to_high_income_country`

-This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_hindu_names.md b/docs/pages/tests/bias/replace_to_hindu_names.md

index 7aeca4a1d..77c91d2c5 100644

--- a/docs/pages/tests/bias/replace_to_hindu_names.md

+++ b/docs/pages/tests/bias/replace_to_hindu_names.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has Hi

**alias_name:** `replace_to_hindu_names`

-This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.

+This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_hispanic_firstnames.md b/docs/pages/tests/bias/replace_to_hispanic_firstnames.md

index 9cb86f5fb..fc57432e7 100644

--- a/docs/pages/tests/bias/replace_to_hispanic_firstnames.md

+++ b/docs/pages/tests/bias/replace_to_hispanic_firstnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has hi

**alias_name:** `replace_to_hispanic_firstnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_hispanic_lastnames.md b/docs/pages/tests/bias/replace_to_hispanic_lastnames.md

index 8877fe1d1..b0f1d66a4 100644

--- a/docs/pages/tests/bias/replace_to_hispanic_lastnames.md

+++ b/docs/pages/tests/bias/replace_to_hispanic_lastnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has hi

**alias_name:** `replace_to_hispanic_lastnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_inter_racial_lastnames.md b/docs/pages/tests/bias/replace_to_inter_racial_lastnames.md

index 50fe947cc..6cd3ee319 100644

--- a/docs/pages/tests/bias/replace_to_inter_racial_lastnames.md

+++ b/docs/pages/tests/bias/replace_to_inter_racial_lastnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has in

**alias_name:** `replace_to_inter_racial_lastnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_jain_names.md b/docs/pages/tests/bias/replace_to_jain_names.md

index a2d222318..6e24cf758 100644

--- a/docs/pages/tests/bias/replace_to_jain_names.md

+++ b/docs/pages/tests/bias/replace_to_jain_names.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has Ja

**alias_name:** `replace_to_jain_names`

-This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.

+This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_low_income_country.md b/docs/pages/tests/bias/replace_to_low_income_country.md

index 5d81cb834..31775998d 100644

--- a/docs/pages/tests/bias/replace_to_low_income_country.md

+++ b/docs/pages/tests/bias/replace_to_low_income_country.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has co

**alias_name:** `replace_to_low_income_country`

-This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_lower_middle_income_country.md b/docs/pages/tests/bias/replace_to_lower_middle_income_country.md

index 4a17adc69..04c76e0d3 100644

--- a/docs/pages/tests/bias/replace_to_lower_middle_income_country.md

+++ b/docs/pages/tests/bias/replace_to_lower_middle_income_country.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has co

**alias_name:** `replace_to_lower_middle_income_country`

-This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using World Bank data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_male_pronouns.md b/docs/pages/tests/bias/replace_to_male_pronouns.md

index 0aa9ac03e..41ce1646a 100644

--- a/docs/pages/tests/bias/replace_to_male_pronouns.md

+++ b/docs/pages/tests/bias/replace_to_male_pronouns.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has ma

**alias_name:** `replace_to_male_pronouns`

-This data was curated using publicly available records. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using publicly available records. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_muslim_names.md b/docs/pages/tests/bias/replace_to_muslim_names.md

index b30fa085d..cf8764d13 100644

--- a/docs/pages/tests/bias/replace_to_muslim_names.md

+++ b/docs/pages/tests/bias/replace_to_muslim_names.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has Mu

**alias_name:** `replace_to_muslim_names`

-This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.

+This data was curated using [Kidpaw](https://www.kidpaw.com/). Please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py) to fit your use-case.To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.

diff --git a/docs/pages/tests/bias/replace_to_native_american_lastnames.md b/docs/pages/tests/bias/replace_to_native_american_lastnames.md

index 5b6326fbf..418d4bd56 100644

--- a/docs/pages/tests/bias/replace_to_native_american_lastnames.md

+++ b/docs/pages/tests/bias/replace_to_native_american_lastnames.md

@@ -8,7 +8,7 @@ This test checks if the NLP model can handle input text if the input text has na

**alias_name:** `replace_to_native_american_lastnames`

-This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/JohnSnowLabs/langtest/blob/main/langtest/transform/constants.py).

+This data was curated using 2021 US census survey data. To apply this test appropriately in other contexts, please adapt the [data dictionaries](https://github.com/Pacific-AI-Corp/langtest/blob/main/langtest/transform/constants.py).To test QA models, we need to use the model itself or other ML model for evaluation, which can make mistakes.