For Maintainers

This project will likely outlive many iterations of lead maintainers. I'd like this project, and the committee, to adopt the best lessons from its predecessors and peers: past HKN practices, OCF practices, and the wider open-source community and its trials.

I will avoid specifying code-of-conduct practices, for now, trusting future maintainers to avoid conflict when possible and to invest in new developers over short-term progress.

- Have a Project Manager oversee the operation and logistics of the CompServ committee

- This will be someone who has both the experience and the time to jumpstart ideas and to help other committees with immediate issues

- This person will run the meetings so that members can communicate what has been done and what still needs to be done

- At each committee's discretion, leadership roles may rotate among members, and a backup can be designated as well

Technical standards hold back technical debt: the time we save on code now, we repay later in time spent debugging and refactoring.

We can enforce this at various levels:

- Style: PEP8

- Coding: linters (pylint, pycodestyle, pydocstyle), tests (pytest, tox)

- Commit: pre-commit

- Review: pull request reviews, automated builds (Travis CI), automated analyses (LGTM)

At every level we should strive for the following (among others I've forgotten):

- Readability: can future maintainers (or you in the near future) understand this? Or will they miss crucial context?

- Efficiency: is this time / space efficient (algorithmic, db I/Os, latency)?

- Usability: is the site easy to use for each kind of user (admins, officers, candidates, students, faculty)?

- Accessibility: can all users use our site? (vision-impaired, motor-impaired, limited bandwidth, etc.)

- Security: can our data be compromised? Are we vulnerable to DoS?

- Aesthetics: will people enjoy using our site? Does our UI support usability?

Signing commits with GPG keys gives us a stronger guarantee that code was written by our contributors, and not by bad actors looking to compromise the site. This should be done for any commit that alters the blackbox-encrypted secrets.py file, on which the runtime security of hknweb rests.

Have all code pass review.

There are rare exceptions to this (time-sensitive features that absolutely cannot wait 24 hours), but otherwise all code should get a second look. Even flawless, tested code should have its tradeoffs documented: given our limited demand, is efficient code justified if it adds complexity?

Historical figures of ~500 visitors / day and ~100 officers + candidates / semester are useful for this analysis.

This includes Travis CI tests: if your change broke the build, you should probably fix that first.
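To put our "limited demand" in numbers, here is a back-of-the-envelope sketch using the historical figures above. The requests-per-visit multiplier and the assumption that traffic is spread evenly over the day are hypothetical, chosen only for illustration; real traffic is burstier than this average.

```python
# Back-of-the-envelope load estimate for hknweb.
# VISITORS_PER_DAY and MEMBERS_PER_SEMESTER are the historical figures quoted above;
# REQUESTS_PER_VISIT is a hypothetical assumption for illustration only.
VISITORS_PER_DAY = 500
MEMBERS_PER_SEMESTER = 100      # officers + candidates
REQUESTS_PER_VISIT = 10         # assumed, not measured

SECONDS_PER_DAY = 24 * 60 * 60


def average_requests_per_second(visitors_per_day: int, requests_per_visit: int) -> float:
    """Average request rate, assuming traffic is spread evenly over the day."""
    return visitors_per_day * requests_per_visit / SECONDS_PER_DAY


def quadratic_steps(n: int) -> int:
    """Work done by a naive O(n^2) loop, e.g. comparing every pair of n members."""
    return n * n


rate = average_requests_per_second(VISITORS_PER_DAY, REQUESTS_PER_VISIT)
print(f"~{rate:.3f} requests/second on average")  # ~0.058 requests/second on average
print(f"O(n^2) over {MEMBERS_PER_SEMESTER} members: {quadratic_steps(MEMBERS_PER_SEMESTER)} steps")
# O(n^2) over 100 members: 10000 steps
```

Even with a generous multiplier, the average stays well under one request per second, and a naive quadratic loop over a semester's officers and candidates is about 10,000 steps. At this scale, readable code usually beats clever code.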

Critique the code, not the contributor.

High-quality code is good. Long-term contributions are better.

HKN, by its nature, has a high turnover rate, and CompServ has always been small. Encouraging long-term contributions should be our first priority, ahead of high-quality code. Allow good, but flawed, code to be merged, and teach people to write better code.

Code can be fixed later, but only if someone is around to fix it.

Don't:

- Insult code

- Insult people

- Reject without justification

Do:

- Offer alternative implementations / strategies

- Link to specific documentation (e.g. the Django API documentation for the relevant feature)

- Phrase comments as suggestions, with justifications

- Assume basic competency (we are students at Berkeley)

- Act in good faith (unless otherwise proven beyond a reasonable doubt)

- Implement a bot user like bors, which tests each pull request merged onto the current master (not in isolation) before advancing master, to guarantee master never breaks the build. See "Not Rocket Science" by Graydon Hoare for the rationale.

I've taken a few (slightly controversial) positions on what directions to take:

No heavy front-end frameworks: Angular, React, Vue, ...

These frameworks are known for their expressive power and feature set, especially for building single-page applications that update the current page in place instead of loading new pages (Facebook, Instagram, etc.).

I've opted not to use these because of their:

- Steep learning curve: given most contributors are only present for one semester, expecting them to learn an entire framework and implement features, on top of coursework, is a high barrier to entry

- Non-necessity: nearly all of our site's functionality can be done with server-side rendering

- Code complexity: our site doesn't yet have the machinery to run front-end frameworks (no JS bundling, no JS package management). Adding it is a significant marginal cost.

- Page load speed: server-side rendering is generally faster for the initial page load, since the browser receives ready-to-display HTML instead of downloading and running JS first.

Some exceptional cases, like the course map, may benefit from these frameworks. Of the three, I'd opt for Vue if push came to shove, due to its simplicity and support for incremental adoption.

Tachyons CSS

Tachyons is a CSS library following an Atomic CSS philosophy, using small, reusable CSS classes instead of defining CSS for each element type on a page.

As a style, it falls somewhere between inline CSS and .css files, and allows for localized CSS changes (rather than cascading changes). Traditional CSS, I've noticed, has led to spooky action-at-a-distance CSS effects, whose source can't be easily tracked down:

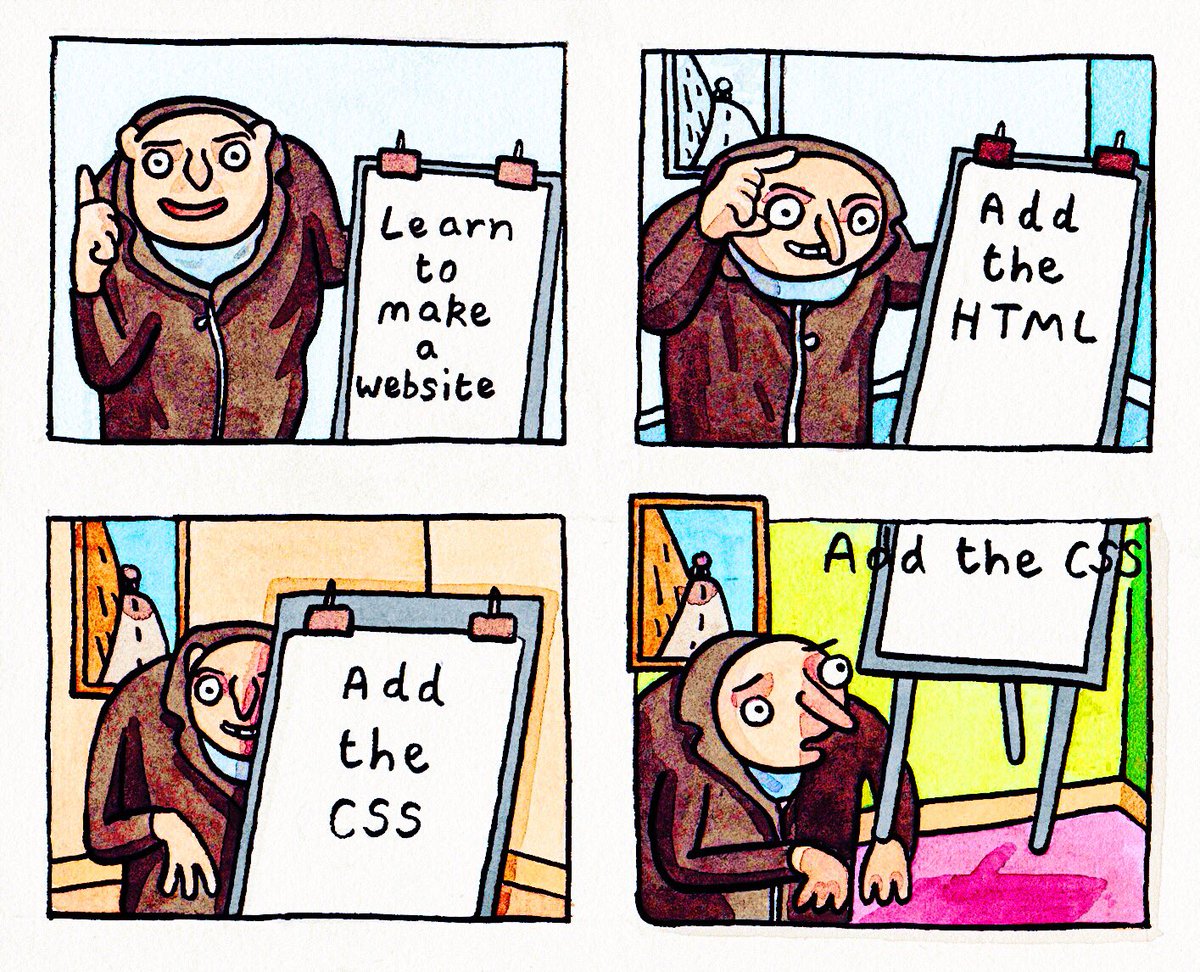

gru makes a website

shitty watercolour (@SWatercolour), March 18, 2018

The style names are exotic at first (class="pv2" for "padding vertical scale 2"), but this approach allows for smaller CSS files, more targeted names, and simpler mobile responsive support (class="pv2 pv3-ns" for a larger vertical padding on not-small screens).

And if Tachyons falls short, traditional CSS is still an option, and existing CSS can be converted to Tachyons incrementally.
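To make the naming scheme described above concrete, here is a small, hypothetical Python helper that spells out the CSS behind a few Tachyons padding classes. The spacing scale and the "-ns" breakpoint are Tachyons' defaults as we understand them, so check the Tachyons documentation before relying on exact values. Tachyons itself is plain CSS, so this is only an illustration and not something the site would run.

```python
import re
from typing import Dict, List

# Hypothetical helper: expand a few Tachyons padding classes into the CSS they stand for.
# SPACING_SCALE and the "-ns" media query are Tachyons' defaults as we understand them.
SPACING_SCALE = {"0": "0", "1": ".25rem", "2": ".5rem", "3": "1rem",
                 "4": "2rem", "5": "4rem", "6": "8rem", "7": "16rem"}
SIDES = {"a": ["top", "right", "bottom", "left"],  # all sides
         "v": ["top", "bottom"],                    # vertical
         "h": ["left", "right"],                    # horizontal
         "t": ["top"], "r": ["right"], "b": ["bottom"], "l": ["left"]}
MEDIA = {"": "", "ns": "screen and (min-width: 30em)"}  # "" = all screens, "ns" = not-small

PADDING_CLASS = re.compile(r"^p([avhtrbl])([0-7])(?:-(ns))?$")


def expand(class_attr: str) -> Dict[str, List[str]]:
    """Group the CSS declarations implied by padding classes by media query."""
    rules: Dict[str, List[str]] = {}
    for cls in class_attr.split():
        match = PADDING_CLASS.match(cls)
        if match is None:
            continue  # only padding classes are handled in this sketch
        side, step, suffix = match.group(1), match.group(2), match.group(3) or ""
        for s in SIDES[side]:
            rules.setdefault(MEDIA[suffix], []).append(f"padding-{s}: {SPACING_SCALE[step]}")
    return rules


rules = expand("pv2 pv3-ns")
print(rules[""])                              # ['padding-top: .5rem', 'padding-bottom: .5rem']
print(rules["screen and (min-width: 30em)"])  # ['padding-top: 1rem', 'padding-bottom: 1rem']
```

Each class maps to exactly one small, predictable rule. This is why a change to one element's classes cannot ripple out to unrelated parts of the page.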

No jQuery

The recurring joke is that jQuery can do everything but is also the heaviest object in the universe, while "Vanilla JS" does everything jQuery does at the cost of 0 KB.

jQuery is an (old) JS library designed to make DOM manipulation easier: getting the page element with id 'nav-bar' is $('#nav-bar') (the vanilla equivalent is document.querySelector('#nav-bar')). It used to be known for its large file size (88 KB) and relative runtime slowness, but with modern computers and 4G internet this is largely a moot point. So why get rid of it at all?

- Page download speed: not everyone has 4G internet, and campus AirBears isn't exactly a fast WiFi network. On slow network speeds, page size still matters, and an extra jQuery GET is significant.

- Fewer dependencies: doing everything with standard JS means relying on standard JS documentation (e.g. MDN). It works across modern browsers with minimal hackery.

- Learning fundamentals: learning to manipulate the DOM without abstraction is reusable across all future frontend frameworks: React, Vue, and whatever happens next.
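To show in numbers why page size still matters, here is a rough calculation of how long the quoted 88 KB takes to download at a few link speeds. The speeds are illustrative assumptions, not measurements of AirBears. The model also ignores latency, compression, and browser caching, all of which matter in practice.

```python
# Rough download time for one extra file, ignoring latency, TCP slow start,
# compression, and caching. Link speeds are hypothetical examples, not measurements.
JQUERY_KB = 88  # size quoted above

LINK_SPEEDS_MBPS = {"slow mobile": 0.4, "congested WiFi": 2.0, "good 4G": 20.0}


def transfer_seconds(size_kb: float, mbps: float) -> float:
    """Seconds to send size_kb kilobytes (1 KB = 1000 bytes) over a link of mbps megabits/s."""
    bits = size_kb * 1000 * 8
    return bits / (mbps * 1_000_000)


for label, mbps in LINK_SPEEDS_MBPS.items():
    print(f"{label} ({mbps} Mbps): {transfer_seconds(JQUERY_KB, mbps):.2f} s")
# slow mobile (0.4 Mbps): 1.76 s
# congested WiFi (2.0 Mbps): 0.35 s
# good 4G (20.0 Mbps): 0.04 s
```

On a fast connection the cost is negligible. On a slow one, the first download of an extra library can be a noticeable delay.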

References

- Peter Hintjens, "Why Optimistic Merging Works Better"

- Graydon Hoare, "Not Rocket Science"

- All-Contributors
