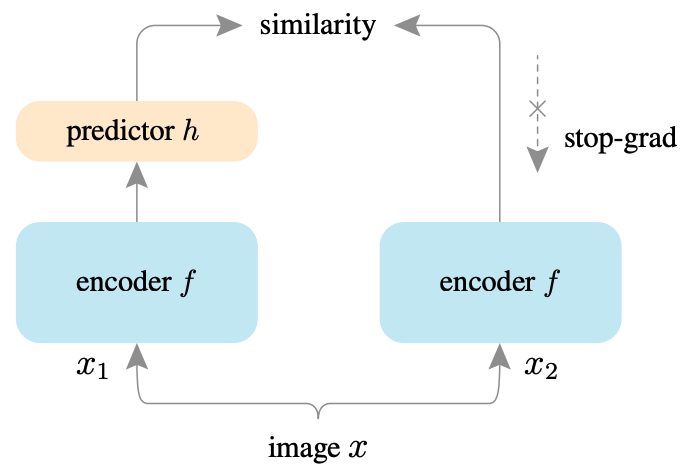

21CVPR#Exploring Simple Siamese Representation Learning #24

TEN QUESTIONS

1. What is the problem addressed in the paper?
2. Is this a new problem?
3. What is the scientific hypothesis that the paper is trying to verify?
4. What are the key related works and who are the key people working on this topic?
5. What is the key to the proposed solution in the paper?
6. How are the experiments designed?
7. What datasets are built/used for the quantitative evaluation? Is the code open-sourced?
8. Is the scientific hypothesis well supported by evidence in the experiments?
9. What are the contributions of the paper?
10. What should/could be done next?

Paper
