- 2013: Information fusion in navigation systems via factor graph based incremental smoothing

- 2018: Laser-visual-inertial Odometry and Mapping with High Robustness and Low Drift

- 2020: LIO-SAM: Tightly-coupled Lidar Inertial Odometry via Smoothing and Mapping

source: hal

| Markov Chain | Factor Graph |
|---|---|
|  |  |

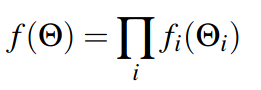

- The maximum a posteriori (MAP) estimate is given by x* = argmax_x p(x|z).

- x: all the states we want to estimate

- z: all the sensor measurements
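As a minimal sketch of the MAP definition above, the example below finds argmax_x p(x|z) on a grid for a one-dimensional state. The Gaussian prior, the single measurement `z`, and all numeric values are assumptions chosen for illustration; they do not come from the papers listed above.

```python
import numpy as np

# Hypothetical 1-D setup: Gaussian prior on the state x and one noisy measurement z.
prior_mean, prior_std = 0.0, 2.0   # assumed prior p(x)
z, meas_std = 1.5, 1.0             # assumed measurement and its noise level

# Evaluate the unnormalized log-posterior log p(z|x) + log p(x) on a grid.
x_grid = np.linspace(-10.0, 10.0, 20001)
log_prior = -0.5 * ((x_grid - prior_mean) / prior_std) ** 2
log_likelihood = -0.5 * ((z - x_grid) / meas_std) ** 2
log_posterior = log_prior + log_likelihood

# MAP estimate: the grid point that maximizes the posterior.
x_map = x_grid[np.argmax(log_posterior)]

# Closed form for comparison (precision-weighted average, Gaussian case only).
w_prior, w_meas = 1.0 / prior_std**2, 1.0 / meas_std**2
x_closed = (w_prior * prior_mean + w_meas * z) / (w_prior + w_meas)

print(x_map, x_closed)
```

The normalizing constant p(z) is dropped because it does not depend on x and so does not change the argmax. A grid search only works for very small state spaces; it is used here purely to make the definition concrete.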

Σ can come from VAE.

Let Y be N(μ, σ²), the normal distribution with parameters μ and σ².

- Probability for a distribution is associated with the area under the curve for a particular range of values.

- If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, and the third standardized moment is the skewness.

- Probability is the chance, often expressed as a percentage, that a success occurs.

- Likelihood is the conditional probability of an observed event (a set of successes), given the probability that a single success occurs.

- Marginalization is a method that sums over the possible values of one variable to determine the marginal contribution of another.

- Marginalization is the process of summing out a variable X that has a joint distribution with other variables such as Y, Z, and so on. Considering three random variables, we can mathematically express it as P(Y, Z) = sum over x (P(X = x, Y, Z)).
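Summing out a variable corresponds to summing an array of joint probabilities along one axis. The sketch below assumes three binary variables and a made-up joint table (the numbers are illustrative only), with axis 0 standing for X, axis 1 for Y, and axis 2 for Z.

```python
import numpy as np

# Hypothetical joint distribution P(X, Y, Z) over three binary variables.
# Axis 0 indexes X, axis 1 indexes Y, axis 2 indexes Z.
joint = np.array([
    [[0.10, 0.05], [0.15, 0.10]],   # X = 0
    [[0.20, 0.10], [0.05, 0.25]],   # X = 1
])
assert np.isclose(joint.sum(), 1.0)  # a valid joint distribution sums to 1

# Marginalize out X: P(Y, Z) = sum over x of P(X = x, Y, Z).
p_yz = joint.sum(axis=0)

# Marginalize out Y and Z: P(X) = sum over y and z of P(X, Y = y, Z = z).
p_x = joint.sum(axis=(1, 2))

print(p_yz)
print(p_x)
```

Each marginal is itself a distribution, so `p_yz` and `p_x` both sum to 1.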

Expected Value, Variance, Covariance (NumPy)

In probability, the average value of a random variable X is called the expected value or the expectation. The terms mean, average, and expected value are often used interchangeably.

- E[X] = sum(x1 * p1, x2 * p2, x3 * p3, ..., xn * pn)

- mu = sum(x1, x2, x3, ..., xn) * 1/n (when all n values are equally likely)

- mu = sum(x * P(x))
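The formulas above can be sketched in NumPy as follows. The die values, the sample, and the biased probabilities are assumptions made up for the example.

```python
import numpy as np

# Expected value of a fair six-sided die: E[X] = sum(x * P(x)).
values = np.array([1, 2, 3, 4, 5, 6])
probs = np.full(6, 1 / 6)
expected_fair = np.sum(values * probs)

# With equally likely observations, the expected value reduces to the arithmetic mean.
sample = np.array([2, 4, 4, 5, 7, 8])
sample_mean = sample.mean()

# np.average with weights computes the same probability-weighted sum.
biased_probs = np.array([0.1, 0.1, 0.1, 0.1, 0.1, 0.5])  # hypothetical loaded die
expected_biased = np.average(values, weights=biased_probs)

print(expected_fair, sample_mean, expected_biased)
```

`np.average` divides by the sum of the weights, so it matches `sum(x * P(x))` exactly only when the weights already sum to 1.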

In probability, the variance of a random variable X measures how much the values in the distribution vary on average with respect to the mean.

- Var[X] = E[(X - E[X])^2]

- Var[X] = sum(p(x1) * (x1 - E[X])^2, p(x2) * (x2 - E[X])^2, ..., p(xn) * (xn - E[X])^2)

- sigma^2 = sum from i = 1 to n ((xi - mu)^2) * 1 / (n - 1) (sample variance; dividing by n - 1 instead of n corrects for bias)

- cov(X, Y) = E[(X - E[X]) * (Y - E[Y])]

- cov(X, Y) = sum((x - E[X]) * (y - E[Y])) * 1/n (population covariance)

- cov(X, Y) = sum((x - E[X]) * (y - E[Y])) * 1/(n - 1) (sample covariance)
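A short NumPy sketch of the variance and covariance formulas above, showing the difference between dividing by n and by n - 1. The arrays `x` and `y` are made-up data for illustration.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.0, 4.0, 5.0, 4.0, 5.0])
n = len(x)

# Variance: np.var divides by n by default; ddof=1 divides by n - 1.
var_population = np.var(x)
var_sample = np.var(x, ddof=1)

# Covariance computed directly from the definitions.
products = (x - x.mean()) * (y - y.mean())
cov_population = products.sum() / n
cov_sample = products.sum() / (n - 1)

# np.cov returns the full covariance matrix and divides by n - 1 by default;
# bias=True switches to dividing by n.
cov_matrix_sample = np.cov(x, y)
cov_matrix_population = np.cov(x, y, bias=True)

print(var_population, var_sample)
print(cov_population, cov_sample)
print(cov_matrix_sample)
```

Note that the defaults differ: `np.var` uses the population form (divide by n), while `np.cov` uses the sample form (divide by n - 1). The diagonal of the matrix returned by `np.cov` holds the variances of `x` and `y`, and the off-diagonal entries hold cov(X, Y).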