--qos=course | For all course-related jobs | 72 | Course users only |

+

+Example:

+```bash

+#SBATCH --qos=course

+```

+

+## Course Directory

+

+Each student is assigned a dedicated **course directory**, and **all work must be done inside it**, including job scripts and outputs.

+

+Directory Format

+```bash

+/course/

+

+- ### [Open OnDemand on Wulver](6_intro_to_OnDemand.md)

+

+ ---

+

+ This webinar will introduce NJIT’s Open OnDemand portal, a browser-based gateway to the Wulver cluster and shared storage. With a focus on streamlining your HPC workflows, you will explore common scenarios and tasks through interactive demos. You will gain a detailed understanding of how to manage your files on the cluster, run interactive applications like Jupyter Notebook and RStudio, launch a full Linux desktop environment in your browser, and submit and monitor SLURM jobs. Additionally, you'll learn how to track resource usage and optimize your job performance for efficient computing on the Wulver cluster.

+

+ #### Key Highlights:

+ * Explore and manage your files on the cluster

+ * Run interactive tools like Jupyter Notebook and RStudio

+ * Launch a full Linux desktop environment in your browser

+ * Submit and monitor SLURM jobs

+ * Track resource usage and optimize job performance

+

+

+

+ [ Download Slides](../../assets/slides/Open_OnDemand_on_Wulver.pdf)

+

+

+

+

+

+- ### [Introduction to Linux](4_intro_to_linux.md)

+

+ ---

+

+ This is the fourth webinar of the 2025 Spring semester, introducing the basics of the Linux operating system. This session is designed to help new users become familiar with Linux, an essential skill for working in High-Performance Computing (HPC) environments.

+

+ #### Key Highlights:

+ * Basics of the Linux operating system

+ * Common commands and file system navigation

+ * Managing files, directories, and permissions

+ * Introduction to shell scripting for automation

+ * Connecting to remote systems and working with HPC cluster

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Linux.pdf)

+

+

+

+

+

+- ### [Python and Conda Environments in HPC: From Basics to Best Practices](3_conda_training.md)

+

+ ---

+

+ This is the third webinar of the 2025 Spring semester, focusing on an introductory understanding of using Python for HPC and effectively managing their Python environments using [Conda](conda.md)

+

+ #### Key Highlights:

+ * Learn how to manage Python environments using Conda.

+ * How to create Conda environments in different locations and install Python packages.

+ * Become familiar with common tools and libraries for scientific computing in Python.

+ * Import Conda environment to a different location.

+

+

+

+ [ Download Slides](../../assets/slides/Conda_training_Feb26.pdf)

+

+

+

+

+

+- ### [Introduction to Wulver: Accessing System & Running Jobs](2_intro_to_Wulver_II.md)

+

+ ---

+

+ This is the second webinar of the 2025 Spring semester, focusing on job submission, monitoring, and management on Wulver. This webinar also provides common tips for troubleshooting issues that users may encounter during job submission.

+

+ #### Key Highlights:

+ * How to Access HPC Software

+ * Introduction to SLURM and Its Role in HPC Environments

+ * Manage Slurm Jobs

+ * Troubleshooting Common Issues

+ * Slurm Interactive Jobs and Use GUI Apps

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Wulver_II_01_29_2025.pdf)

+

+

+

+

+

+- ### [Introduction to Wulver: Getting Started](1_intro_to_Wulver_I.md)

+

+ ---

+

+ This is the first webinar of the 2025 Spring semester introducing the NJIT HPC environment. This webinar provides basic information about our new High-Performance Computing (HPC) research cluster, [Wulver](wulver.md).

+

+ #### Key Highlights:

+ * Introduction to HPC (High Performance Computing)

+ * Hardware and architecture of Wulver

+ * Guidance on how to obtain an account and login to the cluster

+ * Data Storage systems

+ * Understanding allocations to utilize the shared resources

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Wulver_I_01_22_2025.pdf)

+

+

+

+## 2024 Fall

+

+

+- ### [Job Arrays and Advanced Submission Techniques for HPC](3_slurm_advanced.md)

+

+ ---

+

+ This is the final in a series of three webinars in the fall semester. designed to introduce researchers, scientists, and HPC users to the fundamentals of the containers. This session aims to provide useful information on submitting SLURM jobs efficiently by covering job arrays, job dependencies, checkpointing, and addressing common SLURM job issues.

+

+ #### Key Highlights:

+ * Understanding the concept and benefits of job arrays

+ * Syntax for submitting and managing job arrays

+ * Best practices for efficient array job design

+ * Dependency chains and complex workflows

+ * Resource optimization strategies

+ * Using SLURM's advanced options for improved job control

+ * Checkpointing the jobs and use of 3rd party checkpointing tool

+

+

+

+ [ Download Slides](../../assets/slides/HPC_Advanced_SLURM_11-20-2024.pdf)

+

+

+

+

+

+- ### [Introduction to Containers on Wulver](2_containers.md)

+

+ ---

+

+ This is the second in a series of three webinars in the fall semester, designed to introduce researchers, scientists, and HPC users to the fundamentals of the containers. Attendees will learn the fundamentals of Singularity, including installation, basic commands, and workflow, as well as how to create and build containers using definition files and existing Docker images. The training will cover executing containerized applications on HPC clusters and integrating with job schedulers like SLURM, while also addressing security considerations and performance optimization techniques.

+

+ #### Key Highlights:

+ * Introduction to containers and its role in HPC environments

+ * Fundamentals of Singularity, including installation, basic commands, and workflow

+ * Create and build containers using definition files and existing Docker images

+ * How to execute containerized applications on HPC clusters

+ * Use Containers via SLURM

+ * Performance optimization techniques

+

+

+

+

+ [ Download Slides](../../assets/slides/container_HPC_10-16-2024.pdf)

+

+

+

+

+

+- ### [SLURM Batch System Basics](1_slurm.md)

+

+ ---

+

+ This is the first in a series of three webinars in the fall semester. designed to introduce researchers, scientists, and HPC users to the fundamentals of the SLURM (Simple Linux Utility for Resource Management) workload manager. This virtual session will equip you with essential skills to effectively utilize HPC resources through SLURM.

+

+ #### Key Highlights:

+ * Introduction to SLURM and its role in HPC environments

+ * Basic SLURM commands for job submission, monitoring, and management

+ * How to write effective job scripts for various application types

+ * Understanding SLURM partitions, quality of service, and job priorities

+ * Best practices for resource requests and job optimization

+ * Troubleshooting common issues in job submission and execution

+

+

+

+

+ [ Download Slides](../../assets/slides/NJIT_HPC_Seminar-SLURM.pdf)

+

+

+

+## 2024 Spring

+ Since [Wulver](wulver.md) is quite different from the older cluster [Lochness](lochness.md), the HPC training programs are designed to guide both new and existing users on how to use the new cluster. The following trainings provide the basic information on

+

+ * Introduction to HPC

+ * Performance Optimization

+ * Job Submission and Management

+ * Managing Conda Environment

+

+If you still have any questions on HPC usage, please contact the [HPC Facilitator](contact.md).

+

+

+

+

+- ### Getting Started on Wulver: Session I

+

+ ---

+

+ This is the first in a series of three webinars introducing the NJIT HPC environment. This webinar provided the basic information in learning more about our new High Performance Computing (HPC) research cluster, [Wulver](wulver.md).

+

+ #### Key Highlights:

+ * HPC concepts

+ * Hardware and architecture of the Wulver cluster

+ * Guidance on how to obtain an account and receive an allocation to utilize the shared resources.

+

+

+

+ [ Download Slides](../../assets/slides/NJIT_HPC_Seminar-Part-I.pdf)

+

+

+

+

+- ### Getting Started on Wulver: Session II

+

+ ---

+

+ This session offered an overview of the environment on the Wulver cluster, including file management, working with the batch system (SLURM), and accessing software.

+ #### Key Highlights:

+ * HPC allocations

+ * Using [SLURM](../../Running_jobs/index.md)

+ * Job submissions

+

+

+

+ [ Download Slides](../../assets/slides/NJIT_HPC_Seminar-Part-II.pdf)

+

+

+- ### Introduction to Python and Conda

+

+ ---

+ Participants will gain an introductory understanding of using Python for HPC and effectively managing their Python environments using [Conda](conda.md). This knowledge will empower them to leverage the power of Python for their scientific computing needs on HPC systems.

+

+ #### Key Highlights:

+ * Learn how to manage Python environments for HPC using Conda.

+ * Become familiar with common tools and libraries for scientific computing in Python.

+

+

+

+ [ Download Slides](../../assets/slides/intro-to-Python-and-Conda.pdf)

+

+

+

+

diff --git a/docs/HPC_Events_and_Workshops/Workshop_and_Training_Videos/index.md b/docs/HPC_Events_and_Workshops/Workshop_and_Training_Videos/index.md

new file mode 100644

index 000000000..9f56cd2be

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/Workshop_and_Training_Videos/index.md

@@ -0,0 +1,116 @@

+# HPC Education and Training

+

+NJIT HPC provides practical training in high performance computing for students and researchers at various levels of expertise. HPC training for research professionals aims to enhance their capabilities in utilizing high-performance computing, data-intensive computing, and data analytics within their respective research fields.

+

+

+## 2025 Fall

+

+

+- ### [Intro to Wulver: Resources & HPC](../archived/index.md)

+

+ ---

+

+ This webinar provides essential information about the Wulver cluster, how to get an account, and allocation details, accessing installed software.

+

+ **Key Highlights:**

+

+ * Introduction to HPC (High Performance Computing)

+ * Hardware and architecture of Wulver

+ * Guidance on how to obtain an account and login to the cluster

+ * Data Storage systems

+ * Understanding allocations to utilize the shared resources

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Wulver_I_09_17_2025.pdf)

+

+

+

+

+

+- ### [Intro to Wulver: Job Scheduler & Submitting Jobs](../archived/index.md)

+

+ ---

+

+ This webinar provides the basic information on running jobs , how to run batch processing, and submit and manage the Slurm jobs.

+

+ **Key Highlights:**

+

+ * Access the software on Wulver

+ * Batch Processing

+ * Manage Slurm Jobs

+ * Troubleshooting Common Issues

+ * SlurmInteractive Jobs and Use GUI Apps

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Wulver_II_10_01_2025.pdf)

+

+

+

+

+

+- ### [Intro to Wulver: Focus on Job Efficiency](../archived/index.md)

+

+ ---

+

+ This webinar provides more in-depth features of SLURM, how to run dependency, array jobs to run efficiently on the cluster.

+

+ **Key Highlights:**

+

+ * Sbatch : Some Examples

+ * salloc command

+ * Job Dependencies

+ * Job Arrays

+ * Checkpointing

+

+

+

+ [ Download Slides](../../assets/slides/Intro_to_Wulver_III_10_08_2025.pdf)

+

+

+

+

+

+- ### [Conda for Shared Environments](../archived/index.md)

+

+ ---

+

+ This webinar provides an introductory understanding of using Python for HPC and effectively managing their Python environments.

+

+ **Key Highlights:**

+

+ * Access Python on Wulver

+ * Introduction to Conda environments

+ * Install, uninstall and upgrade packages

+ * Best Practices for managing conda environments

+ * Common Python libraries for scientific computing

+

+

+

+ [ Download Slides](../../assets/slides/conda_training_11-05-2025.pdf)

+

+

+

+

+

+

+- ### [HPC User Meeting - Introduction to MIG](../archived/index.md)

+

+ ---

+

+ This in-person and virtual session provide an introductory understanding of using Multi-Instance GPUs (MIGs) on Wulver.

+

+ **Key Highlights:**

+

+ * What is MIG?

+ * Why MIG on Wulver?

+ * MIG Configuration Example

+ * Submitting Jobs (`srun` & `sbatch`)

+ * New Billing Model

+

+

+

+ [ Download Slides](../../assets/slides/Wulver_MIG_Dec03_2025.pdf)

+

+

\ No newline at end of file

diff --git a/docs/HPC_Events_and_Workshops/archived/2024/1_slurm.md b/docs/HPC_Events_and_Workshops/archived/2024/1_slurm.md

new file mode 100644

index 000000000..1575ff7a6

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/archived/2024/1_slurm.md

@@ -0,0 +1,29 @@

+---

+hide:

+ - toc

+---

+

+

+

+# SLURM Batch System Basics

+

+Join us for an informative webinar designed to introduce researchers, scientists, and HPC users to the fundamentals of the SLURM (Simple Linux Utility for Resource Management) workload manager. This virtual session will equip you with essential skills to effectively utilize HPC resources through SLURM.

+

+- Date: Sep 18th 2024

+- Location: Virtual

+- Time: 2:30 PM - 3:30 PM

+

+## Topics Covered

+

+* Introduction to SLURM and its role in HPC environments

+* Basic SLURM commands for job submission, monitoring, and management

+* How to write effective job scripts for various application types

+* Understanding SLURM partitions, quality of service, and job priorities

+* Best practices for resource requests and job optimization

+* Troubleshooting common issues in job submission and execution

+

+Our experienced HPC specialists will guide you through practical examples and provide tips for efficient use of SLURM in your research workflows. Whether you're new to HPC or looking to refine your SLURM skills, this webinar will help you maximize your productivity on SLURM-based clusters.

+

+## Registration

+

+Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#slurm-batch-system-basics) for the webinar recording and slides.

diff --git a/docs/HPC_Events_and_Workshops/archived/2024/2_containers.md b/docs/HPC_Events_and_Workshops/archived/2024/2_containers.md

new file mode 100644

index 000000000..f8ec42165

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/archived/2024/2_containers.md

@@ -0,0 +1,29 @@

+---

+hide:

+ - toc

+---

+

+

+

+# Introduction to Containers on Wulver

+

+The HPC training event on using Singularity containers provides participants with a comprehensive introduction to container technology and its advantages in high-performance computing environments.

+

+- Date: Oct 16th 2024

+- Location: Virtual

+- Time: 2:30 PM - 3:30 PM

+

+## Topics Covered

+

+* Introduction to containers and its role in HPC environments

+* Fundamentals of Singularity, including installation, basic commands, and workflow

+* Create and build containers using definition files and existing Docker images

+* How to execute containerized applications on HPC clusters

+* Use Containers via SLURM

+* Performance optimization techniques

+

+

+## Registration

+

+Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#introduction-to-containers-on-wulver) for the webinar recording and slides.

+

diff --git a/docs/HPC_Events_and_Workshops/archived/2024/3_slurm_advanced.md b/docs/HPC_Events_and_Workshops/archived/2024/3_slurm_advanced.md

new file mode 100644

index 000000000..b5be8a53a

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/archived/2024/3_slurm_advanced.md

@@ -0,0 +1,32 @@

+---

+hide:

+ - toc

+---

+

+

+

+# Job Arrays and Advanced Submission Techniques for HPC

+

+Elevate your High-Performance Computing skills with our advanced SLURM webinar! This session is designed for HPC users who are familiar with basic SLURM commands and are ready to dive into more sophisticated job management techniques.

+

+- Date: Nov 20th 2024

+- Location: Virtual

+- Time: 2:30 PM - 3:30 PM

+

+## Topics Covered

+

+### Job Arrays

+* Understanding the concept and benefits of job arrays

+* Syntax for submitting and managing job arrays

+* Best practices for efficient array job design

+

+### Advanced Job Submission Techniques

+* Dependency chains and complex workflows

+* Resource optimization strategies

+* Using SLURM's advanced options for improved job control

+* Techniques for balancing resource requests and job efficiency

+

+## Registration

+

+Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#job-arrays-and-advanced-submission-techniques-for-hpc) for the webinar recording and slides.

+

diff --git a/docs/HPC_Events_and_Workshops/archived/2024/4_nvidia.md b/docs/HPC_Events_and_Workshops/archived/2024/4_nvidia.md

new file mode 100644

index 000000000..1cf059c85

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/archived/2024/4_nvidia.md

@@ -0,0 +1,33 @@

+---

+hide:

+ - toc

+---

+

+# NVIDIA Workshop

+

+## Fundamentals of Accelerated Data Science

+

+Learn to use GPU-accelerated resources to analyze data. This is an intermediate level workshop that is intended for those who have some familiarity with Python, especially NumPy and SciPy libraries.

+

+- Date: July 15th 2024

+- Location: GITC 3700

+- Time: 9 AM - 5 PM

+

+### Schedule

+

+| Time | Topic |

+|:----:|:-----:|

+| 9:00 AM - 9:15 AM | Introduction |

+| 9:15 AM - 11:30 AM | GPU-Accelerated Data Manipulation |

+| 11:30 AM - 12:00 PM | GPU-Accelerated Machine Learning |

+| 12:00 PM - 1:00PM | Lunch |

+| 1:00 PM - 2:30 PM | GPU-Accelerated Machine Learning (contd) |

+| 2:45 PM - 4:45 PM | Project: Data Analysis to Save the UK |

+| 4:45 PM - 5:00 PM | Assessment and Q&A |

+

+Coffee and Lunch will be provided. See more detail about the workshop [here](https://www.nvidia.com/content/dam/en-zz/Solutions/deep-learning/deep-learning-education/DLI-Workshop-Fundamentals-of-Accelerated-Data-Science-with-RAPIDS.pdf).

+

+

+

+Registration is now closed.

+

diff --git a/docs/HPC_Events_and_Workshops/archived/2024/5_symposium.md b/docs/HPC_Events_and_Workshops/archived/2024/5_symposium.md

new file mode 100644

index 000000000..f2dcfe34a

--- /dev/null

+++ b/docs/HPC_Events_and_Workshops/archived/2024/5_symposium.md

@@ -0,0 +1,71 @@

+---

+hide:

+ - toc

+title: HPC Research Symposium

+---

+

+

+

+# HPC Research Symposium

+

+This past year has been transformative for HPC research at NJIT. The introduction of our new shared HPC cluster, Wulver, has expanded our computational capacity and made research into vital areas more accessible to our faculty. The Advanced Research Computing Services group of Information Services and Technology, in collaboration with Dell Technologies, invites you to a symposium on July 16, 2024, to celebrate the launch of Wulver.

+

+The Symposium will feature a keynote from Anthony Dina, Global Field CTO for Unstructured Data Solutions at Dell Technologies, and invited speakers from NJIT, Dibakar Datta from the Department of Mechanical and Industrial Engineering, and Cambridge Computer Services, Jose Alvarez. The Symposium will also feature several lightning talks from NJIT researchers highlighting the use of High Performance Computing resources in their research.

+

+Please join us to learn how our researchers use HPC resources and connect with the NJIT HPC community.

+

+

+- Date: July 16th, 2024

+- Location: Campus Center Atrium

+- Time: 9AM - 5PM

+

+## Agenda

+

+| Time | Session | Title

+|:-----:|:------:|:-----:

+| 9:00 - 9:15 | Welcome Remarks | **Ed Wozencroft**, Vice President for Digital Strategy & CIO |

+| 9:15 - 9:35 | Research Computing @ NJIT | _Overview of Research Computing Services_ **Gedaliah Wolosh**, Director High Performance Research Computing | +| 9:40 - 10:30 | Keynote | _It’s Time Research Behaves More Like Formula 1_

**Anthony Dina**, Global Field CTO for the Unstructured Data Solutions at Dell Technologies | +| 10:30 - 10:40 | Break | +| 10:40 - 11:20 | Invited Speaker | _Electro-Chemo-Mechanics of Multiscale Active Materials for Next-Generation Energy Storage_

**Dibakar Datta**, Associate Professor, Mechanical & Industrial Engineering | +| 11:20 - 12:00 | Lightning Talks I | _Hemodynamics and Cancer Cell Transport in a Tortuous in vivo Microvessel_

**Ali Kazempour**, Peter Balogh Research Group, Mechanical & Industrial Engineering | +| | | _Running Two-phase Flows on Wulver: Introduction to Basilisk_

**Matthew Cho**, Shahriar Afkhami Research Group, Mathematical Sciences +| | | _Temporal Super-Resolution of Solar Images with Generative AI_

**Jialing Li**, Jason Wang Research Group, Computer Science | +| | | _Numerical study of Thermo-Marangoni flow induced by a warm plate_

**Shivam Verma**, Pushpendra Singh Research Group, Mechanical & Industrial Engineering | +| 12:10 - 13:00 | Lunch | +| 13:00 - 13:30 | Invited Speaker | _Introduction to Grace Hopper and ARM Technology in Higher Education_

**Jose Alvarez**, Vice President Research Computing HPC/AI, Cambridge Computer Services | +| 13:30- 14:20 | Lightning Talks II | _Inference of Nullability Annotations using Machine Learning_

**Kazi Amanul Islam Siddiqui**, Martin Kellogg Research Group, Computer Science | +| | | _Deep Learning for Spatial Image Super-Resolution of Solar Observations_

**Chunhui Xu**, Jason Wang Research Group, Computer Science | +| | | _Volume Integral Method for Electromagnetic Equations_

**Matthew Cassini**, Thi Phong Nguyen Research Group, Mathematical Sciences | +| | | _Enhancing Region-based Image Captioning with Contextual Feature Exploitation_

**Al Shahriar Rubel**, Fadi Deek Research Group, Informatics | +| | | _Instability between the two-layer Poiseuille flow with the VOF method_

**Nastaran Rezaei**, Shahriar Afkhami Research Group, Mathematical Sciences | +| 14:20 - 14:30 | Break | | +| 14:30 - 15:00 | Research Computing @ NJIT | _Introducing Open OnDemand web portal: New Technologies on Wulver_

**Kate Cahill**, Associate Director High Performance Research Computing | +| 15:00 - 15:50 | Lightning Talks III | _Red Blood Cell Modeling Reveals 3D Angiogenic Wall Shear Stress Patterns_

**Mir Md Nasim Hossain**, Peter Balogh Research Group, Mechanical & Industrial Engineering | +| | | _Understanding and Forecasting Space Weather with Artificial Intelligence_

**Hongyang Zhang**, Jason Wang Research Group, Computer Science| +| | | _Entropic pressure on fluctuating solid membranes_

**Rubayet Hassan**, Fatemeh Ahmadpoor Research Group, Mechanical & Industrial Engineering | +| | | _Investigation of PFAS Adsorption on Functionalized COF-300_

**Daniel Mottern**, Joshua Young Research Group, Chemical & Materials Engineering | +| | | _Large Language models (LLM) for hardware_

**Deepak Vungarala**, Shaahin Angizi Research Group, Electrical and Computer Engineering | +| 15:50 - 16:00 | Break | +| 16:00 - 17:00 | Poster Session | _Molecular Dynamic study on Ar nano-bubble stability in water_

**Targol Teymourian**, Jay Meegoda Research Group, Civil & Environmental Engineering | +| | | _Possible mechanism for sonolytic degradation of PFAS_

**Laura Nwanebu**, Jay Meegoda Research Group, Civil & Environmental Engineering | +| | | _Entropic Force Near Fluctuating Surface_

**Rubayet Hassan**, Fatemeh Ahmadpoor Research Group, Mechanical & Industrial Engineering | +| | | _DFT investigations of the enantioselective phase-transfer-catalyzed aza-Michael cyclization of ureas_

**Diana Marlen Castaneda Bagatella**, Pier Champagne Research Group, Chemistry and Environmental Science | +| | | + + diff --git a/docs/HPC_Events_and_Workshops/archived/2024/6_slurm_workshop.md b/docs/HPC_Events_and_Workshops/archived/2024/6_slurm_workshop.md new file mode 100644 index 000000000..85ffba5dc --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2024/6_slurm_workshop.md @@ -0,0 +1,12 @@ +--- +hide: + - toc +--- + +# SLURM Workload Manager Workshop + +Advanced Research Computing Services in collaboration with SchedMD is pleased to announce a two-day workshop on SLURM Workload Manager on **August 13-14, 2024**. This immersive 2-day experience will take you through comprehensive technical scenarios with lectures, demos, and workshop lab environments. The Slurm trainer will assist in identifying commonalities between previously used resources and schedulers, offering increased understanding and adoption of [SLURM](slurm.md) job scheduling, resource management, and troubleshooting techniques. + +Registration is now closed. + + diff --git a/docs/HPC_Events_and_Workshops/archived/2024/7_Intro_to_MPI_Workshop.md b/docs/HPC_Events_and_Workshops/archived/2024/7_Intro_to_MPI_Workshop.md new file mode 100644 index 000000000..35209f771 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2024/7_Intro_to_MPI_Workshop.md @@ -0,0 +1,12 @@ +--- +hide: + - toc +--- + +# Intro to MPI Workshop + +NJIT is an in-person, satellite location for a two-day HPC workshop hosted by the **Pittsburgh Supercomputing Center (PSC)** on December 10 & 11. This is a great introduction to using MPI programming to scale up your computational research. Attendees will leave with a working knowledge of how to write scalable codes using MPI – the standard programming tool of scalable parallel computing. + +Registration is now closed. + +To download the training materials, check [MPI Workshop Agenda](https://t.e2ma.net/click/mhwgmh/mtd2z9gb/a459ow). diff --git a/docs/HPC_Events_and_Workshops/archived/2025/1_intro_to_Wulver_I.md b/docs/HPC_Events_and_Workshops/archived/2025/1_intro_to_Wulver_I.md new file mode 100644 index 000000000..af60dbc89 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/1_intro_to_Wulver_I.md @@ -0,0 +1,25 @@ +--- +hide: + - toc +--- + +# Introduction to Wulver: Getting Started + +This is the first webinar of the 2025 Spring semester introducing the NJIT HPC environment. This webinar provides basic information about our new High-Performance Computing (HPC) research cluster, [Wulver](wulver.md). + +- Date: Jan 22nd 2025 +- Location: Virtual +- Time: 2:30 PM - 3:30 PM + +## Topics Covered + +* Introduction to HPC (High Performance Computing) +* Hardware and architecture of Wulver +* Guidance on how to obtain an account and login to the cluster +* Understanding allocations to utilize the shared resources + +Our experienced HPC specialists will guide you through practical examples and provide tips for proper use of cluster in your research workflows. + +## Registration + +Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#introduction-to-wulver-getting-started) for the webinar recording and slides. diff --git a/docs/HPC_Events_and_Workshops/archived/2025/2_intro_to_Wulver_II.md b/docs/HPC_Events_and_Workshops/archived/2025/2_intro_to_Wulver_II.md new file mode 100644 index 000000000..07a36dd19 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/2_intro_to_Wulver_II.md @@ -0,0 +1,24 @@ +--- +hide: + - toc +--- + +# Introduction to Wulver: Accessing System & Running Jobs + +This is the second webinar of the 2025 Spring semester introducing the NJIT HPC environment. This webinar provided the basic information in learning more about our new High Performance Computing (HPC) research cluster, [Wulver](wulver.md). + +- Date: Jan 29th 2025 +- Location: Virtual +- Time: 2:30 PM - 3:30 PM + +## Topics Covered + +* HPC allocations +* How to access HPC software +* Introduction to SLURM and its role in HPC environments +* Basic SLURM commands for job submission, monitoring, and management +* Troubleshooting common issues in job submission and execution + +## Registration + +Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#introduction-to-wulver-accessing-system-running-jobs) for the webinar recording and slides. diff --git a/docs/HPC_Events_and_Workshops/archived/2025/3_conda_training.md b/docs/HPC_Events_and_Workshops/archived/2025/3_conda_training.md new file mode 100644 index 000000000..1d4ab8eff --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/3_conda_training.md @@ -0,0 +1,22 @@ +--- +hide: + - toc +--- + +# Python and Conda Environments in HPC: From Basics to Best Practices + +This is the third webinar of the 2025 Spring semester introducing the NJIT HPC environment. Participants will gain an introductory understanding of using Python for HPC and effectively managing their Python environments using [Conda](conda.md). This knowledge will empower them to leverage the power of Python for their scientific computing needs on HPC systems. + +- Date: Feb 26th 2025 +- Location: Virtual +- Time: 2:30 PM - 3:30 PM + +## Topics Covered + +* Learn how to manage Python environments using Conda. +* How to create Conda environments in different locations and install Python packages. +* Become familiar with common tools and libraries for scientific computing in Python. + + +## Registration +Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#python-and-conda-environments-in-hpc-from-basics-to-best-practices) for the webinar recording and slides. \ No newline at end of file diff --git a/docs/HPC_Events_and_Workshops/archived/2025/4_intro_to_linux.md b/docs/HPC_Events_and_Workshops/archived/2025/4_intro_to_linux.md new file mode 100644 index 000000000..bd16da433 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/4_intro_to_linux.md @@ -0,0 +1,24 @@ +--- +hide: + - toc +--- + +# Introduction to Linux + +This is the fourth webinar of the 2025 Spring semester, introducing the basics of the Linux operating system. This session is designed to help new users become familiar with Linux, an essential skill for working in High-Performance Computing (HPC) environments. + +- Date: March 26th 2025 +- Location: Virtual +- Time: 2:30 PM - 3:30 PM + +## Topics Covered + +* Basics of the Linux operating system +* Common commands and file system navigation +* Managing files, directories, and permissions +* Introduction to shell scripting for automation +* Connecting to remote systems and working with HPC cluster + + +## Registration +Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#introduction-to-linux) for the webinar recording and slides. diff --git a/docs/HPC_Events_and_Workshops/archived/2025/5_parallel_computing_with_matlab.md b/docs/HPC_Events_and_Workshops/archived/2025/5_parallel_computing_with_matlab.md new file mode 100644 index 000000000..ce1fcd768 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/5_parallel_computing_with_matlab.md @@ -0,0 +1,26 @@ +--- +hide: + - toc +--- + +# Parallel Computing with MATLAB: Hands on workshop + +During this hands-on workshop, we will introduce parallel and distributed computing in MATLAB with a focus on speeding up application codes and offloading computers. By working through common scenarios and workflows using hands-on demos, you will gain a detailed understanding of the parallel constructs in MATLAB, their capabilities, and some of the common hurdles that you'll encounter when using them. + +- Date: April 16th 2025 +- Location: Virtual +- Time: 1:00 PM - 4:00 PM +- Hosted by Mathworks - A MATLAB account is required to participate.. + +## Topics Covered + +* Multithreading vs multiprocessing +* When to use parfor vs parfeval constructs +* Creating data queues for data transfer +* Leveraging NVIDIA GPUs +* Parallelizing Simulink models +* Working with large data + + +## Registration +Registration is now closed. diff --git a/docs/HPC_Events_and_Workshops/archived/2025/6_intro_to_OnDemand.md b/docs/HPC_Events_and_Workshops/archived/2025/6_intro_to_OnDemand.md new file mode 100644 index 000000000..c3d815161 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/6_intro_to_OnDemand.md @@ -0,0 +1,25 @@ +--- +hide: + - toc +--- + +# Open OnDemand on Wulver + +[Open OnDemand](https://ondemand.njit.edu) is a browser-based gateway to NJIT's Wulver cluster and shared storage. + +- Date: April 30th 2025 +- Location: Virtual +- Time: 2:30 PM - 3:30 PM + +## Topics Covered + +* Explore and manage your files on the cluster +* Run interactive tools like Jupyter Notebook and RStudio +* Launch a full Linux desktop environment in your browser +* Submit and monitor SLURM jobs +* Track resource usage and optimize job performance + + +## Registration +Registration is now closed. Check the [HPC training](../../Workshop_and_Training_Videos/index.md#open-ondemand-on-wulver) for the webinar recording and slides. + diff --git a/docs/HPC_Events_and_Workshops/archived/2025/7_MATLAB_on_Wulver.md b/docs/HPC_Events_and_Workshops/archived/2025/7_MATLAB_on_Wulver.md new file mode 100644 index 000000000..4ec74f46c --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/7_MATLAB_on_Wulver.md @@ -0,0 +1,36 @@ +--- +hide: + - toc +--- + +# HPC Summer Workshop: MATLAB Parallel Computing Hands-On Using Wulver + +## Overview +Join us for an interactive webinar hosted at NJIT's HPC facility, where MATLAB expert (Evan Cosgrov) +will guide participants through practical techniques for accelerating code and workflows using MATLAB’s parallel computing tools. +Through live demonstrations and guided examples, you’ll gain a solid understanding of how to parallelize MATLAB code, overcome common challenges, and optimize performance across distributed computing environments. + +Each participant will have access to the [OnDemand Matlab server](https://ondemand.njit.edu/pun/sys/dashboard/batch_connect/sys/jupyter-matlab/session_contexts/new) running on [Wulver](https://hpc.njit.edu/). + +## Guide to access Matlab via OnDemand: +Users need use `Jupyter Matlab Proxy` to use MATLAB on OnDemand. + +## Date and Location: +- Date: June 12th 2025 +- Location: Campus Center 235 +- Time: 1:00 PM - 4.00 PM + +## Topics Covered + +* How to identify bottlenecks in serial MATLAB code and convert them to run in parallel. +* Practical differences between `parfor` and `parfeval`, and how to choose the right one. +* Creating asynchronous tasks and managing outputs with DataQueue. +* Running Simulink models in parallel and accelerating simulation tasks. +* Leveraging GPU resources to boost performance in compute-intensive operations. +* Working with large datasets using tall arrays and distributed arrays. +* Best practices for launching and managing MATLAB parallel pools. + + +## Registration +Registration is now closed. + diff --git a/docs/HPC_Events_and_Workshops/archived/2025/8_PSC_Machine_Learning_workshop.md b/docs/HPC_Events_and_Workshops/archived/2025/8_PSC_Machine_Learning_workshop.md new file mode 100644 index 000000000..9067d77ba --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/2025/8_PSC_Machine_Learning_workshop.md @@ -0,0 +1,22 @@ +--- +hide: + - toc +--- + +# HPC Summer Workshop: PSC Machine Learning and BIG DATA Workshop + +## Overview +The Pittsburgh Supercomputing Center is pleased to present a Machine Learning and Big Data workshop. This workshop will focus on topics including big data analytics and machine learning with Spark, and deep learning using Tensorflow. This will be an IN PERSON event hosted by various satellite sites, there WILL NOT be a direct to desktop option for this event. + + +## Date and Location: +- Date: July 29-30, 2025 +- Location: Room 2315A GITC +- Time: 11:00 AM - 5.00 PM + +## Topics Covered +Check details at [PSC Machine Learning and BIG DATA Workshop](https://support.access-ci.org/events/8089) + +## Registration +Registration is now closed. + diff --git a/docs/HPC_Events_and_Workshops/archived/index.md b/docs/HPC_Events_and_Workshops/archived/index.md new file mode 100644 index 000000000..11c29b91b --- /dev/null +++ b/docs/HPC_Events_and_Workshops/archived/index.md @@ -0,0 +1,115 @@ +--- +hide: + - toc +--- + +# Archived HPC Workshops + +## 2025 + + +=== "Fall" + + ```python exec="on" + import re + import pandas as pd + + df = pd.read_csv('docs/assets/tables/trainings/2025_fall.csv', keep_default_na=False) + + def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + + df = df.applymap(fix_cell) + print(df.to_markdown(index=False)) + ``` + +=== "Summer" + + ```python exec="on" + import re + import pandas as pd + + df = pd.read_csv('docs/assets/tables/trainings/2025_summer.csv', keep_default_na=False) + + def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + + df = df.applymap(fix_cell) + print(df.to_markdown(index=False)) + ``` +=== "Spring" + + ```python exec="on" + import re + import pandas as pd + + df = pd.read_csv('docs/assets/tables/trainings/2025_spring.csv', keep_default_na=False) + + def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + + df = df.applymap(fix_cell) + print(df.to_markdown(index=False)) + ``` + +## 2024 + +=== "Fall" + + ```python exec="on" + import re + import pandas as pd + + df = pd.read_csv('docs/assets/tables/trainings/2024_fall.csv', keep_default_na=False) + + def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + + df = df.applymap(fix_cell) + print(df.to_markdown(index=False)) + ``` + +=== "Summer" + + ```python exec="on" + import re + import pandas as pd + + df = pd.read_csv('docs/assets/tables/trainings/2024_summer.csv', keep_default_na=False) + + def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + + df = df.applymap(fix_cell) + print(df.to_markdown(index=False)) + ``` diff --git a/docs/HPC_Events_and_Workshops/index.md b/docs/HPC_Events_and_Workshops/index.md new file mode 100644 index 000000000..780c030e7 --- /dev/null +++ b/docs/HPC_Events_and_Workshops/index.md @@ -0,0 +1,33 @@ +--- +hide: + - toc +--- + +# HPC Events + +## 2026 Spring +Please check our workshop schedule for this spring season. Expand each section to view more details about the event. For webinars, the links will be sent to your email once you register. The links to slides and recordings will be updated after each webinar. For the HPC User Meeting, users are encouraged to register using the form provided in the registration link; however, registration is not mandatory. If you forget or miss registering, you are still welcome to stop by the location listed in the schedule below. + +```python exec="on" +import re +import pandas as pd + +df = pd.read_csv('docs/assets/tables/trainings/2026_spring.csv', keep_default_na=False) + +def fix_cell(s): + if not isinstance(s, str): + return s + s = re.sub(r'(\()([^)]*?)index\.md', r'\1\2', s) + s = re.sub(r'(\b6_[\w\-.]+)\.md\b', r'\1', s) + s = s.replace('(//', '(/') + + return s + +df = df.applymap(fix_cell) +print(df.to_markdown(index=False)) +``` +!!! info "Archived Workshops" + + Click here to review our [past workshops](archived/index.md)! + + diff --git a/docs/MIG/index.md b/docs/MIG/index.md new file mode 100644 index 000000000..53e1db287 --- /dev/null +++ b/docs/MIG/index.md @@ -0,0 +1,76 @@ +# MIG Overview + +MIG (Multi-Instance GPU) is a technology introduced by NVIDIA starting with its Ampere architecture (e.g., A100). It enables a single physical GPU to be partitioned into multiple smaller, isolated GPU instances — each with dedicated compute cores, memory, cache, and bandwidth. These instances function independently and appear to software as discrete GPUs. + +This allows multiple users or processes to simultaneously run GPU workloads without interfering with one another, improving resource utilization, reducing wait times in job queues, and increasing throughput in shared computing environments like Wulver. + + +## Why MIG on Wulver + + +

+  +

+  +

+

+

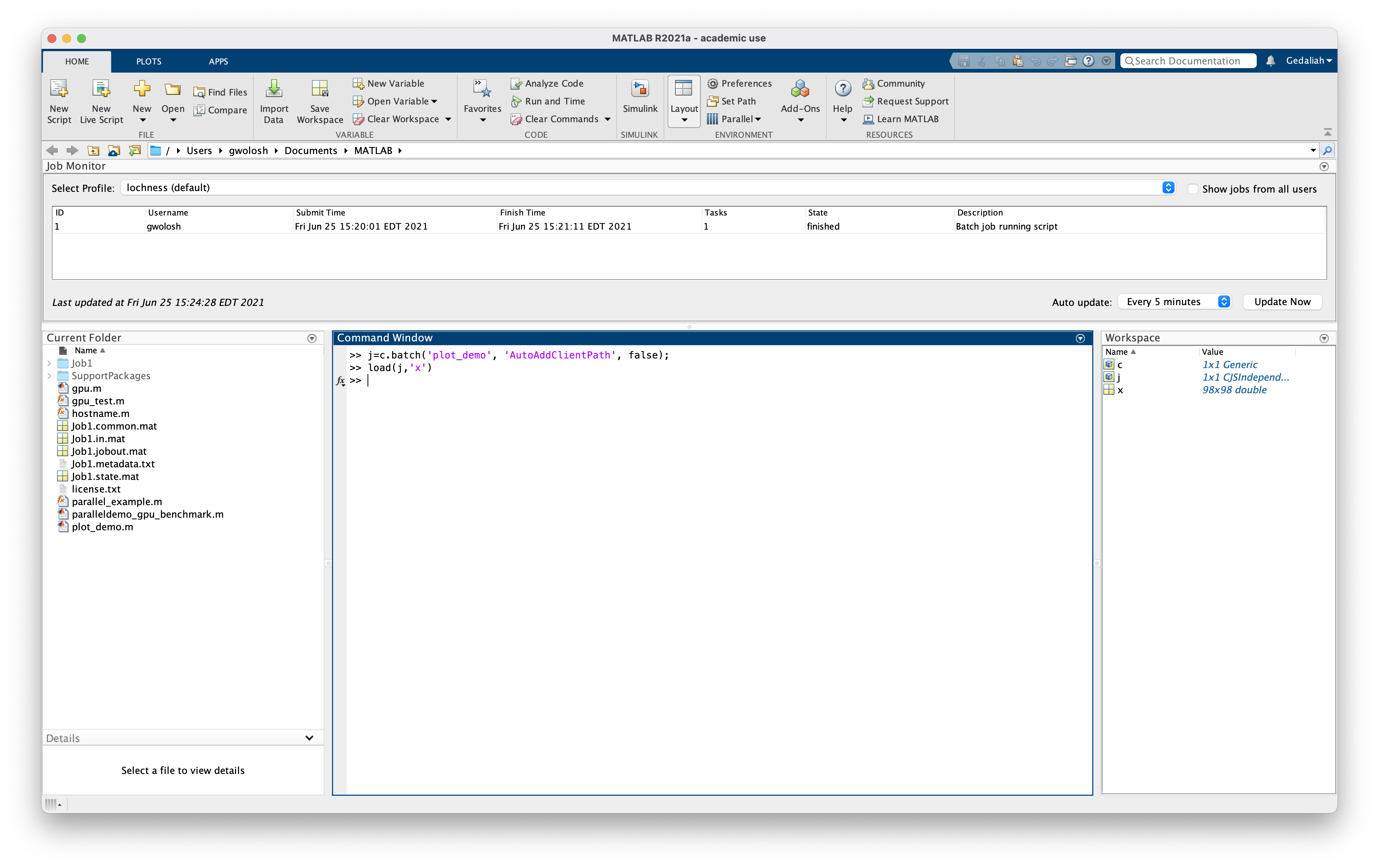

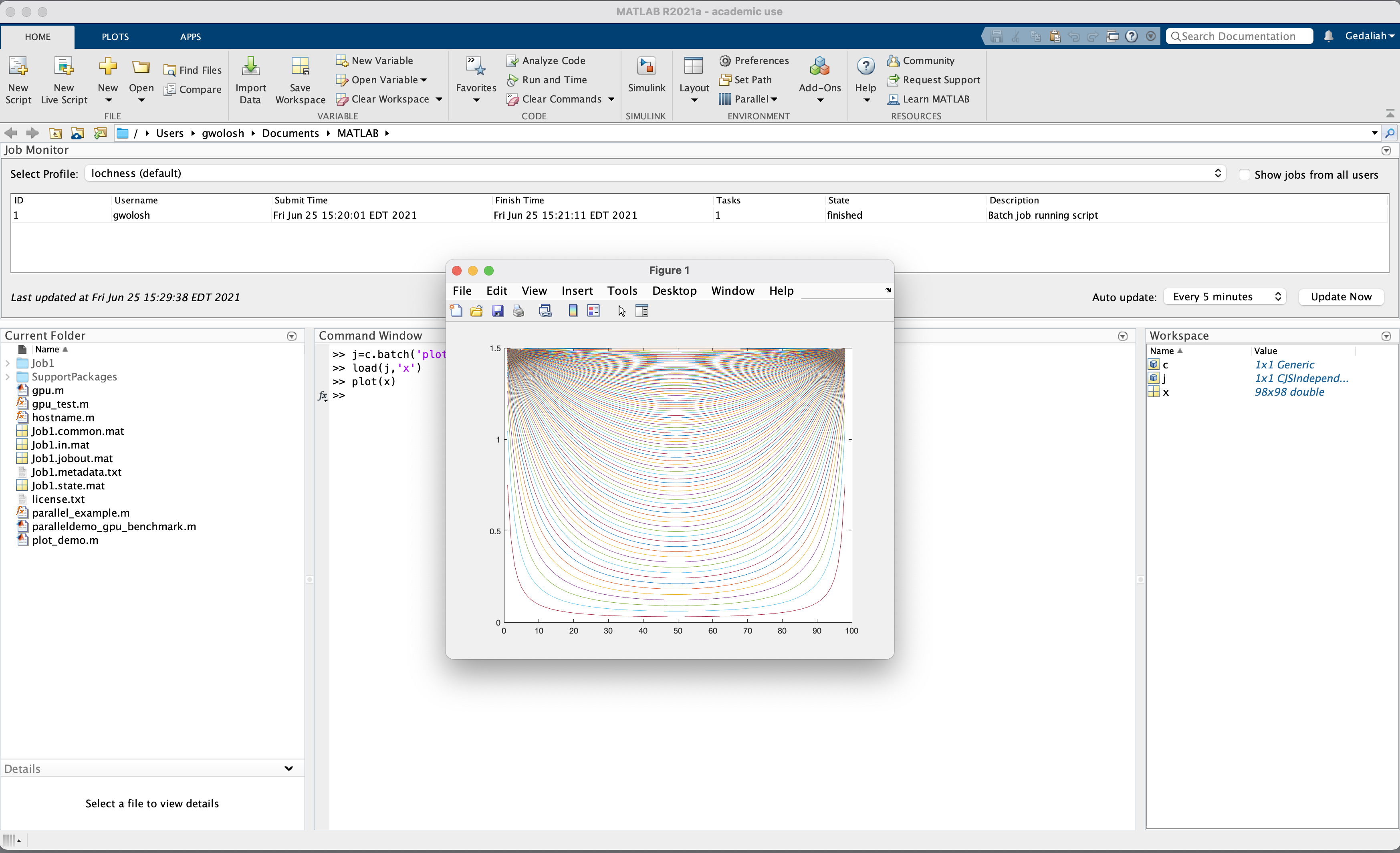

+The plots above show cluster-wide usage of the A100 GPUs over the last 3 months. In short: GPUs were **heavily allocated** but **lightly utilized**, which means lots of capacity sat idle behind single jobs.

+

+- **Allocated vs. used**: GPUs were reserved ~85–95% of the time, while average compute utilization was ~25–50% and memory utilization stayed mostly under 20%.

+

+- **Impact**: Long queue times and poor overall throughput when full GPUs are booked for workloads that only need a fraction of the device.

+

+- **MIG rationale**: Partitioning A100s into 10/20/40 GB slices lets multiple right-sized jobs run concurrently, improving SU efficiency and time-to-results without requiring more hardware.

+

+## MIG Implementation in Wulver

+

+MIG is implemented on selected **NVIDIA A100 80GB** GPUs. Wulver currently supports the following MIG configurations:

+

+- 10gb

+- 20gb

+- 40gb

+

+These profiles correspond to different partitions of compute and memory resources from the A100 80GB GPU. You can view a full comparison in the [Profile Comparison section](../MIG/profile-comparison.md).

+

+Each profile maps to a Service Unit (SU) usage factor that reflects its computational weight — ranging from 2 SU/hour for a 10gb instance up to 16 SU/hour for a full GPU. You can check full SU overview of MIG [here](../MIG/job-submission-and-su-charges.md#understanding-su-charges).

+

+MIGs address key challenges in shared environments:

+

+- **Fair resource sharing**: MIG enables multiple users to share a single GPU without stepping on each other’s performance.

+

+- **Right-sizing workloads**: Users can request GPU capacity that matches their actual workload requirements.

+

+- **Improved scheduling efficiency**: MIG instances are smaller and easier to schedule than full GPUs.

+

+- **Reduced idle time**: MIG reduces GPU underutilization by splitting large resources into usable chunks.

+

+

+## Why You Should Use MIG Instances

+

+**Lower SU Cost for Smaller Workloads**: +

+  +

++MIG lets you select a GPU slice that meets your job’s needs without paying for more power than you use. +For example: + +- A small training job or inference script may only require `10–20` GB of GPU memory. +- Running such a job on a `10gb` or `20gb` MIG instance will consume only a fraction of the SUs compared to using a full GPU. + +**No Need to Reserve a Full GPU**:

+Some jobs — like unit testing, data preprocessing with GPU acceleration, or light model inference — simply don’t need the full resources of an 80GB A100. MIG allows users to avoid bottlenecks and free up resources by choosing an appropriately sized instance.This is especially valuable when: + +- Cluster demand is high +- Your jobs don’t need massive memory or compute +- You're trying to run multiple parallel tasks independently + +This is especially important if you’re working within a research group’s annual SU allocation on Wulver. + +**Isolated and Predictable Performance**: