Note: These notebooks contain step-by-step TCN (Temporal Convolutional Network) code for applications in different domains.

`pip install keras-tcn`

| Topic | Github | Colab |
|---|---|---|
| MNIST Dataset | MNIST Dataset | |
| IMDB Dataset | IMDB Dataset | |
| Time Series Dataset Milk | Time Series Dataset Milk | |
| Many to Many Regression Approach | MtoM Regression | |
| Self Generated Dataset Approach | Self Generated Dataset | |
| Cifar10 Image Classification | Cifar10 Image Classification | |

Article: https://arxiv.org/pdf/1803.01271.pdf

GitHub: https://github.com/philipperemy/keras-tcn

- TCNs exhibit longer memory than recurrent architectures with the same capacity.

- They consistently perform better than LSTM/GRU architectures on a wide range of tasks (Seq. MNIST, Adding Problem, Copy Memory, Word-level PTB...).

- They offer parallelism, a flexible receptive field size, stable gradients, low memory requirements for training, and variable-length inputs.

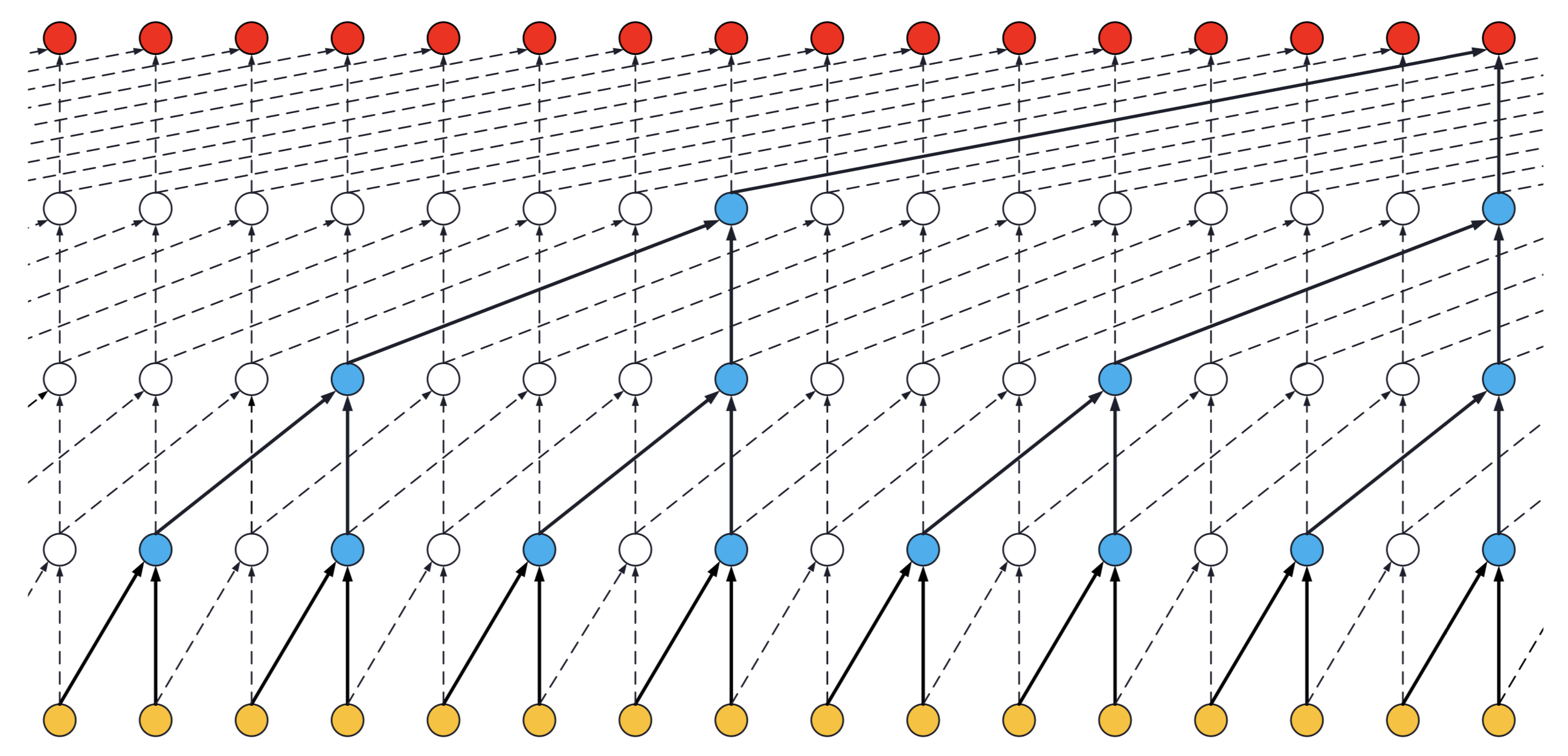

Visualization of a stack of dilated causal convolutional layers (Wavenet, 2016)

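To make the figure concrete, here is a minimal pure-Python sketch of a stack of dilated causal convolutions. It is not the keras-tcn implementation: the helper names `causal_dilated_conv` and `dilated_stack` are hypothetical, every kernel weight is set to 1, and there is a single convolution per dilation with no residual blocks. It feeds unit impulses through the stack and records which input positions can influence the last output.

```python
def causal_dilated_conv(x, weights, dilation):
    """Causal 1D convolution: y[t] = sum_k weights[k] * x[t - k * dilation].

    Positions before the start of the sequence are treated as zeros.
    """
    y = []
    for t in range(len(x)):
        total = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                total += w * x[idx]
        y.append(total)
    return y


def dilated_stack(x, kernel_size, dilations):
    """Apply one causal convolution per dilation (all weights 1, an assumption)."""
    for d in dilations:
        x = causal_dilated_conv(x, [1.0] * kernel_size, d)
    return x


# Probe the stack with unit impulses to find which inputs reach the last output.
n = 32
influencing = []
for i in range(n):
    impulse = [0.0] * n
    impulse[i] = 1.0
    out = dilated_stack(impulse, kernel_size=2, dilations=[1, 2, 4, 8])
    if out[-1] != 0.0:
        influencing.append(i)

print(len(influencing), influencing[0], influencing[-1])  # 16 16 31
```

With a kernel size of 2 and dilations `[1, 2, 4, 8]`, the last output sees the 16 most recent inputs and nothing earlier.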

`TCN(nb_filters=64, kernel_size=2, nb_stacks=1, dilations=[1, 2, 4, 8, 16, 32], padding='causal', use_skip_connections=False, dropout_rate=0.0, return_sequences=True, activation='relu', kernel_initializer='he_normal', use_batch_norm=False, **kwargs)`

- `nb_filters`: Integer. The number of filters to use in the convolutional layers. Similar to `units` for LSTM.
- `kernel_size`: Integer. The size of the kernel to use in each convolutional layer.
- `dilations`: List. A dilation list. Example: `[1, 2, 4, 8, 16, 32, 64]`.
- `nb_stacks`: Integer. The number of stacks of residual blocks to use.
- `padding`: String. The padding to use in the convolutions. `'causal'` for a causal network (as in the original implementation) and `'same'` for a non-causal network.
- `use_skip_connections`: Boolean. Whether to add skip connections from the input to each residual block.
- `return_sequences`: Boolean. Whether to return the last output in the output sequence, or the full sequence.
- `dropout_rate`: Float between 0 and 1. Fraction of the input units to drop.
- `activation`: The activation used in the residual blocks: `o = activation(x + F(x))`.
- `kernel_initializer`: Initializer for the kernel weights matrix (Conv1D).
- `use_batch_norm`: Whether to use batch normalization in the residual layers or not.
- `kwargs`: Any other arguments for configuring the parent class `Layer`. For example `name=str`, the name of the model. Use unique names when using multiple TCNs.

Input shape: 3D tensor with shape `(batch_size, timesteps, input_dim)`.

`timesteps` can be `None`. This can be useful if the sequences have different lengths: see the Multiple Length Sequence Example.

Output shape:

- if `return_sequences=True`: 3D tensor with shape `(batch_size, timesteps, nb_filters)`.
- if `return_sequences=False`: 2D tensor with shape `(batch_size, nb_filters)`.
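The relationship between the two output shapes can be sketched in plain Python with nested lists standing in for tensors. The helpers `select_output` and `shape` are hypothetical, and the sketch assumes that `return_sequences=False` keeps only the final timestep of each sequence, which is the usual behaviour for a causal sequence layer; it does not call keras-tcn itself.

```python
def shape(nested):
    """Return the dimensions of a regular nested list as a tuple."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


def select_output(features, return_sequences):
    """features is shaped (batch_size, timesteps, nb_filters).

    Assumption: with return_sequences=False only the last timestep is kept.
    """
    if return_sequences:
        return features
    return [sequence[-1] for sequence in features]


batch_size, timesteps, nb_filters = 4, 10, 64
features = [[[0.0] * nb_filters for _ in range(timesteps)] for _ in range(batch_size)]

print(shape(select_output(features, return_sequences=True)))   # (4, 10, 64)
print(shape(select_output(features, return_sequences=False)))  # (4, 64)
```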

- Regression (Many to one) e.g. adding problem

- Classification (Many to many) e.g. copy memory task

- Classification (Many to one) e.g. sequential MNIST task

For a Many to Many regression, a cheap fix for now is to change the number of units of the final Dense layer.

- Receptive field = `nb_stacks_of_residuals_blocks * kernel_size * last_dilation`.

- If a TCN has only one stack of residual blocks with a kernel size of 2 and dilations `[1, 2, 4, 8]`, its receptive field is `2 * 1 * 8 = 16`. The image below illustrates it:

ks = 2, dilations = [1, 2, 4, 8], 1 block


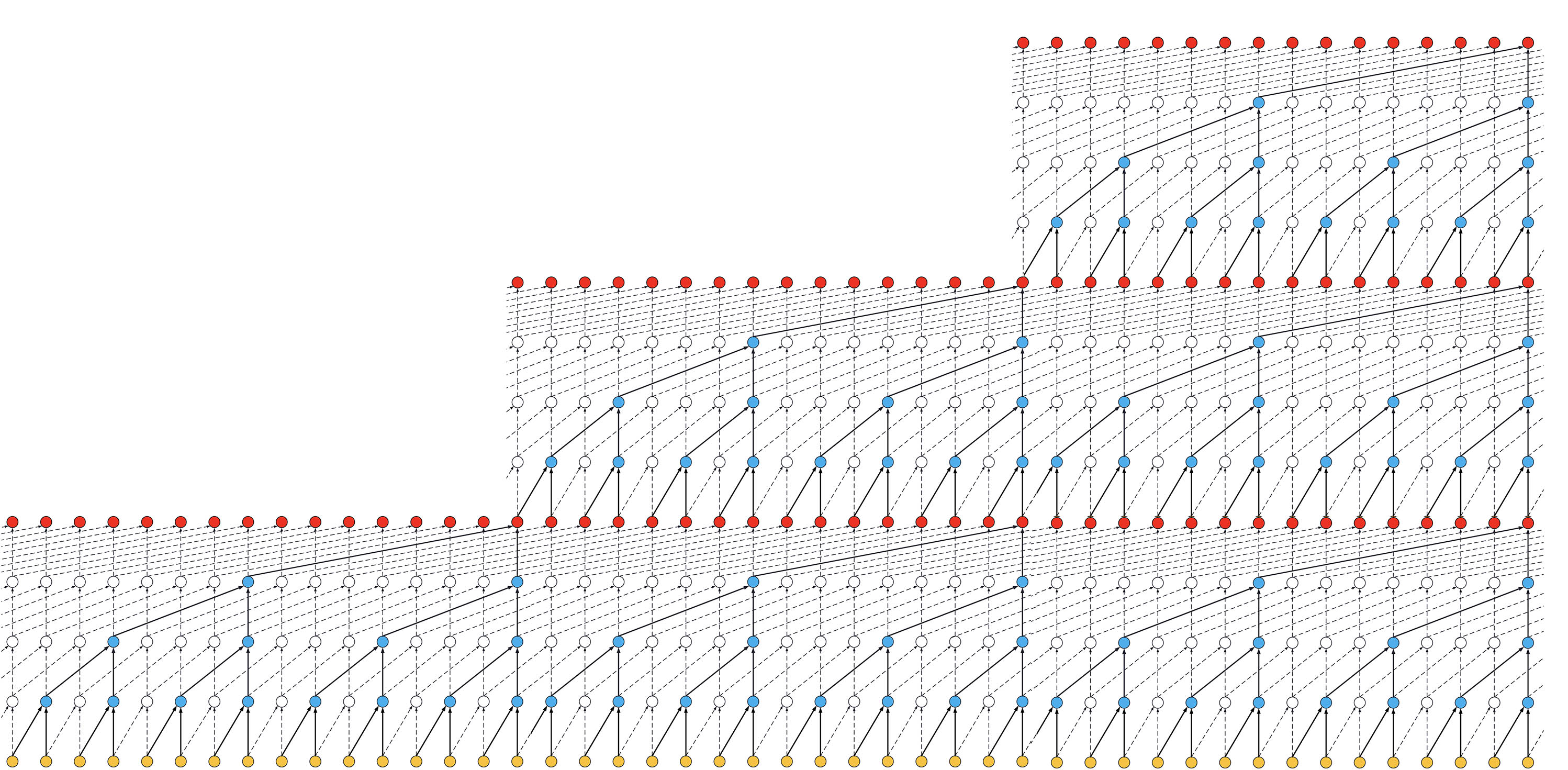

- If the TCN now has 2 stacks of residual blocks, you would get the situation below, that is, an increase in the receptive field to 32:

ks = 2, dilations = [1, 2, 4, 8], 2 blocks


- If we increase the number of stacks to 3, the receptive field grows again, as shown below:

ks = 2, dilations = [1, 2, 4, 8], 3 blocks


Thanks to @alextheseal for providing such visuals.
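The receptive-field rule of thumb stated above can be checked with a few lines of Python. The function name `receptive_field` is hypothetical, and the code simply evaluates the formula as written in this document rather than deriving the value from the layer internals.

```python
def receptive_field(nb_stacks, kernel_size, dilations):
    """Rule of thumb from this document: stacks * kernel size * last dilation."""
    return nb_stacks * kernel_size * dilations[-1]


dilations = [1, 2, 4, 8]
for nb_stacks in (1, 2, 3):
    print(nb_stacks, receptive_field(nb_stacks, kernel_size=2, dilations=dilations))
# 1 16
# 2 32
# 3 48
```

When choosing `dilations` and `nb_stacks`, a reasonable starting point is to make this value at least as large as the longest dependency you expect in the input sequence.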

Making the TCN architecture non-causal allows it to take the future into consideration when making its predictions, as shown in the figure below.

However, it is then no longer suitable for real-time applications.

Non-Causal TCN - ks = 3, dilations = [1, 2, 4, 8], 1 block


To use a non-causal TCN, specify `padding='valid'` or `padding='same'` when initializing the TCN layers.
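The difference between causal and non-causal padding can be illustrated with a single convolution in pure Python. This is a sketch, not the keras-tcn code: `dilated_conv` is a hypothetical helper, all weights are 1, and `'same'` is assumed to centre an odd-sized kernel on the current timestep. An impulse is placed at timestep 5 and the code lists which output timesteps respond to it.

```python
def dilated_conv(x, weights, dilation, padding):
    """1D dilated convolution with zero padding ('causal' or 'same')."""
    span = (len(weights) - 1) * dilation
    # causal: the whole window lies in the past; same: window centred on t
    left = span if padding == "causal" else span // 2
    y = []
    for t in range(len(x)):
        total = 0.0
        for j, w in enumerate(weights):
            idx = t - left + j * dilation
            if 0 <= idx < len(x):
                total += w * x[idx]
        y.append(total)
    return y


impulse = [0.0] * 11
impulse[5] = 1.0
kernel = [1.0, 1.0, 1.0]  # kernel size 3, as in the non-causal figure

causal_hits = [t for t, v in enumerate(dilated_conv(impulse, kernel, 1, "causal")) if v != 0.0]
same_hits = [t for t, v in enumerate(dilated_conv(impulse, kernel, 1, "same")) if v != 0.0]

print(causal_hits)  # [5, 6, 7]
print(same_hits)    # [4, 5, 6]
```

With causal padding only timesteps 5 and later react to the input at timestep 5. With `'same'` padding the output at timestep 4 also reacts, meaning it has seen a future input, which is why a non-causal network cannot be used for real-time prediction.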

- https://github.com/philipperemy/keras-tcn (TCN for Keras)

- https://github.com/locuslab/TCN/ (TCN for Pytorch)

- https://arxiv.org/pdf/1803.01271.pdf (An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling)

- https://arxiv.org/pdf/1609.03499.pdf (Original Wavenet paper)

Note: All rights are reserved by the original author. This repository was created for educational purposes only.