[To-Do]

Notebook 0 : Loading and Visualizing the COCO Dataset

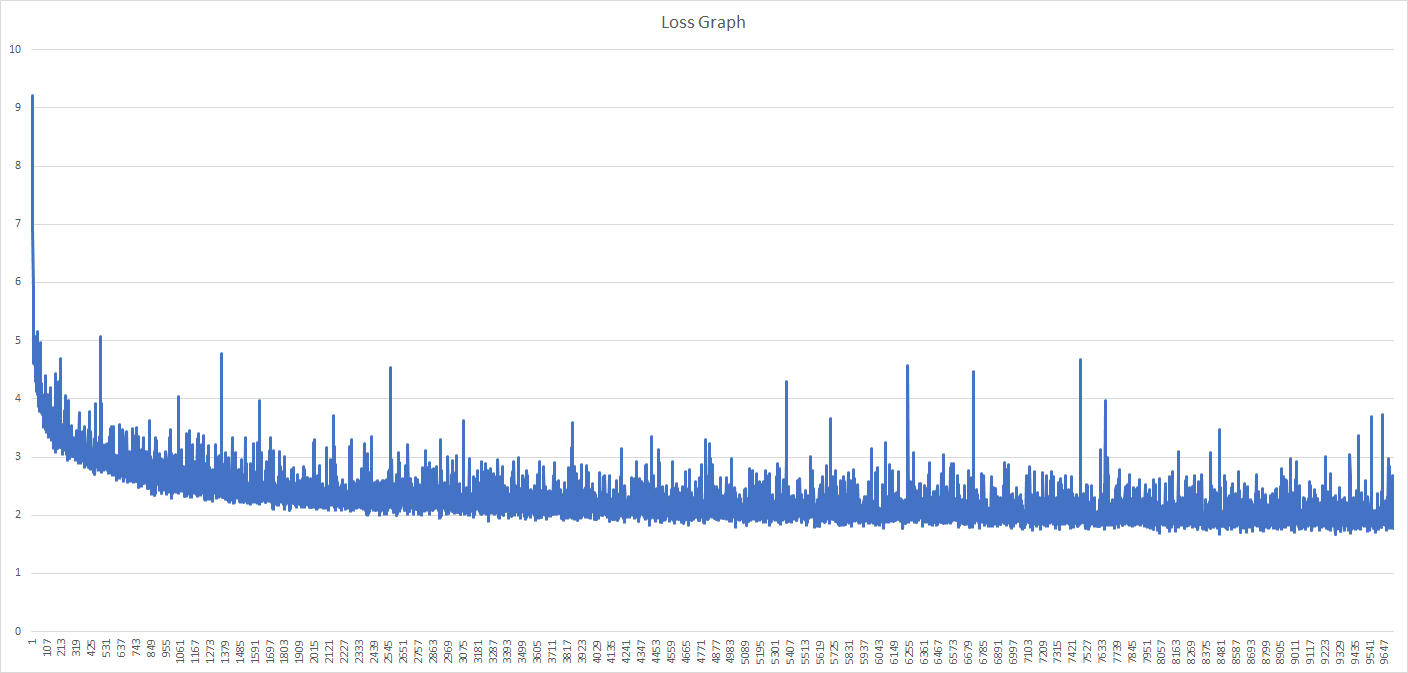

Notebook 1 : Setting up the Project and Verifying the Dataset and the Model

Notebook 2 : Training the CNN-RNN Model to Predict Captions

Notebook 3 : Validating the Model and Having Some Fun with It

- Clone this repo: https://github.com/cocodataset/cocoapi

git clone https://github.com/cocodataset/cocoapi.git

- Set up the COCO API (also described in the readme here)

cd cocoapi/PythonAPI

make

cd ..

- Download some specific data from here: http://cocodataset.org/#download (described below)

- Under Annotations, download:

- 2014 Train/Val annotations [241MB] (extract captions_train2014.json and captions_val2014.json, and place at locations cocoapi/annotations/captions_train2014.json and cocoapi/annotations/captions_val2014.json, respectively)

- 2014 Testing Image info [1MB] (extract image_info_test2014.json and place at location cocoapi/annotations/image_info_test2014.json)

- Under Images, download:

- 2014 Train images [83K/13GB] (extract the train2014 folder and place at location cocoapi/images/train2014/)

- 2014 Val images [41K/6GB] (extract the val2014 folder and place at location cocoapi/images/val2014/)

- 2014 Test images [41K/6GB] (extract the test2014 folder and place at location cocoapi/images/test2014/)
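Once the downloads are extracted, the layout described above can be checked with a short script. This is a minimal sketch, not part of the project: the helper name `missing_coco_paths` is hypothetical, and the root path `cocoapi` assumes the script runs from the directory that contains the clone.

```python
from pathlib import Path

# Paths relative to the cocoapi checkout, as listed in the download steps.
EXPECTED_FILES = [
    "annotations/captions_train2014.json",
    "annotations/captions_val2014.json",
    "annotations/image_info_test2014.json",
]
EXPECTED_DIRS = [
    "images/train2014",
    "images/val2014",
    "images/test2014",
]


def missing_coco_paths(cocoapi_root):
    """Return the expected files and folders that are absent under cocoapi_root.

    Hypothetical helper for illustration; it only checks that the paths exist,
    not that the archives were extracted completely.
    """
    root = Path(cocoapi_root)
    missing = [p for p in EXPECTED_FILES if not (root / p).is_file()]
    missing += [p for p in EXPECTED_DIRS if not (root / p).is_dir()]
    return missing


if __name__ == "__main__":
    # Assumption: the clone lives in ./cocoapi relative to the working directory.
    for path in missing_coco_paths("cocoapi"):
        print("missing:", path)
```

An empty result means every file and folder named in the steps above is in place.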

- The project is structured as a series of Jupyter notebooks that are designed to be completed in sequential order (0_Dataset.ipynb, 1_Preliminaries.ipynb, 2_Training.ipynb, 3_Inference.ipynb).
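Before opening the first notebook, it can help to confirm that a caption file parses and to peek at its contents. The sketch below uses only the standard library and relies on the COCO caption format, where each entry under `annotations` carries an `image_id` and a `caption`. The function name `captions_by_image` is hypothetical, and the notebooks themselves may load the data differently (for example through the COCO API built earlier).

```python
import json
from collections import defaultdict


def captions_by_image(annotation_file):
    """Map each image id to the list of captions written for that image."""
    with open(annotation_file, "r", encoding="utf-8") as f:
        data = json.load(f)
    grouped = defaultdict(list)
    # COCO caption files store one record per caption under "annotations".
    for ann in data["annotations"]:
        grouped[ann["image_id"]].append(ann["caption"].strip())
    return dict(grouped)
```

For example, `captions_by_image("cocoapi/annotations/captions_val2014.json")` would return a dictionary keyed by image id, assuming the file was placed at the location given in the download steps.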