
Garbage collector in Ginkgo #993

Comments

This would not be an issue if we were storing temporary data inside solvers, right?

Yes, in general for any class, you could have a temporary storage in place of this, but that would mean:

I think having temporary data inside solvers (or their base classes) is a good first step, but it may not be general enough, and I think this approach is a more general alternative.

What you are describing (eliminating all


Something may be related to it.


Yes, I agree that if each class (or one of its base classes) has temporary array members, these can be used instead, and for the most part the behaviour would be equivalent (except for the deferred/selective allocation). The management of these temporaries would also have to be done individually for each class. In this case, the allocation can happen either in the generate or in the (first) apply. I think that is just an implementation detail and not too important, at least for the discussion here. What I wanted to propose is more on an object level than a class level: the object takes care of the deallocation instead of relying on class-level temporaries. This might allow us to have more expressive code and slightly lower memory usage. For example, consider the case below:

```cpp
#include <ginkgo/ginkgo.hpp>

__global__ void kernel(int* data)
{
    // Do something
}

int main()
{
    // Initialize executor and setup
    // ...
    {
        // arr1 is deallocated when exiting this scope,
        // that is, a cudaFree is called.
        gko::Array<int> arr1(cuda, 100);
        kernel<<<1, 1, 0, stream1>>>(arr1.data());
    }
    // Despite operating on different data
    // and on different streams, the kernels and streams are
    // synchronized.
    gko::Array<int> arr2(cuda, 100);
    kernel<<<1, 1, 0, stream2>>>(arr2.data());
}
```

Now imagine something like a garbage collector (probably not a good name) that does not necessarily call `cudaFree` when an array goes out of scope:

```cpp
#include <ginkgo/ginkgo.hpp>

__global__ void kernel(int* data)
{
    // Do something
}

int main()
{
    // Initialize executor and setup
    // ...
    {
        // Now arr1 is not deallocated at the scope exit, but the deletion is deferred.
        // (deferring_deleter is a hypothetical deleter that defers the free.)
        gko::Array<int> arr1(cuda, 100, deferring_deleter);
        kernel<<<1, 1, 0, stream1>>>(arr1.data());
    }
    // Due to no synchronizing calls between the kernels,
    // and given that they are on different streams, the operations are executed
    // asynchronously.
    gko::Array<int> arr2(cuda, 100);
    kernel<<<1, 1, 0, stream2>>>(arr2.data());
}
```

There are multiple ways this deferring deleter could be implemented:

Thank you for the clarification! That is a much more limited view that I could potentially get on board with. It slightly reminds me of Herb Sutter's deferred_ptr proposal. I think the right place to do this kind of deferred deletion would be the Executor, since it outlives all other objects involved. We could have a deletion queue in our executors that is flushed regularly, e.g. on synchronize or if a certain amount of time has passed (i.e. run all deferred

The other question would be how this can be integrated into Array. We could either defer all free calls, or add a flag/custom deleter that calls

On terminology: garbage collection usually refers to a process that marks objects for deletion based on their reachability. What you are describing is slightly different, since the Array objects notify the Executor directly of their destruction, so this is more like a memory pool without data reuse. Extending the allocator functionality of Executor is definitely worth investigating though, especially for small objects.

In general, I am not sure whether this is the right solution to your problem though: Due to the use of Array, all of our
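A minimal sketch of the executor-owned deletion queue suggested above, assuming the backend's free function is passed in as a callback. In a CUDA backend that callback would wrap `cudaFree`; here plain `::operator delete` is used so the sketch runs on the host. `DeferredFreeQueue`, `SketchExecutor`, `defer_free` and `pending_frees` are hypothetical names, not Ginkgo API, and only the flush on `synchronize()` is shown, not the time-based one.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical queue of pending frees. free_fn stands in for the backend's
// real free (e.g. cudaFree in a CUDA backend).
class DeferredFreeQueue {
public:
    using free_fn_type = std::function<void(void*)>;

    explicit DeferredFreeQueue(free_fn_type free_fn)
        : free_fn_(std::move(free_fn))
    {}

    // Record a pointer instead of freeing it right away. The mutex allows
    // objects to be destroyed from several host threads.
    void defer(void* ptr)
    {
        std::lock_guard<std::mutex> guard(mutex_);
        pending_.push_back(ptr);
    }

    // Free everything recorded so far; returns how many pointers were freed.
    std::size_t flush()
    {
        std::vector<void*> to_free;
        {
            std::lock_guard<std::mutex> guard(mutex_);
            to_free.swap(pending_);
        }
        for (void* ptr : to_free) {
            free_fn_(ptr);
        }
        return to_free.size();
    }

    std::size_t pending() const
    {
        std::lock_guard<std::mutex> guard(mutex_);
        return pending_.size();
    }

    // Whatever is still queued is freed when the owner goes away.
    ~DeferredFreeQueue() { flush(); }

private:
    free_fn_type free_fn_;
    mutable std::mutex mutex_;
    std::vector<void*> pending_;
};

// Hypothetical executor: synchronize() is where a real backend would wait
// for its streams; only after that is it safe to run the deferred frees.
class SketchExecutor {
public:
    explicit SketchExecutor(DeferredFreeQueue::free_fn_type free_fn)
        : queue_(std::move(free_fn))
    {}

    void defer_free(void* ptr) { queue_.defer(ptr); }

    void synchronize()
    {
        // A CUDA backend would wait for the device here (not done in this sketch).
        queue_.flush();
    }

    std::size_t pending_frees() const { return queue_.pending(); }

private:
    DeferredFreeQueue queue_;
};
```

Because the executor outlives the arrays it allocates for, anything still queued when it is destroyed is freed by the queue's destructor.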

Thanks for pinging me @upsj. When I saw the title I wanted to comment already :). In general, I think this is a good idea, or at least it might be necessary. On the other hand, I think a proper implementation, like in many runtime systems, might be a bit tricky, and we would need to find the right balance between complexity and features. I also wonder if there's any library we can just reuse for this, as that might be easier, but I did not check what exists. This essentially boils down to adding a memory pool/memory cache, but it needs:

On the flip side, that would open up the ability to have permanent work buffers on the devices, which we can reuse between kernels, managed by that system.
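To make the memory pool/cache idea concrete, here is a minimal size-keyed cache, assuming blocks are only reused for requests of exactly the same size. `SizeCache`, `allocate`, `release` and `trim` are hypothetical names; the backend allocation and free functions are injected callbacks (host `::operator new`/`::operator delete` in the usage below, `cudaMalloc`/`cudaFree` in a real device backend). Note that `release` needs the block's size, because the cache has no other way to know which bucket the block belongs to.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <utility>
#include <vector>

// Hypothetical memory cache: released blocks are kept per size and handed
// out again instead of being returned to the backend.
class SizeCache {
public:
    using alloc_fn_type = std::function<void*(std::size_t)>;
    using free_fn_type = std::function<void(void*)>;

    SizeCache(alloc_fn_type alloc_fn, free_fn_type free_fn)
        : alloc_fn_(std::move(alloc_fn)), free_fn_(std::move(free_fn))
    {}

    SizeCache(const SizeCache&) = delete;
    SizeCache& operator=(const SizeCache&) = delete;

    void* allocate(std::size_t size)
    {
        auto it = cache_.find(size);
        if (it != cache_.end() && !it->second.empty()) {
            void* ptr = it->second.back();  // reuse a cached block
            it->second.pop_back();
            return ptr;
        }
        return alloc_fn_(size);  // cache miss: ask the backend
    }

    // The caller passes the size it allocated with, to pick the bucket.
    void release(void* ptr, std::size_t size) { cache_[size].push_back(ptr); }

    // Return all cached blocks to the backend.
    void trim()
    {
        for (auto& bucket : cache_) {
            for (void* ptr : bucket.second) {
                free_fn_(ptr);
            }
        }
        cache_.clear();
    }

    ~SizeCache() { trim(); }

private:
    alloc_fn_type alloc_fn_;
    free_fn_type free_fn_;
    std::map<std::size_t, std::vector<void*>> cache_;
};
```

On a device, a real pool would additionally have to make sure that a kernel still using a released block (possibly on another stream) has finished before the block is handed out again; this sketch ignores streams entirely.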

I think if we want to take on allocation, one important change would be adding a size parameter to

@pratikvn in your example, if we run the function several times, does `arr1` from different runs end up in the same memory or in different memory?

Have you considered using `cudaMallocAsync` + `cudaFreeAsync` to solve the problem described in the original post? You can even use `cudaFreeAsync` to free a pointer allocated by `cudaMalloc`.

Closing this after discussion in the group

In many cases, especially when doing things in an asynchronous fashion, there is a need to avoid synchronizing with either the host or the default execution stream (context). This is especially relevant on GPU devices. Most functions, such as kernel launches and device(host) to device(host) memory copies, can be made asynchronous with respect to the host/default stream because they can take a stream/execution context to perform the operation on. In most cases, there are two functions that are not necessarily asynchronous:

From empirical observations, it looks like many of the programming models that are relevant to us (CUDA, HIP and DPCPP), by default can execute

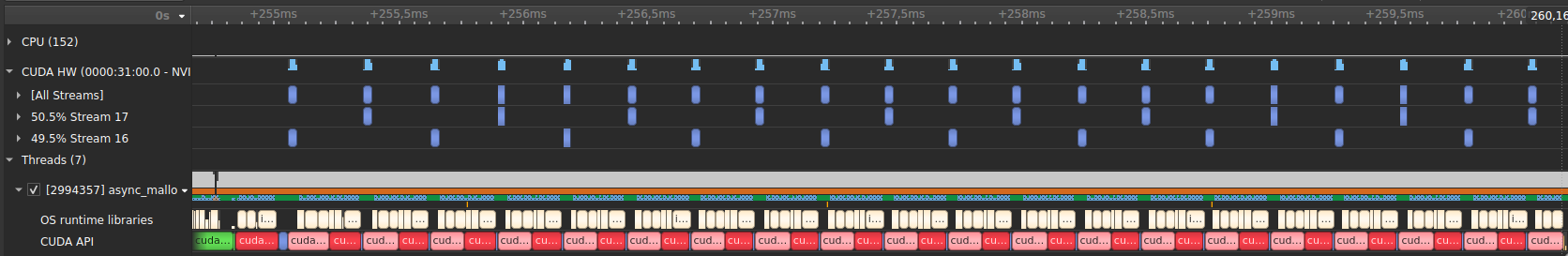

mallocfunctions in an asynchronous fashion, butfreefunctions are necessarily synchronizing to ensure correctness and prevent use after free issues. Below is a simple reproducer that demonstrates the above observation with the CUDA programming model. The same can be duplicated for the HIP programming model withs/cuda/hip(with the correct HIP header).resulting in a timeline that looks like this:

You can see that the streams are serialized here due to the interleaved `cudaFree`s. If we, for example, change the loop to:

you can have concurrent execution of the two streams, but due to the `cudaFree`, each loop iteration is synchronized with respect to the next, and you see a timeline like this:

But if you move the `cudaFree` outside the loop, with the `malloc`s still inside the loop, you can get concurrent execution between two loop iterations as well. For example:

results in the following timeline:

This means that a lot can be gained by deferring `cudaFree` to a later stage, mainly in terms of asynchronous execution. To handle this, I would like to propose something like a garbage collector for device-allocated memory. This garbage collector would basically be some kind of deferred `deleter` for an object and could be implemented through the `deleter` semantics, for example within the `Array` class. Some things to discuss:

I just wanted to throw this idea out and not necessarily advocate implementing it right now, but if we could live with some of the disadvantages, then I think this could be a nice thing to have.
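A minimal sketch of a deferred deleter implemented through deleter semantics, using `std::unique_ptr` with a custom deleter as a stand-in for `Array`'s own deleter. `PendingFrees`, `DeferringDeleter` and `DeferredArray` are hypothetical names, and `delete[]` stands in for the device free (e.g. `cudaFree`) that would run later at a synchronization point.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical list of pointers whose release has been deferred.
struct PendingFrees {
    std::vector<int*> ptrs;

    // Called at a synchronization point in a real backend.
    void free_all()
    {
        for (int* p : ptrs) {
            delete[] p;  // stand-in for the device free
        }
        ptrs.clear();
    }

    ~PendingFrees() { free_all(); }
};

// Deleter that does not free, but hands the pointer to a PendingFrees list.
struct DeferringDeleter {
    PendingFrees* pending;

    void operator()(int* p) const { pending->ptrs.push_back(p); }
};

// Array-like owner whose storage is released through the deleter above,
// in the spirit of passing a custom deleter to an array class.
class DeferredArray {
public:
    DeferredArray(std::size_t n, PendingFrees& pending)
        : data_(new int[n](), DeferringDeleter{&pending}), size_(n)
    {}

    int* data() { return data_.get(); }
    std::size_t size() const { return size_; }

private:
    std::unique_ptr<int[], DeferringDeleter> data_;
    std::size_t size_;
};
```

When a `DeferredArray` goes out of scope, its pointer is only appended to the list; nothing is freed until `free_all()` runs. The list must outlive every array that refers to it, which is one argument for tying it to a long-lived object such as the executor.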

An additional note: I believe CUDA and HIP libraries such as cuBLAS, hipBLAS etc. could be using their handle objects (`cublasHandle_t`, `hipblasHandle_t`) to implement some variant of this, so that the allocation is done when necessary, but the free is only done at the end when destroying the handle.
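A generic sketch of the handle pattern described above. It does not show how cuBLAS or hipBLAS actually manage memory internally. The handle owns a single workspace that is allocated lazily, grows only when a larger size is requested, and is freed when the handle is destroyed. `WorkspaceHandle` and `workspace` are hypothetical names, and the alloc/free callbacks stand in for device calls such as `cudaMalloc`/`cudaFree`.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>

// Hypothetical handle owning one workspace buffer: allocated on first use,
// reallocated only when a larger size is requested, freed on destruction.
class WorkspaceHandle {
public:
    using alloc_fn_type = std::function<void*(std::size_t)>;
    using free_fn_type = std::function<void(void*)>;

    WorkspaceHandle(alloc_fn_type alloc_fn, free_fn_type free_fn)
        : alloc_fn_(std::move(alloc_fn)), free_fn_(std::move(free_fn))
    {}

    WorkspaceHandle(const WorkspaceHandle&) = delete;
    WorkspaceHandle& operator=(const WorkspaceHandle&) = delete;

    // Return a buffer of at least `size` bytes, growing it if needed.
    void* workspace(std::size_t size)
    {
        if (size > capacity_) {
            if (buffer_ != nullptr) {
                free_fn_(buffer_);  // the only free outside the destructor
            }
            buffer_ = alloc_fn_(size);
            capacity_ = size;
        }
        return buffer_;
    }

    std::size_t capacity() const { return capacity_; }

    ~WorkspaceHandle()
    {
        if (buffer_ != nullptr) {
            free_fn_(buffer_);
        }
    }

private:
    alloc_fn_type alloc_fn_;
    free_fn_type free_fn_;
    void* buffer_ = nullptr;
    std::size_t capacity_ = 0;
};
```

Growing the buffer still calls the free function once, so with a synchronizing `cudaFree` it can still serialize work; requesting a generous size up front avoids that.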