
Request to incorporate InterpAny-Clearer's technology in Practical-RIFE #69

Comments

I feel a little confused. I found that the gram matrix loss mainly affects performance on anime scenes, and I don't know whether most people will like it. I'm also concerned that the demo comparing [D,R] RIFE with this project may be misleading.

Great news! Thank you! This is the most important thing for me as a frame interpolation enthusiast. I understand that it won't be easy or fast, but many people will be very grateful to you for this.

Unfortunately, I am not a programmer and was not aware that the code for InterpAny-Clearer has not been published in full. So far, zzh-tech has been eager to share his achievements, including his revolutionary real-world dataset RBI, which makes it possible to train frame interpolation models that remove the worst artefacts caused by motion blur; these artefacts grow larger the more dynamically a person or object moves between frames. They cannot be eliminated by training on either the X4K1000FPS dataset or the SNU-FILM dataset, because this is impossible to achieve with a dataset recorded with a single camera. Since he has shared such a treasure as the RBI dataset, I think he will also share the code and help with the InterpAny-Clearer implementation, especially since he responded positively to my request and was open to collaboration: zzh-tech/InterpAny-Clearer#12 (comment)

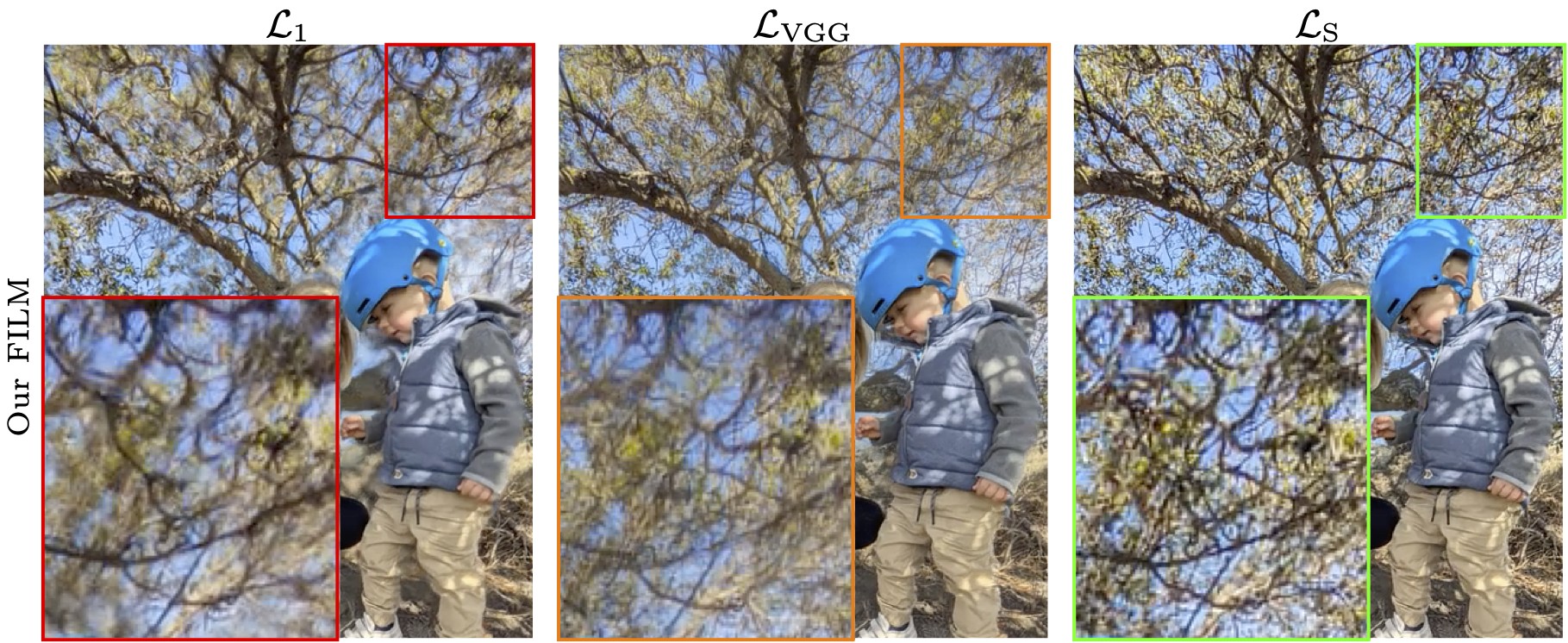

Yes, gram matrix loss affects performance on anime scenes, but it also improves live-action videos, as can be seen in Fig. 1 of the Supplementary Material. The most interesting thing, however, is the visual comparison of the three loss functions. In my opinion, Style loss clearly gives the perceptually best result.

Google Research was the first to use Style loss to train a video frame interpolation model, for their FILM-𝓛S model, link:

Both models achieved some of the best, and perhaps the very best, LPIPS results (we cannot be certain, because there is no direct comparison with the other two models in the top four, and the results are very close to each other): Vimeo-90K triplet: LPIPS ≤ 0.017 [excluding LPIPS(SqueezeNet) results]

Therefore, please continue to use this loss function in future practical RIFE models.
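For readers unfamiliar with the term: a gram matrix loss (Style loss) compares the correlations between feature channels rather than the feature values themselves. The sketch below is a minimal illustration under stated assumptions, not the code used in Practical-RIFE or FILM: it assumes the feature maps have already been extracted (for example from a pretrained VGG network) and flattened to plain lists, and the C·H·W normalisation and per-layer mean squared difference are assumed choices.

```python
from typing import List

# One feature map: C channels, each flattened to N = H*W values.
Matrix = List[List[float]]


def gram_matrix(features: Matrix) -> Matrix:
    """Gram matrix of one feature map.

    Entry (i, j) is the inner product of channels i and j over all pixel
    positions, divided by C*N (an assumed, commonly used normalisation).
    """
    c = len(features)
    n = len(features[0])
    norm = c * n
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) / norm for j in range(c)]
        for i in range(c)
    ]


def style_loss(pred_feats: List[Matrix], target_feats: List[Matrix]) -> float:
    """Sum over layers of the mean squared difference between Gram matrices.

    `pred_feats` and `target_feats` hold one feature map per layer, e.g. from
    several layers of a frozen feature extractor (an assumption here).
    """
    total = 0.0
    for pred, target in zip(pred_feats, target_feats):
        g_pred, g_target = gram_matrix(pred), gram_matrix(target)
        c = len(g_pred)
        squared = sum(
            (g_pred[i][j] - g_target[i][j]) ** 2 for i in range(c) for j in range(c)
        )
        total += squared / (c * c)
    return total
```

Because the Gram matrix sums over every pixel position, swapping pixels around leaves it unchanged (for example, `[[1, 2], [3, 4]]` and `[[2, 1], [4, 3]]` give the same matrix). The loss therefore rewards matching texture statistics rather than exact placement, which is one plausible reason it can favour sharper-looking interpolated frames.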

Please incorporate InterpAny-Clearer's technology in Practical-RIFE. I changed the title a minute ago, as this is the most important thing for me. I realise how different Practical-RIFE is from other models, which, for publication at conferences, are generally trained on only one dataset, and how this limits their practical application. Given your commitment, I dream that one day you might undertake to train a practical model for Joint Video Deblurring and Frame Interpolation.

I would like to thank you very much for continually adding new, practical RIFE models. I am particularly grateful for this latest one: first, because you are open to work by others that can improve the already awesome RIFE; second, because I was very interested in adding exactly this loss function to RIFE, which I requested from the developer of InterpAny-Clearer here: Request for [D,R] RIFE trained on Style loss, also called Gram matrix loss (the best perceptual loss function)

As you have applied gram matrix loss to RIFE v4.17 training, I would like to ask you to run x128 frame interpolation tests with RIFE v4.15 and v4.17 on the frame pairs I0_0.png/I0_1.png and I1_0.png/I1_1.png, available here: https://github.com/zzh-tech/InterpAny-Clearer/tree/main/demo, and to post the results as GIF files in the Practical-RIFE repository or here. That makes four GIF files in total.
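For clarity about what an x128 test involves: between two input frames, a model that accepts an arbitrary timestep renders 127 intermediate frames at evenly spaced timesteps t = i/128 for i = 1 … 127, giving 129 frames including the two inputs. The sketch below only builds that schedule; `interpolate` is a hypothetical placeholder for whatever per-timestep inference call a given RIFE version exposes, not a real Practical-RIFE function.

```python
from fractions import Fraction
from typing import Callable, List, TypeVar

Frame = TypeVar("Frame")


def timesteps(factor: int) -> List[Fraction]:
    """Evenly spaced intermediate timesteps i/factor for i = 1 .. factor-1."""
    if factor < 2:
        raise ValueError("factor must be at least 2")
    return [Fraction(i, factor) for i in range(1, factor)]


def interpolate_sequence(
    frame0: Frame,
    frame1: Frame,
    factor: int,
    interpolate: Callable[[Frame, Frame, float], Frame],  # hypothetical model call
) -> List[Frame]:
    """Return [frame0, intermediate frames..., frame1] for an x`factor` test."""
    middle = [interpolate(frame0, frame1, float(t)) for t in timesteps(factor)]
    return [frame0, *middle, frame1]
```

Using exact fractions for the schedule avoids accumulated rounding when stepping by 1/128; each value is converted to a float only at the call site.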

I think this test will interest any Practical-RIFE enthusiast and will answer an important question: can gram matrix loss really improve the performance of practical RIFE models? In particular, does it eliminate, or at least mitigate, the undesirable distortions that occur with VGG loss, which are especially clear in the lower example in the [D,R] RIFE-vgg (Ours) column of the table at this link: https://github.com/zzh-tech/InterpAny-Clearer?tab=readme-ov-file#time-indexing-vs-distance-indexing

This test will not only compare the loss functions used in RIFE v4.15 and RIFE v4.17, but will also show whether the InterpAny-Clearer enhancement can further improve the clarity of frame interpolation for practical RIFE models, just as it does for the base RIFE model in the table linked above.

This gives a comparison of five versions of RIFE in total, three of which are already available:

- [T] RIFE: the RIFE base model
- [D,R] RIFE: the RIFE base model with the full InterpAny-Clearer upgrade
- [D,R] RIFE-vgg: the RIFE base model with VGG loss and the full InterpAny-Clearer upgrade

and two practical RIFE models, which I ask you to compare here:

- RIFE v4.15 with standard perceptual loss
- RIFE v4.17 with gram matrix loss

This comparison will hopefully provide evidence justifying the use of gram matrix loss in all future practical RIFE models. I also hope it will demonstrate the need to use InterpAny-Clearer in the next model, RIFE v4.18.

I would also like to use these GIF files of five RIFE versions (with links to the sources of the comparisons, of course) in the introductions to my Video Frame Interpolation Rankings and Video Deblurring Rankings, where I want to encourage other researchers to use the best and, above all, proven solutions for practical applications when developing new models.

I hope the next GIF file will already be for RIFE v4.18 with gram matrix loss and the InterpAny-Clearer enhancement. Clarity of interpolated frames matters more than super-resolution, especially now that new monitors reach 500 Hz: the original frames are on screen only very briefly, and interpolated frames are visible most of the time.
