
SLOW OR FAILED (500 ERROR) NODE.JS DOWNLOADS #1993

Comments

@nodejs/build-infra

Same here: using tj/n, the download just terminates after a while.

@targos, what machine is the top output from? FWIW, the only infra team members are those listed at https://github.com/orgs/nodejs/teams/build-infra/members, and Gibson isn't current, so he should be removed.

I wonder what traffic looks like... could be a DoS. We've talked about it in the past, but we really should be serving these resources via a CDN. I believe the blocker was maintaining our metrics. I can try to find some time to help out with the infrastructure here if others don't have the time.

It could also be that 13.x is super popular? Hard to know without access to the access logs. @MylesBorins, the infrastructure team is even less staffed than the build team as a whole; if you wanted to pick that up, it would be 🎉 fabulous.

Metrics have been the reason downloads are not on a CDN. @joaocgreis and @rvagg have an active discussion on what we might do on that front. @MylesBorins, if you can get help for this specific issue that might be good (please check with @rvagg and @joaocgreis). Better still would be ongoing, sustained engagement and contribution to the overall build work.

@sam-github it's from

I don't even have non-root access; I guess you do because you are on the release team. We'll have to wait for an infra person.

I can help take a look after the TSC meeting.

In addition to downloads only intermittently succeeding, when they do succeed it seems that sometimes (?) the bundled …

For Linux builds with successful downloads of Node.js v8.x and v10.x, …

For Windows builds with successful downloads of Node.js v8.x and v10.x, I'm seeing errors like this: …

That is, in the case of Windows …

@michaelsbradleyjr, I suspect the node-gyp failures were related to downloading header files.

I was looking into this with @targos. It seems to have resolved itself at this point. From the access logs it was hard to tell whether there was more traffic, because the files for the previous day are compressed. We should be able to compare more easily tomorrow.

top on the machine looks pretty much the same as it did while there were issues.

Hm, that top output doesn't look exceptional (the load average would have been nice to see though, @targos; it should be at the top right of top). Cloudflare also monitors our servers for overload and switches between them as needed, and they're not recording any incidents. It's true that this is a weak point in our infra and that getting downloads fully CDNified is a high priority (we're working on it but are having trouble finding solid solutions that meet all our needs), but I'm not sure we can fully blame this on our main server; there might be something network-related at play that we don't have insight into.

btw @mhdawson, it's @jbergstroem and me having the discussion about this, not so much @joaocgreis, although you and he are also in the email chain.

@MylesBorins, one thing that has come up that might be relevant to you: if we can get access to Cloudflare's Logpush feature (still negotiating), we'd need a place to put the logs that they support. GCP Storage is an option for that, so getting hold of some credits may be handy in this instance.

@rvagg sorry, right, @jbergstroem; I got the wrong name.

Possibly unrelated, but I did see a complaint about a completely different site being slow around the same time.

I can ask my company if they'd be interested in sponsoring us with a hosted Elasticsearch and Kibana setup that can pull in all our logs and server metrics and make them easily accessible. This would also give us the ability to send out alerts, etc. Would we be interested in that?

Thanks @watson. It's not the metrics-gathering process that's difficult; it's gathering the logs in the first place, and doing it reliably enough that we're confident it'll keep working even if we're not checking it regularly. We don't have dedicated infra staff to do that level of monitoring.

Hi folks, this seems to be cropping up again this morning.

Current load average (`cat /proc/loadavg`):

`2.12 2.15 1.96 1/240 15965`
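For readers unfamiliar with the format: the five fields of /proc/loadavg are the 1-, 5- and 15-minute load averages, the number of currently runnable scheduling entities over the total that exist, and the PID of the most recently created process. A minimal Python sketch that parses the line above follows; the names `LoadAvg`, `parse_loadavg` and `per_cpu` are illustrative only, and the CPU count used for normalization is an assumption, since the thread never states how many CPUs the server has.

```python
from typing import NamedTuple


class LoadAvg(NamedTuple):
    """The five whitespace-separated fields of a Linux /proc/loadavg line."""
    load1: float    # 1-minute load average
    load5: float    # 5-minute load average
    load15: float   # 15-minute load average
    runnable: int   # scheduling entities currently runnable
    total: int      # scheduling entities (processes/threads) that exist
    last_pid: int   # PID of the most recently created process


def parse_loadavg(line: str) -> LoadAvg:
    """Parse one line as printed by `cat /proc/loadavg`."""
    l1, l5, l15, sched, last_pid = line.split()
    runnable, total = sched.split("/")
    return LoadAvg(float(l1), float(l5), float(l15),
                   int(runnable), int(total), int(last_pid))


def per_cpu(load: float, cpus: int) -> float:
    """Normalize a load average by CPU count.

    Rule of thumb only: sustained values near or above 1.0 hint that the
    run queue is saturated. Linux load also counts tasks blocked in
    uninterruptible (often I/O) sleep, so it is not a pure CPU measure.
    """
    if cpus < 1:
        raise ValueError("cpus must be >= 1")
    return load / cpus


if __name__ == "__main__":
    sample = parse_loadavg("2.12 2.15 1.96 1/240 15965")
    print(sample)
    # ASSUMPTION: 4 CPUs; the thread does not say how many the server has.
    print(round(per_cpu(sample.load1, 4), 2))
```

Because Linux load averages include tasks waiting on I/O, a steady value like the one above says little on its own about whether nginx was CPU-bound or stuck on the network interface.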

Load average seems fairly steady (`cat /proc/loadavg`):

Captured `ps -ef` output to /root/processes-oct-24-11am so that we can compare later on.

Hi all,

Small update: we are now carefully testing a caching strategy for the most common (artifact) file formats. If things proceed well (as they seem to be), we will start covering more parts of the site and be more generous with TTLs.

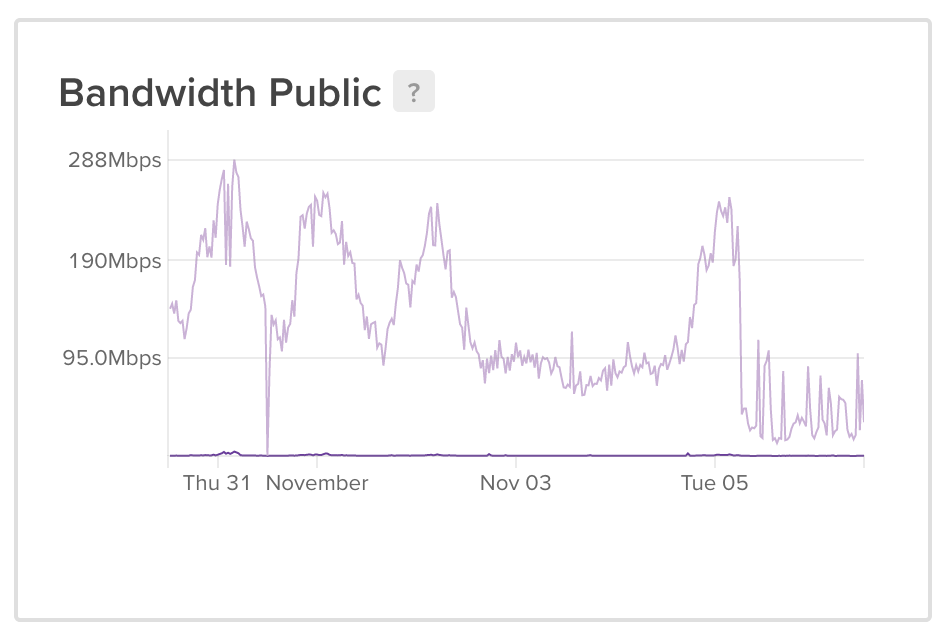

I don't believe we'll see this particular incarnation of problems with nodejs.org from now on. Future problems are likely to be of a different nature, so I'm closing this issue. We're now fully fronting our downloads with a CDN, and it appears to be working well. It has, of course, taken considerable load off our backend servers, and we're unlikely to hit the bandwidth peaks at the network interface that appear to have been the problem previously.

Primary server bandwidth:

Cloudflare caching:

Thank you to everyone for their hard work, sending virtual 🤗.

I'm apparently still encountering this type of issue. For the past two days I've consistently had a failure rate of around 20%:

Where are you, @matsaman? It could be a Cloudflare problem in your region. See https://www.cloudflarestatus.com/ and check whether one of the edges close to you is having trouble. Without further reports of problems, or something we can reproduce ourselves, our best guess is that it's a problem somewhere between Cloudflare and your computer, since CF should have it cached.

Items on that page for my region say 'Operational' at this time. Should I encounter the issue again, I will check that page after my tests and include my findings here.

I'm getting fast downloads. Please open a new issue if you believe there is still a problem, rather than commenting on this closed issue.

@appu24, a tar failure doesn't necessarily indicate a download error. Are you able to get more log output to see exactly what it's failing on? I would assume something in the chain is generating at least some stderr logging.

Very slow download for me.

I've been experiencing issues for weeks now, from different IPs and servers. Is there any known reason for this?

Yes, the origin server is being overloaded with traffic, in part due to every release of Node.js purging the Cloudflare cache for the entire domain. There are a bunch of issues open that aim to help rectify this:

nodejs.org file delivery is not working again. This might be a working mirror:

Hi there, I can't download Node. I keep getting "Failed - Network Error" on my Mac.

Edited by the Node.js Website Team

Learn more about this incident at https://nodejs.org/en/blog/announcements/node-js-march-17-incident

tl;dr: The Node.js website team is aware of ongoing issues with intermittent download instability. More details: #1993 (comment)

Original Issue Below

All binary downloads from nodejs.org are currently very slow, e.g. https://nodejs.org/dist/v13.0.1/node-v13.0.1-darwin-x64.tar.xz. In many cases they time out, which affects CI systems like Travis that rely on nvm to install Node.js.

According to @targos, the server's CPU is at 100%, consumed by nginx, but we can't figure out what's wrong.
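Since the failures described here are intermittent timeouts and 500 errors, a CI script can at least retry instead of failing on the first attempt. The following is a minimal sketch under that assumption, in Python; `retry_with_backoff` and `download` are hypothetical helpers, not part of nvm, n, or any Node.js tooling. It retries with exponential backoff and treats `OSError` (which covers urllib's `HTTPError`/`URLError` and socket timeouts) and `http.client.HTTPException` (which covers truncated responses such as `IncompleteRead`) as retryable.

```python
import http.client
import shutil
import time
import urllib.request
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (OSError, http.client.HTTPException),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn until it succeeds, doubling the wait after each failure.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error if every attempt fails.
    """
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)
    raise AssertionError("unreachable")


def download(url: str, dest: str, timeout: float = 60.0) -> None:
    """Fetch url into dest in a single attempt (hypothetical helper).

    urlopen raises HTTPError for a 500 response; opening dest with "wb"
    means a retry overwrites any partially written file.
    """
    with urllib.request.urlopen(url, timeout=timeout) as resp, \
            open(dest, "wb") as out:
        shutil.copyfileobj(resp, out)
```

Usage might look like `retry_with_backoff(lambda: download("https://nodejs.org/dist/v13.0.1/node-v13.0.1-darwin-x64.tar.xz", "node-v13.0.1-darwin-x64.tar.xz"))`. The injectable `sleep` keeps the backoff logic testable without a network. Retrying only masks intermittent failures; it cannot help if the origin stays unavailable for longer than the total backoff window.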
