January 2021

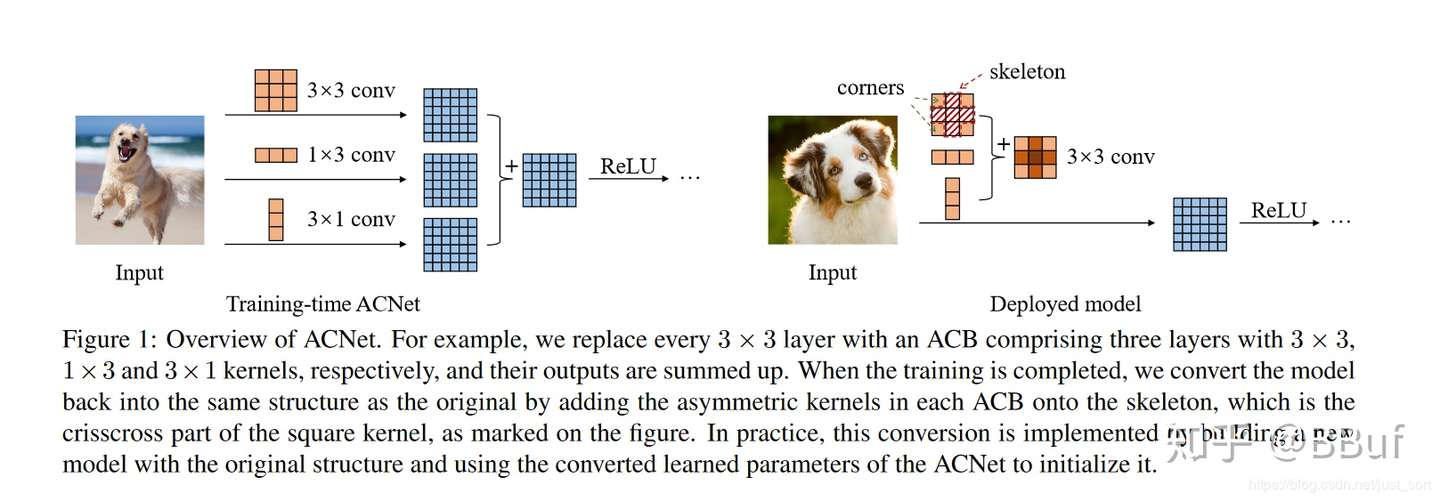

tl;dr: Train with 3x3, 3x1 and 1x3, but deploy with fused 3x3.

This paper takes the idea of BN fusion at inference time one step further by also fusing conv kernels. It adds no hyperparameters during training and no parameters during inference, because convolution is additive: the outputs of parallel branches applied to the same input can be reproduced by a single conv whose kernel is the sum of the branch kernels.
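To make the additivity claim concrete, here is a minimal pure-Python sketch on a single-channel image. The helper names (`conv2d_same`, `fuse_kernels`) and the random test data are illustrative assumptions, not code from the paper; it only checks that summing the three branch outputs matches one 3x3 conv with the summed kernel.

```python
import random

def conv2d_same(x, k):
    """Zero-padded 'same' cross-correlation of image x with an odd-sized kernel k."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    ii, jj = i + di - ph, j + dj - pw
                    if 0 <= ii < h and 0 <= jj < w:
                        s += x[ii][jj] * k[di][dj]
            out[i][j] = s
    return out

def fuse_kernels(k_sq, k_hor, k_ver):
    """Add the 1x3 kernel onto the middle row and the 3x1 kernel onto the middle column."""
    fused = [row[:] for row in k_sq]
    for j in range(3):
        fused[1][j] += k_hor[0][j]
    for i in range(3):
        fused[i][1] += k_ver[i][0]
    return fused

random.seed(0)
rand = lambda: random.uniform(-1.0, 1.0)
x = [[rand() for _ in range(6)] for _ in range(5)]      # 5x6 toy image
k_sq = [[rand() for _ in range(3)] for _ in range(3)]   # 3x3 branch
k_hor = [[rand() for _ in range(3)]]                    # 1x3 branch
k_ver = [[rand()] for _ in range(3)]                    # 3x1 branch

# Training-time view: three parallel branches, outputs summed elementwise.
outs = [conv2d_same(x, k) for k in (k_sq, k_hor, k_ver)]
branch_sum = [[sum(o[i][j] for o in outs) for j in range(6)] for i in range(5)]

# Deployment view: a single 3x3 conv with the fused kernel.
fused_out = conv2d_same(x, fuse_kernels(k_sq, k_hor, k_ver))

max_diff = max(abs(a - b) for ra, rb in zip(branch_sum, fused_out) for a, b in zip(ra, rb))
print(max_diff)  # expected to be at floating-point rounding level
```

The 1x3 and 3x1 kernels land exactly on the middle row and middle column of the 3x3 kernel, which is why the fused kernel ends up with a stronger "skeleton".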

It directly inspired RepVGG, a follow-up work by the same authors.

- Asymmetric convolution block (ACB)

- During training, replace every 3x3 conv with 3 parallel branches: 3x3, 3x1 and 1x3.

- During inference, merge the 3 branches into 1, through BN fusion and branch fusion.

- ACNet strengthens the skeleton

- Skeleton weights (the central row and column of the kernel) are more important than corner weights. Removing corners causes less harm than removing skeleton weights.

- ACNet aggravates this imbalance

- Adding the asymmetric kernels to the edges of the kernel instead does not diminish the importance of the other parts. The skeleton is still very important.

- Breaking large kernels into asymmetric convolutional kernels can save computation and increase receptive field cheaply.

- ACNet can enhance robustness to rotational distortions (train on upright images, infer on rotated ones), but the improvement in robustness is quite marginal.
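The two-step merge described in the ACB bullets (BN fusion, then branch fusion) can be sketched as follows for a single channel. This is a simplified illustration under stated assumptions: each branch is a bias-free conv followed by its own BN in inference mode (fixed running mean and variance), and the names `fold_bn`, `fuse_acb` and the dictionary layout for BN parameters are hypothetical, not the authors' code.

```python
import math
import random

EPS = 1e-5  # assumed BN epsilon

def conv2d_same(x, k, bias=0.0):
    """Zero-padded 'same' cross-correlation with an odd-sized kernel, plus a scalar bias."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[bias] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            for di in range(kh):
                for dj in range(kw):
                    ii, jj = i + di - ph, j + dj - pw
                    if 0 <= ii < h and 0 <= jj < w:
                        out[i][j] += x[ii][jj] * k[di][dj]
    return out

def batchnorm(z, bn):
    """Inference-mode BN with fixed statistics."""
    scale = bn["gamma"] / math.sqrt(bn["var"] + EPS)
    return [[(v - bn["mean"]) * scale + bn["beta"] for v in row] for row in z]

def fold_bn(k, bn):
    """BN fusion: fold the BN scale into the kernel and the BN shift into a bias."""
    scale = bn["gamma"] / math.sqrt(bn["var"] + EPS)
    return [[v * scale for v in row] for row in k], bn["beta"] - bn["mean"] * scale

def fuse_acb(branches):
    """Branch fusion: add each BN-folded kernel, centered, onto one 3x3 kernel."""
    fused, bias = [[0.0] * 3 for _ in range(3)], 0.0
    for k, bn in branches:
        fk, fb = fold_bn(k, bn)
        top, left = (3 - len(fk)) // 2, (3 - len(fk[0])) // 2
        for i, row in enumerate(fk):
            for j, v in enumerate(row):
                fused[top + i][left + j] += v
        bias += fb
    return fused, bias

random.seed(1)
rand = lambda: random.uniform(-1.0, 1.0)
rand_bn = lambda: {"gamma": rand(), "beta": rand(), "mean": rand(),
                   "var": random.uniform(0.5, 2.0)}
x = [[rand() for _ in range(6)] for _ in range(5)]
branches = [
    ([[rand() for _ in range(3)] for _ in range(3)], rand_bn()),  # 3x3
    ([[rand() for _ in range(3)]], rand_bn()),                    # 1x3
    ([[rand()] for _ in range(3)], rand_bn()),                    # 3x1
]

# Training-time structure evaluated in inference mode: conv -> BN per branch, then sum.
outs = [batchnorm(conv2d_same(x, k), bn) for k, bn in branches]
acb_out = [[sum(o[i][j] for o in outs) for j in range(6)] for i in range(5)]

# Deployed structure: one 3x3 conv with a bias.
k_fused, b_fused = fuse_acb(branches)
deployed = conv2d_same(x, k_fused, b_fused)

max_diff = max(abs(a - b) for ra, rb in zip(acb_out, deployed) for a, b in zip(ra, rb))
print(max_diff)  # expected to be at floating-point rounding level
```

In a real multi-channel layer the same folding would be applied per output channel, with `gamma`, `beta`, `mean` and `var` being per-channel vectors.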