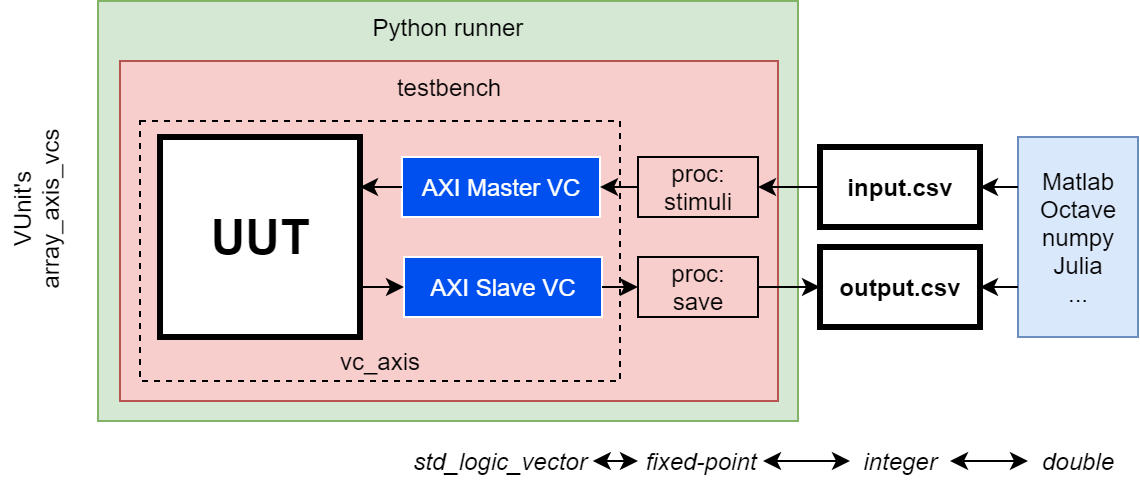

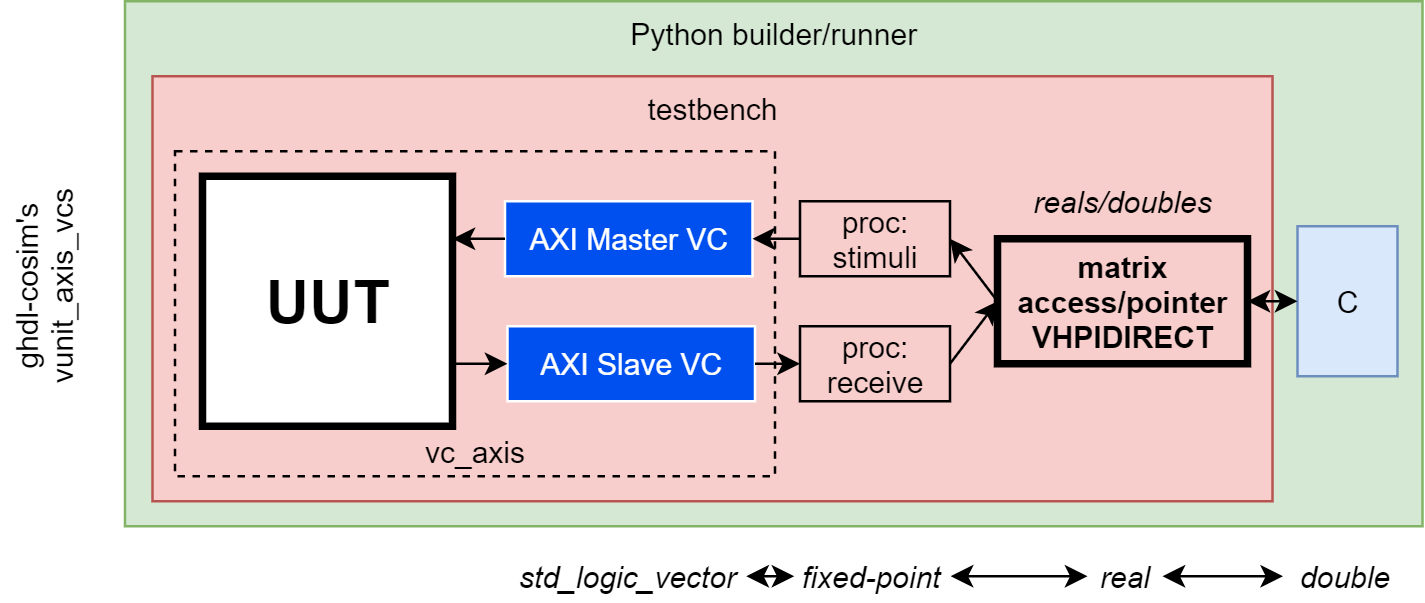

AXI Stream

The AXI Stream loopback example available in VUnit's repository (https://github.com/VUnit/vunit/tree/master/examples/vhdl/array_axis_vcs/src) is an AXI Stream slave connected to an AXI Stream master through a FIFO. It can be used as a foundation for any streaming DSP processing, by placing custom logic before, after or in between the FIFO. Say, for instance, a CORDIC component (either pipelined or iterative, since the I/O is asynchronous/FIFO-based).

I believe that example is interesting because I've used it for didactic/demo purposes in the documentation sites of other open source projects and in academia/research. For instance, in https://ghdl.github.io/ghdl-cosim/vhpidirect/examples/arrays.html#array-and-axi4-stream-verification-components it is modified to use direct cosimulation, as an alternative to CSV files, for sharing data with foreign functions/tools. Also for didactic purposes, VHDL's fixed_generic_pkg is used there. That is further related to dbhi/vboard. The same example was used in "DBHI: towards decoupled functional hardware-software co-design on SoCs", 28th ACM/SIGDA International Symposium on Field-Programmable Gate Arrays (FPGA 2020). It's the same architecture, but a Dynamic Binary Modification tool is used for replacing a function call in a binary application (without access to the sources).

Yet, as you see, all of that is related to simulation/cosimulation and to testing the accelerator itself through Verification Components. Synthesis is not covered/documented in any open source repo (yet).

When using MicroBlaze, having AXI Stream accelerators is quite nice. MicroBlaze used to have Fast Simplex Link (FSL) interfaces (https://www.xilinx.com/support/documentation/sw_manuals/mb_ref_guide.pdf), which were then replaced with Stream Link Interfaces (https://www.xilinx.com/support/documentation/sw_manuals/xilinx2018_2/ug984-vivado-microblaze-ref.pdf). The software framework is the same in both cases: specific assembly instructions for writing/reading to/from dedicated registers, which are mapped to the hardware "Links", i.e. AXI Stream ports.

On Zynq, that's slightly uglier. There is no built-in AXI Stream between the hard ARM cores and the Programmable Logic (PL). Therefore, a DMA or an AXI-Lite to Stream bridge needs to be used. Not a huge deal, but a whole source of potential bugs and configuration issues when one just wants to test some software and some accelerator together.

NEORV32 has a Wishbone component, which is labeled as an external bus of type Wishbone b4 or AXI4-Lite. I assume it is a single master port to be connected to some interconnect, in case multiple external peripherals are added. Therefore, the integration would need to be similar to the Zynq. However, since RISC-V is supposed to be open and easy to extend, and because NEORV32 is also open, I wonder if we can do better.

@stnolting, what do you think? Can we provide a configurable number of "Links" which 1) can be of type out only, in only, or "instantiate" one of each; 2) are mapped in the CPU memory; and 3) are usable through specific instructions? I guess it is a two-stage question. The first one is adding a configurable number of links. The second one is whether it's worth having specific instructions for that. I must say I know nothing specific about RISC-V, and CPU architecture is not my field, so please excuse me if I'm making some stupid assumptions.

For now, I'm not concerned about performance, but about having a solution which is as simple as technically possible. It is meant for students to understand the whole system: CPU, interface and Core. That's why AXI-Lite to Stream bridges or DMAs are not desirable as the first use case. Those should be addressed after students are familiar with the simplest Stream/FIFO interface (which is otherwise pretty common in low-level RTL).
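The slave, FIFO and master structure described above can be sketched in plain VHDL as follows. This is a minimal illustration under stated assumptions, not the source of VUnit's `array_axis_vcs` example: the entity name `axis_fifo_sketch`, its ports and its generics are hypothetical. A word moves on either side only on a clock edge where both `tvalid` and `tready` are high; the depth is `2**ADDR_WIDTH`, so the pointers wrap for free, and one extra pointer bit tells "full" apart from "empty".

```vhdl
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;

-- Hypothetical AXI4-Stream FIFO: s_axis_* (slave side) -> memory -> m_axis_* (master side).
entity axis_fifo_sketch is
  generic (
    DATA_WIDTH : positive := 32;
    ADDR_WIDTH : positive := 2  -- depth = 2**ADDR_WIDTH (always a power of two)
  );
  port (
    clk           : in  std_logic;
    rst           : in  std_logic;  -- synchronous, active high
    s_axis_tdata  : in  std_logic_vector(DATA_WIDTH-1 downto 0);
    s_axis_tvalid : in  std_logic;
    s_axis_tready : out std_logic;
    m_axis_tdata  : out std_logic_vector(DATA_WIDTH-1 downto 0);
    m_axis_tvalid : out std_logic;
    m_axis_tready : in  std_logic
  );
end entity;

architecture rtl of axis_fifo_sketch is
  type mem_t is array (0 to 2**ADDR_WIDTH-1) of std_logic_vector(DATA_WIDTH-1 downto 0);
  signal mem : mem_t;
  -- one extra MSB on each pointer distinguishes "full" from "empty"
  signal wr_ptr, rd_ptr : unsigned(ADDR_WIDTH downto 0) := (others => '0');
  signal full, empty    : std_logic;
begin
  empty <= '1' when wr_ptr = rd_ptr else '0';
  full  <= '1' when wr_ptr(ADDR_WIDTH) /= rd_ptr(ADDR_WIDTH) and
                    wr_ptr(ADDR_WIDTH-1 downto 0) = rd_ptr(ADDR_WIDTH-1 downto 0) else '0';

  s_axis_tready <= not full;
  m_axis_tvalid <= not empty;
  m_axis_tdata  <= mem(to_integer(rd_ptr(ADDR_WIDTH-1 downto 0)));  -- asynchronous read

  process (clk)
  begin
    if rising_edge(clk) then
      if rst = '1' then
        wr_ptr <= (others => '0');
        rd_ptr <= (others => '0');
      else
        if s_axis_tvalid = '1' and full = '0' then   -- slave-side handshake
          mem(to_integer(wr_ptr(ADDR_WIDTH-1 downto 0))) <= s_axis_tdata;
          wr_ptr <= wr_ptr + 1;
        end if;
        if m_axis_tready = '1' and empty = '0' then  -- master-side handshake
          rd_ptr <= rd_ptr + 1;
        end if;
      end if;
    end if;
  end process;
end architecture;
```

Custom processing (e.g. the CORDIC mentioned above) would sit on either stream side of the FIFO. The asynchronous read of `mem` keeps the sketch short; a block-RAM friendly version would need a registered read port.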


AXI-Lite

AXI-Lite is an obvious candidate for any simple addressable register/memory. In umarcor/SIEAV, there is some content I use for teaching cosimulation and testing/verification with VHDL and open source tooling (GHDL, VUnit, Octave...). The system we use as a reference is a typical closed-loop control system with a controller, a plant, and drivers/actuators and capture/holds in between. The design is complemented with an AXI Slave component, in order to modify the setpoint and/or the constants/parameters of the controller at runtime. Similar to the AXI Stream example above, we use a Verification Component and cosimulation for testing the software-hardware interaction:

The motivation is to abstract away the specific implementation of the CPU and/or any other peripheral in the SoC, and focus only on the "logic" of our application, both the software and the Core. The actual implementation (synthesis) of the whole system is out of the scope of the course (for now), so I didn't go any further. For future courses (and for enhancing the learning resources about using VHDL with open source tooling), I would like to add a working example with a minimal synthesisable setup:

However, I was missing a free and open source CPU written in VHDL, with a trivial build procedure (for someone used to hardware design in VHDL) and with a responsive maintainer 😄. As you might guess, NEORV32 is a nice candidate for showcasing how to go ahead with the system integration, synthesis and implementation: I would like to prototype this on a Fomu, using GHDL, Yosys and nextpnr. As commented in some other issues, @tmeissner already contributed a setup for the UPduino v3.0, and I added the CI plumbing for it. The next step is to reconcile the structure with https://github.com/im-tomu/fomu-workshop/tree/master/hdl and/or https://github.com/dbhi/vboard/tree/main/vga.

That is, having some common

Moreover, for some reason, I was not watching this repo, and I did not see the PRs that @LarsAsplund opened these last days. Since I'm already using VUnit in umarcor/SIEAV, I think I might add NEORV32 as a submodule and execute the VUnit tests in CI. I believe there is no explicit public example of that yet: two repositories (maintained by different people), one including the other as a submodule, both of them using VUnit. @stnolting, where do you suggest I start reading? What is the closest to "How to add an external AXI4-Lite peripheral to the Wishbone bus in NEORV32"?
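As an illustration of the AXI Slave component used to tune the controller at runtime, here is a minimal sketch of an AXI4-Lite register bank. The entity name `axil_regs_sketch` and the register map (0x0 setpoint, 0x4 `kp`, 0x8 `ki`) are hypothetical, assuming a PI-style controller; `WSTRB` and the `PROT` signals are omitted, and a write is only accepted once address and data are both valid, which the AXI protocol permits a slave to do.

```vhdl
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;

-- Hypothetical AXI4-Lite register bank: 0x0 setpoint, 0x4 kp, 0x8 ki (0xC spare).
-- WSTRB and the PROT signals are omitted to keep the sketch short.
entity axil_regs_sketch is
  port (
    clk, rst  : in  std_logic;  -- rst: synchronous, active high
    s_awaddr  : in  std_logic_vector(3 downto 0);
    s_awvalid : in  std_logic;
    s_awready : out std_logic;
    s_wdata   : in  std_logic_vector(31 downto 0);
    s_wvalid  : in  std_logic;
    s_wready  : out std_logic;
    s_bresp   : out std_logic_vector(1 downto 0);
    s_bvalid  : out std_logic;
    s_bready  : in  std_logic;
    s_araddr  : in  std_logic_vector(3 downto 0);
    s_arvalid : in  std_logic;
    s_arready : out std_logic;
    s_rdata   : out std_logic_vector(31 downto 0);
    s_rresp   : out std_logic_vector(1 downto 0);
    s_rvalid  : out std_logic;
    s_rready  : in  std_logic;
    setpoint, kp, ki : out std_logic_vector(31 downto 0)  -- towards the controller
  );
end entity;

architecture rtl of axil_regs_sketch is
  type regs_t is array (0 to 3) of std_logic_vector(31 downto 0);
  signal regs           : regs_t := (others => (others => '0'));
  signal bvalid, rvalid : std_logic := '0';
  signal wr_en, rd_en   : std_logic;
begin
  -- Write: wait until address and data are both valid and no response is pending
  wr_en     <= s_awvalid and s_wvalid and not bvalid;
  -- Read: accept a new address only when no read data is pending
  rd_en     <= s_arvalid and not rvalid;
  s_awready <= wr_en;
  s_wready  <= wr_en;
  s_arready <= rd_en;
  s_bvalid  <= bvalid;
  s_rvalid  <= rvalid;
  s_bresp   <= "00";  -- OKAY
  s_rresp   <= "00";  -- OKAY
  setpoint  <= regs(0);
  kp        <= regs(1);
  ki        <= regs(2);

  process (clk)
  begin
    if rising_edge(clk) then
      if rst = '1' then
        bvalid <= '0';
        rvalid <= '0';
      else
        if wr_en = '1' then  -- byte address -> word index
          regs(to_integer(unsigned(s_awaddr(3 downto 2)))) <= s_wdata;
          bvalid <= '1';
        elsif s_bready = '1' then
          bvalid <= '0';
        end if;
        if rd_en = '1' then
          s_rdata <= regs(to_integer(unsigned(s_araddr(3 downto 2))));
          rvalid  <= '1';
        elsif s_rready = '1' then
          rvalid <= '0';
        end if;
      end if;
    end if;
  end process;
end architecture;
```

The software side would then update the setpoint or a gain with a single 32-bit store to the corresponding offset, which is exactly the kind of interaction a Verification Component can emulate in cosimulation.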

@umarcor

I am currently working on a "Stream Link Interface" that is compatible with the AXI4-Stream base protocol. The interface will support up to 8 independent RX and TX links; each link provides a configurable internal FIFO. This is what the top entity might look like:

```vhdl
-- Stream link interface --
SLINK_NUM_TX  : natural := 0; -- number of TX links (0..8)
SLINK_NUM_RX  : natural := 0; -- number of RX links (0..8)
SLINK_TX_FIFO : natural := 1; -- TX fifo depth, has to be a power of two
SLINK_RX_FIFO : natural := 1; -- RX fifo depth, has to be a power of two

-- TX stream interfaces (available if SLINK_NUM_TX > 0) --
slink_tx_dat_o : out sdata_8x32_t; -- output data
slink_tx_val_o : out std_ulogic_vector(7 downto 0); -- valid output
slink_tx_rdy_i : in  std_ulogic_vector(7 downto 0) := (others => '0'); -- ready to send

-- RX stream interfaces (available if SLINK_NUM_RX > 0) --
slink_rx_dat_i : in  sdata_8x32_t := (others => (others => '0')); -- input data
slink_rx_val_i : in  std_ulogic_vector(7 downto 0) := (others => '0'); -- valid input
slink_rx_rdy_o : out std_ulogic_vector(7 downto 0); -- ready to receive
```

The top signals always implement all 8 links, even if fewer links are configured by the generics (the remaining links are terminated internally, so no extra logic is generated). Of course one could constrain the simple

I am not sure about additional "tag" signals like

I know a stream link is basically a simple FIFO interface that should not be too hard to verify, even with a simple testbench. However, I would like to do some "stress tests" someday (like randomized traffic). I am looking through VUnit's streaming verification components (https://vunit.github.io/verification_components/vci.html#stream-master-vci), but I couldn't find any example setups so far. @LarsAsplund @umarcor do you have any hints? 😉
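Regarding randomized traffic, a minimal sketch in plain VHDL (no VUnit, only `ieee.math_real.uniform`) could look as follows. The one-entry valid/ready stage modelled inline is a hypothetical stand-in for a single SLINK link, and `tb_slink_random` is an illustrative name: a source raises `valid` at random, a sink toggles `ready` at random, and the sink checks that every word arrives exactly once and in order. VUnit's stream master/slave verification components would replace the hand-written source and sink; their API is not shown here.

```vhdl
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;
use ieee.math_real.all;  -- uniform()

-- Randomized valid/ready traffic through a one-entry stream stage that stands in
-- for a single SLINK link (hypothetical). Source and sink use independent seeds.
entity tb_slink_random is
  generic (N_WORDS : positive := 500);
end entity;

architecture sim of tb_slink_random is
  signal clk      : std_logic := '0';
  signal rst      : std_logic := '1';
  signal done     : boolean := false;
  signal s_data, m_data : std_logic_vector(31 downto 0) := (others => '0');
  signal s_valid, s_ready, m_valid, m_ready : std_logic := '0';
  signal rx_count : natural := 0;
begin
  clk <= not clk after 5 ns when not done else '0';
  rst <= '0' after 20 ns;

  -- Stage under test: takes a word when empty, or when its content leaves this cycle
  s_ready <= (not m_valid) or m_ready;
  stage : process (clk)
  begin
    if rising_edge(clk) then
      if rst = '1' then
        m_valid <= '0';
      elsif s_ready = '1' then
        m_valid <= s_valid;
        m_data  <= s_data;
      end if;
    end if;
  end process;

  source : process (clk)
    variable s1 : positive := 11;
    variable s2 : positive := 22;
    variable r  : real;
    variable n  : natural := 0;  -- index of the next word to send
  begin
    if rising_edge(clk) and rst = '0' then
      if s_valid = '1' and s_ready = '1' then  -- word accepted on this edge
        n := n + 1;
      end if;
      -- AXI4-Stream rule: valid and data stay stable until the word is accepted
      if s_valid = '0' or s_ready = '1' then
        uniform(s1, s2, r);
        if r < 0.5 and n < N_WORDS then
          s_valid <= '1';
          s_data  <= std_logic_vector(to_unsigned(n, 32));
        else
          s_valid <= '0';
        end if;
      end if;
    end if;
  end process;

  sink : process (clk)
    variable s1 : positive := 33;
    variable s2 : positive := 44;
    variable r  : real;
  begin
    if rising_edge(clk) and rst = '0' then
      if m_valid = '1' and m_ready = '1' then
        assert to_integer(unsigned(m_data)) = rx_count
          report "word lost or out of order" severity failure;
        rx_count <= rx_count + 1;
      end if;
      uniform(s1, s2, r);  -- ready toggles at random, independently of valid
      if r < 0.5 then
        m_ready <= '1';
      else
        m_ready <= '0';
      end if;
    end if;
  end process;

  checker : process
  begin
    wait until rx_count = N_WORDS for 1 ms;
    assert rx_count = N_WORDS report "timeout" severity failure;
    report "all words received in order";
    done <= true;
    wait;
  end process;
end architecture;
```

Changing the seeds or the 0.5 thresholds varies the traffic pattern (e.g. a slow sink stresses back-pressure). Replacing the inline stage with an actual link under test leaves the source, sink and checker unchanged.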

Thank you

-------------- Original message --------------

From: "stnolting ***@***.***>;

Sent: Saturday, December 7, 2024, 1:59 PM

To: "stnolting/neorv32" ***@***.***>;

Cc: "汪龙河 ***@***.***>;"Comment ***@***.***>;

Subject: Re: [stnolting/neorv32] How to add AXI-Lite and AXI Stream peripherals (Discussion #52)

-----------------------------------

How can AXI4-Full be converted to AXI4-Stream?

If you are using AMD, I can highly recommend the AXI Streaming FIFO IP module: https://www.xilinx.com/products/intellectual-property/axi_fifo.html#overview

For an open source version, this looks promising, but I haven't tested it myself.

Btw, English is the default language here, so please use DeepL or any other translator. 😉

After getting familiar with the structure of the project, it's time to look into a practical use case. 🚀

My background is developing ad-hoc accelerators in VHDL for non-trivial DSP, e.g. machine learning, image processing or algebraic kernels. During testing and verification, those need to be complemented with a CPU, in order to move data and results between a workstation/laptop and the accelerator. I have used MicroBlaze and Zynq (ARM A9). The interfaces are either AXI-Lite or AXI Stream, even though some cores use Wishbone internally. However, those CPUs are overkill for orchestration purposes only, and the toolchains/frameworks provided by the vendor are not the most comfortable ones out there.

I'm willing to learn how to use the NEORV32 SoC. Although not all my designs are open source, I believe a few examples could be useful enough for didactic purposes.
