
Error while processing the prediction output #57


@bartbutenaers I will try to answer your questions:


Hi @pyu10055,

Ah ok, I wasn't aware of that, since the prediction doesn't throw an exception when I pass non-normalized images to it. But I assume that in that case it will be slower and less accurate? I will implement it. In this codelab code snippet, it is used (for some reason) to normalize the prediction result. Is that also required?

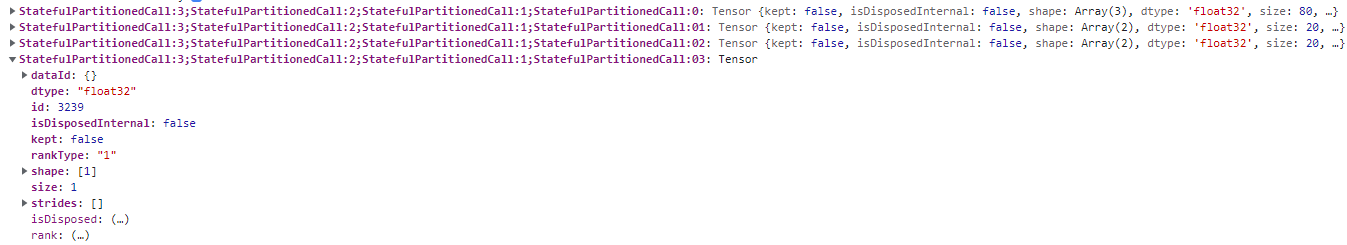

Yes, you are correct. In my prediction output I get 4 tensors:

I'm afraid I am going to ask a noob question now :-(

I assume these 4 tensors represent this:

What I don't understand, for example, is how to get the bounding box dimensions array [top, left, bottom, right] from this output...
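A minimal sketch in plain JavaScript (no tfjs), assuming a typical TFLite SSD detection model: such models usually emit four outputs that are not four classes, but boxes of shape [1, N, 4] as normalized [ymin, xmin, ymax, xmax], class indices [1, N], scores [1, N] and the number of valid detections [1]. The output order differs between models, so check your own. The function `decodeDetections`, the `labels` array, the 0.5 threshold and the sample numbers are all hypothetical; the typed arrays stand in for what `tensor.dataSync()` returns. Some COCO label files are also offset by one, so the index mapping may need adjusting.

```javascript
// Sketch: convert flattened SSD-style outputs into pixel boxes.
// ASSUMPTION: boxes are normalized [ymin, xmin, ymax, xmax] in [0, 1].
function decodeDetections(boxes, classes, scores, numDetections,
                          imageWidth, imageHeight, labels, minScore = 0.5) {
  const results = [];
  for (let i = 0; i < numDetections; i++) {
    if (scores[i] < minScore) continue; // skip low-confidence detections
    // Each detection occupies 4 consecutive values in the flat box array.
    const [ymin, xmin, ymax, xmax] = boxes.slice(i * 4, i * 4 + 4);
    results.push({
      label: labels[classes[i]] ?? `class ${classes[i]}`,
      score: scores[i],
      // [top, left, bottom, right], scaled from [0, 1] to pixels
      bbox: [ymin * imageHeight, xmin * imageWidth,
             ymax * imageHeight, xmax * imageWidth].map(Math.round),
    });
  }
  return results;
}

// Hypothetical data: two detections in a 640x480 image.
const boxes = new Float32Array([0.1, 0.2, 0.6, 0.5, 0.3, 0.3, 0.4, 0.4]);
const classes = new Float32Array([0, 2]);
const scores = new Float32Array([0.9, 0.3]);
const labels = ['person', 'bicycle', 'car'];
const detections = decodeDetections(boxes, classes, scores, 2, 640, 480, labels);
console.log(detections);
```

Only the first detection passes the threshold here; its box comes out as [48, 128, 288, 320] in pixels.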

Using the following code snippet, I can extract some useful data from the output:

At first sight this seems to work fine, but it must be my ugliest code snippet ever.


Now that I can do some basic detection, I wanted to prepare my input tensor based on your feedback above:

However, then the

My variables have these data types:

Since the model expects uint8, I assume I am not allowed to normalize my image, because otherwise I could only pass 0 or 1? Thanks!! My brain is starting to melt at the moment...
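The intuition about uint8 can be checked with plain typed arrays: a uint8 buffer cannot hold fractions, so pixel values scaled into [0, 1] are truncated when stored as uint8. The sketch below uses made-up pixel values. For a uint8 (quantized) model the usual approach is to keep the raw 0 to 255 values, since such models typically apply their own scaling through their quantization parameters; that last point is an assumption worth verifying against your model's input details.

```javascript
// Raw RGB pixel values as they come out of a decoded image (0..255).
const raw = new Uint8Array([0, 64, 128, 200, 255]);

// Normalizing to [0, 1] produces fractions, which need a float array.
const normalized = Float32Array.from(raw, (v) => v / 255);

// Forcing those fractions back into uint8 truncates them toward zero,
// so every value except exactly 1.0 becomes 0.
const truncated = Uint8Array.from(normalized);

console.log(Array.from(normalized).map((v) => v.toFixed(3)));
console.log(Array.from(truncated)); // [ 0, 0, 0, 0, 1 ]
```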


BTW, I realize that this GitHub issue might not be the best place to ask for help. But I had understood from @mattsoulanille

Dear,

I am trying to integrate the code from the Coral codelab into the Node-RED application, to allow our community to quickly detect objects in images (e.g. persons in IP cam images for video surveillance). Our current solution can already draw rectangles around detected objects (via tfjs), but I would like to add Coral support.

First I load a pre-trained TFLite model for object detection (which I download from this site):

Once the model is loaded, the input of my code is an image (as a NodeJs buffer):

However, the `tf.mul` throws the following exception:

The `prediction` object looks like this:

Noob question: does this perhaps mean that it has recognized 4 different classes of objects in my image, and that I need to loop over these 4 tensors (simply via `Object.keys(...)`)? Which I then must map to a label, and somehow extract the coordinates of the bounding box?

It would also be nice if somebody could explain (in simple words ;-) ) why the `expandDims(img, 0)` is required, and what the `tf.div(tf.mul(prediction, tf.scalar(100)), tf.scalar(255))` is doing. Thanks!!!
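On the two expressions: `expandDims(img, 0)` inserts a new axis of length 1 at position 0, turning an image of shape [height, width, 3] into a batch containing one image, [1, height, width, 3], because models generally expect a batch dimension. `tf.div(tf.mul(prediction, tf.scalar(100)), tf.scalar(255))` computes prediction × 100 / 255 element-wise, which maps the uint8 range 0..255 onto 0..100; a reasonable reading is that the codelab turns a quantized uint8 score into a percentage. The sketch below reproduces both computations on plain arrays instead of tensors; the helper names and the 300x300 shape are hypothetical.

```javascript
// Shape bookkeeping: expandDims(x, 0) inserts a size-1 axis at the front.
function expandDimsShape(shape, axis = 0) {
  const out = shape.slice();
  out.splice(axis, 0, 1);
  return out;
}

// Element-wise equivalent of tf.div(tf.mul(x, tf.scalar(100)), tf.scalar(255)).
function uint8ToPercent(values) {
  return Array.from(values, (v) => (v * 100) / 255);
}

console.log(expandDimsShape([300, 300, 3])); // [ 1, 300, 300, 3 ]
console.log(uint8ToPercent(new Uint8Array([0, 51, 255]))); // [ 0, 20, 100 ]
```

A raw score of 51 out of 255 therefore corresponds to 20%.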

Bart Butenaers
