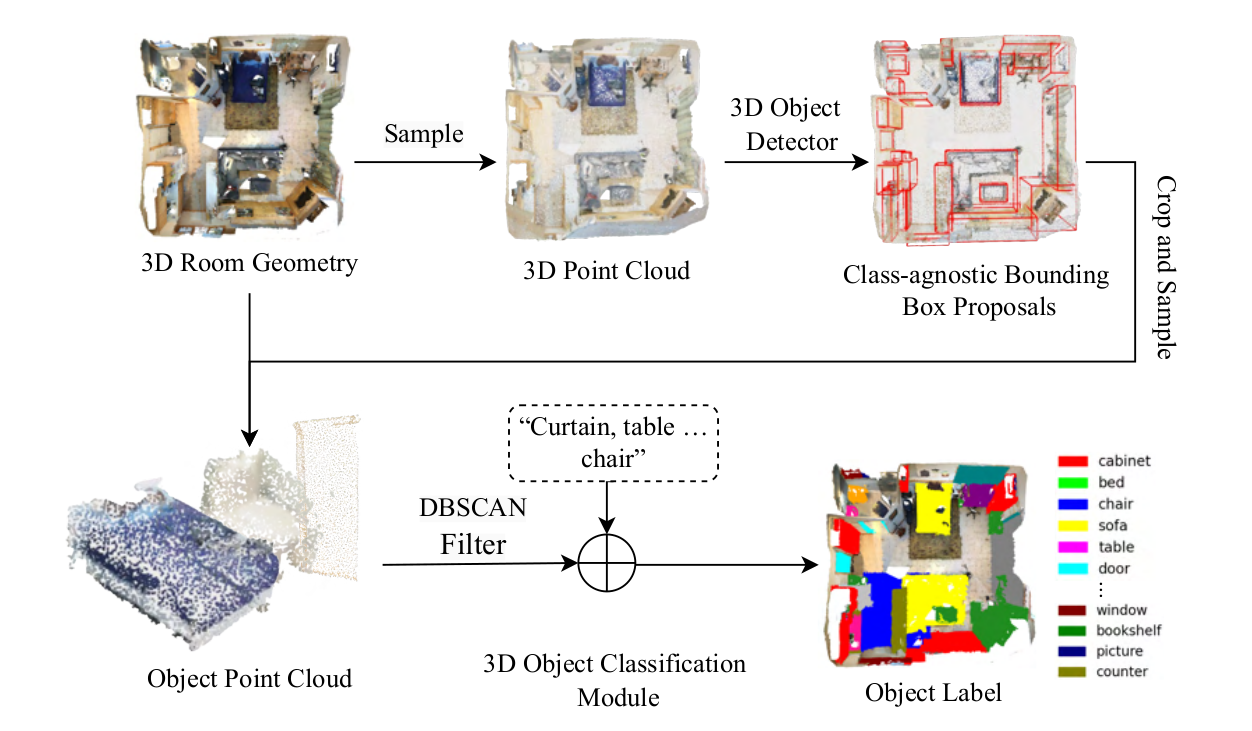

This is the implementation of the Object Detection and Classification module from the paper "Point2Graph: An End-to-end Point Cloud-based 3D Open-Vocabulary Scene Graph for Robot Navigation".

Authors: Yifan Xu, Ziming Luo, Qianwei Wang, Vineet Kamat, Carol Menassa

[2025/02] Our paper has been accepted to ICRA 2025 🎉🎉🎉

This module consists of two stages: (1) detection and localization using class-agnostic bounding boxes and DBSCAN filtering for object refinement, and (2) classification via cross-modal retrieval, connecting 3D point cloud data with textual descriptions, without requiring annotations or RGB-D alignment.
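The DBSCAN refinement in stage (1) can be illustrated with a minimal sketch. It assumes that refinement means keeping the largest dense cluster among the points inside one detected box and discarding the rest. That rule, the function names `dbscan` and `keep_largest_cluster`, and the default `eps` and `min_samples` values are all assumptions made for illustration, not the repository's code. The clustering is written in plain Python to stay self-contained; in practice a library implementation such as scikit-learn's `DBSCAN` would be the usual choice.

```python
import math


def dbscan(points, eps, min_samples):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    n = len(points)
    labels = [None] * n  # None means "not visited yet"

    def neighbors(i):
        # Brute-force radius query; includes the point itself.
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisional noise; may become a border point later
            continue
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point is a border point
            if labels[j] is not None:
                continue  # already assigned; border points are not expanded
            labels[j] = cluster
            reachable = neighbors(j)
            if len(reachable) >= min_samples:
                queue.extend(reachable)  # j is a core point, so keep growing
        cluster += 1
    return labels


def keep_largest_cluster(points, eps=0.1, min_samples=5):
    """Hypothetical refinement rule: keep only the largest DBSCAN cluster."""
    labels = dbscan(points, eps, min_samples)
    clustered = [label for label in labels if label != -1]
    if not clustered:
        return list(points)  # nothing dense enough; leave the box contents untouched
    largest = max(set(clustered), key=clustered.count)
    return [p for p, label in zip(points, labels) if label == largest]
```

Calling `keep_largest_cluster(box_points)` on the points cropped by one bounding box returns the subset treated as the object; stray background points that fall inside the box but far from the object are dropped as noise or as smaller clusters.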

Step 1. Create a conda environment and activate it.

conda env create -f point2graph.yaml

conda activate point2graph

Step 2. Install Minkowski Engine.

git clone https://github.com/NVIDIA/MinkowskiEngine.git

cd MinkowskiEngine

python setup.py install --blas_include_dirs=${CONDA_PREFIX}/include --blas=openblas

Step 3. Install mmcv.

pip install openmim

mim install mmcv-full==1.6.1

Step 4. Install third-party support.

cd third_party/pointnet2/ && python setup.py install --user

cd ../..

cd utils && python cython_compile.py build_ext --inplace

cd ..

ScanNet Data

- Download the ScanNet v2 data. Move or link the `scans` folder so that `scans` contains scene folders with names such as `scene0001_01`.
- Open the `scannet` folder. Extract point clouds and annotations (semantic segmentation, instance segmentation, etc.) by running `python batch_load_scannet_data.py`, which creates a folder named `scannet_train_detection_data` there.
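Before running the extraction script, it can help to confirm that the `scans` folder has the expected layout. The sketch below is an optional sanity check, not part of the repository; the helper name `list_scene_dirs` is hypothetical, and the pattern assumes the usual ScanNet naming of `scene` followed by four digits, an underscore, and two digits.

```python
import re
from pathlib import Path

# Assumed ScanNet scene folder pattern, e.g. scene0001_01.
SCENE_DIR = re.compile(r"^scene\d{4}_\d{2}$")


def list_scene_dirs(scans_root):
    """Return the sorted names of scene folders found directly under scans_root."""
    root = Path(scans_root)
    if not root.is_dir():
        raise FileNotFoundError(f"{root} is not a directory")
    return sorted(
        p.name for p in root.iterdir() if p.is_dir() and SCENE_DIR.match(p.name)
    )
```

An empty list from `list_scene_dirs("scans")` suggests the folder was moved or linked one level too high or too low.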

You should:

- Download the pre-trained 3D object detection model V-DETR and put it in the `./models/` folder.
- Download the pre-trained 3D object classification model Uni3D and the CLIP model, and put them in the `./Uni3D/downloads/` folder.
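A quick way to confirm the downloads landed in the right place is to check that both folders exist and are not empty. This is a minimal sketch with a hypothetical helper name, `missing_model_dirs`; it deliberately does not check specific checkpoint file names, since those are not stated here.

```python
from pathlib import Path


def missing_model_dirs(repo_root=".", required=("models", "Uni3D/downloads")):
    """Return the required folders that are missing or contain no files."""
    root = Path(repo_root)
    missing = []
    for rel in required:
        folder = root / rel
        # A folder counts as present only if it exists and has at least one entry.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(rel)
    return missing
```

Running `missing_model_dirs()` from the repository root should return an empty list once both downloads are in place.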

The test script is `run.sh`. Once the datasets and models are prepared, you can test the models with:

bash run.sh

The script performs two functions:

- Extract a set of object point clouds of unknown class and store them in `./results/objects/`.
- Retrieve and visualize the 3D object point cloud most relevant to the user's query.
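The retrieval step can be pictured as a nearest-neighbor search in a shared embedding space: the text query and each object point cloud are embedded as vectors, and the objects are ranked by cosine similarity to the query. The sketch below shows only that ranking idea on toy vectors. The function names and the dictionary of embeddings are hypothetical; in the actual pipeline the vectors would come from the Uni3D and CLIP encoders, whose APIs are not reproduced here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_embedding, object_embeddings, top_k=1):
    """Return the names of the top_k objects most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, emb), name)
        for name, emb in object_embeddings.items()
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [name for _, name in scored[:top_k]]


# Toy embeddings standing in for encoded object point clouds.
objects = {
    "obj_0": [1.0, 0.0, 0.0],
    "obj_1": [0.0, 1.0, 0.0],
    "obj_2": [0.7, 0.7, 0.0],
}
best = retrieve([0.9, 0.1, 0.0], objects, top_k=2)
```

Because the ranking depends only on vector direction, no class labels are needed at query time, which is what allows open-vocabulary queries.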

Point2Graph is built on V-DETR and Uni3D.

If you find this code useful in your research, please consider citing:

@misc{xu2024point2graphendtoendpointcloudbased,

title={Point2Graph: An End-to-end Point Cloud-based 3D Open-Vocabulary Scene Graph for Robot Navigation},

author={Yifan Xu and Ziming Luo and Qianwei Wang and Vineet Kamat and Carol Menassa},

year={2024},

eprint={2409.10350},

archivePrefix={arXiv},

primaryClass={cs.RO},

url={https://arxiv.org/abs/2409.10350},

}