Controllable image synthesis with user scribbles has gained huge public interest with the recent advent of text-conditioned latent diffusion models. The user scribbles control the color composition while the text prompt provides control over the overall image semantics. However, we find that prior works suffer from an intrinsic domain shift problem wherein the generated outputs often lack details and resemble simplistic representations of the target domain. In this paper, we propose a novel guided image synthesis framework, which addresses this problem by modeling the output image as the solution of a constrained optimization problem. We show that while computing an exact solution to the optimization is infeasible, an approximation of the same can be achieved while just requiring a single pass of the reverse diffusion process. Additionally, we show that by simply defining a cross-attention based correspondence between the input text tokens and the user stroke-painting, the user is also able to control the semantics of different painted regions without requiring any conditional training or finetuning. Human user study results show that the proposed approach outperforms the previous state-of-the-art by over 85.32% on the overall user satisfaction scores.

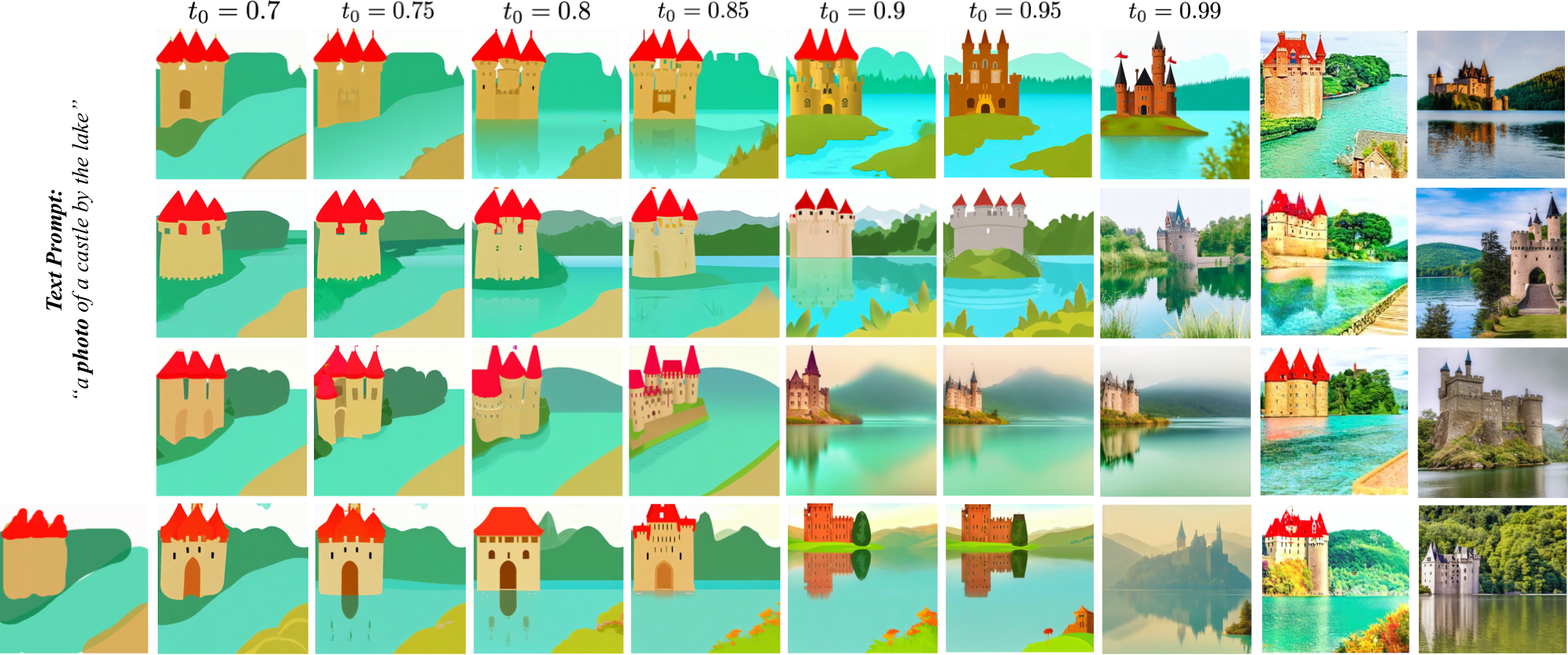

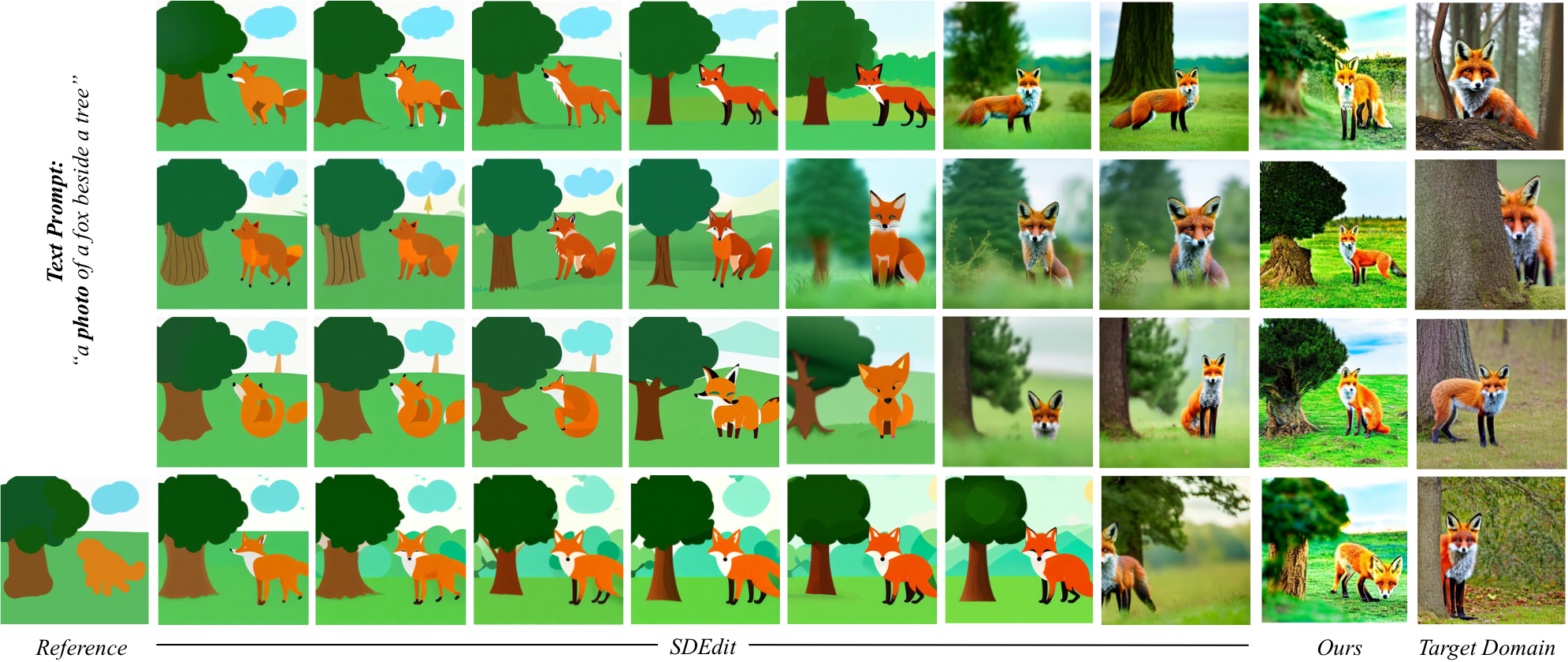

We propose a novel stroke based guided image synthesis framework which (Left) resolves the intrinsic domain shift problem in prior works (b), wherein the final images lack details and often resemble simplistic representations of the target domain (e) (generated using only text-conditioning). Iteratively reperforming the guided synthesis with the generated outputs (c) seems to improve realism but it is expensive and the generated outputs tend to lose faithfulness with the reference (a) with each iteration. (Right) Additionally, the user is also able to specify the semantics of different painted regions without requiring any additional training or finetuning.

Official implementation of our CVPR 2023 paper with streamlit demo. By modelling the guided image synthesis output as the solution of a constrained optimization problem, we improve output realism w.r.t the target domain (e.g. realistic photos) when performing guided image synthesis from coarse user scribbles.

- (20/06/23) Our project code and demo are online. Try performing realistic stroke-to-image synthesis right from your browser!

gradop-demo-v5.mp4

- Linux or macOS

- NVIDIA GPU + CUDA CuDNN (CPU may be possible with some modifications, but is not inherently supported)

- Python 3

- Tested on Ubuntu 20.04, Nvidia RTX 3090 and CUDA 11.5 (though will likely run on other setups without modification)

- Dependencies:

Our code builds on the requirement of the official Stable Diffusion repository. To set up the environment, please run:

conda env create -f environment/environment.yaml

conda activate gradop-guided-synthesis

Our code uses the Hugging Face diffusers library for downloading the Stable Diffusion v1.4 text-to-image model.

The GradOP+ model is provided in a simple diffusers pipeline for easy use:

- First load the pipeline with Stable Diffusion Weights

from pipeline_gradop_stroke2img import GradOPStroke2ImgPipeline

pipeline = GradOPStroke2ImgPipeline.from_pretrained("CompVis/stable-diffusion-v1-4",torch_dtype=torch.float32)- Simply load user-scribbles image and perform inference using GradOP+

# define the guidance inputs: 1) text prompt and 2) guidance image containing coarse scribbles

seed = 0

prompt = "a photo of a fox beside a tree"

stroke_img = Image.open('./input-images/fox.png').convert('RGB').resize((512,512))

# perform img2img guided synthesis using gradop+

generator = torch.Generator(device=device).manual_seed(seed)

out = pipeline.gradop_plus_stroke2img(prompt, stroke_img, strength=0.8, num_iterative_steps=3, grad_steps_per_iter=12, generator=generator)Notes:

- Increase the number of

grad_steps_per_iter(8-16) for improving faithfulness with the input reference. - Increasing the

num_iterative_stepsbetween 3-5 can also help with the same. - We can also compare the performance with standard (SDEdit-based) diffusers img2img predictions for same seed,

generator = torch.Generator(device=device).manual_seed(seed)

out = pipeline.sdedit_img2img(prompt=prompt, image=stroke_img, generator=generator)- Similarly, visualization of the target data subspace (images conditioned only on the text prompt) can be done as follows,

prompt = "a photo of a fox beside a tree"

text_conditioned_outputs = pipeline.text2img_prediction(prompt, num_images_per_prompt=4).imagesTo generate an image, you can also simply run run.py script. For example,

python run.py --img_path ./input-images/fox.png --prompt "a photo of a fox beside a tree" --seed 0 --num_iterative_steps=3 --grad_steps_per_iter=12Notes:

- Increase the number of

--grad_steps_per_iter(8-16) for improving faithfulness with the input reference. - Increasing the

--num_iterative_stepsbetween 2-5 can also help with the same. - For generating baseline images with standard (SDEdit-based) diffusers img2img prediction use

--method=sdeditoption as,

python run.py --method sdedit --img_path ./input-images/fox.png --prompt "a photo of a fox beside a tree" --strength=0.8 --seed 0 We also provide a demo Jupyter notebook for detailed analysis and comparison with prior SDEdit based guided synthesis. Please see notebooks/demo-gradop.ipynb

for step-by-step analysis including:

- Comparison with SDEdit under changing hyperparameter

- Additional results across diverse data modalities (e.g. realistic photos, anime scenes etc.)

- Visualization of GradOP+ outputs under changing number of gradient descent steps.

As compared to prior works, our method provides a more practical approach for improving output realism (with respect to the target domain) while still maintaining the faithfulness with the reference painting.

A key component of the proposed GradOP/GradOP+ solution, is to model the guided image synthesis problem as a constrained optimization problem and solve the same approximately using simple gradient descent. Here, we visualize the variation in output performance as the number of gradient descent steps are increased.

As the number of gradient steps for GradOP+ optimization increase (left to right) the output converges to more and more faithful (yet realistic) representation of the input reference painting / scribbles.

Our approach allows the user to easily generate realistic image outputs across a range of data modalities.

This code is builds on the code from the img2img stable diffusion pipeline from the diffusers library.

If you use this code for your research, please consider citing:

@inproceedings{singh2023high,

title={High-Fidelity Guided Image Synthesis With Latent Diffusion Models},

author={Singh, Jaskirat and Gould, Stephen and Zheng, Liang},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={5997--6006},

year={2023}

}