Project Page | Paper | Video

Gaochang Wu¹, Yingqian Wang², Yebin Liu³, Lu Fang⁴, Tianyou Chai¹

¹State Key Laboratory of Synthetical Automation for Process Industries, Northeastern University

²College of Electronic Science and Technology, National University of Defense Technology (NUDT)

³Department of Automation, Tsinghua University

⁴Tsinghua-Berkeley Shenzhen Institute

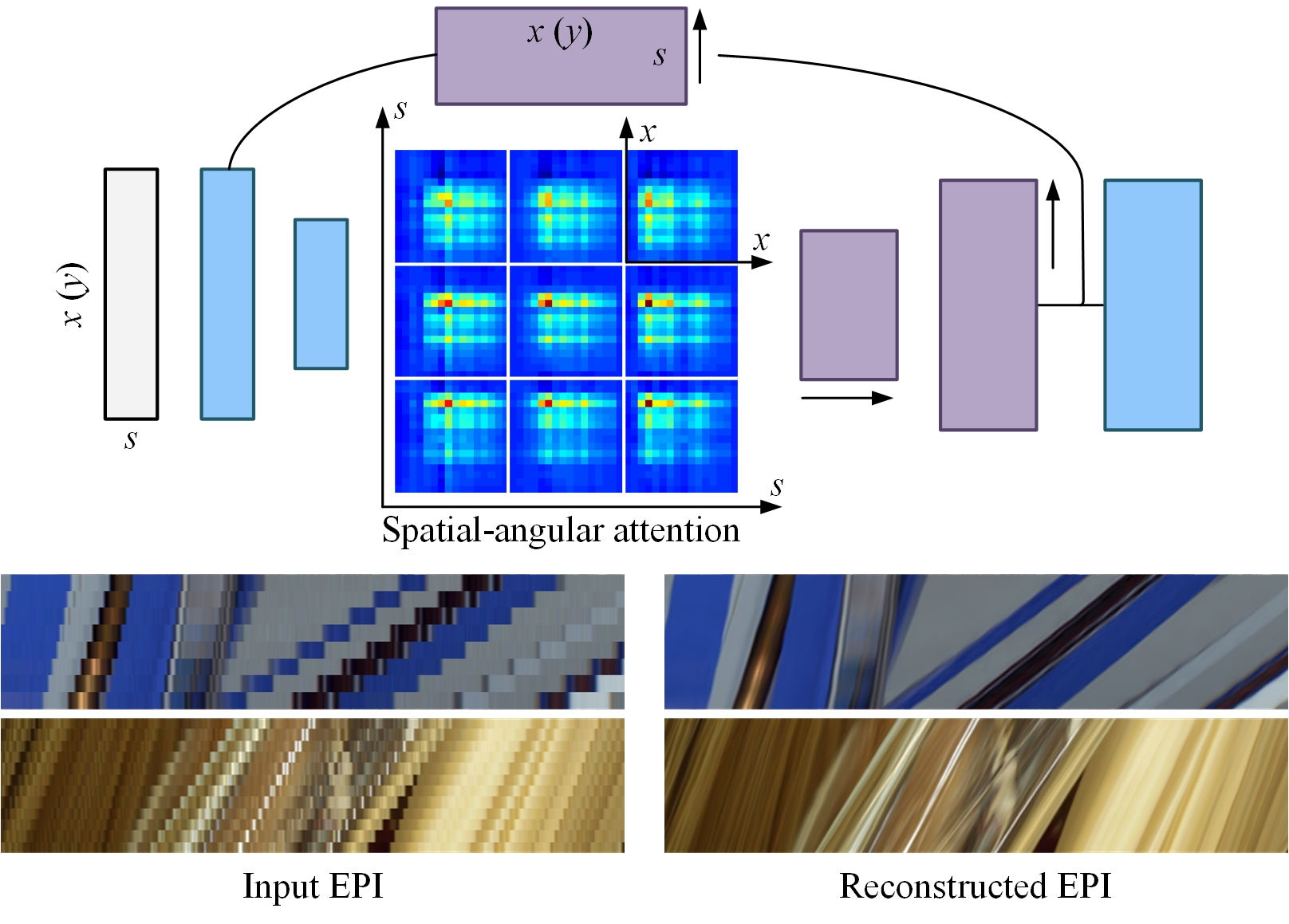

Typical learning-based light field reconstruction methods need a large receptive field to capture correspondences between input views, and they obtain it by deepening their networks. In this paper, we propose a spatial-angular attention network that perceives non-local correspondences in the light field and reconstructs a high-angular-resolution light field in an end-to-end manner. Motivated by the non-local attention mechanism, we introduce a spatial-angular attention module designed specifically for high-dimensional light field data: it computes the response of each query pixel from all positions on the epipolar plane and generates an attention map that captures correspondences along the angular dimension. We then propose a multi-scale reconstruction structure that efficiently implements the non-local attention in a low-resolution feature space while preserving the high-frequency components in a high-resolution feature space. Extensive experiments demonstrate the superior performance of the proposed spatial-angular attention network in reconstructing sparsely sampled light fields with non-Lambertian effects.
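The abstract's two ideas, attention over every position of an epipolar plane and running that attention at low resolution while keeping a high-resolution path, can be illustrated with a small NumPy sketch. This is a generic dot-product non-local block, not the paper's spatial-angular attention module: the function name `epi_attention`, the projection matrices `w_q`, `w_k`, `w_v`, the average-pooling factor `pool`, the nearest-neighbour upsampling, and the residual connection are all illustrative assumptions.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Numerically stable softmax.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def epi_attention(feat, w_q, w_k, w_v, pool=2):
    """Hypothetical non-local attention over one epipolar plane (EPI).

    feat: (S, W, C) features, S = views, W = pixels along the EPI row.
    w_q, w_k: (C, D) query/key projections; w_v: (C, C) value projection.
    Returns the attention map over pooled positions and (S, W, C) output.
    """
    s, w, c = feat.shape
    assert w % pool == 0, "W must be divisible by the pooling factor"
    # Low-resolution branch: average-pool along the spatial axis.
    low = feat.reshape(s, w // pool, pool, c).mean(axis=2)  # (S, W/p, C)
    x = low.reshape(-1, c)                                  # every EPI position
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Each query position attends to all positions on the (pooled) EPI.
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=-1)
    y = (attn @ v).reshape(s, w // pool, c)
    # Back to full resolution (nearest neighbour) and add the input features,
    # so the high-resolution path is kept alongside the attention result.
    y_high = np.repeat(y, pool, axis=1)
    return attn, feat + y_high
```

Each row of `attn` is a distribution over all (view, pixel) positions of the pooled EPI, so a query pixel can draw on a matching pixel in a distant view however large the disparity is within the EPI, which a stack of local convolutions can only reach by growing deeper. Pooling before attention shrinks the attention map by a factor of `pool²`.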

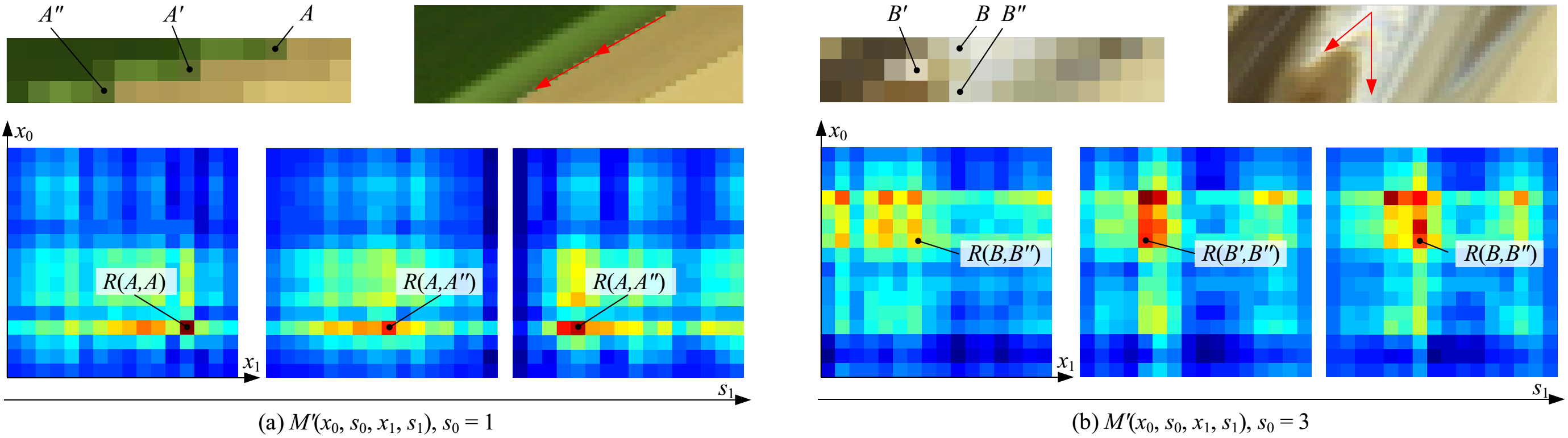

Demonstration of attention maps on scenes with (a) large disparities and (b) non-Lambertian effects.

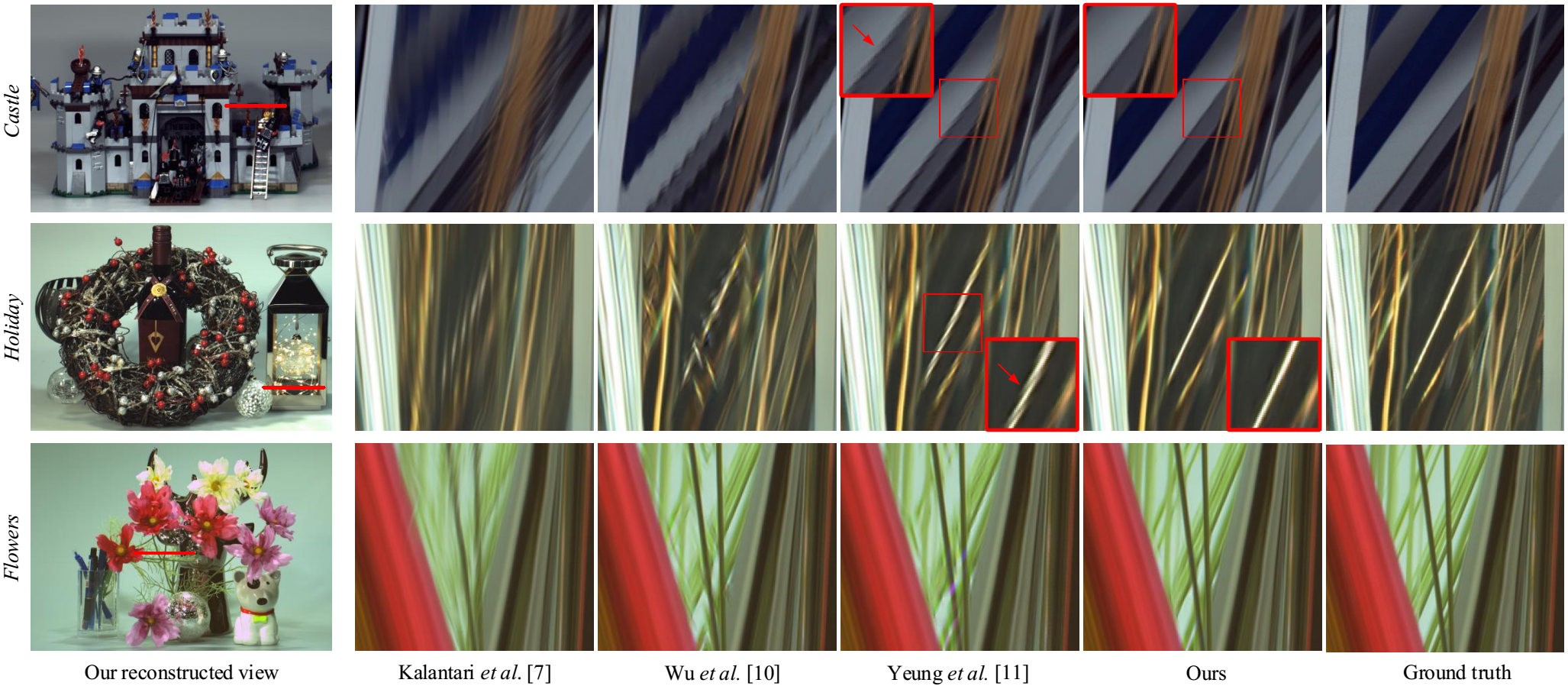

Comparison of the results on the light fields from the CIVIT Dataset (16x upsampling).

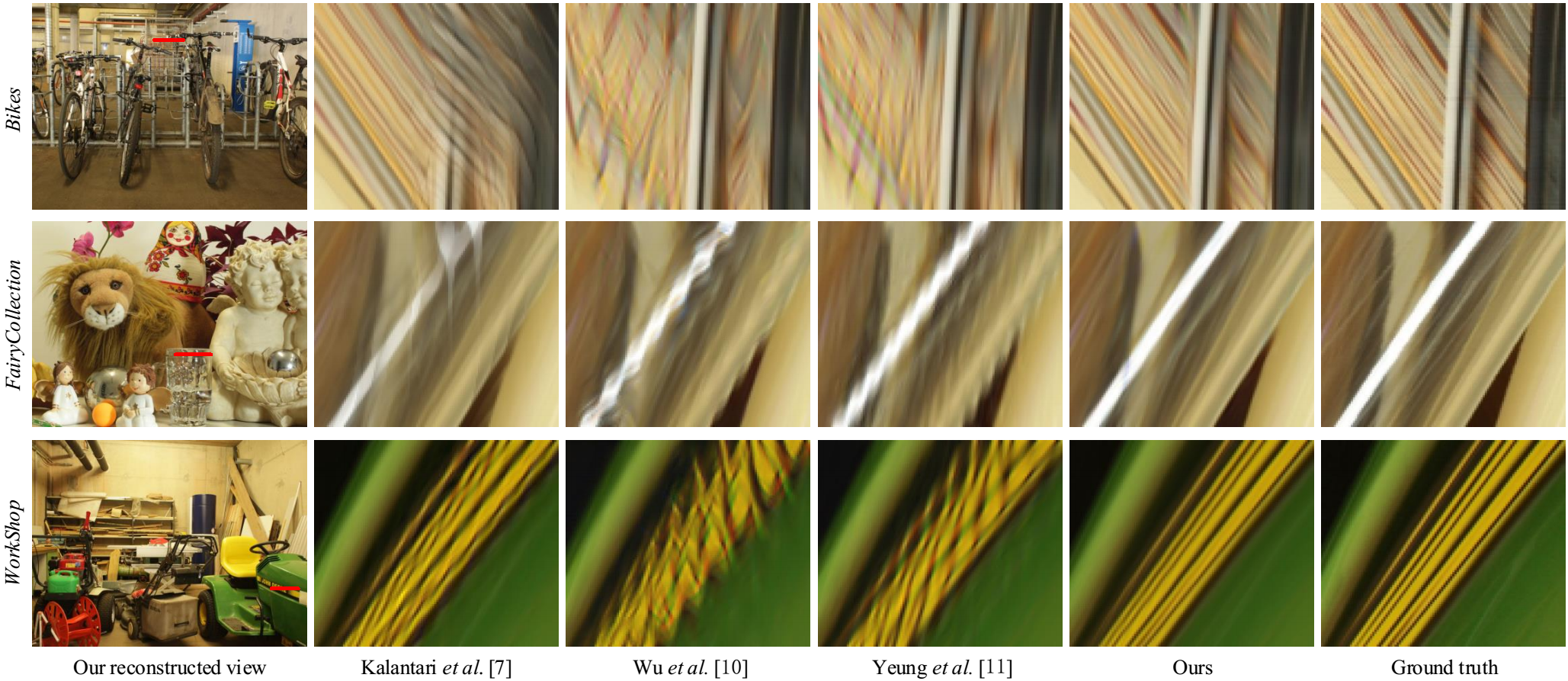

Comparison of the results on the light fields from the MPI Light Field Archive (16x upsampling).

- Environment: Python 3.7.4, tensorflow-gpu==1.13.1

- Place your light fields in "./Datasets/" before running the code.

- The code for 3D light field (1D angular and 2D spatial) reconstruction is "main3d.py". We recommend the model with upsampling scale α = 3 for ×8 or ×9 reconstruction, and the model with upsampling scale α = 4 for ×16 reconstruction.

- The code for 4D light field reconstruction is "main4d.py".

- Please cite our paper if you find it helpful. Thank you!
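The README does not document array layouts or how "main4d.py" builds on the 3D model, so the following is only a sketch of the data shapes involved, under stated assumptions. It assumes a grayscale 3D light field stored as `(S, H, W)` and a 4D one as `(U, V, H, W)`, assumes that ×k reconstruction inserts k − 1 novel views between adjacent input views, and assumes a separable 4D strategy (reconstruct along one angular dimension, then the other). The helpers `output_view_count`, `extract_epis`, `upsample_views_linear`, and `reconstruct_4d` are hypothetical, not functions from the repository, and linear interpolation stands in for the trained network.

```python
import numpy as np

def output_view_count(n_input: int, scale: int) -> int:
    # Assumption: x`scale` reconstruction inserts (scale - 1) novel views
    # between each pair of adjacent input views.
    return (n_input - 1) * scale + 1

def extract_epis(lf3d: np.ndarray) -> np.ndarray:
    # lf3d: (S, H, W), S views along the single angular dimension.
    # Fixing spatial row y and stacking that row over all S views gives an
    # (S, W) epipolar-plane image; return all H of them as (H, S, W).
    return np.transpose(lf3d, (1, 0, 2))

def upsample_views_linear(lf: np.ndarray, axis: int, scale: int) -> np.ndarray:
    # Placeholder for a learned 3D reconstructor: linearly interpolate
    # novel views along one angular axis; input views keep their values.
    n = lf.shape[axis]
    src = np.arange(n) * scale                    # positions of input views
    dst = np.arange(output_view_count(n, scale))  # positions of all output views
    moved = np.moveaxis(lf, axis, -1)             # angular axis last
    out = np.apply_along_axis(lambda v: np.interp(dst, src, v), -1, moved)
    return np.moveaxis(out, -1, axis)

def reconstruct_4d(lf4d: np.ndarray, scale: int) -> np.ndarray:
    # lf4d: (U, V, H, W). Assumed separable strategy: upsample along V for
    # every row of views, then along U for every column of views.
    rows = upsample_views_linear(lf4d, axis=1, scale=scale)  # (U, V', H, W)
    return upsample_views_linear(rows, axis=0, scale=scale)  # (U', V', H, W)
```

For example, `reconstruct_4d(np.random.rand(3, 3, 4, 5), 2)` returns an array of shape `(5, 5, 4, 5)` whose even-indexed views are the original inputs. Swapping `upsample_views_linear` for a learned 3D model is what would turn this layout sketch into an actual reconstruction pipeline.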