Home

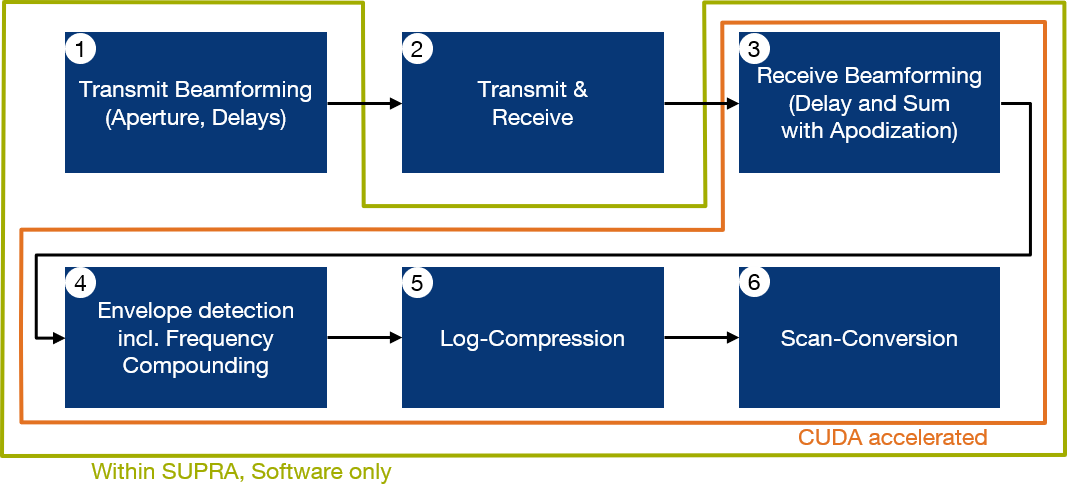

SUPRA aims to turn ultrasound (US) systems from a black box into a white box: the processing that happens inside closed US systems is replaced with open-source implementations. This makes imaging components easier to compare, because each one can be evaluated with the same surrounding steps in place. Fewer variables and unknowns enter an evaluation, which in turn helps to increase the reproducibility of research.

For an introduction to the concepts behind SUPRA, have a look at the recording or the slides of the 1st SUPRA-con!

We designed SUPRA around the following use-cases:

- Easy access to intermediate data: Besides the open algorithms, one key advantage of imaging with SUPRA is the way the independent processing steps are coupled. The framework gives you quick access to all intermediate data streams within it. There is no need to use vendor-specific interfaces to receive images, RF data or any other intermediate representation. The streams can be accessed programmatically, streamed through S.I.M.P.L.E, ROS or OpenIGTLink, or stored as MetaImages.

- Prototyping platform: While SUPRA is very flexible, we took special care to keep implementations of processing steps within it simple, with as little boilerplate as possible. Together with the example processing modules, this makes it a convenient platform for prototyping. What's more, because it is designed for real-time processing, you can use it to bring your method much closer to clinical practice.

- Usage as a baseline: The algorithms implemented in SUPRA make it well suited as a baseline for comparisons against your own method. This includes the option of offline processing of prerecorded data.

In general, you specify the processing pipeline through an XML config file that describes which algorithms are used, how their inputs and outputs are connected, and how they are parametrized.
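To make the idea of a declarative pipeline concrete, here is a minimal sketch in Python. The XML layout, the element and attribute names, and `exampleParameter` are illustrative assumptions and not the documented SUPRA schema; only the node type names are taken from the tables on this page.

```python
import xml.etree.ElementTree as ET

# Hypothetical pipeline description. Element and attribute names are
# illustrative assumptions, not the documented SUPRA config schema.
CONFIG = """
<pipeline>
  <node id="input" type="UltrasoundInterfaceRawDataMock"/>
  <node id="beamformer" type="BeamformingNode">
    <param name="exampleParameter" type="double">1.5</param>
  </node>
  <node id="output" type="MetaImageOutputDevice"/>
  <connection from="input" to="beamformer"/>
  <connection from="beamformer" to="output"/>
</pipeline>
"""


def load_pipeline(xml_text):
    """Return (nodes, edges) parsed from the illustrative XML layout."""
    root = ET.fromstring(xml_text)
    nodes = {}
    for node in root.findall("node"):
        # Parameter values are kept as strings; a real loader would
        # convert them according to their declared type.
        params = {p.get("name"): p.text for p in node.findall("param")}
        nodes[node.get("id")] = {"type": node.get("type"), "params": params}
    edges = [(c.get("from"), c.get("to")) for c in root.findall("connection")]
    return nodes, edges


nodes, edges = load_pipeline(CONFIG)
```

The three ingredients named above map directly onto the parsed result: node types (which algorithms), edges (how they are connected) and per-node parameter dictionaries (how they are parametrized).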

Having that said, there are three options for interfacing the framework:

-

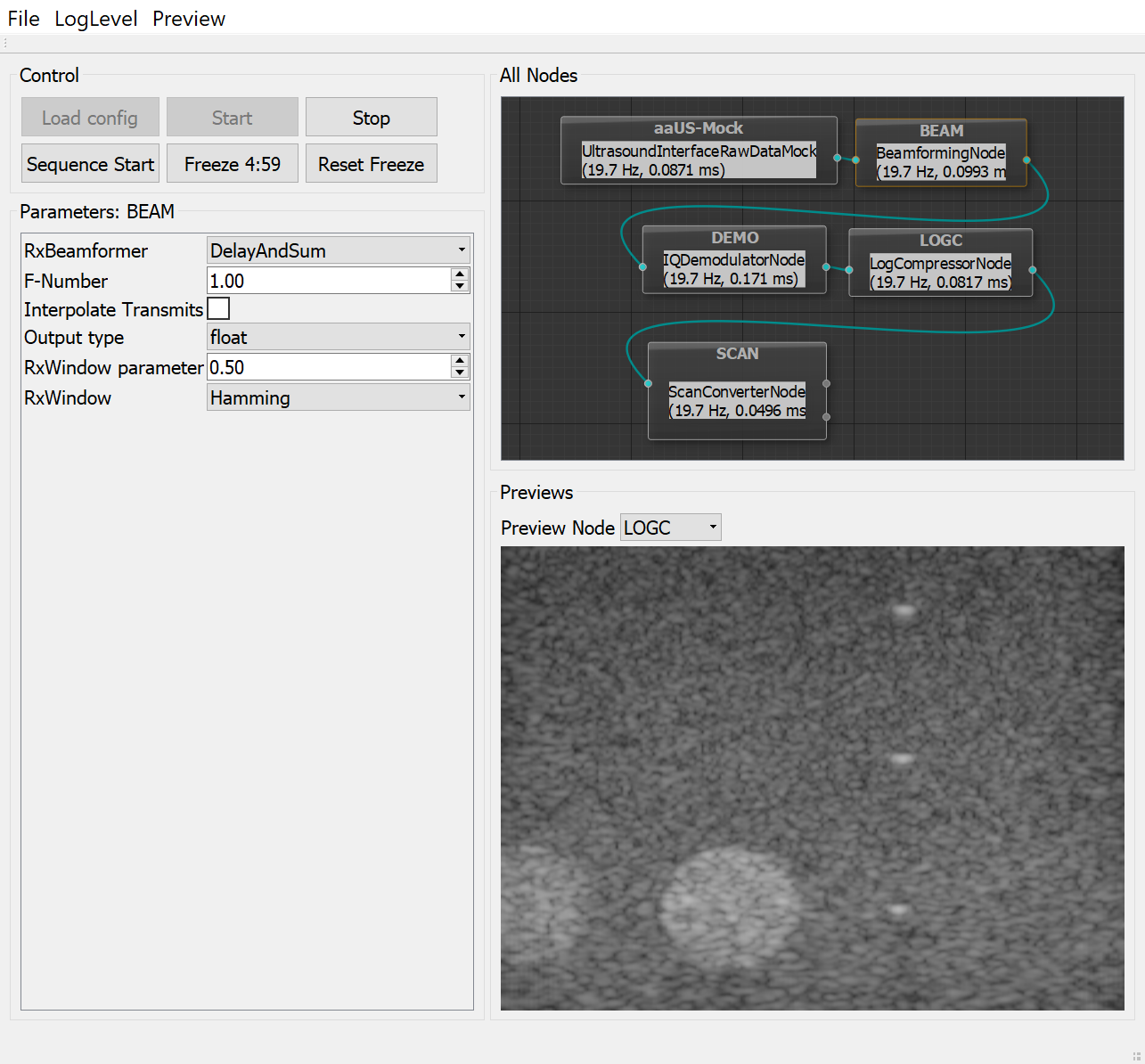

Graphical interface:

With this QT based GUI you can immediately display images from each processing step, modify the pipeline during run-time and even inspect and modify all parameters.

-

ROS interface:

For integration into robotic ultrasound systems, SUPRA provides a ROS interface. This includes publishing of images as ROS messages, as well as ROS-native access to the algorithm parameters through a ROS-service.

-

XML-only:

For those cases where the goal is to process prerecorded data offline, e.g. for evaluation of different methods, there is a batch version of SUPRA. In contrast to the GUI and the ROS interface which can drop images to allow real-time processing, the executor does not drop any frames.

The interfaces give you access not only to images but, as mentioned, also to parameters. Most image-processing algorithms are parametrized in some way, and the algorithms in SUPRA are no exception. To handle that, every processing unit (every “box” in the pipeline, that is, every compute node) can expose its parameters through a generic interface.

Those parameters can then be accessed online through the GUI or ROS, or simply set to a value in the config file. The parameter system handles different types, and algorithms are notified when their parameters change. The allowed values of each parameter can be restricted, either to a continuous range or to a discrete set. Although the parameter system is very flexible, it is simple to use.
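The behaviour described above (range restriction plus change notification) can be sketched in a few lines of Python. The `Parameter` class, its method names and the example parameter names are hypothetical and only illustrate the concept; they are not SUPRA's actual parameter interface.

```python
class Parameter:
    """Hypothetical parameter with optional range checks and listeners."""

    def __init__(self, name, value, value_range=None, allowed=None):
        self.name = name
        self.value_range = value_range  # (low, high): continuous restriction
        self.allowed = allowed          # set of values: discrete restriction
        self._listeners = []
        self._check(value)
        self.value = value

    def _check(self, value):
        if self.value_range is not None:
            low, high = self.value_range
            if not low <= value <= high:
                raise ValueError(f"{self.name}: {value} outside [{low}, {high}]")
        if self.allowed is not None and value not in self.allowed:
            raise ValueError(f"{self.name}: {value} not in allowed set")

    def on_change(self, callback):
        """Register a callback that is invoked as callback(name, new_value)."""
        self._listeners.append(callback)

    def set(self, value):
        # Validate first, so listeners never see an invalid value.
        self._check(value)
        self.value = value
        for callback in self._listeners:
            callback(self.name, value)


changes = []
gain = Parameter("gain", 1.0, value_range=(0.0, 10.0))
gain.on_change(lambda name, value: changes.append((name, value)))
gain.set(2.5)

window = Parameter("windowType", "Hann", allowed={"Hann", "Rectangular"})
```

A rejected value raises before anything is stored, so the node that owns the parameter keeps its last valid setting.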

The processing in SUPRA is modelled as a dataflow graph using Intel TBB. Each node within the graph is kept as slim as possible. This not only limits the complexity of the nodes, but also allows their functions to be executed independently of each other. Ultimately, this is what makes SUPRA well suited for prototyping.

We distinguish three types of nodes:

- Input nodes: They usually connect to some device via the vendor-specific API (e.g. for Cephasonics and Ultrasonix US systems) or a network interface (e.g. ROS or IGTL). Using the data from the device, this type of node constructs a SUPRA data structure such as `USImage` or `USRawData` and passes it into the dataflow graph.

- Processing nodes: This type of node performs some computation on its input data and, for each input, passes the resulting output data into the dataflow graph. They are triggered by the availability of input data.

- Output nodes: They take input data and send it via a network interface or store it to a file. This type of node is activated by incoming data, like the processing nodes, but unlike those it cannot bring new messages into the dataflow graph.
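The three roles can be illustrated with a small, synchronous Python sketch. It is a conceptual model only: SUPRA builds its graph with Intel TBB in C++, and all class and method names below are hypothetical.

```python
class Node:
    """Base for nodes that can emit messages to their successors."""

    def __init__(self):
        self.successors = []

    def connect(self, other):
        self.successors.append(other)
        return other  # allows chaining: a.connect(b).connect(c)

    def emit(self, message):
        for successor in self.successors:
            successor.receive(message)


class InputNode(Node):
    """Brings new messages into the graph (here: from a list of frames)."""

    def __init__(self, frames):
        super().__init__()
        self.frames = frames

    def run(self):
        for frame in self.frames:
            self.emit(frame)


class ProcessingNode(Node):
    """Triggered by input data; emits one output per input."""

    def __init__(self, function):
        super().__init__()
        self.function = function

    def receive(self, message):
        self.emit(self.function(message))


class OutputNode:
    """Consumes messages; it has no emit() and cannot create new ones."""

    def __init__(self):
        self.stored = []

    def receive(self, message):
        self.stored.append(message)


source = InputNode([[1, -2], [3, -4]])
sink = OutputNode()
source.connect(ProcessingNode(lambda frame: [abs(v) for v in frame])).connect(sink)
source.run()
```

After `source.run()`, `sink.stored` holds one processed frame per input frame.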

Intel TBB handles the message passing between nodes. Because we only pass shared pointers around within the same process, communication is very lightweight and fast. In fact, we have already observed message-passing rates of 400 kHz.

The memory buffers (called Container) for images and raw data are smart: they handle different data types and keep track of their location, that is, whether they reside in CPU or GPU memory.

Additionally, we reuse the buffers wherever possible to reduce the number of CUDA allocations, which would degrade performance. Instead, garbage collection is performed at regular intervals and, of course, under memory pressure.
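The buffer-reuse idea can be sketched as a simple pool. This is a CPU-only Python illustration with hypothetical names (`BufferPool`, `acquire`, `release`, `collect_garbage`); it stands in for the concept and does not mirror the actual Container implementation or any CUDA calls.

```python
class BufferPool:
    """Hands out buffers and keeps released ones for later reuse."""

    def __init__(self):
        self._free = {}       # buffer size -> list of idle buffers
        self.allocations = 0  # counts real allocations only

    def acquire(self, size):
        idle = self._free.get(size)
        if idle:
            return idle.pop()      # reuse: no new allocation needed
        self.allocations += 1
        return bytearray(size)     # stands in for an expensive allocation

    def release(self, buffer):
        self._free.setdefault(len(buffer), []).append(buffer)

    def collect_garbage(self):
        """Drop all idle buffers, e.g. periodically or under memory pressure."""
        freed = sum(len(buffers) for buffers in self._free.values())
        self._free.clear()
        return freed


pool = BufferPool()
first = pool.acquire(1024)
pool.release(first)
second = pool.acquire(1024)  # served from the pool, same underlying buffer
```

In a steady-state pipeline where every frame has the same size, the allocation count stops growing after the first few frames.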

With all those architectural measures, implementing a new processing node requires almost no boilerplate, and for the little that remains, we have examples. So, you don’t have to worry about the “boring” stuff, but can directly focus on the cool things you want to do!

The following functionality is present in SUPRA, realized as nodes.

| Node Type | Function | Valid Input Type | Output Type |
|---|---|---|---|
| BeamformingNode | Performs beamforming on raw-data. It integrates the "delay" portion, i.e. dynamic receive focusing, with the combination of data from the elements (e.g. summation in delay-and-sum). Supports delay-and-sum, delay-standard-deviation-and-sum and test-signal. | USRawData | USImage scanlines as RF-data |
| RawDelayNode | Delays its input raw-data in the same way that BeamformingNode would, effectively placing all signals to be used for the reconstruction of one depth at the same time-point, also called dynamic receive focusing. | USRawData | USRawData delayed |
| BeamformingMVNode | Implements a Minimum Variance beamformer with parametrizable temporal and sub-aperture averaging, using full matrix inversion. | USRawData delayed | USImage scanlines as RF-data |
| BeamformingMVpcgNode | Implements a Minimum Variance beamformer with parametrizable temporal and sub-aperture averaging, using a PCG solver. | USRawData delayed | USImage scanlines as RF-data |
| RxEventLimiterNode | Modifies raw data and only keeps certain receive events (scanlines). Use this if you only wish to process part of the acquired scanlines. | USRawData | USRawData |
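As an illustration of what "delay" and "sum" mean in delay-and-sum, here is a heavily simplified Python sketch for a single scanline. It assumes a straight-down transmit path, nearest-sample delays and no apodization or interpolation; the function name and signature are hypothetical and this is not the BeamformingNode implementation.

```python
import math


def delay_and_sum(raw, element_x, scanline_x, depths, speed_of_sound, sampling_rate):
    """raw[e][t] is sample t of element e; returns one RF value per depth."""
    line = []
    for z in depths:
        total = 0.0
        for e, x in enumerate(element_x):
            # Two-way path: straight down to depth z, then back to element e.
            distance = z + math.hypot(x - scanline_x, z)
            t = int(round(distance / speed_of_sound * sampling_rate))
            if t < len(raw[e]):
                total += raw[e][t]  # "sum" after applying the "delay"
        line.append(total)
    return line


# Synthetic raw data: one point scatterer at depth z0 below the centre element.
elements = [-1e-3, 0.0, 1e-3]      # element positions in metres
c, fs, z0 = 1540.0, 40e6, 10e-3    # speed of sound, sampling rate, depth
raw = [[0.0] * 1024 for _ in elements]
for e, x in enumerate(elements):
    raw[e][int(round((z0 + math.hypot(x, z0)) / c * fs))] = 1.0

line = delay_and_sum(raw, elements, 0.0, [z0, 5e-3], c, fs)
```

The echoes from all three elements line up only at the scatterer depth, so the first entry of `line` is the coherent sum and the second stays at zero.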

| Node Type | Function | Valid Input Type | Output Type |
|---|---|---|---|
| HilbertEnvelopeNode | Computes the envelope of RF-data using the Hilbert transform, implemented through FFT. | USImage scanlines as RF-data | USImage scanline envelopes |
| HilbertFirEnvelopeNode | Computes the envelope of RF-data using the Hilbert transform, implemented through a FIR filter. | USImage scanlines as RF-data | USImage scanline envelopes |
| IQDemodulatorNode | Computes the envelope of RF-data using tuned filters, which also allows for frequency compounding. | USImage scanlines as RF-data | USImage scanline envelopes |
| LogCompressorNode | Performs log-compression on scanline data. | USImage scanlines (usually envelopes) | USImage Bmode scanlines |
| ScanConverterNode | Converts scanline data to a rectified image. | USImage scanlines | USImage scan-converted |
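The first steps of this chain (envelope detection followed by log-compression) can be sketched in plain Python. The sketch uses a naive O(n²) DFT to stay dependency-free, and the 60 dB dynamic range and the normalisation to [0, 1] are assumptions made for the example; it shows the generic textbook technique, not the code of HilbertEnvelopeNode or LogCompressorNode.

```python
import cmath
import math


def dft(x, inverse=False):
    """Naive discrete Fourier transform (fine for short example signals)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[k] * cmath.exp(sign * 2j * math.pi * k * m / n) for k in range(n))
           for m in range(n)]
    return [v / n for v in out] if inverse else out


def envelope(rf):
    """Envelope as the magnitude of the analytic signal (Hilbert via FFT)."""
    n = len(rf)
    spectrum = dft(rf)
    for m in range(1, n):
        if n % 2 == 0 and m == n // 2:
            continue  # Nyquist bin stays unchanged
        # Double positive frequencies, zero out negative ones.
        spectrum[m] *= 2.0 if m < (n + 1) // 2 else 0.0
    return [abs(v) for v in dft(spectrum, inverse=True)]


def log_compress(env, dynamic_range_db=60.0):
    """Map envelope values to [0, 1] on a logarithmic (dB) scale."""
    peak = max(max(env), 1e-12)
    out = []
    for v in env:
        db = 20.0 * math.log10(max(v, 1e-12) / peak)
        out.append(max(0.0, 1.0 + db / dynamic_range_db))
    return out


rf = [math.cos(2 * math.pi * 4 * t / 32) for t in range(32)]
env = envelope(rf)  # constant envelope for a pure tone
bmode = log_compress([1.0, 0.1, 0.001, 0.0])
```

A pure tone has a flat envelope, which makes it a convenient sanity check for the analytic-signal step.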

| Node Type | Function | Valid Input Type | Output Type |
|---|---|---|---|
| ImageProcessingCpuNode | Example node for processing of images on the CPU. | USImage | USImage |
| ImageProcessingBufferCudaNode | Example node for processing of images on the GPU, using Buffers. | USImage | USImage |
| ImageProcessingCudaNode | Example node for processing of images on the GPU. | USImage | USImage |
| BilateralFilterCudaNode | Performs a bilateral filter on the image. | USImage | USImage |
| FilterSradCudaNode | Applies SRAD (Speckle-reducing anisotropic diffusion) to the image. | USImage | USImage |
| MedianFilterCudaNode | Performs a median filter on the image. | USImage | USImage |
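For readers unfamiliar with the simplest of these filters, a median filter can be sketched on the CPU as follows. The square window, the `radius` parameter and the edge clamping are assumptions of this example; the CUDA node may handle window shape and borders differently.

```python
import statistics


def median_filter(image, radius=1):
    """Median over a (2*radius+1)^2 window; borders are handled by clamping."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                image[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            out[y][x] = statistics.median(window)
    return out


# A single bright outlier in an otherwise flat image is removed.
noisy = [[10, 10, 10],
         [10, 200, 10],
         [10, 10, 10]]
filtered = median_filter(noisy)
```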

| Node Type | Function | Valid Input Type | Output Type |
|---|---|---|---|
| TorchNode | Applies a torch model to an image. | USRawData or USImage | Configurable, depending on torch model |
| TemporalFilterNode | Performs element-wise temporal smoothing on an image at any stage. | USRawData or USImage | Same as input |
| NoiseNode | Adds parametrized noise (additive and multiplicative) to an image. | USRawData or USImage | Same as input |
| TimeGainCompensationNode | Applies digital time gain compensation to an image, by depth-dependent scaling of image / raw-data intensities. | USRawData or USImage | Same as input |
| DarkFilterThresholdingCudaNode | Implements a rejection filter by suppressing intensities below a set threshold. | USImage | USImage |
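Two of these operations are simple enough to sketch directly. The example below assumes a gain that grows linearly in dB with depth and a hard threshold that sets rejected values to zero; both the function names and these specific gain and rejection rules are assumptions for illustration and may differ from what the nodes actually implement.

```python
def time_gain_compensation(scanline, sample_spacing_mm, gain_db_per_mm):
    """Scale each sample by a gain that grows linearly (in dB) with depth."""
    out = []
    for i, value in enumerate(scanline):
        depth_mm = i * sample_spacing_mm
        gain = 10.0 ** (gain_db_per_mm * depth_mm / 20.0)  # dB -> amplitude factor
        out.append(value * gain)
    return out


def reject_below(scanline, threshold):
    """Rejection filter: suppress intensities below the threshold."""
    return [value if value >= threshold else 0.0 for value in scanline]


compensated = time_gain_compensation([1.0, 1.0, 1.0], sample_spacing_mm=10.0,
                                     gain_db_per_mm=2.0)
cleaned = reject_below([0.05, 0.5, 0.2, 0.9], threshold=0.2)
```

With 2 dB/mm and samples 10 mm apart, consecutive samples differ by 20 dB, that is, a factor of ten in amplitude.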

| Node Type | Function | Valid Input Type | Output Type |
|---|---|---|---|
| AutoQuitNode | Exits SUPRA after it has received a user-configurable number of messages. Useful for offline processing and performance evaluation. | Any | None |
| FrequencyLimiterNode | Performs no computations, but limits the frequency of the messages it passes along to stay under a user-configurable frequency. This is of particular interest when storing high-bandwidth data to disk. | Any | Same as input |
| StreamSyncNode | Synchronizes messages from different streams by grouping those messages whose timestamps are closest to a main stream. One use-case is collecting tracking data along with images, to be able to store and stream tracked data. | Any | Same as input |
| TemporalOffsetNode | In combination with the StreamSyncNode, this node makes it possible to correct for temporal offsets in the acquisition of data streams by applying a temporal calibration. | Any | Same as input |
| StreamSynchronizer | Upon reception of an image message, this node waits for any GPU processing of that image to complete, by waiting for the CUDA stream it is processed on to finish. This can be used to measure computing times of GPU methods. | Any | Same as input |
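Frequency limiting and timestamp-based synchronization both reduce to small pieces of logic, sketched here in Python. `FrequencyLimiter`, `accept` and `closest_by_timestamp` are hypothetical names; the sketch shows the general technique under the assumption of timestamps in seconds and a sorted, non-empty secondary stream.

```python
import bisect


class FrequencyLimiter:
    """Passes a message only if enough time has elapsed since the last one."""

    def __init__(self, max_frequency_hz):
        self.min_interval = 1.0 / max_frequency_hz
        self.last_passed = None

    def accept(self, timestamp):
        if self.last_passed is None or timestamp - self.last_passed >= self.min_interval:
            self.last_passed = timestamp
            return True
        return False  # message is dropped


def closest_by_timestamp(main_timestamp, timestamps):
    """Index of the entry in sorted, non-empty `timestamps` closest to main."""
    i = bisect.bisect_left(timestamps, main_timestamp)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(timestamps)]
    return min(candidates, key=lambda j: abs(timestamps[j] - main_timestamp))


# Limit an 8 Hz stream to at most 4 Hz.
limiter = FrequencyLimiter(4.0)
passed = [t for t in [0.0, 0.125, 0.25, 0.375, 0.5] if limiter.accept(t)]

# Match an image taken at t = 1.3 s to the nearest tracking sample.
tracking_times = [0.0, 1.0, 2.0]
match = closest_by_timestamp(1.3, tracking_times)
```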

| Node Type | Function | Message Types |
|---|---|---|
| UsIntCephasonicsCc | Provides access to Cephasonics US systems in the raw-data capture mode. This enables access to the flexible transmit as well. | USRawData |
| UltrasoundInterfaceRawDataMock | Loads prerecorded ultrasound raw-data and sends it into the dataflow graph. This is useful for offline processing. | USRawData |
| UltrasoundInterfaceBeamformedMock | Loads prerecorded beamformed ultrasound data and sends it into the dataflow graph. This is useful for offline processing. | USImage |
| UsIntCephasonicsBmode | Provides an interface to Cephasonics US systems using their imaging pipeline. | USImage |
| UsIntCephasonicsBtcc | Using the raw-data interface to Cephasonics US systems, this node provides a transducer element connectivity test. | USImage |
| UltrasoundInterfaceSimulated | Provides synthetic images containing noise for testing purposes. | USImage |
| UltrasoundInterfaceUltrasonix | Connects to Ultrasonix US systems through the ulterius API and acquires images with access to the imaging parameters. Currently, Bmode and color-doppler are supported, both in their RF-data and final form. | USImage |
| TrackerInterfaceIGTL | Receives tracking data through an IGTL connection. | TrackerDataSet |
| TrackerInterfaceROS | Receives tracking data through a connection to a ROS network. | TrackerDataSet |
| TrackerInterfaceSimulated | Provides synthetic tracking data for testing purposes. | TrackerDataSet |

| Node Type | Function | Valid Message Types |
|---|---|---|
| MetaImageOutputDevice | Stores image streams (and linked tracking streams) to meta-image files. | USRawData, USImage, TrackerDataSet, SyncRecordObject |
| OpenIGTLinkOutputDevice | Connected through OpenIGTLink, this node streams images and linked tracking data over a network connection. | USImage |
| RosImageOutputDevice | This node streams images and tracking to a ROS environment, allowing easy integration into robotic US setups. | USImage, SyncRecordObject |