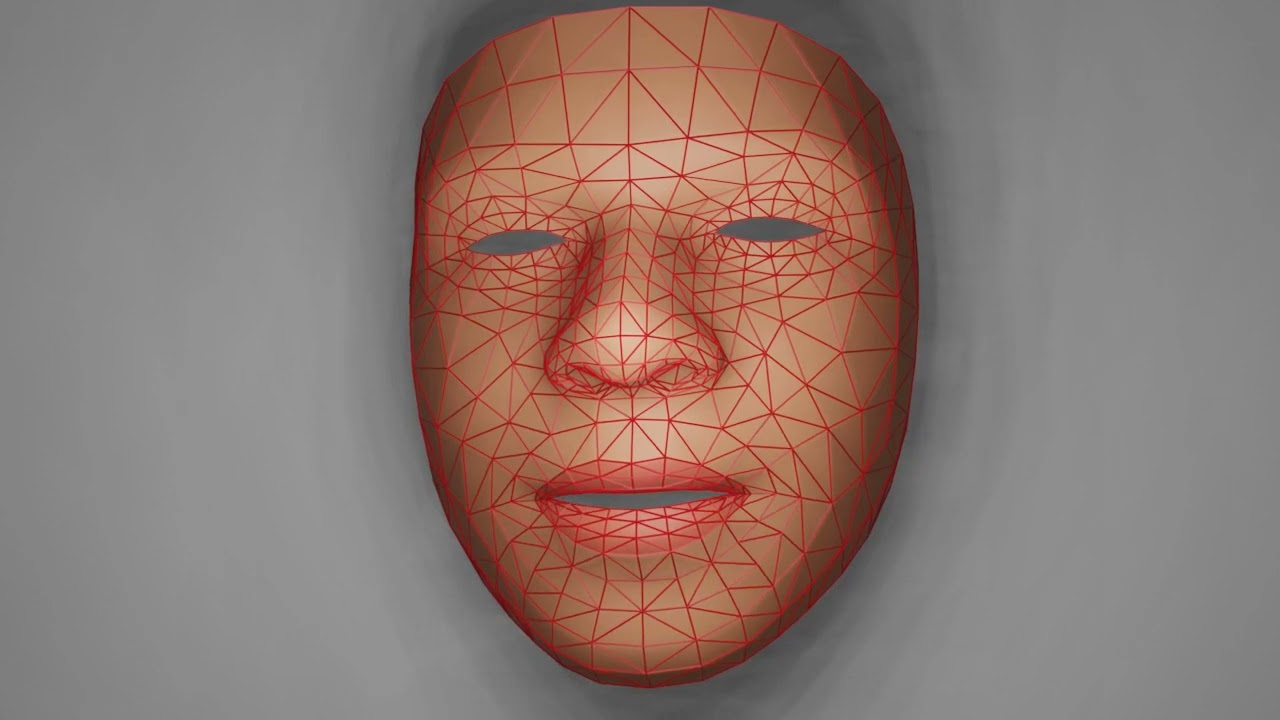

Our model predicts 3D face animation for a given Tamil speech audio while accounting for coarticulation effects. It achieved a Root Mean Square Error of 0.0648 on our test data split and an overall subjective accuracy of 83%. Further, a Turing test confirmed that participants were unable to distinguish our predicted animation from the ground truth.
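For reference, the Root Mean Square Error reported above is the square root of the mean squared difference between predicted and ground-truth values. The sketch below shows that computation on flat lists of numbers. Which quantities the evaluation actually compares is not stated here, so the inputs and the function name `rmse` are illustrative assumptions.

```python
import math


def rmse(predicted, target):
    """Root Mean Square Error between two equally long sequences of numbers."""
    if len(predicted) != len(target) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Squared difference for each predicted/target pair.
    squared_errors = [(p - t) ** 2 for p, t in zip(predicted, target)]
    # Mean of the squared errors, then the square root.
    return math.sqrt(sum(squared_errors) / len(squared_errors))
```

A lower value means the predictions are closer to the ground truth, and identical sequences give an RMSE of exactly zero.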

SHORT SPEECH | LONG SPEECH | FAST SPEECH | SLOW SPEECH | MIXED LANGUAGE SPEECH

- librosa 0.8.1
- ffmpeg 4.3.1
- opencv-python 4.5.2
- scipy 1.6.2
- tensorflow 2.6.0
- sklearn 0.22.2
- Blender 2.92.0
- pickle 4.0
- numpy 1.20.0
- matplotlib 3.3.4
- tqdm 4.59.0
- keras-tuner 1.1.2
- mediapipe 0.8.9.1
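One way to check an environment against the versions listed above is to query the installed package metadata. This is a minimal sketch, not part of the repository: the helper names `matches` and `check_versions` are hypothetical, only the Python packages are included (ffmpeg and Blender are assumed to be external tools, and `pickle` ships with Python), and `sklearn` is looked up under its distribution name `scikit-learn`.

```python
from importlib import metadata

# Versions taken from the list above, keyed by pip distribution name.
PINNED = {
    "librosa": "0.8.1",
    "opencv-python": "4.5.2",
    "scipy": "1.6.2",
    "tensorflow": "2.6.0",
    "scikit-learn": "0.22.2",
    "numpy": "1.20.0",
    "matplotlib": "3.3.4",
    "tqdm": "4.59.0",
    "keras-tuner": "1.1.2",
    "mediapipe": "0.8.9.1",
}


def matches(expected, installed):
    """True if installed equals expected or only adds trailing components."""
    return installed == expected or installed.startswith(expected + ".")


def check_versions(pinned=PINNED):
    """Map each package name to (expected, installed or None, ok)."""
    report = {}
    for name, expected in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None  # package is not installed at all
        ok = installed is not None and matches(expected, installed)
        report[name] = (expected, installed, ok)
    return report
```

Calling `check_versions()` returns a dictionary that can be printed or filtered for entries whose last element is `False`. The match is deliberately loose about extra trailing components, since some packages publish builds such as `4.5.2.52` for a listed `4.5.2`.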

- Place all recorded training videos inside the `VIDEOs` directory
- Run `PDM.py` and `Preprocess.py` in the specified order to perform feature extraction
- Run `HyperparameterTuning.py` to pick the best possible hyperparameter combination (optional)
- Set the best hyperparameter values in the `BLSTM_128_64.py` file (optional)
- Run `BLSTM_128_64.py` to train the deep learning model
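The training steps above can be sketched as a small driver that builds the commands in the required order. This is only an illustration: the helper names `build_commands` and `run_all` are hypothetical, and it assumes each script is run from the repository root and takes no command-line arguments. Hyperparameter tuning is a separate stage because copying the tuned values into `BLSTM_128_64.py` is a manual step.

```python
import subprocess
import sys

# Script names come from the steps above; the stage names are illustrative.
STAGES = {
    "features": ["PDM.py", "Preprocess.py"],  # order matters
    "tune": ["HyperparameterTuning.py"],      # optional
    "train": ["BLSTM_128_64.py"],
}


def build_commands(stages=("features", "train"), python=sys.executable):
    """Return one command (argument list) per script, in stage order."""
    return [[python, script] for stage in stages for script in STAGES[stage]]


def run_all(commands):
    """Run each command in turn, stopping at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)
```

For example, `run_all(build_commands(("features", "tune")))` would extract features and run the tuner. After editing the hyperparameter values by hand, `run_all(build_commands(("train",)))` would start training.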

- Record the input Tamil speech audio and place it inside the `VIDEOs/AUDIOs/` directory
- Set the Blender path in the `cmd` variable in `Prediction.py`
- Run the command `python Prediction.py <fileName> <audioFormat>` to generate the animation
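As an illustration of the run command above, the sketch below derives the two arguments from an audio file path. It rests on an assumption that the instructions do not confirm: that `<fileName>` is the file name without its extension and `<audioFormat>` is the extension without the dot. The helper `prediction_command` is hypothetical.

```python
import sys
from pathlib import Path


def prediction_command(audio_path, python=sys.executable):
    """Build the Prediction.py command for one audio file.

    Assumes <fileName> is the stem and <audioFormat> is the extension.
    """
    path = Path(audio_path)
    audio_format = path.suffix.lstrip(".")
    if not audio_format:
        raise ValueError("audio file needs an extension such as .wav")
    return [python, "Prediction.py", path.stem, audio_format]
```

Under that assumption, a recording saved as `VIDEOs/AUDIOs/hello.wav` would map to `python Prediction.py hello wav`.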

To try our trained model, download the preprocessor and model weights from WeightsAndPreprocessor.zip and unzip them inside the `logs` directory.
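The unzip step can also be done from Python with the standard `zipfile` module. This is a minimal sketch: it assumes the archive has already been downloaded next to the scripts, and the helper name `unpack_weights` is hypothetical. Whether the archive contains a nested folder is not stated, so check the extracted layout afterwards.

```python
import zipfile
from pathlib import Path


def unpack_weights(archive="WeightsAndPreprocessor.zip", target="logs"):
    """Extract the downloaded archive into the target directory."""
    target_dir = Path(target)
    target_dir.mkdir(parents=True, exist_ok=True)  # create logs/ if missing
    with zipfile.ZipFile(archive) as bundle:
        bundle.extractall(target_dir)
        # Return the extracted member names so the layout can be inspected.
        return sorted(bundle.namelist())
```

The returned list of member names makes it easy to confirm that the preprocessor and weight files landed directly inside `logs`.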