Bugfix for IntegralImageNormalEstimation #191

base: master


This commit implements the fixes proposed by James Stout on the pcl-dev mailing list (http://www.pcl-developers.org/Bugs-in-IntegralImageNormalEstimation-distance-map-computation-td5708055.html). Specifically:

- In the depth change map computation, the diagonal neighbors are now considered.
- In the distance map computation, Chessboard distance is used instead of an approximation of Euclidean distance.
- The distance map values are used as the smoothing window radius (instead of the width).
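For readers unfamiliar with the Chessboard distance mentioned above, the following is a minimal sketch of a two-pass distance transform with unit cost for all eight neighbors. It is not the code from this commit: the function name `chessboardDistanceMap`, the row-major layout, and the convention that a nonzero entry marks a discontinuity are all assumptions made for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical helper, not PCL's implementation.
// change_map is row-major; a nonzero entry marks a depth discontinuity.
// Returns, per pixel, the chessboard (Chebyshev) distance to the nearest mark.
std::vector<int> chessboardDistanceMap (const std::vector<unsigned char>& change_map,
                                        std::size_t width, std::size_t height)
{
  // Large sentinel that can still be incremented without overflow.
  const int inf = std::numeric_limits<int>::max () / 2;
  std::vector<int> dist (width * height, inf);
  for (std::size_t i = 0; i < dist.size (); ++i)
    if (change_map[i])
      dist[i] = 0;

  // Forward pass: propagate from the W, NW, N and NE neighbors.
  for (std::size_t r = 0; r < height; ++r)
    for (std::size_t c = 0; c < width; ++c)
    {
      int& d = dist[r * width + c];
      if (c > 0)
        d = std::min (d, dist[r * width + c - 1] + 1);
      if (r > 0)
      {
        d = std::min (d, dist[(r - 1) * width + c] + 1);
        if (c > 0)
          d = std::min (d, dist[(r - 1) * width + c - 1] + 1);
        if (c + 1 < width)
          d = std::min (d, dist[(r - 1) * width + c + 1] + 1);
      }
    }

  // Backward pass: propagate from the E, SE, S and SW neighbors.
  for (std::size_t r = height; r-- > 0;)
    for (std::size_t c = width; c-- > 0;)
    {
      int& d = dist[r * width + c];
      if (c + 1 < width)
        d = std::min (d, dist[r * width + c + 1] + 1);
      if (r + 1 < height)
      {
        d = std::min (d, dist[(r + 1) * width + c] + 1);
        if (c + 1 < width)
          d = std::min (d, dist[(r + 1) * width + c + 1] + 1);
        if (c > 0)
          d = std::min (d, dist[(r + 1) * width + c - 1] + 1);
      }
    }
  return dist;
}
```

With a single mark in the center of a 5x5 map, the corners end up at distance 2 and the eight direct neighbors at distance 1, which is what one would expect from a metric where a diagonal step costs the same as a horizontal or vertical one.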

This commit implements the change to IntegralImageNormalEstimation proposed on the pcl-dev mailing list (http://www.pcl-developers.org/Bugs-in-IntegralImageNormalEstimation-distance-map-computation-td5708055.html).

    @@ -731,30 +731,65 @@
     memset (depthChangeMap, 255, input_->points.size ());

     unsigned index = 0;
    -for (unsigned int ri = 0; ri < input_->height-1; ++ri)
    +for (unsigned int ri = 1; ri < input_->height-1; ++ri)


The first row and first column are now getting skipped. Most of them get touched by the NE and SW checks, but that's not sufficient (for example if there is a left-right or up-down discontinuity in the first row it won't be counted).

Actually this bug was also somewhat present before (e.g. left-right discontinuities were not checked in the last row). This seems to get handled later by BORDER_POLICY_IGNORE, although it looks like BORDER_POLICY_MIRROR isn't doing the right thing. Maybe just zero out the borders and columns? This effectively makes BORDER_POLICY_MIRROR a no-op, but honestly I'm not sure how useful that mode is (seems like it would produce really bad normals).
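One possible way to avoid skipping border rows and columns is to visit every pixel and bounds-check each neighbor instead of shrinking the loop range. The sketch below is only an illustration of that idea, not the code under review: the function name `markDepthChanges`, the plain constant threshold `max_change`, and the convention that 1 marks a discontinuity are assumptions, and the PCL code may derive its threshold and mark values differently.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical sketch, not PCL's code: mark both pixels of every 8-connected
// pair whose depths differ by more than max_change or are not finite.
std::vector<unsigned char> markDepthChanges (const std::vector<float>& depth,
                                             std::size_t width, std::size_t height,
                                             float max_change)
{
  std::vector<unsigned char> changed (width * height, 0);
  // Only the "forward" neighbors (E, SE, S, SW) are listed, so each
  // unordered pair of adjacent pixels is examined exactly once.
  const int dr[4] = {0, 1, 1, 1};
  const int dc[4] = {1, 1, 0, -1};
  for (std::size_t r = 0; r < height; ++r)
    for (std::size_t c = 0; c < width; ++c)
      for (int k = 0; k < 4; ++k)
      {
        const long nr = static_cast<long> (r) + dr[k];
        const long nc = static_cast<long> (c) + dc[k];
        // Skip neighbors that fall outside the image instead of skipping
        // whole border rows or columns.
        if (nr >= static_cast<long> (height) || nc < 0 || nc >= static_cast<long> (width))
          continue;
        const std::size_t i = r * width + c;
        const std::size_t j = static_cast<std::size_t> (nr) * width + static_cast<std::size_t> (nc);
        const float a = depth[i];
        const float b = depth[j];
        if (!std::isfinite (a) || !std::isfinite (b) || std::fabs (a - b) > max_change)
        {
          changed[i] = 1;
          changed[j] = 1;
        }
      }
  return changed;
}
```

With this loop structure a left-right discontinuity in the first (or only) row is detected, because the east neighbor is checked for every pixel that has one.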


Thanks for the comments. I also think that As for the |


Regarding the border policies: your proposals sound good. I'm not sure if we can actually remove BORDER_POLICY_MIRROR since it's part of the public interface, but we could at least make it a no-op. |


Another thing I mentioned is the so-called depth-dependent smoothing. When enabled, the smoothing size is computed as: float smoothing = (std::min)(distanceMap[index] * 2 + 1, normal_smoothing_size_ + static_cast<float>(depth)/10.0f); Assuming that depth is less than 10 m, this addend is always less than 1. If the user sets
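To make the arithmetic in the comment above concrete, here is a minimal sketch that wraps the quoted expression in a free function. The function name `smoothingSize` and the sample values are assumptions for illustration; only the expression itself is taken from the comment.

```cpp
#include <algorithm>

// Hypothetical free function mirroring the expression quoted in the comment.
// distance:              distance map value at the pixel
// normal_smoothing_size: user-set smoothing size
// depth:                 depth of the pixel in meters
float smoothingSize (float distance, float normal_smoothing_size, float depth)
{
  // The depth-dependent addend is depth / 10, so it stays below 1
  // for any depth under 10 m.
  return (std::min) (distance * 2.0f + 1.0f, normal_smoothing_size + depth / 10.0f);
}
```

For example, `smoothingSize (100.0f, 10.0f, 5.0f)` evaluates to 10.5, and truncating that to an integer gives 10, the same value as without the depth term. Close to a discontinuity, `smoothingSize (1.0f, 10.0f, 5.0f)` evaluates to 3, because the distance term wins the `min`.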


Hi Sergey, Sorry for the slow response! I've been super busy lately. D * 2 + 1 is what you want. Think of the discontinuity as occurring at the edge between pixels, and the distances are from the edge of the current pixel to that discontinuity. Then a distance of 1 means we can get away with a 3x3 pixel square. It makes sense that using D*2-1 would fix it, but only because it's hiding a bug elsewhere. Regarding the casting that I think may be causing trouble, I'm referring to the static_cast in the line where the smoothing is passed to setRectSize. Try a round instead. The cast you added looks fine.
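The two points in this comment (window side of D * 2 + 1, and rounding instead of truncating) can be illustrated with a small sketch. The helper names below are hypothetical and are not part of PCL; the sketch only contrasts `static_cast<int>` with `std::lround` on a floating-point smoothing value.

```cpp
#include <cmath>

// Hypothetical helper: side length in pixels of the square window centered
// on a pixel whose distance to the nearest discontinuity is `distance`.
int windowSide (int distance)
{
  return 2 * distance + 1;
}

// Truncation drops the fractional part, so 2.9 becomes 2.
int truncatedSize (float smoothing)
{
  return static_cast<int> (smoothing);
}

// Rounding picks the nearest integer, so 2.9 becomes 3.
int roundedSize (float smoothing)
{
  return static_cast<int> (std::lround (smoothing));
}
```

A distance of 1 gives `windowSide (1) == 3`, matching the 3x3 square described above. For a smoothing value of 2.9, `truncatedSize` returns 2 while `roundedSize` returns 3, which is the kind of off-by-one difference the suggestion to "try a round" is aimed at.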


Hi James, Consider the following depth buffer: Assuming 10 is the max depth change, the depth_change map will be: This will yield the following distance map: According to |

Yes, I got that. I added round there, but it did not seem to change anything. In my comment I actually questioned the utility of this whole "depth dependent smoothing" thing. As I said, it boils down to increasing |


The diagonal case is a good example; I hadn't considered that. This seems like a failure of the distance mapping itself, not the window sizing. Specifically, the base-case we are propagating is disobeying its contract. There are a few ways we could fix this:

Both options should fix the base case and inductively the rest of the distance map. The tradeoff is that the first option is slower, but it would sometimes lead to a larger window size where the 2nd case is too conservative. I'm fine with either solution. I don't think this is the cause of the strange artifacts. As the 2nd solution points out, all this bug really does is double the max depth change parameter. It won't let any really severe discontinuities in. |


Also, is there an easy way to run the tests you posted earlier? Are those just existing unit tests? When I have some time I'd like to run them and take a closer look at what might be going wrong. |


What is the consensus on this issue? |


This issue became irrelevant for my work in TOCS so I haven't had enough time/motivation to continue with it. Perhaps I will come to it later, but for now we may put this pull request on hold. |


I'm in a similar situation. This isn't actually a problem for me, it's just

On Mon, Dec 16, 2013 at 9:00 AM, Sergey Alexandrov


This issue is very relevant to my current work. I agree that the proposed fixes result in the improvements discussed above, which I have tested for the COVARIANCE_MATRIX case. Would it be possible to merge this issue in the next version of PCL? |


This pull request is not finished. As you can see, there were some discussions/suggestions/ideas after my initial attempt, but I never got back to work on them. We cannot merge the pull request as is, but you are welcome to address all the issues we discussed and submit a new one :)


I wonder whether this problem will be solved?


This pull request has been automatically marked as stale because it hasn't had Come back whenever you have time. We look forward to your contribution. |


Marking this as stale due to 30 days of inactivity. Commenting or adding a new commit to the pull request will revert this. |

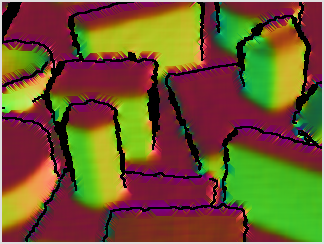

This pull request fixes the bugs in IntegralImageNormalEstimation reported by James Stout in this pcl-dev thread and also introduces a new minimum normal smoothing size parameter.

The introduced fixes seem to play well with:

- COVARIANCE_MATRIX
- SIMPLE_3D_GRADIENT
- AVERAGE_DEPTH_CHANGE (the green borders are okay for this method)

As for AVERAGE_3D_GRADIENT, there are weird artifacts. Unfortunately I did not have time to dig deeper into this problem.