Various methods of scraping data from websites are explored here.

Workflows for scraping data from a web page in XML or HTML format are outlined.

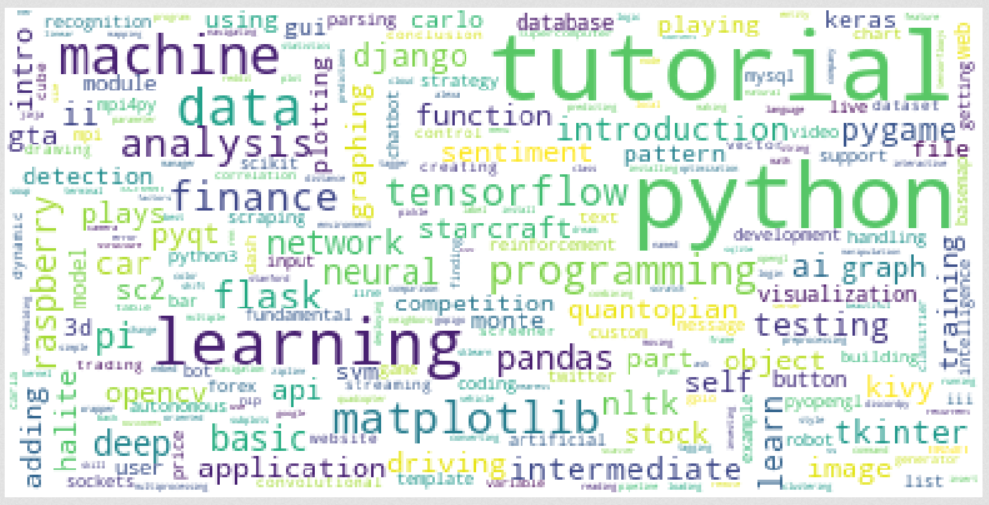

URLs available from the XML page were extracted and then processed so that they could be visualized as a word cloud.
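The extraction and processing step might look roughly like the sketch below. It assumes the URLs sit in `<loc>` elements without an XML namespace; the sample document, the `extract_urls` and `url_words` helpers, and the example.com addresses are all hypothetical stand-ins, not the original code.

```python
import re
import xml.etree.ElementTree as ET
from collections import Counter

# Hypothetical sample standing in for the scraped XML page.
SAMPLE_XML = """<urlset>
  <url><loc>https://example.com/blog/web-scraping-basics</loc></url>
  <url><loc>https://example.com/blog/scraping-with-python</loc></url>
  <url><loc>https://example.com/data/python-tables</loc></url>
</urlset>"""


def extract_urls(xml_text):
    """Return the text of every <loc> element (assumed tag name)."""
    root = ET.fromstring(xml_text)
    return [el.text.strip() for el in root.iter("loc") if el.text]


def url_words(urls):
    """Split each URL path into lowercase words, dropping scheme and host."""
    words = []
    for url in urls:
        path = re.sub(r"^https?://[^/]+", "", url)
        words.extend(w.lower() for w in re.findall(r"[A-Za-z]+", path))
    return words


urls = extract_urls(SAMPLE_XML)
# Word counts are the usual input for a word cloud.
frequencies = Counter(url_words(urls))
```

If the third-party wordcloud package is used for the visualization, a mapping like `frequencies` can be passed to `WordCloud().generate_from_frequencies(frequencies)`.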

A table with information on diabetes occurrences in children was extracted with the BeautifulSoup library and read into Python as a DataFrame.
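A minimal sketch of that step, assuming the table can be located by an `id` attribute and that its first row holds the headers. The HTML below is a hypothetical stand-in with placeholder values, not the real data, and `table_to_dataframe` is an illustrative helper name.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Hypothetical stand-in for the scraped page; the numbers are placeholders.
SAMPLE_HTML = """
<table id="cases">
  <tr><th>Age group</th><th>Cases</th></tr>
  <tr><td>0-4</td><td>10</td></tr>
  <tr><td>5-9</td><td>20</td></tr>
</table>
"""


def table_to_dataframe(html, table_id):
    """Parse one HTML table into a DataFrame, using the first row as headers."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id=table_id)
    rows = [
        [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        for tr in table.find_all("tr")
    ]
    header, body = rows[0], rows[1:]
    return pd.DataFrame(body, columns=header)


df = table_to_dataframe(SAMPLE_HTML, "cases")
# Cell text arrives as strings, so numeric columns need an explicit conversion.
df["Cases"] = pd.to_numeric(df["Cases"])
```

Parsing the rows by hand gives full control over which cells are kept, at the cost of more code than a one-call table reader.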

A Python script was written that uses the read_html function from the pandas library to read a table from a web page automatically. The script also writes the resulting table to a CSV file.
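Such a script could be as short as the sketch below. It assumes the table of interest is the first one on the page and that an HTML parser usable by pandas (for example lxml) is installed; `save_first_table` and the command-line arguments are hypothetical choices, not the original script.

```python
import sys

import pandas as pd


def save_first_table(source, csv_path):
    """Read the first HTML table found in `source` and write it to a CSV file.

    `source` may be a URL, a file path, or a file-like object.
    """
    tables = pd.read_html(source)  # returns a list of DataFrames
    table = tables[0]              # assumption: the first table is the one wanted
    table.to_csv(csv_path, index=False)
    return table


# Example invocation: python script.py <page-url> <output.csv>
if __name__ == "__main__" and len(sys.argv) == 3:
    save_first_table(sys.argv[1], sys.argv[2])
```

Taking the URL and output path as arguments keeps the script reusable from a scheduler or batch file without editing the code.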

A batch file that runs the script was then created and scheduled with Task Scheduler to run at weekly intervals, which keeps the table as up to date as possible.

A workflow for using APIs to retrieve JSON responses, and then working with them in Python to acquire information on the International Space Station (ISS), is also outlined.
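As an illustration of that workflow, the sketch below requests a JSON response and pulls out the station's current position. The API used is not named here, so the public Open Notify endpoint is an assumption, and `parse_iss_position` and `fetch_iss_position` are hypothetical helper names.

```python
import json
from urllib.request import urlopen

# Assumption: the public Open Notify endpoint; the API actually used is not stated.
ISS_NOW_URL = "http://api.open-notify.org/iss-now.json"


def parse_iss_position(payload):
    """Extract (latitude, longitude) as floats from a JSON response string."""
    data = json.loads(payload)
    position = data["iss_position"]
    return float(position["latitude"]), float(position["longitude"])


def fetch_iss_position(url=ISS_NOW_URL, timeout=10):
    """Request the current position from the API and parse the response."""
    with urlopen(url, timeout=timeout) as response:
        return parse_iss_position(response.read().decode("utf-8"))


# Offline example shaped like an Open Notify response (values are made up).
sample = (
    '{"message": "success", "timestamp": 0, '
    '"iss_position": {"latitude": "12.5", "longitude": "-45.25"}}'
)
latitude, longitude = parse_iss_position(sample)
```

Keeping the parsing separate from the network call means the JSON handling can be checked against a saved response without hitting the API.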