This project lets you fine-tune Mask-RCNN on masks annotated with labelme, so you can train Mask-RCNN on any categories you want to annotate. It also comes with several pretrained models, trained either on custom datasets or on subsets of COCO.
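Mask-RCNN learns from one binary mask per object instance, while labelme stores each instance as a polygon (a `points` list of `[x, y]` pairs inside each entry of a JSON file's `shapes` list). The sketch below shows one common way to turn such a polygon into a mask: an even-odd point-in-polygon test at every pixel centre, using only the standard library. It illustrates the general technique and is an assumption, not this project's own data loader; `polygon_to_mask` and `point_in_polygon` are hypothetical names.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Even-odd ray casting: count crossings of a ray going right from (x, y)."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge meets that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def polygon_to_mask(polygon: Sequence[Point], height: int, width: int) -> List[List[int]]:
    """Rasterise a labelme-style polygon into a height x width mask of 0/1 values.

    A pixel is set when its centre (col + 0.5, row + 0.5) lies inside the polygon.
    """
    return [
        [1 if point_in_polygon(col + 0.5, row + 0.5, polygon) else 0 for col in range(width)]
        for row in range(height)
    ]


if __name__ == "__main__":
    # A square from (1, 1) to (5, 5) covers pixels 1..4 in each direction of a 6x6 image.
    square = [(1, 1), (5, 1), (5, 5), (1, 5)]
    for row in polygon_to_mask(square, 6, 6):
        print("".join(str(v) for v in row))
```

Sampling pixel centres keeps the rule simple and symmetric; a real loader would typically use a vectorised rasteriser (for example from PIL or OpenCV) for speed.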

The pretrained models are stored in the repo with git-lfs, so after cloning make sure you've pulled the files by running:

git lfs pull

Alternatively, download them from GitHub directly. This project uses conda to manage its environment; once conda is installed, create the environment and activate it:

conda env create -f enviroment.yml

conda activate object_detection

On Windows, PowerShell needs to be initialised and its execution policy modified:

conda init powershell

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

The pretrained models can be found in the pretrained directory. To infer objects and instance masks on your images, run evaluate_images.py:

# to display the output

python evaluate_images.py --images ~/Pictures/ --model pretrained/model_mask_rcnn_skin_30.pth --display

# to save the output

python evaluate_images.py --images ~/Pictures/ --model pretrained/model_mask_rcnn_skin_30.pth --save

The pizza topping model was trained on a custom dataset of 89 images taken from COCO, to which pizza topping annotations were added. There are very few images of each type of topping, so this model performs poorly and needs many more images to behave well.

- chilli, ham, jalapenos, mozzarella, mushrooms, olive, pepperoni, pineapple, salad, tomato
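The pizza model's weakness comes down to how few examples each category has, so it is worth checking the per-category counts of your own dataset before training. A minimal sketch, assuming labelme's usual layout of one JSON file per image whose `shapes` list holds one entry (with a `label`) per annotated instance; `count_labels` is a hypothetical helper, not part of this project.

```python
import json
import sys
from collections import Counter
from pathlib import Path


def count_labels(dataset_dir: str) -> Counter:
    """Count annotated instances per label over every labelme JSON file in dataset_dir."""
    counts: Counter = Counter()
    for json_path in sorted(Path(dataset_dir).expanduser().glob("*.json")):
        with open(json_path, encoding="utf-8") as f:
            annotation = json.load(f)
        # Each entry in "shapes" is one annotated instance (polygon, rectangle, ...).
        for shape in annotation.get("shapes", []):
            counts[shape["label"]] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python count_labels.py ~/datasets/my_cat_images_val
    for label, n in count_labels(sys.argv[1]).most_common():
        print(f"{label}: {n}")
```

Categories with only a handful of instances are the ones most likely to need more annotated images.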

The cat and bird model was trained on annotated images of cats and birds taken from COCO with the extract_from_coco script.

- cat, bird

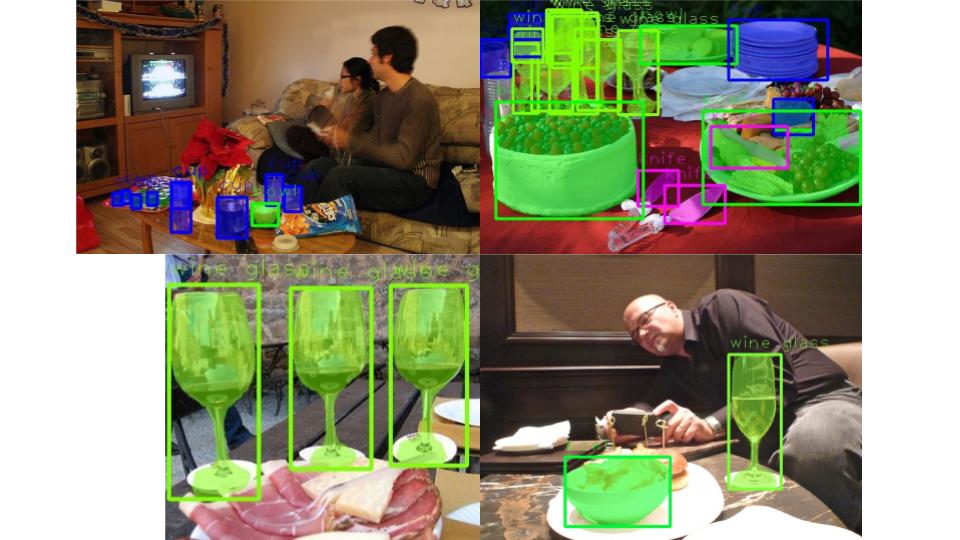
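Conceptually, pulling a category subset out of COCO means mapping category names to ids through the instances file's `categories` list, then keeping every image that has at least one matching entry in `annotations`. The sketch below works on an already-parsed COCO instances dict (for example `instances_val2017.json` loaded with `json.load`); it illustrates the idea and is not the code inside extract_from_coco.py. `select_images` and `image_files` are hypothetical names.

```python
from typing import Dict, Iterable, List


def select_images(coco: dict, category_names: Iterable[str], skip_crowd: bool = True) -> Dict[int, List[dict]]:
    """Map image id -> annotations whose category name is in category_names.

    Crowd annotations (iscrowd=1) are stored as RLE rather than polygons, so they
    are skipped by default.
    """
    wanted = set(category_names)
    # Annotations refer to categories by numeric id, not by name.
    wanted_ids = {c["id"] for c in coco["categories"] if c["name"] in wanted}
    selected: Dict[int, List[dict]] = {}
    for ann in coco["annotations"]:
        if ann["category_id"] not in wanted_ids:
            continue
        if skip_crowd and ann.get("iscrowd", 0):
            continue
        selected.setdefault(ann["image_id"], []).append(ann)
    return selected


def image_files(coco: dict, image_ids: Iterable[int]) -> List[str]:
    """Look up the file names of the selected images."""
    by_id = {img["id"]: img["file_name"] for img in coco["images"]}
    return [by_id[i] for i in image_ids]
```

Grouping by image id keeps every matching instance of an image together, which is what a per-image labelme file needs.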

The tableware model was trained on annotated images of knives, forks, spoons and other tableware taken from COCO with the extract_from_coco script.

- knife, bowl, cup, bottle, wine glass, fork, spoon, dining table
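Once the matching COCO annotations are found, each polygon has to become a labelme shape. COCO stores a polygon as a flat list `[x1, y1, x2, y2, ...]`, and an instance split into several pieces has several such lists, whereas labelme stores `points` as `[x, y]` pairs. A minimal sketch of that conversion, assuming the commonly seen labelme keys (`shapes`, `imagePath`, `imageData`, `imageHeight`, `imageWidth`, and per shape `label`, `points`, `group_id`, `shape_type`, `flags`); the function names are hypothetical, and the project's converter may write further fields such as a labelme `version`.

```python
from typing import List


def coco_polygons_to_shapes(annotation: dict, label: str, group_id: int) -> List[dict]:
    """Convert one COCO polygon annotation into labelme 'polygon' shapes.

    All pieces of one instance share group_id, labelme's way of grouping shapes.
    RLE (crowd) segmentations are dicts rather than polygon lists and are skipped.
    """
    segmentation = annotation["segmentation"]
    if isinstance(segmentation, dict):
        return []
    shapes = []
    for flat in segmentation:
        # Pair consecutive values into [x, y] points.
        points = [[flat[i], flat[i + 1]] for i in range(0, len(flat) - 1, 2)]
        if len(points) < 3:
            continue  # fewer than three points is not a polygon
        shapes.append({
            "label": label,
            "points": points,
            "group_id": group_id,
            "shape_type": "polygon",
            "flags": {},
        })
    return shapes


def labelme_document(image_file: str, height: int, width: int, shapes: List[dict]) -> dict:
    """Assemble a minimal labelme-style annotation dict for one image (no embedded image data)."""
    return {
        "flags": {},
        "shapes": shapes,
        "imagePath": image_file,
        "imageData": None,
        "imageHeight": height,
        "imageWidth": width,
    }
```

Writing the result with `json.dump` next to the image gives a file in the same shape as one saved by labelme, which is the format the training scripts consume.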

To train a new model, you can create labelme annotations for your own images. To launch labelme, run:

labelme

and start annotating your images! You'll need a couple of hundred. Alternatively, if your categories are already in COCO, you can run the conversion tool to create labelme annotations from them:

python extract_from_coco.py --images ~/datasets/coco/val2017 --annotations ~/datasets/coco/annotations/instances_val2017.json --output ~/datasets/my_cat_images_val --categories cat

Once you've got a directory of labelme annotations, you can check how the images will be shown to the model during training by running:

python check_dataset.py --dataset ~/datasets/my_cat_images_val

# to show our dataset with training augmentation

python check_dataset.py --dataset ~/datasets/my_cat_images_val --use-augmentation

If you're happy with the images and how they'll appear in training, train the model using:

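python train.py --train ~/datasets/my_cat_images_train --val ~/datasets/my_cat_images_val --model-tag mask_rcnn_cat

The training directory is prepared the same way as the validation one; for COCO categories, that means running extract_from_coco.py on the train2017 images and instances_train2017.json. Training may take some time depending on how many images you have. TensorBoard logs are written to the logs directory (view them with `tensorboard --logdir logs`). To run your trained model on a directory of images, run: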

# to display the output

python evaluate_images.py --images ~/Pictures/my_cat_imgs --model models/model_mask_rcnn_cat_30.pth --display

# to save the output

python evaluate_images.py --images ~/Pictures/my_cat_imgs --model models/model_mask_rcnn_cat_30.pth --save
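The checkpoint names used above (`model_mask_rcnn_skin_30.pth`, `model_mask_rcnn_cat_30.pth`) suggest a `model_<model-tag>_<number>.pth` pattern, where the trailing number appears to be an epoch count. Assuming that pattern holds (it is inferred from the file names, not documented here), a small helper can pick the highest-numbered checkpoint for a tag to pass to `--model`; `latest_checkpoint` is a hypothetical name.

```python
import re
from pathlib import Path
from typing import Optional


def latest_checkpoint(models_dir: str, model_tag: str) -> Optional[Path]:
    """Return the model_<tag>_<N>.pth file with the largest N, or None if none exists.

    The naming pattern is an assumption inferred from the example file names.
    """
    pattern = re.compile(rf"^model_{re.escape(model_tag)}_(\d+)\.pth$")
    best: Optional[Path] = None
    best_n = -1
    for path in Path(models_dir).expanduser().iterdir():
        match = pattern.match(path.name)
        # Compare the number as an integer so that _30 beats _9.
        if match and int(match.group(1)) > best_n:
            best, best_n = path, int(match.group(1))
    return best
```

For example, `latest_checkpoint("models", "mask_rcnn_cat")` would return `models/model_mask_rcnn_cat_30.pth` if that is the highest-numbered checkpoint saved for the tag.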