-

Notifications

You must be signed in to change notification settings - Fork 125

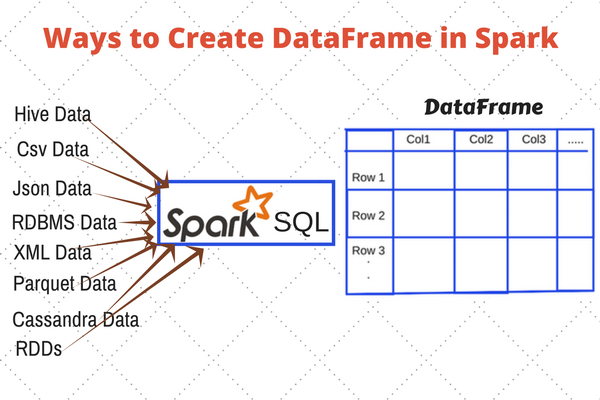

Understanding DataFrames

A DataFrame is an immutable distributed collection of data that is organized into named columns analogous to a table in a relational database. Introduced as an experimental feature within Apache Spark 1.0 as SchemaRDD, they were renamed to DataFrames as part of the Apache Spark 1.3 release. For readers who are familiar with Python Pandas DataFrame or R DataFrame, a Spark DataFrame is a similar concept in that it allows users to easily work with structured data (for example, data tables); there are some differences as well so please temper your expectations.

By imposing a structure onto a distributed collection of data, this allows Spark users to query structured data in Spark SQL or using expression methods (instead of lambdas)

In Apache Spark, a DataFrame is a distributed collection of rows under named columns. In simple terms, it is same as a table in relational database or an Excel sheet with Column headers. It also shares some common characteristics with RDD:

- Immutable in nature : We can create DataFrame / RDD once but can’t change it. And we can transform a DataFrame / RDD after applying transformations.

- Lazy Evaluations: Which means that a task is not executed until an action is performed.

- Distributed: RDD and DataFrame both are distributed in nature.

- DataFrames are designed for processing large collection of structured or semi-structured data.

- Observations in Spark DataFrame are organised under named columns, which helps Apache Spark to understand the schema of a * DataFrame. This helps Spark optimize execution plan on these queries.

- DataFrame in Apache Spark has the ability to handle petabytes of data.

- DataFrame has a support for wide range of data format and sources.

- It has API support for different languages like Python, R, Scala, Java.

- Understanding Spark

- Spark Jobs & API

- Architecture

- RDD Internals

- Creating RDD

- Understanding Deployment & Program Behaviour

- RDD Transformation & Action

- Assignments 1

- Best Practices -1

- Introduction to DataFrame

- PySpark SQL

- Pandas to DataFrames

- Machine Learning with PySpark

- Transformers

- Estimators

- Spark Streaming

- Structured Streaming

- GraphX & GraphFrames

- Data Processing Architectures

- Problems