
Architect

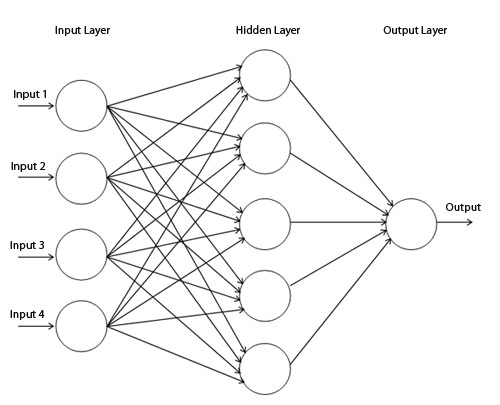

Perceptron

The Perceptron architecture lets you create multilayer perceptrons, also known as feed-forward neural networks. They consist of a sequence of layers, each fully connected to the next one.
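To make "each fully connected to the next one" concrete, here is a minimal plain JavaScript sketch of a multilayer perceptron forward pass. It is not Synaptic's implementation: the logistic activation, the layer representation and the helper names (`activateLayer`, `feedForward`, `buildLayers`, `countConnections`) are assumptions made for illustration.

```javascript
// Minimal multilayer perceptron forward pass in plain JavaScript.
// Assumptions: logistic activation and caller-supplied weights/biases.
// Illustrates "each layer fully connected to the next"; not Synaptic's code.

function logistic(x) {
  return 1 / (1 + Math.exp(-x));
}

// layer.weights[j][i] is the weight from input i to neuron j of this layer.
function activateLayer(layer, inputs) {
  return layer.weights.map((row, j) => {
    let sum = layer.biases[j];
    row.forEach((w, i) => {
      sum += w * inputs[i];
    });
    return logistic(sum);
  });
}

// Pass the input through every layer in order.
function feedForward(layers, input) {
  return layers.reduce((activations, layer) => activateLayer(layer, activations), input);
}

// Zero-initialised layers for sizes such as [2, 3, 1]: one weight per
// (previous neuron, next neuron) pair and one bias per non-input neuron.
function buildLayers(sizes) {
  const layers = [];
  for (let k = 1; k < sizes.length; k++) {
    const weights = Array.from({ length: sizes[k] }, () => new Array(sizes[k - 1]).fill(0));
    layers.push({ weights, biases: new Array(sizes[k]).fill(0) });
  }
  return layers;
}

// Number of connections between consecutive fully connected layers.
function countConnections(sizes) {
  let total = 0;
  for (let k = 1; k < sizes.length; k++) total += sizes[k - 1] * sizes[k];
  return total;
}

const mlp = buildLayers([2, 3, 1]);
console.log(feedForward(mlp, [1, 0])); // [0.5], since all weights and biases are zero
console.log(countConnections([2, 3, 1])); // 9
```

Because every neuron in one layer connects to every neuron in the next, the connection count is the sum of products of consecutive layer sizes: 2·3 + 3·1 = 9 for a 2-3-1 network.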

You must provide at least 3 layers (input, hidden and output), but you can use as many hidden layers as you wish. This is a perceptron with 2 neurons in the input layer, 3 neurons in the hidden layer, and 1 neuron in the output layer:

```javascript
var synaptic = require('synaptic');
var Architect = synaptic.Architect;

var myPerceptron = new Architect.Perceptron(2, 3, 1);
```

And this is a deep multilayer perceptron with 2 neurons in the input layer, 4 hidden layers with 10 neurons each, and 1 neuron in the output layer:

```javascript
var myPerceptron = new Architect.Perceptron(2, 10, 10, 10, 10, 1);
```

LSTM

Long short-term memory (LSTM) is an architecture well suited to learning from experience to classify, process and predict time series when there are very long time lags of unknown size between important events.

To use this architecture, you must set at least one input layer, one memory block assembly (consisting of four layers: input gate, memory cell, forget gate and output gate), and an output layer. For example, this network has 2 inputs, one memory block assembly with 6 memory cells, and 1 output:

```javascript
var myLSTM = new Architect.LSTM(2, 6, 1);
```

You can also stack several layers of memory blocks:

```javascript
var myLSTM = new Architect.LSTM(2, 4, 4, 4, 1);
```

This LSTM network has 3 memory block assemblies, each with 4 memory cells and its own input gates, forget gates and output gates. Note that each block still receives input from both the previous layer AND the input layer. If you want a more sequential architecture, check out Neataptic, which also includes an option to clear the network during training, making it better suited to sequence prediction.
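To illustrate what the gates of a memory block do, the following sketch runs one textbook LSTM memory cell (input gate, forget gate, output gate and cell state) on a scalar input. It is a simplified illustration under assumptions: there are no peephole connections, and the parameter names (`wi`, `bf`, and so on) and helpers (`lstmStep`, `runSequence`) are hypothetical rather than part of Synaptic.

```javascript
// One time step of a single LSTM memory cell with scalar input, using the
// textbook gates (input, forget, output) without peephole connections.
// Parameter names (wi, ui, bi, ...) are hypothetical; not Synaptic's wiring.

function logistic(x) {
  return 1 / (1 + Math.exp(-x));
}

function lstmStep(p, x, hPrev, cPrev) {
  const i = logistic(p.wi * x + p.ui * hPrev + p.bi); // input gate
  const f = logistic(p.wf * x + p.uf * hPrev + p.bf); // forget gate
  const o = logistic(p.wo * x + p.uo * hPrev + p.bo); // output gate
  const g = Math.tanh(p.wc * x + p.uc * hPrev + p.bc); // candidate cell input
  const c = f * cPrev + i * g; // memory cell: keep part of the old state, add gated input
  const h = o * Math.tanh(c); // cell output, scaled by the output gate
  return { h, c };
}

// Run a sequence from an initial state and return the final state.
function runSequence(p, xs, initial = { h: 0, c: 0 }) {
  let state = initial;
  for (const x of xs) state = lstmStep(p, x, state.h, state.c);
  return state;
}

// Forget gate saturated open, input gate saturated closed: the cell holds its value.
const hold = { wi: 0, ui: 0, bi: -100, wf: 0, uf: 0, bf: 100, wo: 0, uo: 0, bo: 0, wc: 1, uc: 0, bc: 0 };
console.log(runSequence(hold, new Array(50).fill(3), { h: 0, c: 1 }).c); // 1
```

With the forget gate held open and the input gate held shut, the cell state is carried unchanged across many steps. This is the mechanism that lets an LSTM bridge long time lags between important events.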

Liquid

The Liquid architecture lets you create Liquid State Machines. In these networks, neurons are randomly connected to each other. The recurrent nature of the connections turns the time-varying input into a spatio-temporal pattern of activations in the network nodes.
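The random, recurrent wiring of the pool can be sketched in a few lines of plain JavaScript. This is a hypothetical illustration, not Synaptic's `Liquid` code: the helper names (`buildPool`, `stepPool`, `runPool`), the uniform weight range, the choice to feed the input into neuron 0, and the `tanh` update are all assumptions, and gates are left out.

```javascript
// Hypothetical liquid-pool sketch: `size` neurons joined by `connections`
// random directed links (self-loops and cycles allowed), updated with tanh.
// Gates are omitted; this is not Synaptic's Liquid implementation.

function buildPool(size, connections, rand = Math.random) {
  const links = [];
  for (let k = 0; k < connections; k++) {
    links.push({
      from: Math.floor(rand() * size),
      to: Math.floor(rand() * size),
      weight: rand() * 2 - 1, // uniform in [-1, 1)
    });
  }
  return links;
}

// One time step: neuron 0 receives the external input (an arbitrary choice
// for this sketch); every neuron also sums weighted activity along its links.
function stepPool(size, links, state, input) {
  const sums = new Array(size).fill(0);
  sums[0] += input;
  for (const { from, to, weight } of links) sums[to] += weight * state[from];
  return sums.map((s) => Math.tanh(s));
}

// Feed a time series; because links are recurrent, the final state can
// reflect earlier inputs, not only the last one.
function runPool(size, links, inputs) {
  let state = new Array(size).fill(0);
  for (const x of inputs) state = stepPool(size, links, state, x);
  return state;
}

const liquidLinks = buildPool(20, 30);
const finalState = runPool(20, liquidLinks, [1, 0, 0.5, 0]);
console.log(finalState.length); // 20
```

In a full liquid state machine, a trained readout layer would map this pool state to the outputs; the sketch stops at the pool.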

To use this architecture, set the size of the input layer, the size of the pool, the size of the output layer, the number of random connections in the pool, and the number of random gates among those connections:

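```javascript
var input = 2;        // neurons in the input layer
var pool = 20;        // neurons in the randomly connected pool
var output = 1;       // neurons in the output layer
var connections = 30; // random connections inside the pool
var gates = 10;       // random gates among those connections

var myLiquidStateMachine = new Architect.Liquid(input, pool, output, connections, gates);
```

Hopfield

The Hopfield architecture serves as a content-addressable memory. A Hopfield network is trained to remember a set of patterns; when you then feed it a new pattern, it returns the most similar of the patterns it was trained to remember.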
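The "return the most similar stored pattern" behaviour can be sketched with the classic Hopfield formulation: Hebbian weights plus repeated sign updates. This is an illustration under stated assumptions; Synaptic's `learn` may train its weights differently, and `hebbianWeights`, `recall` and `toBipolar` are hypothetical helpers. It uses the same two 10-bit patterns as the Synaptic example.

```javascript
// Classic Hopfield network sketch: Hebbian weights + synchronous sign updates.
// Patterns use 0/1 like the Synaptic example and are mapped to -1/+1 internally.
// Illustrative only; not how Synaptic's Hopfield learn/feed are implemented.

function toBipolar(bits) {
  return bits.map((b) => (b ? 1 : -1));
}

function hebbianWeights(patterns) {
  const n = patterns[0].length;
  const w = Array.from({ length: n }, () => new Array(n).fill(0));
  for (const p of patterns.map(toBipolar)) {
    for (let i = 0; i < n; i++) {
      for (let j = 0; j < n; j++) {
        if (i !== j) w[i][j] += p[i] * p[j]; // symmetric, no self-connections
      }
    }
  }
  return w;
}

function recall(w, bits, maxSteps = 100) {
  let state = toBipolar(bits);
  for (let step = 0; step < maxSteps; step++) {
    const next = state.map((s, i) => {
      let h = 0;
      for (let j = 0; j < state.length; j++) h += w[i][j] * state[j];
      return h > 0 ? 1 : h < 0 ? -1 : s; // keep the current value on a tie
    });
    const changed = next.some((v, i) => v !== state[i]);
    state = next;
    if (!changed) break; // reached a stable pattern
  }
  return state.map((s) => (s > 0 ? 1 : 0));
}

const memory = hebbianWeights([
  [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
  [1, 1, 1, 1, 1, 0, 0, 0, 0, 0],
]);
console.log(recall(memory, [0, 1, 0, 1, 0, 1, 0, 1, 1, 1])); // [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
console.log(recall(memory, [1, 1, 1, 1, 1, 0, 0, 1, 0, 0])); // [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
```

Updates here are synchronous for brevity. The textbook asynchronous update (one neuron at a time) is the variant with a convergence guarantee, so the sketch caps the number of steps with `maxSteps`.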

```javascript
var hopfield = new Architect.Hopfield(10); // create a network for 10-bit patterns

// teach the network two different patterns
hopfield.learn([
  [0, 1, 0, 1, 0, 1, 0, 1, 0, 1],
  [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
]);

// feed new patterns to the network; it returns the most similar of the patterns it was trained to remember
hopfield.feed([0, 1, 0, 1, 0, 1, 0, 1, 1, 1]); // [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]
hopfield.feed([1, 1, 1, 1, 1, 0, 0, 1, 0, 0]); // [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
```

You can create your own architectures by extending the Network class. See the Examples section for more information.