The Rock Paper Scissors game project provides infrastructure, REST and gRPC APIs for the Rock Paper Scissors game.

The Rock Paper Scissors game project uses CQRS pattern. CQRS is an architectural pattern that can help maximize performance, scalability, and security. The pattern separates operations that read data from those operations that write data.

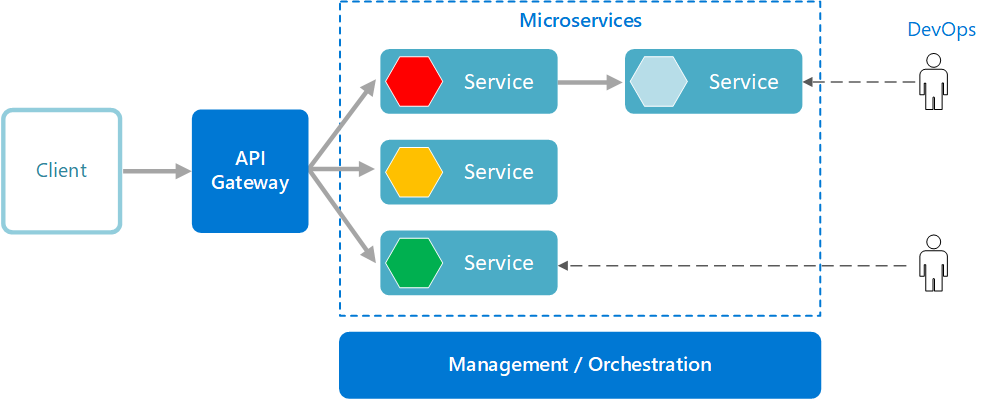

Source: Architecting Cloud Native .NET Applications for Azure

- Rock Paper Scissors game command microservice

- Rock Paper Scissors game query microservice

- Score command microservice

- Score query microservice

- Java 11 or higher

- OpenJDK 11 or higher

- Maven 3.6.3 or higher

- Spring Boot 2.6.1

- Lombok 1.18.20

- MapStruct

- Apache ZooKeeper 3.8.0

- Apache Kafka 2.7.0

- MongoDB NoSQL 4.4.22

- MariaDB Community Server 10.6.14

- H2 Database Engine

- OpenAPI 3.0

- gRPC framework 1.32.1

- Hibernate Validator

- Micrometer 1.8.0

- JUnit 5.8.2

- Mockito 3.9.0

- Spock 2.1

- Apache Groovy 3.0.9

- JaCoCo - unit and integration test coverage

- Flyway - database version control

- Prometheus - metrics database

- Grafana - metrics visualization

- ELK Stack - log aggregation and monitoring in a centralized way

- Redis - cache management

- Keycloak 18.0.0 - identity and access management server

** H2 in-memory database engine is used for it profile only

Local Machine

Microservices active profile is __dev__.

- OpenJDK 11 or higher

- Maven 3.6.0 or higher

- Keycloak 18.0.0

- MongoDB Community Edition

- Mongo Shell

- Apache ZooKeeper 3.8.0

- Apache Kafka 2.7.0

- Redis

- MariaDB Community Server 10.6.14

- Make sure you have OpenJDK 11 or a higher version installed using the following command:

> java -version

Windows 10

You should see the following output:

openjdk version "11.0.19" 2023-04-18

OpenJDK Runtime Environment Temurin-11.0.19+7 (build 11.0.19+7)

OpenJDK 64-Bit Server VM Temurin-11.0.19+7 (build 11.0.19+7, mixed mode)

If not, follow the steps below to install it.

-

Download and extract OpenJDK11U-jdk_x64_windows_hotspot_11.0.19_7.zip archive file for Windows x64 from the Adoptium website.

-

Extract the contents of the OpenJDK11U-jdk_x64_windows_hotspot_11.0.19_7.zip archive file to a directory of your choice. D:\jdks for example.

-

Add/Update user environmental variables. Open a Command Prompt and set the value of the JAVA_HOME environment variable to your Eclipse Temurin OpenJDK 11 for Windows installation path:

> setx JAVA_HOME "D:\jdks\jdk-11.0.19+7"

- Add the bin directory contained in your Eclipse Temurin OpenJDK 11 for Windows installation path to the PATH environment variable:

> setx PATH "%JAVA_HOME%\bin;%PATH%;"

- Add/Update system environmental variables. Open a Command Prompt as Administrator and set the value of the JAVA_HOME environment variable to your Eclipse Temurin OpenJDK 11 for Windows installation path:

> setx -m JAVA_HOME "D:\jdks\jdk-11.0.19+7"

- Add the bin directory contained in your Eclipse Temurin OpenJDK 11 for Windows installation path to the PATH environment variable:

> setx -m PATH "%JAVA_HOME%\bin;%PATH%;"

Note: The setx command permanently updates the environment variables. To add/update system environment variables, you must use the -m switch and open the command prompt using Administrator privilege.

-

Restart the Command Prompt to reload the environment variables.

-

Finally, verify that the JAVA_HOME and PATH environment variables are set and Java is installed:

> echo %JAVA_HOME%

> echo %PATH%

> java -version

Linux Ubuntu 20.04.6 LTS

You should see the following output:

openjdk 11.0.19 2023-04-18

OpenJDK Runtime Environment (build 11.0.19+7-post-Ubuntu-0ubuntu122.04.1)

OpenJDK 64-Bit Server VM (build 11.0.19+7-post-Ubuntu-0ubuntu122.04.1, mixed mode, sharing)

If not, follow the steps below to install it.

- Install the Java Runtime Environment (JRE) from OpenJDK 11 or higher using the following commands:

> sudo apt install default-jre

Note: By default, Ubuntu 20.04 includes OpenJDK 11, which is an open-source variant of the JRE and JDK.

- You can have multiple Java installations on one machine. You can configure which version is the default for use on the command line by using the update-alternatives command:

> sudo update-alternatives --config java

You should see the following output:

There is only one alternative in link group java (providing /usr/bin/java): /usr/lib/jvm/java-11-openjdk-amd64/bin/java

Nothing to configure.

It means that we have only single Java installation, OpenJDK 11, on our machine and it's located at the /usr/lib/jvm/java-11-openjdk-amd64/bin/java directory. Note this directory as you will need it in the next step.

- Then open the /etc/environment file in any text editor, nano for example, using the following command:

> sudo nano /etc/environment

Modifying this file will set the environment variables for all users on your machine.

- At the end of the file, add the following line, making sure to replace Java path with yours obtained in the previous step:

JAVA_HOME="/usr/lib/jvm/java-11-openjdk-amd64"

Note: Do not include the bin/ portion of the Java installation location path to the JAVA_HOME

- Then reload this file to apply the changes to your current session with the following command:

> source /etc/environment

- Finally, verify that the JAVA_HOME environment variable is set and Java is installed:

> echo $JAVA_HOME

> java -version

- Make sure you have Maven or a higher version installed using the following command:

> mvn -version

Windows 10

You should see the following output:

Apache Maven 3.9.3 (21122926829f1ead511c958d89bd2f672198ae9f)

Maven home: D:\maven\apache-maven-3.9.3

Java version: 11.0.19, vendor: Eclipse Adoptium, runtime: D:\jdks\jdk-11.0.19+7

Default locale: ru_RU, platform encoding: Cp1251

OS name: "windows 10", version: "10.0", arch: "amd64", family: "windows"

If not, follow the steps below to install it.

-

Download the apache-maven-3.9.3-bin.zip binary archive file from the Apache Maven Project website.

-

Extract the contents of the apache-maven-3.9.3-bin.zip archive file to a directory of your choice. D:\maven for example.

-

Add/Update user environmental variables. Open a Command Prompt and set the value of the M2_HOME environment variable for Windows installation path:

> setx M2_HOME "D:\maven\apache-maven-3.9.3"

- Add the bin directory contained in your apache-maven-3.9.3-bin.zip for Windows installation path to the PATH environment variable:

> setx PATH "%M2_HOME%\bin;%PATH%;"

- Add/Update system environmental variables. Open a Command Prompt as Administrator and set the value of the M2_HOME environment variable to your apache-maven-3.9.3-bin.zip for Windows installation path:

> setx -m M2_HOME "D:\maven\apache-maven-3.9.3"

- Add the bin directory contained in your apache-maven-3.9.3-bin.zip for Windows installation path to the PATH environment variable:

> setx -m PATH "%M2_HOME%\bin;%PATH%;"

-

Restart the Command Prompt to reload the environment variables.

-

Finally, verify that the M2_HOME and PATH environment variables are set and Maven is installed:

> echo %M2_HOME%

> echo %PATH%

> mvn -version

Linux Ubuntu 20.04.6 LTS

You should see the following output:

Apache Maven 3.9.3 (21122926829f1ead511c958d89bd2f672198ae9f)

Maven home: /opt/apache-maven-3.9.3

Java version: 11.0.19, vendor: Ubuntu, runtime: /usr/lib/jvm/java-11-openjdk-amd64

Default locale: en, platform encoding: UTF-8

OS name: "linux", version: "5.10.102.1-microsoft-standard-wsl2", arch: "amd64", family: "unix"

If not, follow the steps below to install it.

- Install Maven on you Linux Ubuntu machine by executing the following command:

> sudo apt install maven

If you want to install a specific version of Maven, follow steps below.

- Download the apache-maven-3.9.3-bin.tar.gz binary archive file from the Apache Maven Project website.

> wget https://dlcdn.apache.org/maven/maven-3/3.9.3/binaries/apache-maven-3.9.3-bin.tar.gz

- Once the download is completed, extract the downloaded file with the following commands:

> tar -xvzf apache-maven-3.9.3-bin.tar.gz

- Move the extracted files to the /opt directory with the following command:

> sudo mv apache-maven-3.9.3 /opt

- Remove the downloaded archive:

> rm apache-maven-3.9.3-bin.tar.gz

- Then open the /etc/environment file in nano text editor, using the following command:

> sudo nano /etc/environment

- At the end of the file, add the following line:

M2_HOME="/opt/apache-maven-3.9.3"

- Then reload this file to apply the changes to your current session with the following command:

> source /etc/environment

- Verify that the JAVA_HOME environment variable is set:

> echo $M2_HOME

You should see the following output:

/opt/apache-maven-3.9.3

- Add the bin directory contained in maven path to the PATH environment variable:

> export PATH="$M2_HOME/bin:$PATH"

- Then reload this file to apply the changes to your current session with the following command:

> source /etc/environment

- Finally, verify the Maven installation:

> mvn -version

You should see the following output:

Apache Maven 3.9.3 (21122926829f1ead511c958d89bd2f672198ae9f)

Maven home: /opt/apache-maven-3.9.3

Java version: 11.0.19, vendor: Ubuntu, runtime: /usr/lib/jvm/java-11-openjdk-amd64

Default locale: en, platform encoding: UTF-8

OS name: "linux", version: "5.10.102.1-microsoft-standard-wsl2", arch: "amd64", family: "unix"

Docker Compose

Microservices active profile is __docker__.

- Make sure you have Docker Desktop installed using the following command:

> docker -v

You should see the following output:

Docker version 24.0.2, build cb74dfc

- If Docker Desktop is not installed navigate to the docker website download and install it on your local machine.

Windows 10

* Follow the installation instructions below to install [Docker Desktop](https://www.docker.com/products/docker-desktop) on Windows 10 machine:

Linux Ubuntu 20.04.6 LTS

* Follow the installation instructions below to install [Docker Desktop](https://www.docker.com/products/docker-desktop) on Linux Ubuntu machine:

- Docker Compose is also required. Docker Desktop includes Docker Compose along with Docker Engine and Docker CLI which are Compose prerequisites.

- Check if Docker Compose is installed:

> docker compose version

You should see the following output:

Docker Compose version v2.18.1

- Check if Docker Swarm mode is active. To check it, you can simply run the command:

> docker info

And check the status of the Swarm property.

Swarm: inactive

- If it is not active, you can simply run the command:

> docker swarm init

to activate it.

You should see the following output:

Swarm initialized: current node (1ram5oln14qdk23b08eb5iv3q) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-0im35q3hssq4ztnp2ftcq8dvyy4zg3sfhfg0twoo80iu8mhv6s-55g0y3u102p52rrunc6our8ji 192.168.1.106:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

- Launch Docker Desktop:

> systemctl --user start docker-desktop

-

Ensure that sufficient resources have been allocated to Docker Compose.

-

Set COMPOSE_PROJECT_NAME environmental variables:

Windows 10

> setx COMPOSE_PROJECT_NAME "rps-app"

Linux Ubuntu 20.04.6 LTS

> export COMPOSE_PROJECT_NAME=rps-app

> source /etc/environment

Mote: By default, the log file directory is:

Linux Ubuntu 20.04.6 LTS

_/var/lib/docker/containers/<container_id>_

on the host where the container is running.

Kubernetes

Microservices active profile is __prod__.

Make sure that k8s is enabled in the Docker Desktop. If not, click on the Settings icon, then on the Kubernetes tab and check the Enable Kubernetes checkbox.

You can also use minikube for local K8S development.

Make sure Minikube, kubectl and helm are installed.

kubectl installation

Minikube installation

Helm installation

How To Install Minikube on Ubuntu 22.04|20.04|18.04

How To Install Docker On Ubuntu 22.04 | 20.04

Start minikube cluster:

> minikube start \

--addons=ingress,dashboard \

--cni=flannel \

--install-addons=true \

--kubernetes-version=stable \

--vm-driver=docker --wait=false \

--cpus=4 --memory=6g --nodes=1 \

--extra-config=apiserver.service-node-port-range=1-65535 \

--embed-certs \

--no-vtx-check \

--docker-env HTTP_PROXY=https://minikube.sigs.k8s.io/docs/reference/networking/proxy/

Note: The infrastructure clusters require significant resources (CPUs, memory). For example, I have the following server configuration:

OS: Ubuntu 22.04.2 LTS (Jammy Jellyfish)

Processor: Intel Xeon Processor (Icelake) 2GHz 16Mb

vCPU: 4

RAM: 32

Make sure Minikube is up and running with the following command:

> minikube status

You should see the following output:

minikube

type: Control Plane

host: Running

kubelet: Running

apiserver: Running

kubeconfig: Configured

Verify that metrics-server is installed by executing the following command:

> minikube addons list | grep metrics-server

If not, you should see the following output:

| metrics-server | minikube | disabled | Kubernetes

To install metrics-server on your K8S cluster, run:

> minikube addons enable metrics-server

You should see the following output:

You can view the list of minikube maintainers at: https://github.com/kubernetes/minikube/blob/master/OWNERS

- Using image registry.k8s.io/metrics-server/metrics-server:v0.6.3

* The 'metrics-server' addon is enabled

Verify that metrics-server pod is up and running:

> kubectl get pods -n kube-system | grep metrics-server

You should see the following output:

metrics-server-6588d95b98-bdb6x 1/1 Running 0 2m35s

It means that metrics-server is up and running.

Now, if you run the following command:

> kubectl top pod -n rps-app-dev

You should see resources used in specified namespace:

NAME CPU(cores) MEMORY(bytes)

rps-cmd-service-deployment-59bc84c8-bcx4b 1m 573Mi

rps-qry-service-deployment-9b4fbc8f6-vw58g 3m 590Mi

score-cmd-service-deployment-676c56db8-rpfbc 1m 389Mi

Now that you are certain everything is up and running deploy the Kubernetes Dashboard with the command:

> minikube dashboard

If you want to access the K8S Dashboard from outside the cluster, run the following command:

> kubectl proxy --address='0.0.0.0' --accept-hosts='^*$'

And then access the K8S Dashboard in any browser:

http://<ip of your hosting server>:8001/api/v1/namespaces/kubernetes-dashboard/services/http:kubernetes-dashboard:/proxy/#/workloads?namespace=default

Open a Command Prompt and check if access is available for your Minikube cluster:

> kubectl cluster-info

You should see the following output:

Kubernetes control plane is running at https://192.168.49.2:8443

CoreDNS is running at https://192.168.49.2:8443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Check the state of your Minikube cluster:

> kubectl get nodes

The output will list all of a cluster’s nodes and the version of Kubernetes each one is running.

NAME STATUS ROLES AGE VERSION

minikube Ready control-plane 7d14h v1.26.3

You should see a single node in the output called minikube. That’s a full K8S cluster, with a single node.

First, we have to set up our infrastucture (backing services).

Local Machine

- Clone the rps-microservices project to your local machine by executing the following command:

> git clone https://github.com/hokushin118/rps-microservices.git

- Once the cloning is completed, go to the rps-microservices folder by executing the following command:

> cd rps-microservices

- Execute the mvn clean install command in the root directory of the project to build microservices and its dependencies locally.

> mvn clean install

Note: Each microservice and shared dependency should normally be hosted in its own git repository.

Keycloak is an open source authentication server that implements OpenID Connect (OIDC) and OAuth 2.0 standards. It's used to allow SSO (Single Sign-On) with identity and access management aimed at modern applications and services.

The Keycloak exposes endpoints to support standard functionality, including:

- Authorize (authenticate the end user)

- JWT Token (request a token programmatically)

- Discovery (metadata about the server)

- User Info (get user information with a valid access token)

- Device Authorization (used to start device flow authorization)

- Introspection (token validation)

- Revocation (token revocation)

- End Session (trigger single sign-out across all apps)

Keycloak is used to implement the following patterns:

Architecture Diagram

Source: Server Installation and Configuration Guide

In our setup (execpt local machine profile), we use PostgreSQL as a database for Keycloak to persist data such as users, clients and realms, but you can choose any other database from the list below.

Infinispan caches provide flexible, in-memory data stores that you can configure to suit use cases such as:

- Boosting application performance with high-speed local caches.

- Optimizing databases by decreasing the volume of write operations.

- Providing resiliency and durability for consistent data across clusters.

Local Machine

Window 10

-

Download and extract keycloak-18.8.0.zip archive file from the Keycloak website.

-

Import the rps-dev realm from the /infrastructure/keycloak/rps-dev-realm.json file by executing the following command:.

> bin\kc.bat import --dir <path to root directory>\rps-microservices\infrastructure\keycloak\ --override true

You should see the following line in the output:

2023-07-02 16:08:13,347 INFO [org.keycloak.exportimport.util.ImportUtils] (main) Realm 'rps-dev' imported

- To start the Keycloak 18.0.0 in development mode, run the following command:

> bin\kc.bat start-dev --http-port 8180

The Keycloak 18.0.0 will be started in dev mode on port number 8190.

Linux Ubuntu 20.04.6 LTS

* Ensure your system is updated:

> sudo apt update && sudo apt upgrade

- Download the keycloak-18.0.0.tar.gz archive file from the Keycloak website.

> wget https://github.com/keycloak/keycloak/releases/download/18.0.0/keycloak-18.0.0.tar.gz

- Once the download is completed, extract the downloaded file with the following commands:

> tar -xvzf keycloak-18.0.0.tar.gz

- Move the extracted files to the /opt/keycloak directory with the following command:

> sudo mv keycloak-18.0.0 /opt/keycloak

- Remove the downloaded archive:

> rm keycloak-18.0.0.tar.gz

- Then copy the Keycloak configuration file for H2 database with the following command:

> sudo cp ./infrastructure/linux/ubuntu/conf/keycloak.conf /opt/keycloak/conf/keycloak.conf

- Then open the /etc/environment file using the following command:

> sudo nano /etc/environment

- At the end of the file, add the following line and save the changes.

KEYCLOAK_HOME="/opt/keycloak"

- Then reload this file to apply the changes to your current session with the following command:

> source /etc/environment

- Finally, verify that the KEYCLOAK_HOME environment variable is set:

> echo $KEYCLOAK_HOME

You should see the following output:

/opt/keycloak

- Create a separate user account (service account) for the keycloak service using the following commands:

> sudo useradd keycloak -m

> sudo usermod --shell /bin/bash keycloak

Note: It is considered a best practice for using a separate service account for each application. The same can be said about creating a separate group for each service account.

- Add the user to the sudo group for it to have Administrative Privileges using the following command:

> sudo usermod -aG sudo keycloak

- To verify that the keycloak user has been added to the sudo group run the following command:

> id keycloak

You should see the following output:

> uid=998(keycloak) gid=1003(keycloak) groups=1003(keycloak),27(sudo)

It means that the keycloak user belongs to two groups: keycloak and sudo.

- Hide the account from the login screen:

> sudo /var/lib/AccountsService/users/keycloak

and add the following lines to the file:

[User]

SystemAccount=true

- Give the keycloak user ownership of the keycloak files by executing the following command:

> sudo chown -R keycloak:keycloak $KEYCLOAK_HOME

- Import the rps-dev realm from the _ /infrastructure/keycloak/rps-dev-realm.json_ file by executing the following command:

> sudo mkdir -p $KEYCLOAK_HOME/data/import && sudo cp ./infrastructure/keycloak/rps-dev-realm.json $KEYCLOAK_HOME/data/import/rps-dev-realm.json

You should see the following line in the output:

2023-07-02 16:08:13,347 INFO [org.keycloak.exportimport.util.ImportUtils] (main) Realm 'rps-dev' imported

Note: Skip the next step if you are going to run Keycloak 18.0.0 as systemd service.

- To start the Keycloak 18.0.0 in development mode, run the following command:

> sudo $KEYCLOAK_HOME/bin/kc.sh start-dev --import-realm --http-port 8180

The Keycloak 18.0.0 will be started in dev mode on port number 8190.

Note: When running in development mode, Keycloak 18.0.0 uses by default an H2 Database to store its configuration.

- You will need to create a systemd service file to manage the Keycloak service. You can copy the sample systemd service with the following command:

> sudo cp ./infrastructure/linux/ubuntu/systemd/keycloak.service /etc/systemd/system/keycloak.service

- Then, reload the systemd daemon to apply the changes by executing the following command:

> systemctl daemon-reload

- Then, start the keycloak service and enable it to start at system reboot by executing the following commands:

> systemctl start keycloak

> systemctl enable keycloak

- You can check the status of the keycloak service with the following command:

> systemctl status keycloak

You should see the following output:

keycloak.service - The Keycloak IAM (Identity and Access Management) service

Loaded: loaded (/etc/systemd/system/keycloak.service; disabled; vendor preset: enabled)

Active: active (running) since Sun 2023-07-09 20:21:14 MSK; 43s ago

Main PID: 128421 (java)

Tasks: 55 (limit: 18682)

Memory: 345.2M

CGroup: /system.slice/keycloak.service

└─128421 java -Xms64m -Xmx512m -XX:MetaspaceSize=96M -XX:MaxMetaspaceSize=256m -Djava.net.preferIPv4Stack=true -Dkc.home.dir=/opt/keycloak/bin/../ -Djboss.server.config.dir=/opt/keycloak/bi>

You can also view the sys logs by executing the following command:

> cat /var/log/syslog

You should see the following lines in the sys log file:

Jul 9 20:27:14 hokushin-Latitude-3520 keycloak[128836]: 2023-07-09 20:27:14,589 WARN [org.keycloak.quarkus.runtime.KeycloakMain] (main) Running the server in development mode. DO NOT use this configuration in production.

- Open http://localhost:8180 and create a super user by filling the form with your preferred username and password.

For example:

| user name | password |

|---|---|

| admin | admin |

- Open Keycloak admin panel, enter super user credentials and make sure that rps-dev realm and test users has successfully been imported.

Keycloak Getting Started

How to export and import Realms in Keycloak

Docker Compose

- Then navigate to the root directory of the RPS Game project on your computer and run the Docker Compose command below to deploy Keycloak 18.0.0 on Docker Compose in the background:

> docker compose -f docker-compose-kc.yml up -d

You should see the following output:

[+] Running 4/4

✔ Network rps_net Created 0.2s

✔ Volume "rps_app_postgresql-data" Created 0.0s

✔ Container rps-app-postgresql-1 Started 2.0s

✔ Container rps-app-keycloak-1 Started 2.1s

- Verify that Keycloak 18.0.0 and PostgreSQL containers are up and running by executing the following command:

> docker compose -f docker-compose-kc.yml ps

You should see the following output:

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

rps-app-keycloak-1 m rps-app-keycloak "/opt/bitnami/keyclo…" keycloak 2 minutes ago Up 2 minutes 0.0.0.0:28080->8080/tcp

rps-app-postgresql-1 bitnami/postgresql:14.2.0-debian-10-r95 "/opt/bitnami/script…" postgresql 2 minutes ago Up 2 minutes 0.0.0.0:15432->5432/tcp

It means that Keycloak 18.0.0 and PostgreSQL containers are up and running.

Note: Make sure that necessary external ports are not in use. If so, kill the processes by executing the following commands:

Windows 10

> netstat -ano | findStr "<necessary external port>"

> tasklist /fi "<pid of the proccess>"

Linux Ubuntu 20.04.6 LTS

> sudo fuser -k <necessary external port>/tcp

- Navigate to the keycloak microservice administration console:

> http://localhost:28080/admin

Enter credentials below:

| user name | password |

|---|---|

| admin | admin |

and make sure that rps-dev realm has been activated.

- When we don't need keycloak container anymore, we can take down containers and delete their corresponding volumes (-v) using the down command below:

> docker compose -f docker-compose-kc.yml down -v

Kubernetes

To create a kube-auth namespace on the K8S cluster, run:

> kubectl apply -f ./k8s/namespaces/kube-auth-ns.yml

To check the status, run:

> kubectl get namespaces --show-labels

You should see the following output:

NAME STATUS AGE LABELS

default Active 10d kubernetes.io/metadata.name=default

ingress-nginx Active 10d app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,kubernetes.io/metadata.name=ingress-nginx

kube-auth Active 2d10h kubernetes.io/metadata.name=kube-auth,name=kube-auth

kube-cache Active 3d16h kubernetes.io/metadata.name=kube-cache,name=kube-cache

kube-db Active 6d19h kubernetes.io/metadata.name=kube-db,name=kube-db

kube-elk Active 18h kubernetes.io/metadata.name=kube-elk,name=kube-elk

kube-kafka Active 23s kubernetes.io/metadata.name=kube-kafka,name=kube-kafka

kube-monitoring Active 29m kubernetes.io/metadata.name=kube-monitoring,name=kube-monitoring

kube-node-lease Active 10d kubernetes.io/metadata.name=kube-node-lease

kube-nosql-db Active 26h kubernetes.io/metadata.name=kube-nosql-db,name=kube-nosql-db

kube-public Active 10d kubernetes.io/metadata.name=kube-public

kube-system Active 10d kubernetes.io/metadata.name=kube-system

kubernetes-dashboard Active 10d addonmanager.kubernetes.io/mode=Reconcile,kubernetes.io/metadata.name=kubernetes-dashboard,kubernetes.io/minikube-addons=dashboard

Add a custom entry to the etc/hosts file using the nano text editor:

> sudo nano /etc/hosts

You should add the following ip address (copied in the previous step) and custom domain to the hosts file:

192.168.49.2 kc.internal

You may check the custom domain name with ping command:

> ping kc.internal

You should see the following output:

PING kc.internal (192.168.49.2) 56(84) bytes of data.

64 bytes from kc.internal (192.168.49.2): icmp_seq=1 ttl=64 time=0.064 ms

64 bytes from kc.internal (192.168.49.2): icmp_seq=2 ttl=64 time=0.048 ms

64 bytes from kc.internal (192.168.49.2): icmp_seq=3 ttl=64 time=0.056 ms

To create a Simple Fanout Ingress for the RPS microservices, run:

> kubectl apply -f ./k8s/ingress/kc-ingress.yml

Make sure the Keycloak ingress has been created:

> kubectl get ingress -n kube-auth

Note: Note for the ingress rule to take effect it needs to be created in the same namespace as the service.

You should see the following output:

NAME CLASS HOSTS ADDRESS PORTS AGE

kc-ingress nginx kc.internal 192.168.49.2 80 12m

To deploy Keycloak on K8S cluster with PostgreSQL database execute the following command:

> helm install keycloak \

--set image.tag=18.0.0-debian-11-r7 \

--set auth.adminUser=admin \

--set auth.adminPassword=admin \

--set auth.managementPassword=admin \

--set postgresql.postgresqlPassword=admin \

--set replicaCount=3 \

oci://registry-1.docker.io/bitnamicharts/keycloak -n kube-auth

Keycloak helm chart parameters

Wait for some time until the chart is deployed. You should see the following output:

Pulled: registry-1.docker.io/bitnamicharts/keycloak:15.1.3

Digest: sha256:0ab81efa3f53a1535b2d8948a365d15518f3c42d094e86e84437b6d54b199796

NAME: keycloak

LAST DEPLOYED: Thu Jun 15 19:59:32 2023

NAMESPACE: kube-auth

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: keycloak

CHART VERSION: 15.1.3

APP VERSION: 21.1.1

** Please be patient while the chart is being deployed **

Keycloak can be accessed through the following DNS name from within your cluster:

keycloak.kube-auth.svc.cluster.local (port 80)

To access Keycloak from outside the cluster execute the following commands:

1. Get the Keycloak URL by running these commands:

export HTTP_SERVICE_PORT=$(kubectl get --namespace kube-auth -o jsonpath="{.spec.ports[?(@.name=='http')].port}" services keycloak)

kubectl port-forward --namespace kube-auth svc/keycloak ${HTTP_SERVICE_PORT}:${HTTP_SERVICE_PORT} &

echo "http://127.0.0.1:${HTTP_SERVICE_PORT}/"

2. Access Keycloak using the obtained URL.

3. Access the Administration Console using the following credentials:

echo Username: user

echo Password: $(kubectl get secret --namespace kube-auth keycloak -o jsonpath="{.data.admin-password}" | base64 -d)

Note the service name displayed in the output, as you will need this in subsequent steps.

keycloak.kube-auth.svc.cluster.local (port 80)

Make sure that the Keycloak cluster is up and running with the following command:

> kubectl get pods -n kube-auth -o wide -w

It will take some time. You can use -w (--watch) flag to start watching updates to deployment.

You should see the following output:

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

keycloak-0 1/1 Running 0 6m51s 10.244.0.14 minikube <none> <none>

keycloak-1 1/1 Running 0 6m51s 10.244.0.14 minikube <none> <none>

keycloak-2 1/1 Running 0 6m51s 10.244.0.14 minikube <none> <none>

keycloak-postgresql-0 1/1 Running 0 6m50s 10.244.0.15 minikube <none> <none>

Note: To access the Keycloak server locally, we have to forward a local port 80 to the Kubernetes node running Keycloak with the following command:

> kubectl port-forward --address 0.0.0.0 service/keycloak 8080:80 -n kube-auth

To access the Keycloak Administration Console, open the following URL in the browser: http://kc.internal/admin

a) Click the word Master in the top-left corner, then click Add realm.

b) Enter rps-dev in the Add realm Name field then click the Create button.

Configure an Open ID Connect (OIDC) Client

Open ID Connect (OIDC) is a modern SSO (Single Sign-On) protocol built on top of the OAuth 2.0 Authorization Framework. Open ID Connect (OIDC) makes use of JWT (JSON Web Token) in the form of identity (contains information about the logged user such as the username and the email) and access (contains access data such as the roles) tokens.

With the new realm created, let's create a client that is an application or group of applications that will authenticate in this Realm.

a) Click Clients menu item in the left navigation bar and then click the Create button.

b) Enter the Client ID. The Client ID is a string used to identify our client. We will use oauth2-proxy.

c) Select the Client Protocol openid-connect from the drop-down menu and click the Save button.

d) From the Access Type drop-down menu, select confidential option. This is the access type for server-side applications.

e) In the Valid Redirect URIs box, you can add multiple URLs that are valid to be redirected after the authentication. If this oauth2-proxy client will be used for multiple applications on your cluster, you can add a wildcard like https://your-domain.com/*. In my configuration, I've added http://rps.internal/* and https://rps.internal/*.

f) Confirm that the Standard Flow Enabled and Direct Access Grants Enabled toggle buttons are enabled. The __ Standard Flow Enabled__ property is used to activate the Authorization Code Flow .

g) Turn on the Service Accounts Enabled toggle button.

h) Turn on the Implicit Flow Enabled toggle button. It's required for OpenAPI 3.0 OAuth 2.0 authentication.

i) Click the Save button to persist changes.

The webpage will automatically be refreshed, and you will see a new tab called Credentials. Click on the __ Credentials__ tab and copy the value of the Secret textbox as you will need this in the next steps.

H0fnsBnCc7Ts22rxhvLcy66s1yvzSRgG

That's it. We have created a client that we can use to authenticate the users visiting our application.

Now when you have the client secret value for OAuth2 Client oauth2-proxy, you can request an access token using the client-credentials grant type, execute the following command:

> curl --location --request POST "http://kc.internal/realms/rps-dev/protocol/openid-connect/token" \

--header "Content-Type: application/x-www-form-urlencoded" \

--data-urlencode "grant_type=client_credentials" \

--data-urlencode "client_id=oauth2-proxy" \

--data-urlencode "client_secret=HVxWhjNes0vU3FyxETpmBcYXyV0WVAgw"

You will get an access token that you can use with Keycloak REST API:

{

"access_token":"eyJhbGciOiJSUz...",

"expires_in":300,

"refresh_expires_in":0,

"token_type":"Bearer",

"not-before-policy":0,

"scope":"profile email"

}

Keycloak Server OIDC URI Endpoints

For example, you can get the user info executing the following command:

> curl --location --request GET "http://kc.internal/realms/rps-dev/protocol/openid-connect/userinfo" \

--header "Content-Type: application/x-www-form-urlencoded" \

--header "Authorization: Bearer <access token obtained in the previous step>" \

--data-urlencode "grant_type=client_credentials" \

--data-urlencode "client_id=oauth2-proxy" \

--data-urlencode "client_secret=HVxWhjNes0vU3FyxETpmBcYXyV0WVAgw"

Groups mapper

a) Select the Mappers tab on the Create Protocol Mapper page, add a new mapper and enter all the groups using the following settings:

b) Enter the Name. We will use groups.

c) From the Mapper Type drop-down menu, select Group Membership option.

d) Enter the Token Claim Name. We will use groups.

e) Turn off the Full group path toggle button.

f) Click the Save button to persist changes.

Audience mapper

a) Select the Mappers tab on the Create Protocol Mapper page, add a new mapper and enter all the groups using the following settings:

b) Enter the Name. We will use audience.

c) From the Mapper Type drop-down menu, select Audience option.

d) From the Included Client Audience drop-down menu, select oauth2-proxy option.

e) Click the Save button to persist changes.

- Create an admin role

a) Click the Roles menu item in the left navigation bar and then click the Add Role button.

b) Enter ROLE_ADMIN as Role Name and click the Save button.

- Create a general user and moderator roles

Repeat the same steps for the ROLE_USER and ROLE_MODERATOR roles.

| role name | description |

|---|---|

| ROLE_ADMIN | Admin user |

| ROLE_MODERATOR | Moderator user |

| ROLE_USER | General user |

In Keycloak , Groups are just a collection of users that you can apply roles and attributes to in one place.

- Create an admin group

a) Click the Groups menu item in the left navigation bar and then click the New button:

b) Enter admins as Name and click the Save button to persist changes.

b) Assign appropriate roles to the created group.

- Create moderators and users groups

Repeat the same steps for moderators and users groups.

| group name | roles |

|---|---|

| admins | ROLE_ADMIN |

| moderators | ROLE_MODERATOR |

| users | ROLE_USER |

- Create an admin user

a) Click the Users menu item in the left navigation bar and then click the Add user button.

b) Enter admin as username, an email address and a password for the new user, and add the user to the users, _ moderators_ and admins groups.

c) Turn on the Email Verified toggle button.

User Details:

| property | value |

|---|---|

| Username | admin |

| [email protected] | |

| User Enabled | ON |

| Email Verified | ON |

| Groups | admins moderators users |

User Credentials:

| property | value |

|---|---|

| Password | admin |

| Temporary | OFF |

d) Set password for the new user.

- Create a general user

Repeat the same steps for test user. Add the test user to the user group only.

User Details:

| property | value |

|---|---|

| Username | test |

| [email protected] | |

| User Enabled | ON |

| Email Verified | ON |

| Groups | users |

User Credentials:

| property | value |

|---|---|

| Password | test |

| Temporary | OFF |

Update the application Keycloak properties in the microservices application-.yml files.

| property | value |

|---|---|

| hostname | kc.internal |

| port | 8180 |

| realm | rps-dev |

| client-id | oauth2-proxy |

| secret | H0fnsBnCc7Ts22rxhvLcy66s1yvzSRgG |

Spring Security - OAuth2 configuration

TODO: grpc security configuration

That's it! Microservices infrastructure backing services is up and running. We can start deploying microservices.

Local Machine

Window 10

-

Download and install MongoDB Community Edition from official website.

-

Download and install Mongo Shell from official website.

Linux Ubuntu 20.04.6 LTS

* Ensure your system is updated:

> sudo apt update && sudo apt upgrade

- First, import GPK key for the MongoDB apt repository on your system using the following command:

> sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 656408E390CFB1F5

Then add MongoDB APT repository url in /etc/apt/sources.list.d/mongodb.list using the following command:

> echo "deb [ arch=amd64,arm64 ] https://repo.mongodb.org/apt/ubuntu focal/mongodb-org/4.4 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.4.list

- And then, install MongoDB on your computer using the following commands:

> sudo apt update

> sudo apt install mongodb-org=4.4.22 mongodb-org-server=4.4.22 mongodb-org-shell=4.4.22 mongodb-org-mongos=4.4.22 mongodb-org-tools=4.4.22

- After installation, MongoDB should start automatically. if not, enable and start it using the following commands:

> sudo systemctl enable mongod.service

> sudo systemctl start mongod.service

- Check the status using the following command:

> sudo systemctl status mongod.service

- Finally, check installed MongoDB version using the following command:

> mongod --version

You should see the following output:

db version v4.4.22

Build Info: {

"version": "4.4.22",

"gitVersion": "fc832685b99221cffb1f5bb5a4ff5ad3e1c416b2",

"openSSLVersion": "OpenSSL 1.1.1f 31 Mar 2020",

"modules": [],

"allocator": "tcmalloc",

"environment": {

"distmod": "ubuntu2004",

"distarch": "x86_64",

"target_arch": "x86_64"

}

}

- Install Mongo Shell on your computer using the following commands:

> sudo apt install mongodb-mongosh=1.1.9

- To prevent unintended upgrades, you can pin the package at the currently installed version:

echo "mongodb-org hold" | sudo dpkg --set-selections

echo "mongodb-org-server hold" | sudo dpkg --set-selections

echo "mongodb-mongosh hold" | sudo dpkg --set-selections

echo "mongodb-org-mongos hold" | sudo dpkg --set-selections

echo "mongodb-org-tools hold" | sudo dpkg --set-selections

- You can stop MongoDB service by executing the following command:

> sudo service mongod stop

Windows 10 and Linux Ubuntu 20.04.6 LTS

- Open the command line tool and type the following command:

> mongosh

You should see the following output:

C:\Users\qdotn>mongosh

Current Mongosh Log ID: 649feb5649fae114f896e903

Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+1.1.9

Using MongoDB: 4.4.22

Using Mongosh: 1.1.9

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

To help improve our products, anonymous usage data is collected and sent to MongoDB periodically (https://www.mongodb.com/legal/privacy-policy).

You can opt-out by running the disableTelemetry() command.

------

The server generated these startup warnings when booting:

2023-06-30T21:50:40.581+03:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted

------

test>

It means that Mongo Shell has successfully been started:

- Change database to admin by executing the following command in Mongo Shell:

> use admin

You should see the following output:

switched to db admin

- To create a root user with root build-in role execute the following command in Mongo Shell:

> db.createUser(

{

user: "root",

pwd: "mongo12345",

roles: [ "root" ]

})

You should see the following output:

{ ok: 1 }

It means that user root user with build-in root role has successfully been created.

| user name | password | role |

|---|---|---|

| root | mongo12345 | root |

MongoDB build-in roles

Windows 10

* Download and install [MariaDB Community Server](https://mariadb.com/downloads) version 10.6.14-GA for MS Windows (64-bit) from official website.

Enter 12345 as root password. Enable the Enable access from remote machines for 'root' user checkbox.

| user name | password |

|---|---|

| root | 12345 |

Enable the Install as service checkbox.

Linux Ubuntu 20.04.6 LTS

* Ensure your system is updated and install software-properties-common package:

> sudo apt update && sudo apt upgrade

> sudo apt -y install software-properties-common

- First, we will add the official MariaDB apt repository using the following command:

> curl -LsS https://r.mariadb.com/downloads/mariadb_repo_setup | sudo bash -s -- --mariadb-server-version="mariadb-10.6"

Then, install MariaDB server and client.using the following command:

> sudo apt-get install mariadb-server mariadb-client -y

- Check the status using the following command:

> sudo systemctl status mariadb

- Finally, check installed MariaDB version using the following command:

> mysql -V

You should see the following output:

mysql Ver 15.1 Distrib 10.6.14-MariaDB, for debian-linux-gnu (x86_64) using readline 5.2

- To prevent unintended upgrades, you can pin the package at the currently installed version:

> echo "mariadb-server hold" | sudo dpkg --set-selections

> echo "mariadb-client hold" | sudo dpkg --set-selections

- After installation, secure MariaDB using the following commands:

> sudo mysql_secure_installation

You will be prompted with several questions. Choose options as shown below.

| question | answer |

|---|---|

| Enter current password for root (enter for none) | |

| Switch to unux_socket authentication [Y/n] | Y |

| Change the root password? [Y/n] | Y |

| New password: | 12345 |

| Re-enter new password: | 12345 |

| Remove anonymous users? [Y/n] | Y |

| Disallow root login remotely? [Y/n] | n |

| Remove the test database and access to it? [Y/n] | Y |

| Reload privilege tables now? [Y/n] | Y |

- You can stop MariaDB service by executing the following command:

> sudo service mariadb stop

Windows 10 and Linux Ubuntu 20.04.6 LTS

- Validate the configurations by connecting to MariaDB:

> mysql -u root -p

You will be prompted with password. Enter root password of 12345.

Windows 10

* To install [Redis](https://redis.io) on Windows, we'll first need to [enable WSL2 (Windows Subsystem for Linux)](https://learn.microsoft.com/en-us/windows/wsl/install).

You can a list of available Linux distros by executing the following command in Windows PowerShell:

> wsl --list --online

You'll see the following output:

NAME FRIENDLY NAME

Ubuntu Ubuntu

Debian Debian GNU/Linux

kali-linux Kali Linux Rolling

Ubuntu-18.04 Ubuntu 18.04 LTS

Ubuntu-20.04 Ubuntu 20.04 LTS

Ubuntu-22.04 Ubuntu 22.04 LTS

OracleLinux_7_9 Oracle Linux 7.9

OracleLinux_8_7 Oracle Linux 8.7

OracleLinux_9_1 Oracle Linux 9.1

openSUSE-Leap-15.5 openSUSE Leap 15.5

SUSE-Linux-Enterprise-Server-15-SP4 SUSE Linux Enterprise Server 15 SP4

SUSE-Linux-Enterprise-Server-15-SP5 SUSE Linux Enterprise Server 15 SP5

openSUSE-Tumbleweed openSUSE Tumbleweed

- Then you can install your favorite distro from the list by executing the following command:

> wsl --install -d <DistroName>

for example:

> wsl --install -d Ubuntu-20.04

- And then you can install Redis on your Linux distro (I am using Ubuntu) by executing the following commands:

> curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

> echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

> sudo apt-get update

> sudo apt-get install redis

> sudo service redis-server start

Linux Ubuntu 20.04.6 LTS

* Ensure your system is updated:

> sudo apt update && sudo apt upgrade

- Install Redis on your Linux Ubuntu 20.04.6 LTS machine by executing the following commands:

> curl -fsSL https://packages.redis.io/gpg | sudo gpg --dearmor -o /usr/share/keyrings/redis-archive-keyring.gpg

> echo "deb [signed-by=/usr/share/keyrings/redis-archive-keyring.gpg] https://packages.redis.io/deb $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/redis.list

> sudo apt-get update

> sudo apt-get install redis

> sudo service redis-server start

Note: By default, Redis is accessible only from localhost.

- To prevent unintended upgrades, you can pin the package at the currently installed version:

> echo "redis hold" | sudo dpkg --set-selections

- Verify the status of the redis package by executing the following command:

> dpkg --get-selections redis

You should see the following output:

> redis hold

- You can stop Redis service by executing the following command:

> sudo service redis-server stop

Docker Desktop

- Navigate (if it's not already in) to the root directory of the RPS Game project on your computer and run the Docker Compose command below to deploy standalone database servers on Docker Compose in the background:

> docker compose -f docker-compose-general.yml up -d

You should see the following output:

[+] Running 3/3

✔ Container rps-app-redis-1 Started 0.0s

✔ Container rps-app-mongodb-1 Started 0.0s

✔ Container rps-app-mariadb-1 Started 0.0s

- Verify that MongoDB, MariaDB and Redis containers are up and running by executing the following command:

> docker compose -f docker-compose-general.yml ps

You should see the following output:

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

rps-app-mariadb-1 bitnami/mariadb:10.6 "/opt/bitnami/script…" mariadb 6 minutes ago Up 5 minutes 0.0.0.0:13306->3306/tcp

rps-app-mongodb-1 mongo:4.4 "docker-entrypoint.s…" mongo 6 minutes ago Up 5 minutes 0.0.0.0:28017->27017/tcp

rps-app-redis-1 bitnami/redis:4.0.9-r24 "/app-entrypoint.sh …" cache 6 minutes ago Up 5 minutes 0.0.0.0:16379->6379/tcp

It means that MongoDB, MariaDB and Redis containers are up and running.

- When we don't need database containers anymore, we can take down containers and delete their corresponding volumes (-v) using the down command below:

> docker compose -f docker-compose-general.yml down -v

Kubernetes

MariaDB Server is one of the most popular open source relational databases.

MongoDB is used to implement the following patterns:

To create a kube-db namespace on the k8s cluster, run:

> kubectl apply -f ./k8s/namespaces/kube-db-ns.yml

To check the status, run:

> kubectl get namespaces --show-labels

You should see the following output:

NAME STATUS AGE LABELS

default Active 2d13h kubernetes.io/metadata.name=default

ingress-nginx Active 2d13h app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,kubernetes.io/metadata.name=ingress-nginx

kube-db Active 99m kubernetes.io/metadata.name=kube-db,name=kube-db

kube-elk Active 2d12h kubernetes.io/metadata.name=kube-elk,name=kube-elk

kube-node-lease Active 2d13h kubernetes.io/metadata.name=kube-node-lease

kube-public Active 2d13h kubernetes.io/metadata.name=kube-public

kube-system Active 2d13h kubernetes.io/metadata.name=kube-system

kubernetes-dashboard Active 2d13h addonmanager.kubernetes.io/mode=Reconcile,kubernetes.io/metadata.name=kubernetes-dashboard,kubernetes.io/minikube-addons=dashboard

To deploy MariaDB cluster to Kubernetes, first run:

> kubectl apply -f ./k8s/rbacs/mariadb-rbac.yml

Then run:

> kubectl apply -f ./k8s/configmaps/mariadb-configmap.yml

Then deploy a headless service for MariaDB pods using the following command:

> kubectl apply -f ./k8s/services/mariadb-svc.yml

Note: You cannot directly access the application running in the pod. If you want to access the application, you need a Service object in the Kubernetes cluster.

Headless service means that only internal pods can communicate with each other. They are not exposed to external requests outside the Kubernetes cluster. Headless services expose the individual pod IPs instead of the service IP and should be used when client applications or pods want to communicate with specific (not randomly selected) pod (stateful application scenarios).

Get the list of running services under the kube-db namespace with the following command:

> kubectl get service -n kube-db

You should see the following output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mariadb-svc ClusterIP None <none> 3306/TCP 113s

Then run:

> kubectl apply -f ./k8s/secrets/mariadb-secret.yml

Now the secrets can be referenced in our statefulset. And then run:

> kubectl apply -f ./k8s/sets/mariadb-statefulset.yml

To monitor the deployment status, run:

> kubectl rollout status sts/mariadb-sts -n kube-db

You should see the following output:

partitioned roll out complete: 3 new pods have been updated...

To check the pod status, run:

> kubectl get pods -n kube-db

You should see the following output:

NAME READY STATUS RESTARTS AGE

mariadb-sts-0 1/1 Running 0 108s

mariadb-sts-1 1/1 Running 0 105s

mariadb-sts-2 1/1 Running 0 102s

At this point, your MariaDB cluster is ready for work. Test it as follows:

Create data on first (primary) replica set member with these commands:

> kubectl -n kube-db exec -it mariadb-sts-0 -- mariadb -uroot -p12345

You should see the following output:

Defaulted container "mariadb" out of: mariadb, init-mariadb (init)

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 6

Server version: 10.11.3-MariaDB-1:10.11.3+maria~ubu2204-log mariadb.org binary distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| primary_db |

| sys |

+--------------------+

5 rows in set (0.001 sec)

MariaDB [(none)]> use primary_db;

Database changed

MariaDB [primary_db]> create table my_table (t int); insert into my_table values (5),(15),(25);

Query OK, 0 rows affected (0.041 sec)

Query OK, 3 rows affected (0.007 sec)

Records: 3 Duplicates: 0 Warnings: 0

MariaDB [primary_db]> exit

Bye

Check data on second (secondary) replica set member with these commands:

> kubectl -n kube-db exec -it mariadb-sts-1 -- mariadb -uroot -p12345

You should see the following output:

Defaulted container "mariadb" out of: mariadb, init-mariadb (init)

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 6

Server version: 10.11.3-MariaDB-1:10.11.3+maria~ubu2204 mariadb.org binary distribution

Copyright (c) 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| primary_db |

| sys |

+--------------------+

5 rows in set (0.001 sec)

MariaDB [(none)]> use primary_db;

Database changed

MariaDB [primary_db]> show tables;

+----------------------+

| Tables_in_primary_db |

+----------------------+

| my_table |

+----------------------+

1 row in set (0.000 sec)

MariaDB [primary_db]> select * from my_table;

+------+

| t |

+------+

| 5 |

| 15 |

| 25 |

+------+

3 rows in set (0.000 sec)

Repeat the same steps for the third (secondary) replica set member by changing the name of the pod to mariadb-sts-2.

MongoDB is a source-available cross-platform document-oriented database program.

MongoDB is used to implement the following patterns:

To create a kube-nosql-db namespace on the k8s cluster, run:

> kubectl apply -f ./k8s/namespaces/kube-nosql-db-ns.yml

To check the status, run:

> kubectl get namespaces --show-labels

You should see the following output:

NAME STATUS AGE LABELS

default Active 2d13h kubernetes.io/metadata.name=default

ingress-nginx Active 2d13h app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,kubernetes.io/metadata.name=ingress-nginx

kube-db Active 99m kubernetes.io/metadata.name=kube-db,name=kube-db

kube-elk Active 2d12h kubernetes.io/metadata.name=kube-elk,name=kube-elk

kube-node-lease Active 2d13h kubernetes.io/metadata.name=kube-node-lease

kube-nosql-db Active 3m5s kubernetes.io/metadata.name=kube-nosql-db,name=kube-nosql-db

kube-public Active 2d13h kubernetes.io/metadata.name=kube-public

kube-system Active 2d13h kubernetes.io/metadata.name=kube-system

kubernetes-dashboard Active 2d13h addonmanager.kubernetes.io/mode=Reconcile,kubernetes.io/metadata.name=kubernetes-dashboard,kubernetes.io/minikube-addons=dashboard

To deploy MongoDB cluster to Kubernetes, first run:

> kubectl apply -f ./k8s/rbacs/mongodb-rbac.yml

Then run:

> kubectl apply -f ./k8s/configmaps/mongodb-configmap.yml

Then deploy a headless service for MongoDB pods using the following command:

> kubectl apply -f ./k8s/services/mongodb-svc.yml

Note: You cannot directly access the application running in the pod. If you want to access the application, you need a Service object in the Kubernetes cluster.

Headless service means that only internal pods can communicate with each other. They are not exposed to external requests outside the Kubernetes cluster. Headless services expose the individual pod IPs instead of the service IP and expose the individual pod IPs instead of the service IP and should be used when client applications or pods want to communicate with specific (not randomly selected) pod (stateful application scenarios).

Get the list of running services under the kube-nosql-db namespace with the following command:

> kubectl get service -n kube-nosql-db

You should see the following output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

mongodb-svc ClusterIP None <none> 27017/TCP 2m36s

Then run:

> kubectl apply -f ./k8s/secrets/mongodb-secret.yml

Now the secrets can be referenced in our statefulset. And then run:

> kubectl apply -f ./k8s/sets/mongodb-statefulset.yml

To monitor the deployment status, run:

> kubectl rollout status sts/mongodb-sts -n kube-nosql-db

You should see the following output:

Waiting for 3 pods to be ready...

Waiting for 2 pods to be ready...

Waiting for 1 pods to be ready...

partitioned roll out complete: 3 new pods have been updated...

To check the pod status, run:

> kubectl get pods -n kube-nosql-db -o wide

You should see the following output:

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mongodb-sts-0 2/2 Running 0 85s 10.244.0.8 minikube <none> <none>

mongodb-sts-1 2/2 Running 0 62s 10.244.0.9 minikube <none> <none>

mongodb-sts-2 2/2 Running 0 58s 10.244.0.10 minikube <none> <none>

Connect to the first replica set member with this command:

> kubectl -n kube-nosql-db exec -it mongodb-sts-0 -- mongo

You should see the following output:

Defaulted container "mongodb" out of: mongodb, mongo-sidecar

MongoDB shell version v4.4.21

connecting to: mongodb://127.0.0.1:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("c3a2b74c-75f0-4288-9deb-30a7d0bc4bd6") }

MongoDB server version: 4.4.21

---

The server generated these startup warnings when booting:

2023-05-27T10:11:59.717+00:00: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine. See http://dochub.mongodb.org/core/prodnotes-filesystem

2023-05-27T10:12:00.959+00:00: Access control is not enabled for the database. Read and write access to data and configuration is unrestricted

2023-05-27T10:12:00.960+00:00: You are running this process as the root user, which is not recommended

---

---

Enable MongoDB's free cloud-based monitoring service, which will then receive and display

metrics about your deployment (disk utilization, CPU, operation statistics, etc).

The monitoring data will be available on a MongoDB website with a unique URL accessible to you

and anyone you share the URL with. MongoDB may use this information to make product

improvements and to suggest MongoDB products and deployment options to you.

To enable free monitoring, run the following command: db.enableFreeMonitoring()

To permanently disable this reminder, run the following command: db.disableFreeMonitoring()

---

You now have a REPL environment connected to the MongoDB database. Initiate the replication by executing the following command:

> rs.initiate()

If you get the following output:

{

"operationTime" : Timestamp(1685727395, 1),

"ok" : 0,

"errmsg" : "already initialized",

"code" : 23,

"codeName" : "AlreadyInitialized",

"$clusterTime" : {

"clusterTime" : Timestamp(1685727395, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Define the variable called cfg. The variable executes rs.conf() command:

> cfg = rs.conf()

Use the cfg variable to add the replica set members to the configuration:

> cfg.members = [{_id: 0, host: "mongodb-sts-0.mongodb-svc.kube-nosql-db"},

{_id: 1, host: "mongodb-sts-1.mongodb-svc.kube-nosql-db", priority: 0},

{_id: 2, host: "mongodb-sts-2.mongodb-svc.kube-nosql-db", priority: 0}]

You should see the following output:

[

{

"_id" : 0,

"host" : "mongodb-sts-0.mongodb-svc.kube-nosql-db"

},

{

"_id" : 1,

"host" : "mongodb-sts-1.mongodb-svc.kube-nosql-db",

"priority": 0

},

{

"_id" : 2,

"host" : "mongodb-sts-2.mongodb-svc.kube-nosql-db",

"priority": 0

}

]

Confirm the configuration by executing the following command:

> rs.reconfig(cfg, {force: true})

You should see the following output:

{

ok: 1,

'$clusterTime': {

clusterTime: Timestamp({ t: 1684949311, i: 1 }),

signature: {

hash: Binary(Buffer.from("0000000000000000000000000000000000000000", "hex"), 0),

keyId: Long("0")

}

},

operationTime: Timestamp({ t: 1684949311, i: 1 })

}

Verify MongoDB replication status with this command:

> rs.status()

You should see the following output:

{

"set" : "rs0",

"date" : ISODate("2023-05-27T10:14:52.096Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"majorityVoteCount" : 2,

"writeMajorityCount" : 2,

"votingMembersCount" : 3,

"writableVotingMembersCount" : 3,

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"lastCommittedWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"readConcernMajorityWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"appliedOpTime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"lastAppliedWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastDurableWallTime" : ISODate("2023-05-27T10:14:43.714Z")

},

"lastStableRecoveryTimestamp" : Timestamp(1685182438, 1),

"electionCandidateMetrics" : {

"lastElectionReason" : "electionTimeout",

"lastElectionDate" : ISODate("2023-05-27T10:12:03.578Z"),

"electionTerm" : NumberLong(1),

"lastCommittedOpTimeAtElection" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"lastSeenOpTimeAtElection" : {

"ts" : Timestamp(1685182323, 1),

"t" : NumberLong(-1)

},

"numVotesNeeded" : 1,

"priorityAtElection" : 1,

"electionTimeoutMillis" : NumberLong(10000),

"newTermStartDate" : ISODate("2023-05-27T10:12:03.670Z"),

"wMajorityWriteAvailabilityDate" : ISODate("2023-05-27T10:12:03.712Z")

},

"members" : [

{

"_id" : 0,

"name" : "10.244.1.83:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 173,

"optime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-05-27T10:14:43Z"),

"lastAppliedWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastDurableWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "",

"electionTime" : Timestamp(1685182323, 2),

"electionDate" : ISODate("2023-05-27T10:12:03Z"),

"configVersion" : 5,

"configTerm" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "mongodb-sts-1.mongodb-svc.kube-nosql-db:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 53,

"optime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-05-27T10:14:43Z"),

"optimeDurableDate" : ISODate("2023-05-27T10:14:43Z"),

"lastAppliedWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastDurableWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastHeartbeat" : ISODate("2023-05-27T10:14:51.822Z"),

"lastHeartbeatRecv" : ISODate("2023-05-27T10:14:51.853Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "10.244.1.83:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 5,

"configTerm" : 1

},

{

"_id" : 2,

"name" : "mongodb-sts-2.mongodb-svc.kube-nosql-db:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 34,

"optime" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1685182483, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2023-05-27T10:14:43Z"),

"optimeDurableDate" : ISODate("2023-05-27T10:14:43Z"),

"lastAppliedWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastDurableWallTime" : ISODate("2023-05-27T10:14:43.714Z"),

"lastHeartbeat" : ISODate("2023-05-27T10:14:51.823Z"),

"lastHeartbeatRecv" : ISODate("2023-05-27T10:14:50.251Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncSourceHost" : "mongodb-sts-1.mongodb-svc.kube-nosql-db:27017",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 5,

"configTerm" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1685182483, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1685182483, 1)

}

Note: The members section of the status output shows three replicas. The pod mongodb-sts-0 is listed as the __ Primary__ replica, while the other two pods, mongodb-sts-1 and mongodb-sts-2, are listed as the Secondary replicas.

The ReplicaSet deployment of MongoDB is set up and ready to operate.

Quit the replica set member with the following command:

> exit

Now let's create the admin account.

Connect to the first (primary) replica set member shell with the following command:

> kubectl -n kube-nosql-db exec -it mongodb-sts-0 -- mongo

Switch to admin database with the following command:

> use admin

Create admin user with the following command:

> db.createUser({ user:'admin', pwd:'mongo12345', roles:[ { role:'userAdminAnyDatabase', db: 'admin'}]})

You should see the following output:

Successfully added user: {

"user" : "admin",

"roles" : [

{

"role" : "userAdminAnyDatabase",

"db" : "admin"

}

]

}

It means admin account has been created successfully. Quit the replica set member with the following command:

> exit

At this point, your MongoDB cluster is ready for work. Test it as follows:

Connect to the first (primary) replica set member shell with the following command:

> kubectl -n kube-nosql-db exec -it mongodb-sts-0 -- mongo

Display all databases with the following command:

> show dbs

You should see the following output:

admin 80.00 KiB

config 176.00 KiB

local 404.00 KiB

Switch to the test database (if not) and add test entries with the following commands:

> use test

> db.games.insertOne({name: "RPS game", language: "Java" })

> db.games.insertOne({name: "Tic-Tac-Toe game" })

Display all data from the test database with the following commands:

> db.games.find()

You should see the following output:

{ "_id" : ObjectId("6471d9141175a02c9a9c27a0"), "name" : "RPS game", "language" : "Java" }

{ "_id" : ObjectId("6471d9211175a02c9a9c27a1"), "name" : "Tic-Tac-Toe game" }

Quit the primary replica set member with the following command:

> exit

Connect to the second (secondary) replica set member shell with the following command:

> kubectl -n kube-nosql-db exec -it mongodb-sts-1 -- mongo

Set a read preference to the secondary replica set member with the following command:

> rs.secondaryOk()

Display all databases with the following command:

> show dbs

You should see the following output:

admin 80.00 KiB

config 176.00 KiB

local 404.00 KiB

test 72.00 KiB

Display all data from the test database with the following commands:

> db.games.find()

You should see the following output:

{ "_id" : ObjectId("6471d9141175a02c9a9c27a0"), "name" : "RPS game", "language" : "Java" }

{ "_id" : ObjectId("6471d9211175a02c9a9c27a1"), "name" : "Tic-Tac-Toe game" }

Repeat the same steps for the third (secondary) replica set member by changing the name of the pod to mongodb-sts-2.

Mongo Express is an open source, basic web-based MongoDB admin interface.

To create a Simple Single Service Ingress for the Mongo Express application, run:

> kubectl apply -f ./k8s/ingress/mongodb-ingress.yml

Note: A Mongo Express application Simple Single Service Ingress configuration exposes only one service to external users.

Make sure the Mongo Express application ingress has been created:

> kubectl get ingress -n kube-nosql-db

You should see the following output:

NAME CLASS HOSTS ADDRESS PORTS AGE

mongodb-ingress nginx mongodb.internal 192.168.49.2 80 40h

Note the ip address (192.168.49.2) displayed in the output, as you will need this in the next step.

Add a custom entry to the etc/hosts file using the nano text editor:

> sudo nano /etc/hosts

You should add the following ip address (copied in the previous step) and custom domain to the hosts file:

192.168.49.2 mongodb.internal

You may check the custom domain name with ping command:

> ping mongodb.internal

You should see the following output:

64 bytes from mongodb.internal (192.168.49.2): icmp_seq=1 ttl=64 time=0.072 ms

64 bytes from mongodb.internal (192.168.49.2): icmp_seq=2 ttl=64 time=0.094 ms

64 bytes from mongodb.internal (192.168.49.2): icmp_seq=3 ttl=64 time=0.042 ms

Access the Mongo Express application from any browser by typing:

> mongodb.internal

To deploy Mongo Express to Kubernetes, first run:

> kubectl apply -f ./k8s/services/mongodb-express-svc.yml

It deploys a ClusterIP service for Mongo Express pods.

Then run:

> kubectl apply -f ./k8s/deployment/mongodb-express-deployment.yml

Redis is an open source, in-memory data structure store used as a distributed cache in the RPS application. It is used to store data in a key-value format, allowing for fast access and retrieval of data. Redis is a popular choice for distributed caching due to its scalability, performance, and flexibility.

Redis is used to implement the following patterns:

- Cache-Aside pattern.

To create a kube-cache namespace on the k8s cluster, run:

> kubectl apply -f ./k8s/namespaces/kube-cache-ns.yml

To check the status, run:

> kubectl get namespaces --show-labels

You should see the following output:

NAME STATUS AGE LABELS

default Active 2d13h kubernetes.io/metadata.name=default

ingress-nginx Active 2d13h app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx,kubernetes.io/metadata.name=ingress-nginx

kube-cache Active 25s kubernetes.io/metadata.name=kube-cache,name=kube-cache

kube-db Active 99m kubernetes.io/metadata.name=kube-db,name=kube-db

kube-elk Active 2d12h kubernetes.io/metadata.name=kube-elk,name=kube-elk

kube-node-lease Active 2d13h kubernetes.io/metadata.name=kube-node-lease

kube-public Active 2d13h kubernetes.io/metadata.name=kube-public

kube-system Active 2d13h kubernetes.io/metadata.name=kube-system

kubernetes-dashboard Active 2d13h addonmanager.kubernetes.io/mode=Reconcile,kubernetes.io/metadata.name=kubernetes-dashboard,kubernetes.io/minikube-addons=dashboard

To deploy Redis cluster to Kubernetes, first run:

> kubectl apply -f ./k8s/rbacs/redis-rbac.yml

Then run:

> kubectl apply -f ./k8s/configmaps/redis-configmap.yml

Then deploy a headless service for Redis pods using the following command:

> kubectl apply -f ./k8s/services/redis-svc.yml

Note: You cannot directly access the application running in the pod. If you want to access the application, you need a Service object in the Kubernetes cluster.

Headless service means that only internal pods can communicate with each other. They are not exposed to external requests outside the Kubernetes cluster. Headless services expose the individual pod IPs instead of the service IP and should be used when client applications or pods want to communicate with specific (not randomly selected) pod (stateful application scenarios).

To get the list of running services under the Redis namespace, run:

> kubectl get service -n kube-cache

You should see the following output:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

redis-svc ClusterIP None <none> 6379/TCP 4h11m

Then run:

> kubectl apply -f ./k8s/secrets/redis-secret.yml

Now the secrets can be referenced in our statefulset. And then run:

> kubectl apply -f ./k8s/sets/redis-statefulset.yml

To monitor the deployment status, run:

> kubectl rollout status sts/redis-sts -n kube-cache

You should see the following output:

statefulset rolling update complete 3 pods at revision redis-sts-85577d848c...

To check the pod status, run:

> kubectl get pods -n kube-cache

You should see the following output:

NAME READY STATUS RESTARTS AGE

redis-sts-0 1/1 Running 0 5m40s

redis-sts-1 1/1 Running 0 5m37s

redis-sts-2 1/1 Running 0 5m34s

At this point, your Redis cluster is ready for work. Test it as follows:

Connect to the first (master) replica set member shell with the following command:

> kubectl -n kube-cache exec -it redis-sts-0 -- sh

Then connect to Redis the Redis CLI:

# redis-cli

You should see the following output:

127.0.0.1:6379>

Authenticate to Redis with following command:

127.0.0.1:6379> auth 12345

Check the replica member replication information with the following command:

127.0.0.1:6379> info replication

You should see the following output:

# Replication

role:master

connected_slaves:2

slave0:ip=10.244.1.205,port=6379,state=online,offset=952,lag=1

slave1:ip=10.244.1.206,port=6379,state=online,offset=952,lag=1

master_replid:e7add4a40b5434360c75163ab01d8871928c5f03

master_replid2:0000000000000000000000000000000000000000

master_repl_offset:952

second_repl_offset:-1

repl_backlog_active:1

repl_backlog_size:1048576

repl_backlog_first_byte_offset:1

repl_backlog_histlen:952

Check the roles of the replica member with the following command:

127.0.0.1:6379> role

You should see the following output:

1) "master"

2) (integer) 728

3) 1) 1) "10.244.1.205"

2) "6379"

3) "728"

2) 1) "10.244.1.206"

2) "6379"

3) "728"

Create some key-value pair data using the following command:

127.0.0.1:6379> set game1 RPS

OK

127.0.0.1:6379> set game2 Tic-Tac-Toe

OK

Now get the key-value pair list with the following command:

127.0.0.1:6379> keys *

You should see the following output:

1) "game1"

2) "game2"

Connect to the second (slave) replica set member shell with the following command:

> kubectl -n kube-cache exec -it redis-sts-1 -- sh

Then connect to Redis the Redis CLI:

# redis-cli

And type the following command:

127.0.0.1:6379> keys *

You should see the following output:

1) "game1"

2) "game2"

Repeat the same steps for the third (slave) replica set member by changing the name of the pod to redis-sts-2.

Apache Kafka is an open-source, event streaming platform that is distributed, scalable, high-throughput, low-latency, and has a very large ecosystem.

Apache Kafka is used to implement the following patterns:

Local Machine

5.1 Adding custom entries to the etc/host file for the Apache Zookeeper and Kafka applications on local machine

Window 10