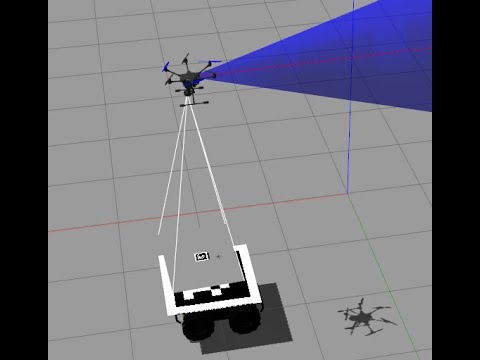

This package allows a PX4-powered drone to track a moving target (an AprilTag in this case) which is detected by a camera. Tag detection is done using apriltag_ros package. The target's position and velocity estimation is done using an implementaion of a Kalman filter based on a constant velocity model. The drone is controllerd using an implementation of a position controller which sends velocity commands to PX4. The detection and control are done with respect to the drone frame. So, errors caused by drifts in the local fixed frame can be avoided.

Kindly, give this repo a STAR if it helps you in your work

Video:

This package is tested with Ubuntu 18 + ROS Melodic + PX4 10.1

The easiest way to test this package is through a ready Docker image prepared for this project which is available here

Use docker_px4_vehicle_tracking.sh script to automatically pull and run the docker image. Don't forget to make it executable using chmod +x docker_px4_vehicle_tracking.sh

./docker_px4_vehicle_tracking.sh <optional_container_name>Once logged into the container terminal, proceed to the Simulation seciton.

- Clone this package into your

~/catkin_ws/srcfoldercd ~/catkin_ws/src git clone https://github.com/mzahana/mavros_apriltag_tracking.git

- Use the

install/setup.shscript to do the installationcd ~/catkin_ws/src/mavros_apriltag_tracking/install ./setup.sh

- Copy the modified

typhoon_h480model to/home/arrow/Firmware/Tools/sitl_gazebo/modelscp -R ~/catkin_ws/src/mavros_apriltag_tracking/models/typhoon_h480 ~/Firmware/Tools/sitl_gazebo/models/

- Copy the modified Husky simulation model. You need sudo for this one

sudo cp ~/catkin_ws/src/mavros_apriltag_tracking/models/custom_husky/husky.urdf.xacro $(catkin_find husky_description/urdf)/-

Use the

launch/tracker.launchfile to run the simulationroslaunch mavros_apriltag_tracking tracker.launch

-

All steps required to set a takeoff position, bringing camera down, arming and setting OFFBOARD mode, and moving the Husky are available in a shell script which can be run using

rosrun mavros_apriltag_tracking start_tracking.sh

THAT IS IT!

The steps in the start_tracking.sh script are explained in details below:

- Bring the drone above and close to the tag by publishing one message to the

/setpoint/local_posrostopic pub --once /setpoint/local_pos geometry_msgs/Point "x: 0.0 y: 0.0 z: 3.0"

- Make the camera face downward (pitch rotation of -90 degrees)

NOTE: The camera won't move until the drone is armed, but the command will be saved.

rostopic pub --once /mavros/mount_control/command mavros_msgs/MountControl "header: seq: 0 stamp: {secs: 0, nsecs: 0} frame_id: '' mode: 2 pitch: -90.0 roll: 0.0 yaw: 0.0 altitude: 0.0 latitude: 0.0 longitude: 0.0"

- Arm the drone and activate the OFFBOARD mode for the setpoint to take effect

rosservice call /arm_and_offboard "{}"

You should see the drone taking off. Then, once the tag on the Husky UGV is detected, the drone will be automatically controlled to hover above the tag. The dron will keep tracking the tag on top of the Husky while it's moving.

To move the Husky around, publish a linear velocity (along forward direction of the Husky's x-axis), and a rotational velocity about the Husky's z-axis as follows.

rostopic pub -r 10 /husky_velocity_controller/cmd_vel geometry_msgs/Twist "linear:

x: 1.0

y: 0.0

z: 0.0

angular:

x: 0.0

y: 0.0

z: 0.4"Look at the launch/tracker.launch file and change parameters as needed.

The following are the ROS nodes in this package.

mavros_offboard_controller.py: Mainly implements a position controller and sends velocity commands to PX4. This controller accepts two types of position setpoints.- Position setpoints in local fixed frame. Corresponding topic is

/setpoint/local_pos - Position setpoints relative to the drone horizontal body frame. This one is used for the tag tracking as the tag is detected relative to the camera. Corresponding topic is

/setpoint/relative_pos

- Position setpoints in local fixed frame. Corresponding topic is

apriltag_setpoint_publisher.py: This node takes the pose of the detected tag and computes relative positino setpoints which are published to/setpoint/relative_postopic.kf_tracker_node: Performs Kalman filtering based on a constant velocity model for object tracking. The input to this node is a PoseStamped topic of the object pose measurements (only the 3D position is consumed). The output of the filter (kf/estimatetopic) is an estimate of the 3D position of the tracked object in the same frame as the input measrements.

The position controller may require tuning for the PI gains. There are two services to help you tune those gains in runtime, for both horizontal and verical controllers.

To tune the PI gains for the horizontal controller,

rosservice call /horizontal_controller/pid_gains "p: 1.0

i: 0.01

d: 0.0"for the verical controller,

rosservice call /vertical_controller/pid_gains "p: 1.0

i: 0.01

d: 0.0"Error signals are also published to the following topics. This helps you to plot the error response while tuning the controllers.

# Velocity errors help you to tune the velocity controller on PX4 side

/analysis/body_vel_err

/analysis/local_vel_err

# Position errors help you to tune the position controllers implemented in this package

/analysis/local_pos_err

/analysis/relative_pos_errYou can use rqt_plot or plotjuggler to plot signals in realtime.

If you use this package in an academic context, please cite it as follows

@misc{mohamed_abdelkader_2020_07,

author = {Mohamed Abdelkader},

title = {{mavros_apriltag_tracking - Vision-based target tracking for PX4-powered drones}},

month = july,

year = 2020,

doi = {10.5281/zenodo.3959150 },

url = {https://zenodo.org/badge/latestdoi/279731687}

}