Build AR apps with confidence.

Iterate fast, right in Editor.

Non-invasive, drop-in solution.

Fair pricing.

Quick Start ⚡ • License & Pricing 💸 • Documentation 📜 • Troubleshooting ☂️

Technical Details 🔎 • Comparison to MARS 🚀 • Related Solutions 👪 • Say hi ✍️

This package allows you to fly around in the Editor and test your AR app, without having to change any code or structure. Iterate faster, test out more ideas, build better apps.

ARSimulation is a custom XR backend, built on top of the XR plugin architecture.

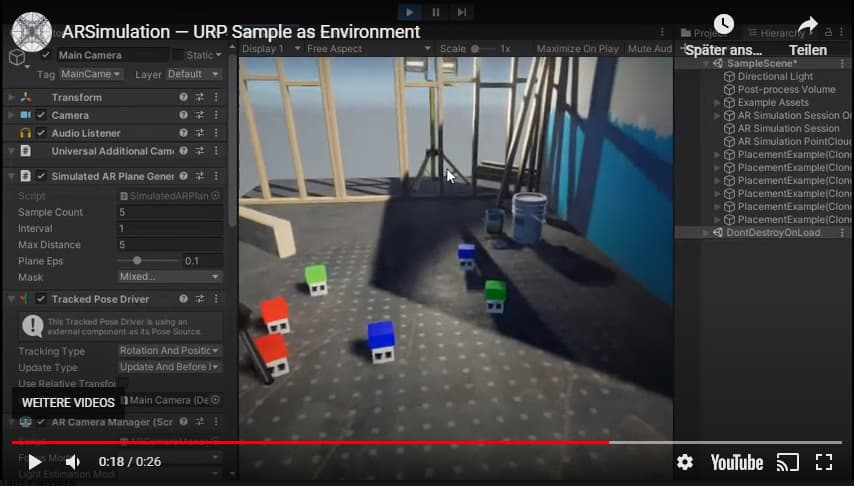

This scene only uses ARFoundation features.

Because it's just another XR Plugin and we took great care to simulate important features, it works with your existing app, ARFoundation, XR Interaction Toolkit — zero changes to your code or setup needed! ✨

And if you need more control, there's a lot of knobs to turn.

- Install ARSimulation by dropping this package into Unity 2019.3+: 📦 ARSimulation Installer
- Open any scene that is set up for ARFoundation, or click Tools/AR Simulation/Convert to AR Scene
- Press Play
- Press RMB (Right Mouse Button) and use WASD+QE to move around; use LMB (Left Mouse Button) to click • touch • interact with your app
- Done.
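The RMB + WASD+QE controls follow the familiar editor fly-camera pattern. As a rough illustration only, the Python sketch below shows one way a per-frame movement step could be computed. The function name, the default speed, and the Unity-style left-handed axes (x right, y up, z forward, positive pitch looking down) are assumptions; this is not ARSimulation's actual code.

```python
import math


def fly_step(position, yaw_deg, pitch_deg, keys, rmb_held, speed=2.0, dt=1.0 / 60.0):
    """Return the camera position after one frame of fly-camera movement.

    Hypothetical sketch: moves only while the right mouse button is held.
    W/S = forward/back (along the view direction), A/D = left/right,
    E/Q = up/down along world up. Axes follow Unity's left-handed
    convention: x = right, y = up, z = forward.
    """
    if not rmb_held:
        return position

    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)  # positive pitch looks down, as in Unity
    forward = (math.sin(yaw) * math.cos(pitch),
               -math.sin(pitch),
               math.cos(yaw) * math.cos(pitch))
    right = (math.cos(yaw), 0.0, -math.sin(yaw))
    up = (0.0, 1.0, 0.0)

    pressed = {k.lower() for k in keys}
    # Booleans subtract to -1, 0 or 1, so opposite keys cancel each other.
    f = ("w" in pressed) - ("s" in pressed)
    r = ("d" in pressed) - ("a" in pressed)
    u = ("e" in pressed) - ("q" in pressed)

    direction = [f * forward[i] + r * right[i] + u * up[i] for i in range(3)]
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        return position  # no movement keys pressed, or they cancelled out

    # Normalize so diagonal movement is not faster than straight movement.
    step = speed * dt / length
    return tuple(position[i] + direction[i] * step for i in range(3))
```

Normalizing the summed direction keeps diagonal movement (e.g. W+D) at the same speed as straight movement; releasing the right mouse button leaves the camera where it is.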

Step-by-step walkthrough (it's worth the time!)

Here, we'll walk you through using AR Simulation with the arfoundation-samples project, which provides a good overview of what AR Simulation can and can't do.


First, we'll download the samples and take a look at what we got.

- Clone or download Unity's arfoundation-samples project from GitHub.
- Open the project (at the time of writing, it uses Unity 2019.4.1f1, but it should work with any 2019.3+ version).
- Open the menu scene Scenes/ARFoundationMenu/Menu.unity
- Press Play.

"Hey wait, we didn't import ARSimulation yet!" "Yes indeed. We want to show you how lonely it is here without it."

- The samples scene looks somewhat like this (more or less pixelated depending on your Game View settings):

Q: "Wow what's up with the font?!"

A: "Unfortunately, it seems Unity assumes this will only ever be looked at on a device, in a build. AR in the Editor?! Hm."


Let's give it a chance and install the Device Simulator package to make this look better.

- Stop Play Mode

- Open Package Manager

- Make sure "All Packages" and "Preview Packages" are enabled (note: on 2020.1/2020.2, you'll need to enable preview packages in Project Settings first)

- Install Device Simulator 2.2.2+

- In your Game View, open the new little dropdown and select Simulator.

- Press Play

- Note that this looks more like a device now:

- Also note, this tells you that no AR features are available in the Editor.

Luckily, AR Simulation is here to change that!


Next up, the fun stuff happens. We'll install AR Simulation.

- Stop Play Mode

- Download the 📦 ARSimulation Installer

- Drop the installer into your project.

- You should be greeted by our Getting Started window, and have documentation and sample scenes ready:

You can close this window for now; you can always get back to it via Window/AR Simulation/Getting Started.

- Press Play.

Q: "Oh, suddenly there are a lot of sample buttons that have turned white?"

A: "You probably guessed it, that means we can now simulate those scenes!"


Let's play with the samples a bit.

- Click on the Simple AR button to load that scene.
- Press and hold the Right Mouse Button and use the WASDQE keys to move around the scene. Move the mouse to look around.
- Note that an orange plane is detected and tracked, together with some matching point cloud visuals. These visuals come straight from ARFoundation: this is exactly what happens on device, including everything going on in the Unity hierarchy.
- Release the Right Mouse Button and click with the Left Mouse Button to spawn something.
- Press the Return button.
- Click on the Interaction button to load that scene.

This scene uses the XR Interaction Toolkit for interactivity, another thing that is notoriously hard to test in the Editor.

- Press the Return button.
- Last one here, let's try Sample UX: click it!

Q: "OK, I got it, the arfoundation-samples work. Is there more?"

A: "Well, happy that you ask, of course!"- Make sure you have the AR Simulation samples installed at

Samples/AR Simulation.- if not, open

Window > AR Simulation > Getting Startedand clickInstall Samples.

- if not, open

- From

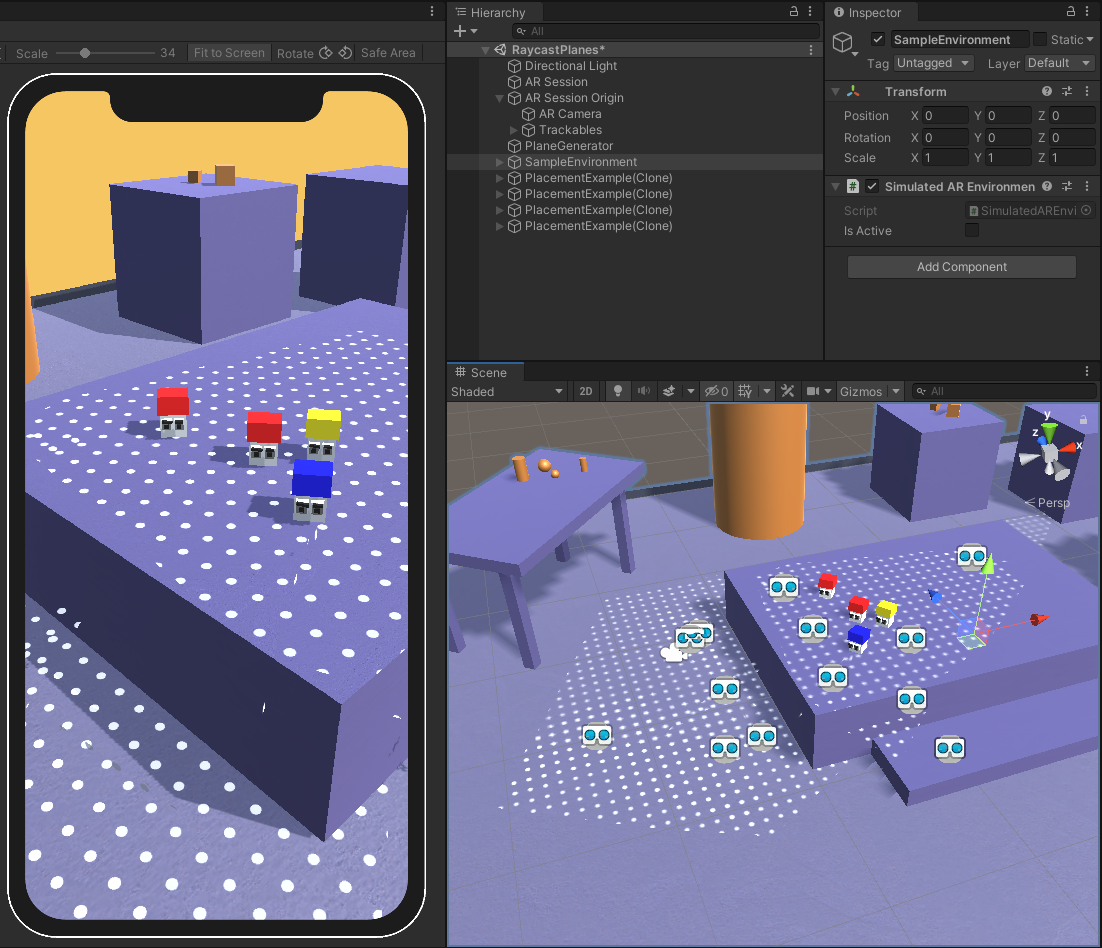

Samples/AR Simulation/someversion/Getting Started, open the sceneRaycastPlanes.unity. - This scene uses a

Simulated AR Environmentto provide a more complex testing scenario. - Press Play.

- Move around the scene using Right Mouse Button and WASDQE.

- While moving around, notice that planes are detected as you go:

- Click the Left Mouse Button to spawn little guys on all planes.

- Make sure you have the AR Simulation samples installed at

*Q: "Wow. This makes testing AR applications so much easier! How can I thank you guys?" A: "Well, please don't forget to buy a license!"

Using ARSimulation requires you to buy a per-seat license; please buy seats for your team through the Unity Asset Store.

This is a single $60 payment, not a monthly subscription.

You can use it for 7 days for evaluation purposes only, without buying a license. 🧐

Go to Edit/Project Settings/XR-Plugin-Management and make sure that AR Simulation is checked ✔️ in the PC, Mac and Linux Standalone Settings tab.

We are working on improving the docs right now and making some nice "Getting Started" videos. Stay tuned! Until then, here are some things you might need:

Missing something? Please open an issue and tell us about it! We want this to be as useful as possible to speed up your workflow.

- Drop the SimulatedPlanePrefab into the scene in Edit or Play Mode (or just add another SimulatedARPlane component)
- Move and adjust as necessary

Video: Custom Planes

Video: Runtime Adjustments

The same works for Point Clouds.

(Tracked 3D Objects Coming Soon™)

- Just press Play: if your scene uses image tracking, a Simulated Tracked Image is generated for you.
- If you're using more than one tracked image, create them yourself: add an empty GameObject with a SimulatedARTrackedImage component for each image of your choice (the images need to be in a ReferenceImageLibrary, of course).

- Add your geometry, ideally as a Prefab
- Add a SimulatedEnvironment component to it
- Press Play
- Done.
- (Background camera image rendering 📷 is experimental right now)

Video: Complex Environment Simulation

Here's a preview of a nicely dressed apartment sample: 🏡

📱 Device Simulator (recommended, but ARSimulation also works without it)

👆 Input System: Both (but works with Old, New, or Both)

| Unity Version | Input System: Old | Input System: Both | Input System: New | ARFoundation 3.1⁵ | ARFoundation 4.0 | Game View | Device Simulator¹ |
|---|---|---|---|---|---|---|---|
| | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ |
| | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ |
| | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ | ✔️ |

| Unity Version | Render Pipeline: Built-in | Render Pipeline: URP | Render Pipeline: HDRP² | Platform: Editor | Platform: iOS/Android Build³ | Platform: Desktop Build⁴ |
|---|---|---|---|---|---|---|
| | ✔️ | ✔️ | — | ✔️ | ✔️ | untested |
| | ✔️ | ✔️ | — | ✔️ | ✔️ | untested |
| | ✔️ | ✔️ | — | ✔️ | ✔️ | untested |

1 Recommended. Feels very nice to use, and gives correct sizes for UI etc.

2 HDRP is not supported by Unity on iOS/Android currently.

3 "Support" here means: ARSimulation does not affect your builds, it is purely for Editor simulation.

4 We haven't done as extensive testing as with the others yet. Making Desktop builds with ARSimulation is very useful for testing multiplayer scenarios without the need to deploy to multiple mobile devices.

5 There is a known bug in XR Plugin Management 3.2.13 and earlier. Please use 3.2.15 or newer.
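To catch the XR Plugin Management issue from footnote 5 early, one option is a small script that reads your project's Packages/manifest.json and compares its direct dependencies against the minimum versions mentioned in this README (XR Plugin Management 3.2.15, Device Simulator 2.2.2). This is a hypothetical helper, not part of ARSimulation. It only sees packages listed directly in the manifest, so a version pulled in transitively (for example via ARFoundation) is not checked.

```python
import json
import re

# Minimum versions mentioned in this README, keyed by Unity package ID.
MINIMUM_VERSIONS = {
    "com.unity.xr.management": (3, 2, 15),    # XR Plugin Management
    "com.unity.device-simulator": (2, 2, 2),  # Device Simulator (preview)
}


def parse_version(text):
    """Return (major, minor, patch) for strings like '3.2.15' or '2.2.2-preview'.

    Returns None for entries that are not plain versions, such as git URLs
    or 'file:' paths, because those cannot be compared numerically.
    """
    match = re.match(r"^(\d+)\.(\d+)\.(\d+)", text)
    return tuple(int(part) for part in match.groups()) if match else None


def check_manifest(manifest_text):
    """List direct dependencies in a manifest.json that are below the minimums."""
    dependencies = json.loads(manifest_text).get("dependencies", {})
    problems = []
    for package, minimum in MINIMUM_VERSIONS.items():
        declared = dependencies.get(package)
        if declared is None:
            continue  # not a direct dependency; it may still be installed transitively
        version = parse_version(declared)
        if version is not None and version < minimum:
            wanted = ".".join(str(n) for n in minimum)
            problems.append(f"{package} {declared} is older than {wanted}")
    return problems
```

Pass it the text of Packages/manifest.json from your project root; an empty list means no listed package is below the minimums.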

ARSimulation running in Device Simulator.

ARSimulation is an XR Plugin that works with Unity's XR SDK infrastructure and thus plugs right into ARFoundation and other systems in the VR/AR realm inside Unity.

Currently supported features are marked orange.

This architecture has some advantages:

- ARSimulation will not clutter your project

- Does not show up at all in your compiled app (if it does, that's a bug, please let us know)

- Easier to maintain with future ARFoundation changes

- Requires Zero Changes™ for working with other plugins that use ARFoundation
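The "zero changes" property comes from the provider pattern behind the XR plugin architecture: app-facing managers talk to an abstract subsystem, and the active XR loader decides which backend implements it. The Python sketch below models that idea with hypothetical class names. It loosely mirrors how ARFoundation's managers (e.g. ARPlaneManager) consume a subsystem, but it is not Unity's actual C# API.

```python
from abc import ABC, abstractmethod


class PlaneProvider(ABC):
    """Hypothetical backend interface; each XR plugin supplies one implementation."""

    @abstractmethod
    def detected_planes(self):
        """Return the planes the backend currently tracks."""


class DeviceProvider(PlaneProvider):
    def detected_planes(self):
        # On a phone, this data would come from ARKit or ARCore.
        return []


class SimulatedProvider(PlaneProvider):
    def __init__(self, scene_planes):
        # In a simulation backend, planes come from objects placed in the scene.
        self._planes = list(scene_planes)

    def detected_planes(self):
        return list(self._planes)


def load_provider(in_editor, scene_planes=()):
    """Stand-in for the XR loader: it picks the backend, app code never does."""
    return SimulatedProvider(scene_planes) if in_editor else DeviceProvider()


class PlaneManager:
    """App-facing manager: depends only on the abstract PlaneProvider."""

    def __init__(self, provider):
        self._provider = provider

    def plane_count(self):
        return len(self._provider.detected_planes())
```

Swapping the backend only changes what load_provider returns; PlaneManager and any code built on top of it stay untouched.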

- Camera background is supported (with custom 3D scenes), but our default shader has no occlusion support right now (same as ARFoundation by default). You can, of course, use your own plane shaders that support occlusion (see the AR Foundation samples/Plane Occlusion scene).
- Environment cubemap support is platform-specific (Reason: Unity bug, Issue Tracker Link)
- No support for simulating faces, people, or collaboration right now (let us know if this is important to you!)
- Partial support for meshing simulation (some support, but not identical to specific devices)
- Touch input is single-touch for now; we're waiting for Unity to support it better and looking into it ourselves (Reason: Device Simulator only supports single touch, since Input.SimulateTouch only supports one)
- If your scene feels too dark or does not use environment lighting, make sure Auto Generate is on in the Lighting Window, or bake light data (Reason: spherical harmonics simulation only works if the shaders are aware that they should use it)
- ARFoundation 4 logs warnings in the Editor: "No active UnityEngine.XR.XRInputSubsystem is available. Please ensure that a valid loader configuration exists in the XR project settings." We have no idea what that means: Link to Forum Thread
- ARSimulation currently has a dependency on XRLegacyInputHelpers that isn't needed in all cases; we will remove that dependency in a future release.
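Since only a single touch is simulated, mapping a mouse onto touch input boils down to a small per-frame state machine: pressing begins a touch, holding it either moves it or keeps it stationary, and releasing ends it. The Python sketch below is a hypothetical illustration of that idea, not ARSimulation's or Unity's implementation; the half-pixel movement threshold is an assumed value.

```python
from enum import Enum


class Phase(Enum):
    BEGAN = "began"
    MOVED = "moved"
    STATIONARY = "stationary"
    ENDED = "ended"


class MouseTouchSimulator:
    """Hypothetical sketch: turns per-frame mouse state into a single simulated touch."""

    def __init__(self, move_threshold=0.5):
        self.move_threshold = move_threshold  # pixels; assumed value
        self._down = False
        self._anchor = None  # position where the touch last counted as moved

    def update(self, button_down, position):
        """Return this frame's touch phase, or None when no touch is active."""
        if button_down and not self._down:
            self._down, self._anchor = True, position
            return Phase.BEGAN
        if button_down:
            dx = position[0] - self._anchor[0]
            dy = position[1] - self._anchor[1]
            if dx * dx + dy * dy > self.move_threshold ** 2:
                self._anchor = position
                return Phase.MOVED
            # Small deltas accumulate against the anchor instead of being lost.
            return Phase.STATIONARY
        if self._down:
            self._down, self._anchor = False, None
            return Phase.ENDED
        return None
```

Measuring movement from the last position that counted as "moved" lets slow drags add up until they cross the threshold, instead of flickering between stationary and moved on tiny deltas.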

Long story short:

- If you are starting a new project, are new to AR dev but have a lot of financial resources, are building a very complex AR app with multiple planes and dynamic content and constraints between objects, then MARS might be a good fit.

- If you have an existing project, are fine with ARFoundation's feature set, are using other extensions on top of ARFoundation, are building a relatively simple AR app, don't want to shell out $600/year/seat, then ARSimulation might be helpful.

Unity describes MARS (Mixed and Augmented Reality Studio) as "a framework for simplified, flexible AR authoring". We were active alpha testers, trying to use it for our own AR applications, and started developing our own solution in parallel. After a while, we stopped using MARS (apart from, of course, testing new releases and giving feedback on them).

MARS is very ambitious and future-facing. It tries to anticipate many new types of devices and sensors, and to do that, reinvents the wheel (namely: basic ARFoundation features) in many places.

It wraps around ARFoundation instead of extending it, which is great for some use cases but makes it very heavy for others.

A core concept of MARS is Functionality Injection, which at its base feels pretty similar to what the XR SDK system is trying to achieve (note: FI might allow for more complex scenarios, but it solves a similar problem of device-specific implementations).

Our goal is fast iteration times in the Editor for the range of AR applications we and partner companies build. These usually consist of placing and interacting with objects from different angles. We just needed a way to "simulate" an AR device in the Editor, not a full-blown additional framework!

Fortunately, Unity provides the ability to build exactly that using the XR plugin architecture: a custom XR provider that works in the Editor and Desktop builds.

There were quite a few challenges, especially around Input System support (we now support the Old, Both, and New modes) and touch injection (there's a private Input.SimulateTouch API that is also used by the Device Simulator package).

Plus, the usual amount of Unity bugs and crashes; we are pretty confident that we worked around most of them and sent bug reports for the others.

| ⚔ | ARSimulation | MARS |
|---|---|---|
| Claim | Non-invasive editor simulation backend for ARFoundation | Framework for simplified, flexible AR Authoring |
| Functionality | XR SDK plugin for Desktop: positional tracking simulation, touch input simulation, image tracking, ... | Wrapper around ARFoundation with added functionality: custom simulation window, object constraints and forces, editor simulation (including most of what ARSimulation can do), file system watchers, custom Editor handles, codegen, ... |
| Complexity | | |
| Changes to project | none | |
| Required changes | none | ARFoundation components need to be replaced with their MARS counterparts |

The following table compares ARSimulation and MARS with respect to in-editor simulation for features available in ARFoundation.

Note that MARS has a lot of additional tools and features (functionality injection, proxies, recordings, automatic placement of objects, constraints, ...) not mentioned here that might be relevant to your use case. See the MARS docs for additional features.

| ⚔ | ARSimulation Simulation Features | MARS Simulation Features |
|---|---|---|
| Plane Tracking | ✔️ | ✔️ |
| Touch Input | ✔️ | ❌¹ |
| Simulated Environments | (✔️)² | ✔️ |
| Device Simulator | ✔️ | ❌³ |
| Point Clouds | ✔️ | ✔️ |
| Image Tracking | ✔️ | ✔️ |
| Light Estimation Spherical Harmonics | ✔️ | ❌ |
| Anchors | ✔️ | ❌ |
| Meshing | (✔️) | ✔️ |
| Face Tracking | ❌ | (✔️)⁴ |
| Object Tracking | ✔️ | ❌ |
| Human Segmentation | ❌ | ❌ |

1 MARS uses Input.GetMouseButtonDown for editor input AND on-device input. This means: no testing of XR Interaction Toolkit features, no multitouch. You can see the (somewhat embarrassing) MARS input example at this Unity Forum link. ARSimulation supports full single-touch simulation in Game View and Device Simulator.

2 ARSimulation's plane shader doesn't support occlusion right now, which matches what ARFoundation shaders currently do (no occlusion). You can still use your own shaders that support occlusion (see AR Foundation samples/PlaneOcclusion scene)

3 MARS uses a custom "Device View", but doesn't support the Unity-provided Device Simulator package. This means you can't test your UIs with MARS with proper DPI settings (e.g. the typical use of Canvas: Physical Size).

4 MARS has a concept of Landmarks that are created from ARKit blendshapes and ARCore raw meshes, but no direct support for either.

Unfortunately, it seems nobody at Unity anticipated someone building custom XR providers in C# that are actually supposed to work in the Editor. It's only advertised as a "way to build custom C++ plugins".

This has led to funny situations where we reported bugs around usage in the Editor (e.g. of the ARFoundation Samples, XR Interaction Toolkit, and others), and Unity told us that these "don't matter since you can't use them in the Editor anyway". Well guys, we hope you now see why we were asking.

- Device Simulator has no way to do multitouch simulation (usually a must for any touch simulator). This means that multitouch gestures such as rotating don't work out of the box with ARFoundation in the Editor right now. We are currently using LeanTouch as a workaround, as it provides proper multitouch simulation support in both Game View and Device Simulator.

- Device Simulator does not support tapCount (is always 1). Case 1258166

- New Input System does not support tapCount (is always 0, even on devices). Case 1258165

- Device Simulator: Simulated touches oscillate between "stationary" and "moved" in Editor (no subpixel accuracy?). Case 1258158

- There are a number of warnings around subsystem usage in the Editor. They don't seem to matter much, but they are annoying (and incorrect).

- There's an open issue with Cubemap creation in Editor (necessary for Environment Probe simulation). Issue Tracker

- Device Simulator disables mouse input completely. We're working around that here, but be aware of it when you create Android/iOS apps that also support a mouse. Forum Thread

- In 2020.1 and 2020.2, even when you enable the "New Input System", the Input System package is not installed automatically; you have to install it manually via Package Manager. Forum Thread

- Switching from a scene with Object Tracking to a scene with Image Tracking crashes Android apps on device (we'll report a bug soon)

- XR Plugin Management versions 3.2.13 and older are buggy and lose settings sometimes. Please use XR Plugin Management 3.2.15 or greater.
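Because tapCount can't be trusted in the Editor right now (always 1 in Device Simulator, always 0 with the New Input System), one workaround is to count consecutive taps yourself from each tap's end time and position. The helper below is a hypothetical Python sketch; the 0.3 s interval and 20 px distance limits are assumed values you would tune for your app.

```python
class TapCounter:
    """Hypothetical helper: counts consecutive taps instead of relying on tapCount."""

    def __init__(self, max_interval=0.3, max_distance=20.0):
        self.max_interval = max_interval  # seconds allowed between taps (assumed)
        self.max_distance = max_distance  # pixels allowed between taps (assumed)
        self._count = 0
        self._last_time = None
        self._last_pos = None

    def register_tap(self, time, position):
        """Call once per completed tap (touch ended); returns the running tap count."""
        if self._last_time is None:
            self._count = 1
        else:
            dx = position[0] - self._last_pos[0]
            dy = position[1] - self._last_pos[1]
            close = dx * dx + dy * dy <= self.max_distance ** 2
            quick = time - self._last_time <= self.max_interval
            # Continue the sequence only if the tap is both quick and nearby.
            self._count = self._count + 1 if (close and quick) else 1
        self._last_time, self._last_pos = time, position
        return self._count
```

A double tap is then simply a register_tap call that returns 2, independent of what the current input backend reports.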

Since Unity still hasn't provided a viable solution for testing AR projects without building to devices, a number of interesting projects arose to overcome that, especially for remoting.

For our own projects, we found that device remoting is still too slow for continuous testing and experimentation, so we made ARSimulation.

Kirill Kuzyk recently released a great tool called AR Foundation Editor Remote which uses a similar approach and creates a custom, editor-only XR SDK backend that injects data received from remote Android and iOS devices.

Here's the AR Foundation Editor Remote forum thread.

Koki Ibukuro has also experimented with remoting data into the Unity editor for ARFoundation development. His plugin also supports background rendering.

It's available on GitHub: asus4/ARKitStreamer.

Unity Technologies is of course also experimenting with remoting, and currently has an internal Alpha version that is undergoing user testing.

Here's the forum thread for the upcoming Unity AR Remoting.

And of course there's MARS, the newly released $600/seat/year framework for simplified and flexible AR authoring. It's probably a great solution for enterprises, and has a ton of additional tooling that goes way beyond what ARFoundation provides. We were Alpha testers of MARS, and early on it became clear that it was not what many people believed it to be: a simple way to test your app without building to device. Here's the Forum section for MARS.

needle — tools for unity • @NeedleTools • @marcel_wiessler • @hybridherbst • Say hi!