Experiment on pulling NVTX3 via CPM #1002

base: branch-23.08

…into feature/polygon_distances

This PR requires adding the nvtx dependency to the conda environment.

…oid/cuspatial into benchmark/point_polygon_distance_dev

…into benchmark/point_polygon_distance_dev

@@ -198,6 +210,7 @@ target_compile_definitions(cuspatial PUBLIC "SPDLOG_ACTIVE_LEVEL=SPDLOG_LEVEL_${
# Specify the target module library dependencies
target_link_libraries(cuspatial PUBLIC cudf::cudf)
target_link_libraries(cuspatial PRIVATE nvtx3-cpp)

This is a PUBLIC dependency, not a PRIVATE one. The nvtx3 headers are included by the cuspatial public headers, so consumers of cuspatial will also need nvtx3.

This will resolve the test compilation failures.
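
A minimal sketch of the change this comment asks for, assuming the `nvtx3-cpp` target name from the diff above is kept. As the replies below note, this change on its own ran into a separate export problem.

```cmake
# Specify the target module library dependencies
target_link_libraries(cuspatial PUBLIC cudf::cudf)
# PUBLIC rather than PRIVATE: cuspatial's public headers include the nvtx3
# headers, so consumers need the nvtx3 include paths propagated to them too.
target_link_libraries(cuspatial PUBLIC nvtx3-cpp)
```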

We expected to need PUBLIC here and tried it but it caused another issue. @isVoid Can you provide the logs?

It looks like the nvtx3 project doesn't have install / export rules for the targets it creates. So you would get errors when trying to export the cuspatial targets, as they now depend on targets that have no way to be exported.

What we should do is specify DOWNLOAD_ONLY when getting nvtx3 and directly include nvtxImportedTargets.cmake ( https://github.com/NVIDIA/NVTX/blob/v3.1.0-c-cpp/CMakeLists.txt#L33 ).

We will also need to set up install rules for this file and the nvtx3 headers, plus, I expect, a custom FINAL_CODE_BLOCK for the rapids_export.

I expect that this is why previously cuspatial copied nvtx3 directly, as it dramatically simplified the build-system.
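
A rough sketch of the DOWNLOAD_ONLY idea, not the change actually made in this PR. It assumes plain `CPMAddPackage` is used (the thread does not show the real `rapids_cpm_find` call), a hypothetical package name `NVTX3`, and that `nvtxImportedTargets.cmake` sits under `c/` in the downloaded tree and defines the `nvtx3-cpp` target. The install rules and the `FINAL_CODE_BLOCK` mentioned above are left out.

```cmake
# Assumes CPM.cmake has already been included (for example via rapids-cmake).
CPMAddPackage(
  NAME NVTX3                     # hypothetical package name
  GIT_REPOSITORY https://github.com/NVIDIA/NVTX.git
  GIT_TAG v3.1.0-c-cpp           # tag taken from the link in the comment above
  DOWNLOAD_ONLY YES              # fetch the sources only; skip add_subdirectory()
)

if(NVTX3_ADDED)
  # Assumed location inside the NVTX source tree; verify against the tagged release.
  include("${NVTX3_SOURCE_DIR}/c/nvtxImportedTargets.cmake")
endif()

# Assumes the included file defines the nvtx3-cpp target used in the diff.
target_link_libraries(cuspatial PUBLIC nvtx3-cpp)
```

Because the targets are created by an included file rather than by the nvtx3 project's own build, cuspatial would have to ship that file and the headers itself, which is why the extra install rules are needed.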

This is exactly what we ran into. We tried setting it to PUBLIC and used rapids_cpm_find.

> The nvtx3 project doesn't have install / export rules for the targets they create it looks like.

Should we look into contributing to nvtx3 to provide install / export rules?

> Should we look into contributing to nvtx3 to provide install / export rules?

Yes but don't hold your breath for it to be put in a release anytime soon. It took them about 2 years to release the last merged feature I was waiting on. So you will probably need to take Rob's DOWNLOAD_ONLY suggestion as well.

…into benchmark/point_polygon_distance_dev

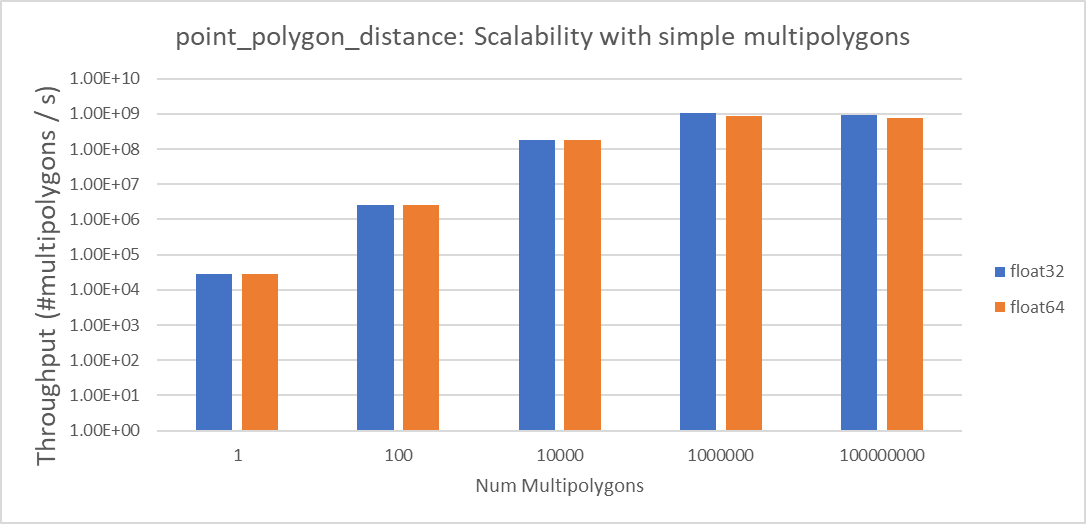

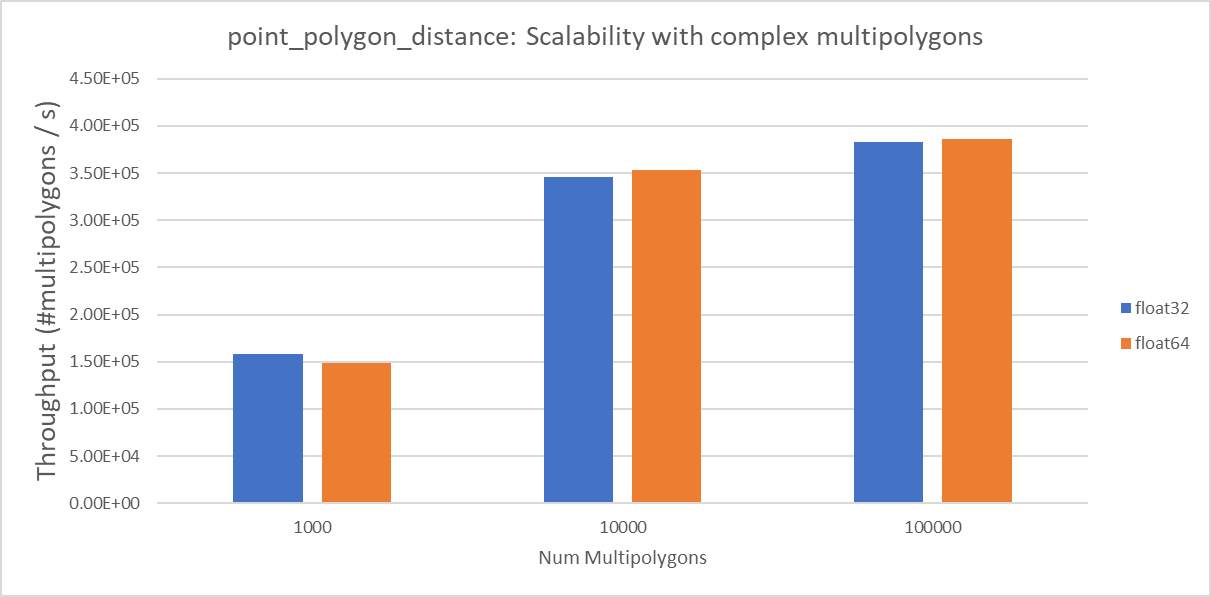

pairwise_point_polygon_distance benchmark

This PR separates the `pairwise_point_polygon_distance` benchmark portion of PR #1002, leaving that PR for the nvtx3 experiments only.

# Original PR description:

This PR adds a pairwise point polygon distance benchmark. Depends on #998

Point-polygon distance performance can be affected by many factors, because the geometry is complex in nature. I benchmarked these questions:

1. How does the algorithm scale with simple multipolygons?
2. How does it scale with complex multipolygons?

## How does the algorithm scale with simple multipolygons?

The benchmark uses the simplest multipolygon: 3 sides per polygon, 0 holes and 1 polygon per multipolygon.

Float32

| Num multipolygon | Throughput (#multipolygons / s) |
| --- | --- |
| 1 | 28060.32971 |
| 100 | 2552687.469 |
| 10000 | 186044781 |
| 1000000 | 1047783101 |
| 100000000 | 929537385.2 |

Float64

| Num multipolygon | Throughput (#multipolygons / s) |
| --- | --- |
| 1 | 28296.94817 |
| 100 | 2491541.218 |
| 10000 | 179379919.5 |
| 1000000 | 854678939.9 |
| 100000000 | 783364410.7 |

The chart shows that with simple polygons and simple multipoints (1 point per multipoint), the algorithm scales well. Throughput peaks at around 1M pairs.

## How does the algorithm scale with complex multipolygons?

The benchmark uses a complex multipolygon: 100 edges per ring, 10 holes per polygon and 3 polygons per multipolygon.

Float32

| Num multipolygon | Throughput (#multipolygons / s) |
| --- | --- |
| 1000 | 158713.2377 |
| 10000 | 345694.2642 |
| 100000 | 382849.058 |

Float64

| Num multipolygon | Throughput (#multipolygons / s) |
| --- | --- |
| 1000 | 148727.1246 |
| 10000 | 353141.9758 |
| 100000 | 386007.3016 |

The algorithm reaches maximum throughput at around 10K pairs, a saturation point about 100X lower than in the simple multipolygon example.

Authors:
- Michael Wang (https://github.com/isVoid)
- Mark Harris (https://github.com/harrism)

Approvers:
- Mark Harris (https://github.com/harrism)

URL: #1131

@isVoid is this ready to go into 23.06?

This PR successfully implemented NVTX from GitHub for cudf: rapidsai/cudf#15178. We can probably copy from it and start a new attempt at this for cuSpatial if desired.

Description

This PR experiments with pulling nvtx3 via CPM.

Checklist