Fast image style transfer using TensorFlow!

This repository implements both the "slow" iterative method described in "A Neural Algorithm of Artistic Style" by Gatys et al. and the "fast" feed-forward method described in "Perceptual Losses for Real-Time Style Transfer and Super-Resolution" by Johnson et al. The fast method includes several modifications, among them replication border padding and the instance normalization described in "Instance Normalization: The Missing Ingredient for Fast Stylization" by Ulyanov et al.
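Both methods rely on the content and style representations from Gatys et al.: content is compared directly on feature maps, and style is compared through Gram matrices of those feature maps. The following is a minimal pure-Python sketch of the per-layer losses from that paper, assuming each layer's features are flattened to an N x M list (N filters, M = height * width). It is an illustration of the technique, not the TensorFlow code in this repository, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # N rows (filters) x M columns (spatial positions)


def gram_matrix(features: Matrix) -> Matrix:
    """G[i][j] = sum_k F[i][k] * F[j][k]: correlations between filter responses."""
    n = len(features)
    return [
        [sum(a * b for a, b in zip(features[i], features[j])) for j in range(n)]
        for i in range(n)
    ]


def content_layer_loss(gen_features: Matrix, content_features: Matrix) -> float:
    """Gatys et al. content loss for one layer: 1/2 * sum (F - P)^2."""
    return 0.5 * sum(
        (f - p) ** 2
        for f_row, p_row in zip(gen_features, content_features)
        for f, p in zip(f_row, p_row)
    )


def style_layer_loss(gen_features: Matrix, style_features: Matrix) -> float:
    """Gatys et al. style loss for one layer: 1 / (4 N^2 M^2) * sum (G - A)^2."""
    n_filters = len(gen_features)    # N: number of feature maps in the layer
    map_size = len(gen_features[0])  # M: height * width of each feature map
    g = gram_matrix(gen_features)
    a = gram_matrix(style_features)
    squared = sum(
        (g[i][j] - a[i][j]) ** 2 for i in range(n_filters) for j in range(n_filters)
    )
    return squared / (4.0 * n_filters ** 2 * map_size ** 2)
```

For example, `gram_matrix([[1.0, 2.0], [3.0, 4.0]])` gives `[[5.0, 11.0], [11.0, 25.0]]`. Because the Gram matrix sums over spatial positions, it discards where features occur and keeps only which features co-occur, which is why it captures texture rather than layout.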

To run, download the pretrained VGG-19 network into models/vgg and download the COCO dataset. Then edit the parameters in train.py (they should be self-explanatory) and run python3 train.py. To run the iterative method instead, uncomment the relevant sections of the code as indicated by the comments.

Note: Only minimal hyperparameter tuning was performed. You can probably get much better results by tuning the parameters (e.g. the loss function coefficients, or which layers are used and how they are weighted).
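The loss function coefficients appear in the example captions as Content:Style:Denoise ratios. A minimal sketch of how such coefficients typically combine into one training objective follows. It assumes that "Denoise" refers to a total variation penalty, a common smoothing term in fast style transfer; that assumption, the squared-difference form of the penalty, and the function names are illustrative and not taken from train.py.

```python
from typing import List


def total_variation(image: List[List[float]]) -> float:
    """Assumed denoising term: squared differences between neighboring pixels."""
    h, w = len(image), len(image[0])
    vertical = sum(
        (image[y + 1][x] - image[y][x]) ** 2 for y in range(h - 1) for x in range(w)
    )
    horizontal = sum(
        (image[y][x + 1] - image[y][x]) ** 2 for y in range(h) for x in range(w - 1)
    )
    return vertical + horizontal


def total_loss(
    content_loss: float,
    style_loss: float,
    tv_loss: float,
    content_weight: float = 5.0,   # defaults mirror the "Content 5:Style 85:Denoise 5" caption
    style_weight: float = 85.0,
    denoise_weight: float = 5.0,
) -> float:
    """Weighted sum of the three loss terms; only the weight ratios affect the balance."""
    return (
        content_weight * content_loss
        + style_weight * style_loss
        + denoise_weight * tv_loss
    )
```

Raising the style weight relative to the content weight pushes the output toward the style image's texture at the cost of content fidelity, while a larger denoising weight smooths out high-frequency artifacts but can blur fine detail.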

Implementation details:

- ELU instead of ReLU (experimental)

- Resize convolution layers instead of transpose convolution layers (experimental)

- VGG-19 with the content and style layers specified by Gatys et al.

- Instance normalization for all convolutional layers, including those in residual blocks

- The output tanh activation is scaled by 150, shifted by 127.5 to center it on the middle of the pixel range, and clipped to [0, 255]

- Border replication padding (reflection padding is planned)

- Batch size of 16 for 2 epochs (~10,000 iterations)
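Several of the details above are small enough to sketch directly. The pure-Python functions below illustrate them on single-channel images stored as nested lists; they are hypothetical helpers, not the repository's TensorFlow layers. Two assumptions are labeled: the "resize" step of a resize convolution is shown as nearest-neighbor upsampling (the README does not say which interpolation is used), and the iteration count assumes a training set of 82,783 images (the COCO 2014 train split), which the README does not specify.

```python
import math
from typing import List

Image = List[List[float]]  # one channel, rows x columns


def scaled_tanh_output(x: float) -> float:
    """Output activation: 150 * tanh(x) + 127.5, clipped to the pixel range [0, 255]."""
    return min(255.0, max(0.0, 150.0 * math.tanh(x) + 127.5))


def instance_norm(channel: List[float], eps: float = 1e-5) -> List[float]:
    """Normalize one channel of ONE image over its spatial positions (no learned scale/shift)."""
    mean = sum(channel) / len(channel)
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    return [(v - mean) / math.sqrt(var + eps) for v in channel]


def replicate_pad(image: Image, pad: int) -> Image:
    """Border replication padding: repeat the edge pixels `pad` times on every side."""
    rows = [[row[0]] * pad + list(row) + [row[-1]] * pad for row in image]
    top = [list(rows[0]) for _ in range(pad)]
    bottom = [list(rows[-1]) for _ in range(pad)]
    return top + rows + bottom


def nearest_upsample(image: Image, factor: int) -> Image:
    """Assumed 'resize' step of a resize convolution; a regular convolution would follow."""
    out: Image = []
    for row in image:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def training_iterations(num_images: int, batch_size: int, epochs: int) -> int:
    """Optimizer steps per run, counting the final partial batch of each epoch."""
    return epochs * math.ceil(num_images / batch_size)
```

Because tanh lies in (-1, 1), the scaled output spans roughly (-22.5, 277.5), so the clip saturates only near the extremes. Unlike batch normalization, `instance_norm` computes its statistics per image, which is what Ulyanov et al. found helps stylization. Under the stated dataset-size assumption, `training_iterations(82783, 16, 2)` evaluates to 10348, consistent with the "~10,000 iterations" figure.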

Example results (the numbers are the content, style, and denoising loss weights):

White Line, Fast, Content 5:Style 85:Denoise 5

Udnie, Fast, Content 5:Style 85:Denoise 1

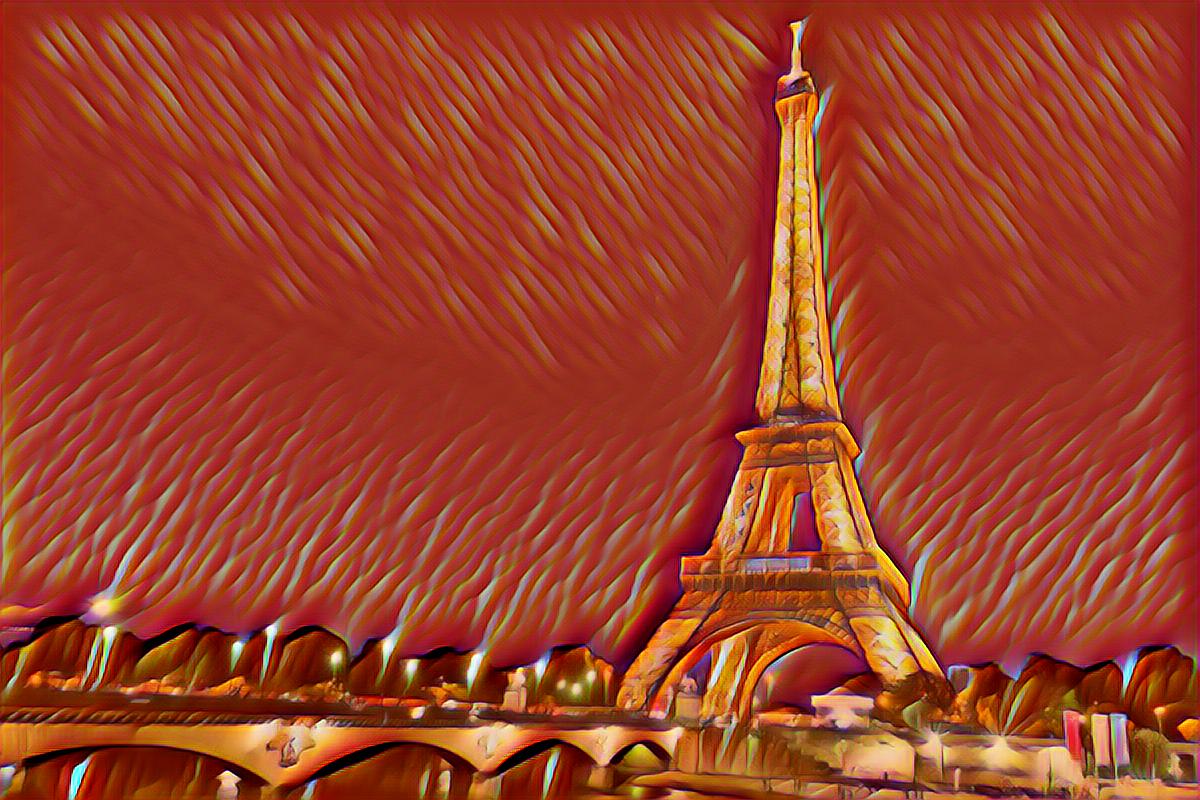

Red Canna, Fast, Content 5:Style 85:Denoise 5

Udnie, Fast, Content 5:Style 50:Denoise 50 (ReLU, Transpose Convolution)

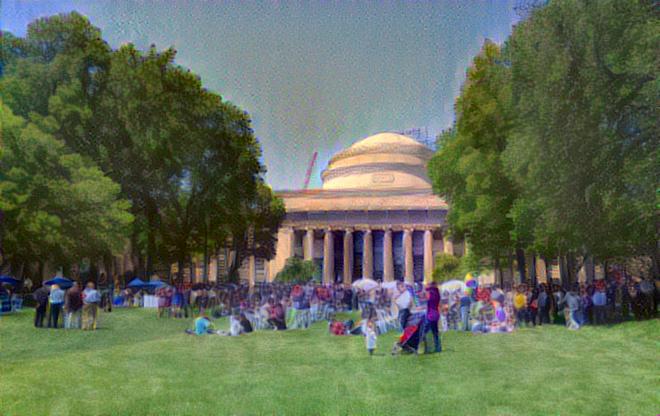

A Sunday on La Grande Jatte, Slow, Content 0.005:Style 1:Denoise 1

Playing around with patterns: