A Python 3 ROS package for lidar-based off-road mapping.

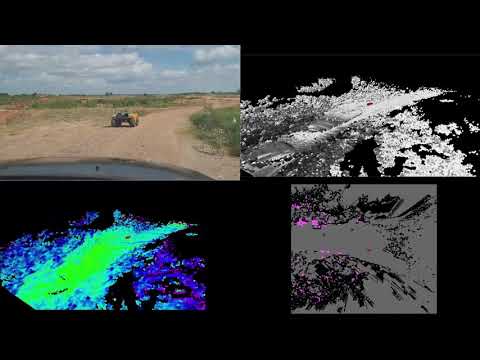

G-VOM is a local 3D voxel mapping framework for off-road path planning and navigation. It provides hard and soft positive obstacle detection, negative obstacle detection, slope estimation, and roughness estimation. By using a 3D array lookup-table data structure and leveraging the GPU, it achieves online performance.
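As a rough illustration of why a dense 3D array works as a lookup table, the sketch below bins lidar points into voxels on the CPU with NumPy. The function `voxelize` and its parameters (`origin`, `voxel_size`, `dims`) are hypothetical; G-VOM itself does its processing on the GPU through Numba CUDA, and its actual per-voxel data layout is defined in gvom.py.

```python
import numpy as np


def voxelize(points, origin, voxel_size, dims):
    """Count lidar returns per voxel in a dense 3D array (illustrative sketch, not G-VOM's code)."""
    # Convert world coordinates to integer voxel indices relative to the map origin.
    idx = np.floor((np.asarray(points) - origin) / voxel_size).astype(np.int64)
    # Keep only points that fall inside the map bounds.
    inside = np.all((idx >= 0) & (idx < np.asarray(dims)), axis=1)
    idx = idx[inside]
    # Dense grid: reading or updating any voxel is a direct array index.
    grid = np.zeros(dims, dtype=np.int32)
    # np.add.at accumulates correctly when several points land in the same voxel.
    np.add.at(grid, (idx[:, 0], idx[:, 1], idx[:, 2]), 1)
    return grid
```

Because every voxel has a fixed array index, a lookup is a constant-time indexing operation, at the cost of allocating memory for the whole map volume.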

We implemented G-VOM on three vehicles that were tested at the Texas A&M RELLIS campus: two Clearpath Robotics vehicles, a Warthog and a Moose, and a Polaris Ranger. For sensing, we used an Ouster OS1-64 lidar on the Warthog and an Ouster OS1-128 lidar on the Moose and the Ranger. On all vehicles, the system ran on a laptop with an Nvidia Quadro RTX 4000 GPU and an Intel i9-10885H CPU, achieving a mapping rate of 9 to 12 Hz.

The video below shows autonomous operation on the Warthog at 4 m/s.

For more detailed results see the paper.

- Ubuntu 20.04 (64-bit).
- Python 3.6 or later.
- Numba with CUDA support (follow the Numba Installation and Numba CUDA guides).
- ROS Noetic (see ROS Installation). Note: ROS is only needed to run gvom_ros.py.

The system is implemented as a class in gvom.py, which exposes three public functions: class initialization, process_pointcloud, and combine_maps.

Class initialization sets all parameters for the class. A description of each parameter is provided in gvom.py.

process_pointcloud takes the pointcloud, the ego position, and optionally a transform matrix. It imports the pointcloud, processes it into a voxel map, and then adds the map to the buffer. The transform matrix is required if the pointcloud is not in the world frame, since all map processing is done in the world frame.
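The frame change that the transform matrix enables can be sketched as a 4x4 homogeneous transform applied to an (N, 3) point array. This assumes a sensor-to-world matrix in homogeneous form; `to_world_frame` is a hypothetical helper, and the exact matrix convention process_pointcloud expects should be checked in gvom.py.

```python
import numpy as np


def to_world_frame(points, transform):
    """Apply a 4x4 homogeneous sensor-to-world transform to an (N, 3) point array (sketch)."""
    points = np.asarray(points, dtype=np.float64)
    # Append a column of ones so the translation part of the matrix is applied.
    homogeneous = np.hstack([points, np.ones((points.shape[0], 1))])
    # Points are stored as rows, so multiply by the transpose: p_world^T = p_sensor^T @ T^T.
    return (homogeneous @ np.asarray(transform, dtype=np.float64).T)[:, :3]
```

For example, a sensor rotated 90 degrees about z and offset by (1, 2, 0) maps the sensor-frame point (1, 0, 0) to the world point (1, 3, 0).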

combine_maps takes no inputs and processes all maps in the buffer into a set of 2D output maps. The outputs are the map origin, positive obstacle map, negative obstacle map, roughness map, and visibility map.
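To show only the general idea of collapsing buffered 3D maps into 2D, here is a sketch that merges buffered hit-count grids and projects them to a per-column max-height map. It does not reproduce G-VOM's obstacle, roughness, or visibility computations; `combine_and_project`, `voxel_size`, and `origin_z` are hypothetical, and all buffered grids are assumed to share one origin and shape.

```python
import numpy as np


def combine_and_project(buffer, voxel_size, origin_z):
    """Merge buffered hit-count grids into a 2D max-height map (illustrative only)."""
    # Sum hit counts from every buffered map into a single 3D grid.
    merged = np.sum(np.stack(buffer), axis=0)
    occupied = merged > 0
    nz = merged.shape[2]
    # Index of the highest occupied voxel in each (x, y) column, or -1 if the column is empty.
    top = np.where(
        occupied.any(axis=2),
        nz - 1 - np.argmax(occupied[:, :, ::-1], axis=2),
        -1,
    )
    # Convert to the world height of that voxel's top face; NaN marks columns with no returns.
    return np.where(top >= 0, origin_z + (top + 1) * voxel_size, np.nan)
```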

Note that process_pointcloud and combine_maps can be run asynchronously. Multiple sensors can each call process_pointcloud; however, we recommend a buffer size greater than twice the number of sensors.
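A minimal sketch of the producer/consumer pattern described here, assuming a fixed-size buffer that drops its oldest map when full. `MapBuffer`, `push`, and `snapshot` are hypothetical names, not part of gvom.py. One consequence of a fixed size is that maps from one sensor can evict older maps from another, so the size is tied to the sensor count.

```python
import threading
from collections import deque


class MapBuffer:
    """Hypothetical fixed-size, thread-safe buffer of per-scan maps (not G-VOM's class)."""

    def __init__(self, num_sensors, size=None):
        # Default follows the README's advice: more than twice the number of sensors.
        self.size = size if size is not None else 2 * num_sensors + 1
        self._maps = deque(maxlen=self.size)  # oldest map is dropped when full
        self._lock = threading.Lock()

    def push(self, voxel_map):
        # Called by each sensor's producer thread, analogous to process_pointcloud.
        with self._lock:
            self._maps.append(voxel_map)

    def snapshot(self):
        # Called by the consumer, analogous to combine_maps. Copying under the lock
        # lets producers keep pushing while the copy is processed.
        with self._lock:
            return list(self._maps)
```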

An example ROS implementation is provided in gvom_ros.py. It subscribes to a PointCloud2 message and an Odometry message. Additionally, it requires a tf tree between the “odom_frame” and the PointCloud2 message’s frame. It’s assumed that the Odometry message is in the “odom_frame”.
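The tf lookup between the “odom_frame” and the PointCloud2 frame yields a translation and a quaternion, which have to become a matrix before they can serve as a transform. A sketch of that conversion, assuming a 4x4 homogeneous matrix and ROS's (x, y, z, w) quaternion order; `transform_to_matrix` is a hypothetical helper, and gvom_ros.py may use a library routine instead.

```python
import numpy as np


def transform_to_matrix(translation, quaternion):
    """Build a 4x4 homogeneous matrix from a translation (x, y, z) and a
    unit quaternion in ROS order (x, y, z, w)."""
    x, y, z, w = quaternion
    # Standard unit-quaternion to rotation-matrix formula.
    rot = np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ])
    T = np.eye(4)
    T[:3, :3] = rot
    T[:3, 3] = translation
    return T
```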

    @INPROCEEDINGS{overbye2021gvom,
      author={Overbye, Timothy and Saripalli, Srikanth},
      booktitle={2022 IEEE Intelligent Vehicles Symposium (IV)},
      title={G-VOM: A GPU Accelerated Voxel Off-Road Mapping System},
      year={2022},
      volume={},
      number={},
      pages={1480-1486},
      doi={10.1109/IV51971.2022.9827107}}