OpenXR: Virtual Reality Rendering #115


I would love to support XR. I've never personally written code in that space, but I have a general idea of a few ways it could integrate with Bevy. Does OpenXR interact directly with render APIs (e.g. Vulkan / OpenGL), or is it abstract (in a way that would let us use the Bevy render API directly)? If it needs direct Vulkan access, then we can either:

My preference would be to do this through wgpu, to reduce complexity on our end (and also improve the wgpu ecosystem).

Probably should be done upstream: gfx-rs/gfx#3219

Yeah, it needs direct Vulkan access. It should really be done upstream. This means that Bevy should only expose the gfx XR API after gfx adds support.

I want to point out that once gfx-hal supports OpenXR, you'd have to create a wgpu native extension as well (the web won't get this). Keep in mind we'd need some way of turning wgpu native extensions on and off in Bevy. Current extensions can be found here:
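One way to picture "turning native extensions on and off" is an opt-in bitmask that the engine checks against what the backend actually supports, treating native-only features as unavailable on the web. The sketch below is hypothetical: `NativeFeatures`, `RenderSettings` and `resolve_features` are illustrative names, not wgpu's or Bevy's actual API (wgpu itself exposes optional capabilities as a `Features` bitflag set).

```rust
// Hypothetical sketch: names are illustrative, not wgpu or Bevy API.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct NativeFeatures(u32);

impl NativeFeatures {
    pub const NONE: Self = NativeFeatures(0);
    pub const XR: Self = NativeFeatures(1 << 0); // hypothetical native-only XR extension
    pub const MULTIVIEW: Self = NativeFeatures(1 << 1); // hypothetical

    pub fn union(self, other: Self) -> Self {
        NativeFeatures(self.0 | other.0)
    }
    /// Features in `self` that are not in `other`.
    pub fn difference(self, other: Self) -> Self {
        NativeFeatures(self.0 & !other.0)
    }
    pub fn is_empty(self) -> bool {
        self.0 == 0
    }
}

/// Hypothetical engine setting: which optional features the app opts into.
pub struct RenderSettings {
    pub requested: NativeFeatures,
}

/// Returns the requested features if all are available, otherwise the missing ones.
/// Native-only extensions are treated as never available on the web.
pub fn resolve_features(
    settings: &RenderSettings,
    adapter_supported: NativeFeatures,
    is_web: bool,
) -> Result<NativeFeatures, NativeFeatures> {
    let available = if is_web { NativeFeatures::NONE } else { adapter_supported };
    let missing = settings.requested.difference(available);
    if missing.is_empty() {
        Ok(settings.requested)
    } else {
        Err(missing)
    }
}

fn main() {
    let settings = RenderSettings { requested: NativeFeatures::XR };
    let native = NativeFeatures::XR.union(NativeFeatures::MULTIVIEW);
    println!("{:?}", resolve_features(&settings, native, false)); // native build
    println!("{:?}", resolve_features(&settings, native, true)); // web build
}
```

Returning the missing set in the error lets the engine either fail loudly or fall back to a non-XR path; which policy fits is a design decision this sketch leaves open.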

gfx-rs/gfx#3219 mentions that the API could potentially be based on WebXR, which means it could also target the web without needing separate native and web extensions.

The wgpu API will be based on WebXR; the gfx one is going to be based on OpenXR.

Is this a work in progress? Can I study the code, and perhaps even make a pull request?

I could be wrong about this, but I've been in the community for a while and haven't seen anyone actively moving it forward. I imagine you could take it if you wanted.

I, personally, am interested in this if only because I hope it'll get us better spatial audio support, which I think is a bigger deal in VR than it is in most desktop/mobile games.

Be prepared to draw up an RFC first, though, as that seems to be the direction we're headed in. Alternatively, you may be able to knock together a renderer similar to what bevy_webgl2 did, though at some point there'll probably be a bureaucratic hurdle to overcome. :)

I've been working on this for a while, continuing what @amberkowalski started on the gfx side of things, but I'm new to Rust, Vulkan and OpenXR. Here's what I have so far: https://github.com/agrande/gfx/tree/gfx-xr. Not quite there yet, but I'm actively working on it.

I am not experienced in OpenXR, but I have some OpenVR experience. I would like to see a channel or other place for discussion, if that's possible, as I am also in the position of wanting to contribute but not having enough experience to feel able to lead the effort. If we can coordinate, we can hopefully make some progress without too much duplication of effort.

OK, so now we have an XR channel on Discord to coordinate the effort 🙌

Nice. I'm afraid I can't help, as I currently don't have access to a VR headset anymore.


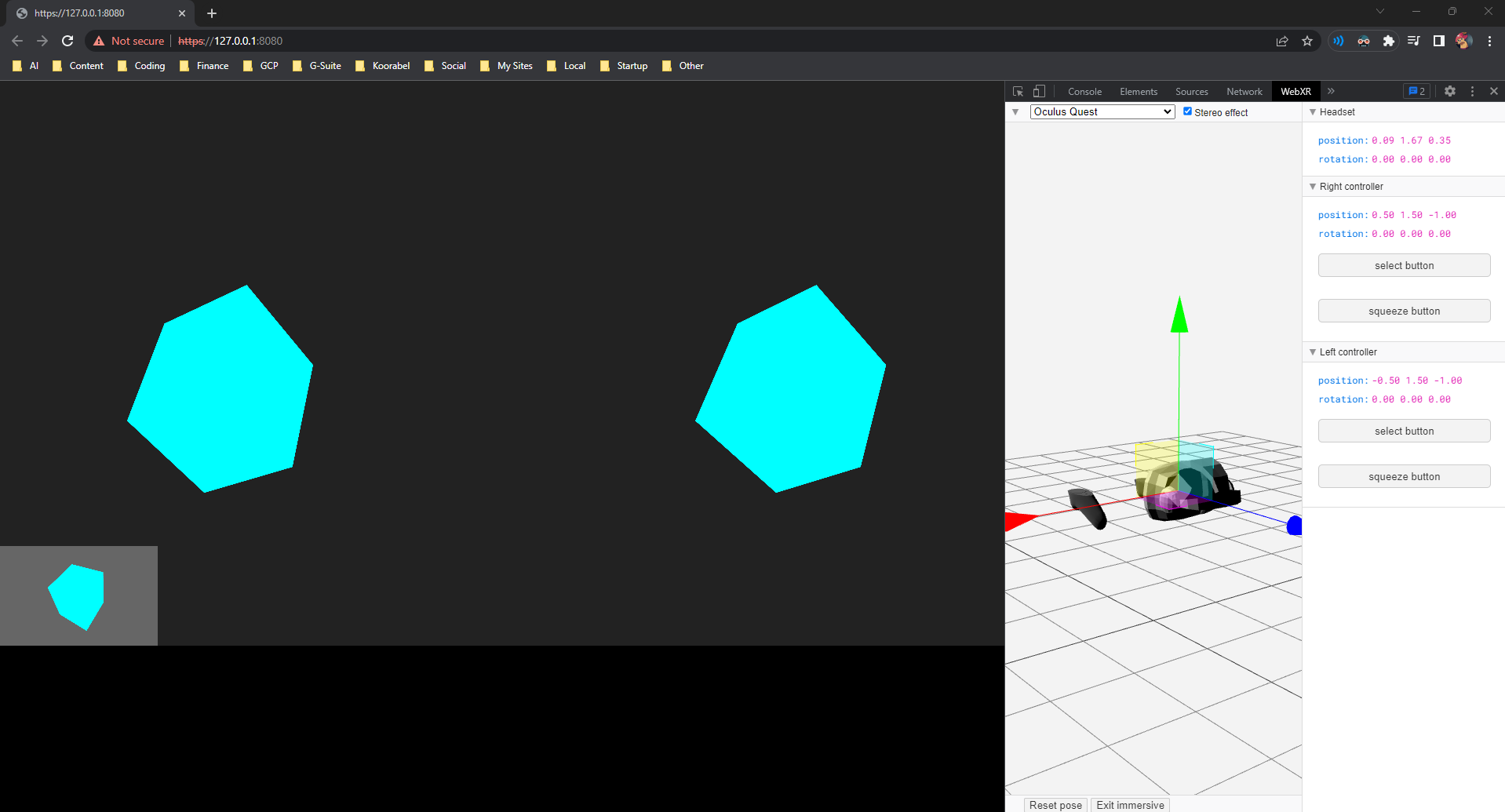

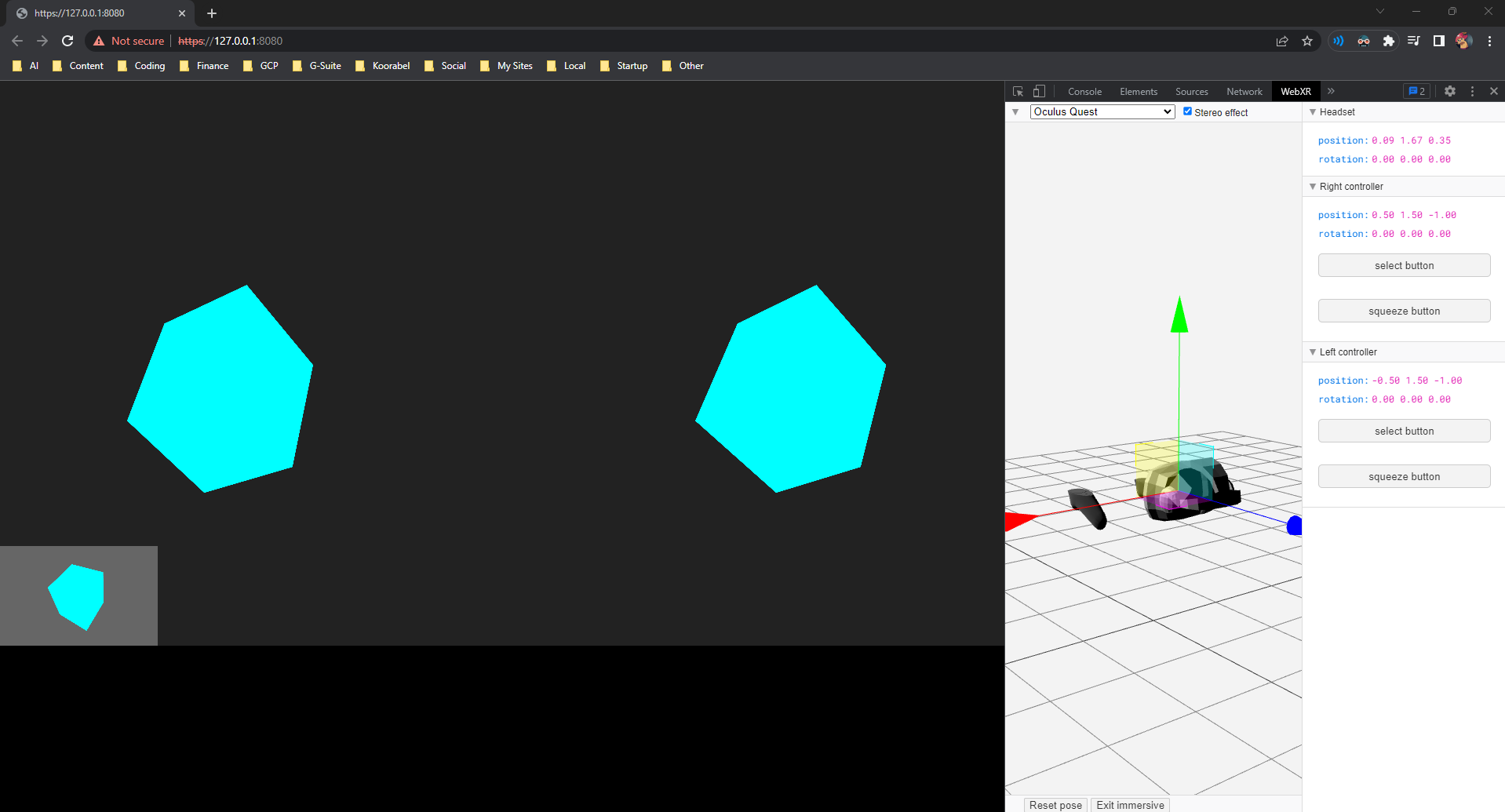

I've created a proof-of-concept (but functional) Bevy–OpenXR integration that works on Oculus Quest 2, and most probably on PC with Monado as well. See https://github.com/blaind/xrbevy.

Love to see this integration! As someone who watched Amethyst closely and is now here, I really love VR dev. I'd be happy to test on SteamVR and contribute as needed.

For the record, there's some work being done in PR #2319 to support XR in Bevy.

I'm wondering if there's been any progress on WebXR?


Edit: here's a summary of bevy xr forks I've come across with my understanding of the difference in features:


# Objective

We can currently set `camera.target` to either an `Image` or a `Window`. For OpenXR & WebXR, we need to be able to render to a `TextureView`. This partially addresses #115, as with this addition we can create internal and external XR crates.

## Solution

A `TextureView` item is added to the `RenderTarget` enum. It holds an id which is looked up in a `ManualTextureViews` resource, much like how `Assets<Image>` works. I believe this approach was first used by @kcking in their [xr fork](https://github.com/kcking/bevy/blob/eb39afd51bcbab38de6efbeeb0646e01e2ce4766/crates/bevy_render/src/camera/camera.rs#L322). The only change is that a `u32` is used to index the textures, as `FromReflect` does not support `uuid` and I don't know how to implement that.

---

## Changelog

### Added

Render: Added `RenderTarget::TextureView` as a `camera.target` option, enabling rendering directly to a `TextureView`.

## Migration Guide

References to the `RenderTarget` enum will need to handle the additional variant, e.g. in `match` statements.

---

## Comments

- The [wgpu work](gfx-rs/wgpu@c039a74) done by @expenses allows us to create framebuffer texture views from `wgpu v0.15, bevy 0.10`.
- I got the WebXR techniques from the [xr fork](https://github.com/dekuraan/xr-bevy) by @dekuraan.
- I have tested this with a WIP [external webxr crate](https://github.com/mrchantey/forky/blob/018e22bb06b7542419db95f5332c7684931c9c95/crates/bevy_webxr/src/bevy_utils/xr_render.rs#L50) on an Oculus Quest 2.

---------

Co-authored-by: Carter Anderson
Co-authored-by: Paul Hansen
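To make the lookup scheme concrete, here is a simplified, standalone model of a render target enum gaining a `TextureView` variant whose `u32` handle is resolved through a `ManualTextureViews`-style map. It is a hypothetical sketch, not Bevy's actual code: the `Window` and `Image` payloads are `u32` stand-ins for Bevy's real window and image handle types, and `TextureView` here is a plain struct rather than a wgpu texture view.

```rust
use std::collections::HashMap;

// Simplified, hypothetical stand-ins; not Bevy's real types.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub struct ManualTextureViewHandle(pub u32);

#[derive(Debug, Clone, PartialEq)]
pub struct TextureView {
    pub label: String,
    pub width: u32,
    pub height: u32,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum RenderTarget {
    Window(u32), // stand-in for a window reference
    Image(u32),  // stand-in for an image asset handle
    TextureView(ManualTextureViewHandle), // the new variant
}

/// Maps `u32`-based handles to externally created views,
/// analogous to how `Assets<Image>` maps image handles to images.
#[derive(Default)]
pub struct ManualTextureViews(HashMap<ManualTextureViewHandle, TextureView>);

impl ManualTextureViews {
    pub fn insert(&mut self, handle: ManualTextureViewHandle, view: TextureView) {
        self.0.insert(handle, view);
    }
    pub fn get(&self, handle: &ManualTextureViewHandle) -> Option<&TextureView> {
        self.0.get(handle)
    }
}

/// Every `match` on `RenderTarget` now has to handle the `TextureView` variant.
pub fn describe(target: &RenderTarget, views: &ManualTextureViews) -> String {
    match target {
        RenderTarget::Window(id) => format!("window {id}"),
        RenderTarget::Image(id) => format!("image {id}"),
        RenderTarget::TextureView(handle) => match views.get(handle) {
            Some(v) => format!("texture view '{}' ({}x{})", v.label, v.width, v.height),
            None => format!("missing texture view {}", handle.0),
        },
    }
}

fn main() {
    let mut views = ManualTextureViews::default();
    // An XR integration would register the view it wants the camera to render into.
    let view = TextureView { label: "left eye".into(), width: 1024, height: 1024 };
    views.insert(ManualTextureViewHandle(7), view);
    let target = RenderTarget::TextureView(ManualTextureViewHandle(7));
    println!("{}", describe(&target, &views));
}
```

Keeping the views in a separate keyed resource means the enum stays a cheap `Copy` value, while the XR code is free to swap in a different view each frame under the same handle.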

Much more recent, actively developed XR plugin for Bevy: https://github.com/awtterpip/bevy_openxr. It is largely built off the work listed above <3. It currently targets main, but should be usable with Bevy 0.12 in a couple of weeks.

Hi! This project sounds awesome. I just discovered it and wanted to talk about XR support.

One could assume that this could be implemented as an external module. However, the OpenXR library creates one or more render targets (for Vulkan/GL/...) based on the physical XR hardware. Therefore, the XR module needs to talk to the rendering module. As a consequence, a few generic changes to the rendering module may be required, changes that are most probably not specific to OpenXR. That's why it's reasonable to talk about this now.

I'd like to look into OpenXR, which already has Rust bindings. However, the architecture should probably be prototyped collaboratively, as I don't know all of Bevy's internals yet. I'll read the Bevy documentation and then come back to this issue.

I'd be happy to hear your thoughts on this! Cheers
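The dependency described above, where the XR runtime owns the images and the renderer must draw into targets it did not create, can be sketched as two small traits and a per-frame loop. Everything here is hypothetical and heavily simplified: `XrSwapchainSource`, `ExternalTargetRenderer` and `render_xr_frame` are illustrative names, not OpenXR-binding or Bevy APIs, and a real OpenXR frame loop also involves frame timing and view poses that this sketch omits.

```rust
/// A render target handed out by the XR runtime for one view (e.g. one eye) in one frame.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct XrViewTarget {
    pub view_index: u32,
    pub image_index: u32,
    pub width: u32,
    pub height: u32,
}

/// Hypothetical XR-side interface: hand out runtime-owned images, take them back.
pub trait XrSwapchainSource {
    fn view_count(&self) -> u32;
    fn acquire(&mut self, view_index: u32) -> XrViewTarget;
    fn release(&mut self, target: XrViewTarget);
}

/// Hypothetical generic renderer hook: draw into a target created elsewhere.
pub trait ExternalTargetRenderer {
    fn render_into(&mut self, target: &XrViewTarget);
}

/// One frame: for each view, acquire the runtime's image, render into it, release it.
pub fn render_xr_frame<S: XrSwapchainSource, R: ExternalTargetRenderer>(
    xr: &mut S,
    renderer: &mut R,
) -> u32 {
    let mut rendered = 0;
    for view_index in 0..xr.view_count() {
        let target = xr.acquire(view_index);
        renderer.render_into(&target);
        xr.release(target);
        rendered += 1;
    }
    rendered
}

/// Mock runtime: two views, each cycling through a small ring of images.
pub struct MockXr {
    pub images_per_view: u32,
    pub frame: u32,
    pub released: Vec<XrViewTarget>,
}

impl XrSwapchainSource for MockXr {
    fn view_count(&self) -> u32 {
        2
    }
    fn acquire(&mut self, view_index: u32) -> XrViewTarget {
        let image_index = self.frame % self.images_per_view;
        XrViewTarget { view_index, image_index, width: 1024, height: 1024 }
    }
    fn release(&mut self, target: XrViewTarget) {
        self.released.push(target);
        // Advance to the next frame once the last view has been released.
        if target.view_index + 1 == self.view_count() {
            self.frame += 1;
        }
    }
}

/// Mock renderer that records what it drew into.
pub struct LogRenderer {
    pub log: Vec<String>,
}

impl ExternalTargetRenderer for LogRenderer {
    fn render_into(&mut self, target: &XrViewTarget) {
        self.log.push(format!("view {} -> image {}", target.view_index, target.image_index));
    }
}

fn main() {
    let mut xr = MockXr { images_per_view: 3, frame: 0, released: Vec::new() };
    let mut renderer = LogRenderer { log: Vec::new() };
    for _ in 0..2 {
        render_xr_frame(&mut xr, &mut renderer);
    }
    for line in &renderer.log {
        println!("{line}");
    }
}
```

The only thing the renderer needs in this model is `render_into` on an externally supplied target, which matches the point above that the required rendering changes are generic rather than OpenXR-specific.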
