-

Notifications

You must be signed in to change notification settings - Fork 162

806: Apache Arrow Min Max Statistics Hint

The latest pg2arrow and arrow_fdw of PG-Strom supports to embed min/max values of fields for each record-batch.

This statistics performs as a hint, when we scan the Apache Arrow file, to skip record-batches that contains no rows to the given condition clause.

Its typical use case is time series log-data processing because its workloads are likely INSERT-only (suitable to Apache Arrow), and neighbor rows tend to have similar values. Its basic idea was come from BRIN Indexes (Block Range Index) of PostgreSQL.

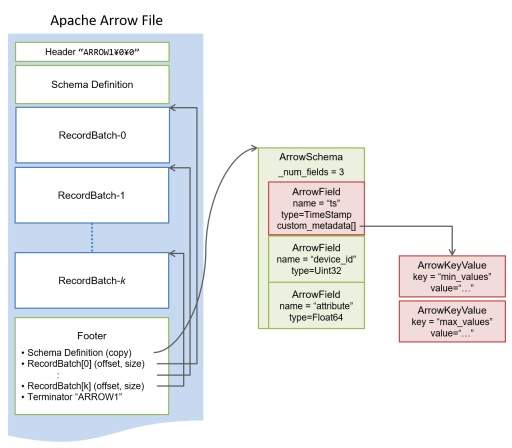

This min/max statistics are embedded in Apache Arrow files using custrom_metadata[] array of Field, so it never breaks any data portability of the files.

This min/max statistics are embedded in using custom_metadata[] attribute of Arrow::Field structure.

As literal, it allows to have custom key-value pairs for each Arrow::Field.

We use min_values and max_values as name of the custom_metadata[] keys, and its values are comma separated integer values that reflects the binary form of the relevant Field. Even if Field is not Integer (like Floating-Point), we use integer representation because it makes no sense to have human-readable form here.

This data representation is fully legal to Apache Arrow file format. Even if we generate an Apache Arrow file with min/max statistics, PyArrow can successfully read the file than print the custom_metadata[] as a part of schema definition. It simply ignores the min/max statistics on data processing.

$ python3

Python 3.6.8 (default, Aug 24 2020, 17:57:11)

[GCC 8.3.1 20191121 (Red Hat 8.3.1-5)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import pyarrow as pa

>>> X = pa.RecordBatchFileReader('/dev/shm/flineorder_sort.arrow')

>>> X.schema

lo_orderkey: decimal(30, 8)

lo_linenumber: int32

lo_custkey: decimal(30, 8)

lo_partkey: int32

lo_suppkey: decimal(30, 8)

lo_orderdate: int32

-- field metadata --

min_values: '19920101,19920919,19930608,19940223,19941111,19950730,1996' + 31

max_values: '19920919,19930608,19940223,19941111,19950730,19960417,1997' + 31

lo_orderpriority: fixed_size_binary[15]

lo_shippriority: fixed_size_binary[1]

lo_quantity: decimal(30, 8)

lo_extendedprice: decimal(30, 8)

lo_ordertotalprice: decimal(30, 8)

lo_discount: decimal(30, 8)

lo_revenue: decimal(30, 8)

lo_supplycost: decimal(30, 8)

lo_tax: decimal(30, 8)

lo_commit_date: fixed_size_binary[8]

lo_shipmode: fixed_size_binary[10]

-- schema metadata --

sql_command: 'SELECT * FROM lineorder_sort'

Right now, only pg2arrow supports to embed the min/max statistics.

The --stat option of pg2arrow enables the min/max statistics on the specified column.

Below is an example of pg2arrow. It dumps the lineorder_sort table into the specified Apache Arrow file with min/max statistics on the specified lo_orderdata column.

$ pg2arrow -d postgres -o /dev/shm/flineorder_sort.arrow -t lineorder_sort --stat=lo_orderdate --progress

RecordBatch[0]: offset=1640 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[1]: offset=268438720 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[2]: offset=536875800 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[3]: offset=805312880 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[4]: offset=1073749960 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[5]: offset=1342187040 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[6]: offset=1610624120 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[7]: offset=1879061200 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[8]: offset=2147498280 length=268437080 (meta=920, body=268436160) nitems=1303085

RecordBatch[9]: offset=2415935360 length=55668888 (meta=920, body=55667968) nitems=270231

The source table is preliminary sorted by lo_orderdate, so the database system returns rows from older to newer ones.

In the results, rows in a particular record batch tend to have close values for each.

Below is the raw dump of the generated Apache Arrow file.

$ pg2arrow --dump /dev/shm/flineorder_sort.arrow

[Footer]

{Footer: version=V4, schema={Schema: endianness=little, fields=[

{Field: name="lo_orderkey", nullable=true, type={Decimal: precision=30, scale=8}, children=[], custom_metadata=[]},

{Field: name="lo_linenumber", nullable=true, type={Int32}, children=[], custom_metadata=[]},

{Field: name="lo_custkey", nullable=true, type={Decimal: precision=30, scale=8}, children=[], custom_metadata=[]},

{Field: name="lo_partkey", nullable=true, type={Int32}, children=[], custom_metadata=[]},

{Field: name="lo_suppkey", nullable=true, type={Decimal: precision=30, scale=8}, children=[], custom_metadata=[]},

{Field: name="lo_orderdate", nullable=true, type={Int32}, children=[], custom_metadata=[

{KeyValue: key="min_values" value="19920101,19920919,19930608,19940223,19941111,19950730,19960417,19970103,19970922,19980609"},

{KeyValue: key="max_values" value="19920919,19930608,19940223,19941111,19950730,19960417,19970103,19970922,19980609,19980802"}]},

{Field: name="lo_orderpriority", nullable=true, type={FixedSizeBinary: byteWidth=15}, children=[], custom_metadata=[]},

:

<snip>

You can find out only lo_orderdate has min_values and max_values attributes.

Their first element introduces the min-/max-value of the first record-batch (min: 19920101 and max: 19920919), and the second one also introduces the min-/max-value of the second record-batch.

You can specify multiple columns in --stat option as a comma separated name list.

However, we recommend to choose the target column carefully, because the min/max statistics grows up the metadata size then leads slow down of metadata parsing.

See PG-Strom Manual::Apache Arrow for the information of Apache Arrow support in PG-Strom.

When we scan on a foreign-table by Arrow_Fdw on behalf of the Apache Arrow files with the min/max statistics, it fetches the min_values and max_values attributes if any (and cached in the binary form), then it also checks WHERE-clause whether it fits the min/max statistics hints.

For example, if WHERE-clause contains lo_orderdate BETWEEN 19940101 AND 19940630, no rows shall survive from a record-batch with max-value less than 19940101, or a record-batch with min-value larger than 19940630. So, Arrow_Fdw checks the statistics hint when it fetches record-batches for each, and simply skipps junk ones.

The EXPLAIN ANALYZE result below shows how min/max statistis hints works.

postgres=# EXPLAIN ANALYZE

SELECT count(*) FROM flineorder_sort

WHERE lo_orderpriority = '2-HIGH'

AND lo_orderdate BETWEEN 19940101 AND 19940630;

QUERY PLAN

------------------------------------------------------------------------------------------

Aggregate (cost=33143.09..33143.10 rows=1 width=8) (actual time=115.591..115.593 rows=1loops=1)

-> Custom Scan (GpuPreAgg) on flineorder_sort (cost=33139.52..33142.07 rows=204 width=8) (actual time=115.580..115.585 rows=1 loops=1)

Reduction: NoGroup

Outer Scan: flineorder_sort (cost=4000.00..33139.42 rows=300 width=0) (actual time=10.682..21.555 rows=2606170 loops=1)

Outer Scan Filter: ((lo_orderdate >= 19940101) AND (lo_orderdate <= 19940630) AND (lo_orderpriority = '2-HIGH'::bpchar))

Rows Removed by Outer Scan Filter: 2425885

referenced: lo_orderdate, lo_orderpriority

Stats-Hint: (lo_orderdate >= 19940101), (lo_orderdate <= 19940630) [loaded: 2, skipped: 8]

files0: /dev/shm/flineorder_sort.arrow (read: 217.52MB, size: 2357.11MB)

Planning Time: 0.210 ms

Execution Time: 153.508 ms

(11 rows)

postgres=# SELECT count(*) FROM flineorder_sort

WHERE lo_orderpriority = '2-HIGH'

AND lo_orderdate BETWEEN 19940101 AND 19940630;

count

--------

180285

(1 row)

The Stats-Hint line shows Arrow_Fdw could utilize the statistics by the WHERE-clause: (lo_orderdate >= 19940101), (lo_orderdate <= 19940630), then its fetches 2 record-batches that may contain any valid rows, but skipped 8 record-batches.

After thatm the Outer Scan Filter of GpuPreAgg removed 2425885 rows come from the two record batches.

We can disable the min/max statistis hint using arrow_fdw.stats_hint_enabled options.

It shows the query execution plan without min/max statistis hints.

postgres=# SET arrow_fdw.stats_hint_enabled = off;

SET

postgres=# EXPLAIN ANALYZE

SELECT count(*) FROM flineorder_sort

WHERE lo_orderpriority = '2-HIGH'

AND lo_orderdate BETWEEN 19940101 AND 19940630;

QUERY PLAN

------------------------------------------------------------------------------------------

Aggregate (cost=33143.09..33143.10 rows=1 width=8) (actual time=185.985..185.986 rows=1 loops=1)

-> Custom Scan (GpuPreAgg) on flineorder_sort (cost=33139.52..33142.07 rows=204 width=8) (actual time=185.974..185.979 rows=1 loops=1)

Reduction: NoGroup

Outer Scan: flineorder_sort (cost=4000.00..33139.42 rows=300 width=0) (actual time=10.626..100.734 rows=11997996 loops=1)

Outer Scan Filter: ((lo_orderdate >= 19940101) AND (lo_orderdate <= 19940630) AND (lo_orderpriority = '2-HIGH'::bpchar))

Rows Removed by Outer Scan Filter: 11817711

referenced: lo_orderdate, lo_orderpriority

files0: /dev/shm/flineorder_sort.arrow (read: 217.52MB, size: 2357.11MB)

Planning Time: 0.186 ms

Execution Time: 231.942 ms

(10 rows)

postgres=# SELECT count(*) FROM flineorder_sort

WHERE lo_orderpriority = '2-HIGH'

AND lo_orderdate BETWEEN 19940101 AND 19940630;

count

--------

180285

(1 row)

Even though the final result is identical, GpuPreAgg does not show the Stats-Hint line, and increase the rows removed by outer scan filter: 11,817,711 from 2,425,885 when the min/max statistics is enabled. It means Arrow_Fdw loaded junk record-batches, transferred to GPU, then removed all the rows.

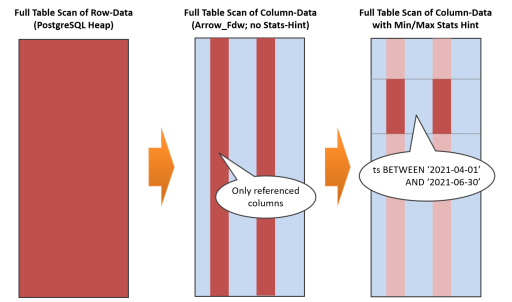

Apache Arrow is column-oriented structured data format, so we can optimize amount of data transfer rather than the row-data like PostgreSQL Heap-tables. In addition, the min/max statistics allows to reduce vertical scale of data transfer, if range of the table scan is enough predictible.

Typically, we expect this feature is valuable for time-series log-data processing that focus on a particular time range.