
Hypervisor Memory overcommit tests

This is an area where VMware ESXi really shines, because there is no hard limit on the memory available to VMs.

Host/guest hardware is the same as in Simple MySQL benchmarks:

Host Hardware:

- CPU: AMD Athlon(tm) 64 X2 Dual Core Processor 3800+

- MB: MS-7250

- 6GB RAM

- 200GB SATA Maxtor disk

Guest Hardware:

- 1xCPU

- 1GB RAM

- 10GB virtual disk

- 1x Bridged Network

Test description:

- cloned the above VMs with MariaDB benchmarks (on ESXi: Export OVF Template and Deploy OVF Template... instead of cloning), see Simple MySQL benchmarks for details.

- all VMs have 1GB of RAM allocated

- all VMs run the following command to keep memory active:

```shell
while true; do ./test-ATIS; done
```

Notes:

- please see XenServer-7.4 for how I imported one VM backup 5 times.

| Hypervisor | Number of running 1GB VMs | Notes |

|---|---|---|

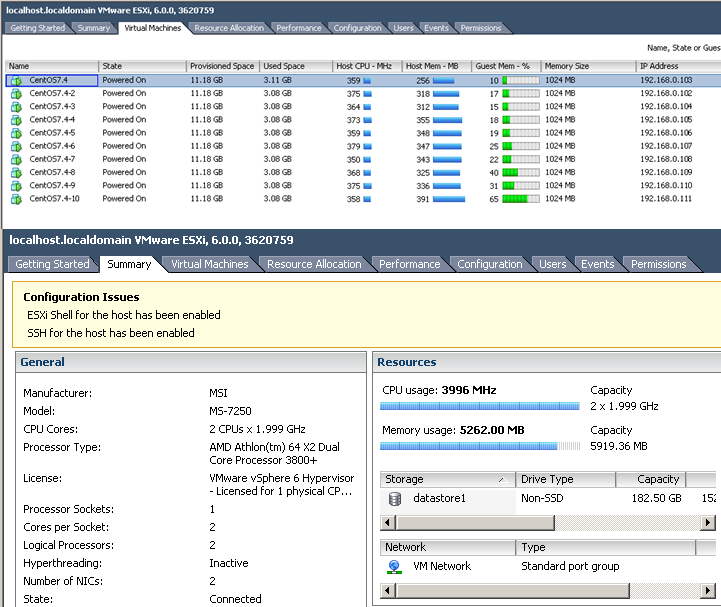

| ESXi 6u2 | 10* | |

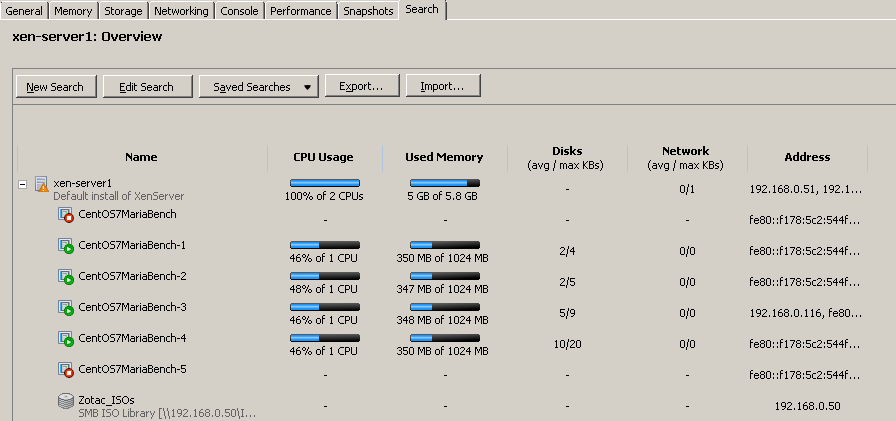

| XenServer 7.4.0 | 4 | You get Not enough server memory is available to perform this operation

|

| oVirt 4.2 | Pending | |

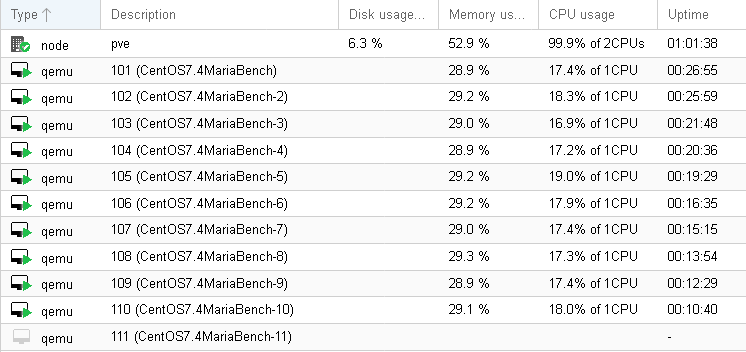

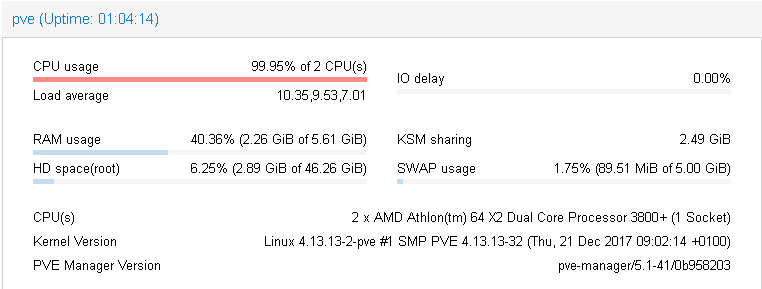

| Proxmox VE 5.1 | 10+ | clear winner - only 40% memory used, KSM saved 2.5GB(!) |

*) ESXi has no hard limit on VM memory versus free physical memory. I did not start more VMs because I ran out of CPU power (all test-ATIS scripts are CPU bound). However, according to the ESXi host summary, memory usage was approaching all of the physical RAM.
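To put the table in perspective, a useful figure is the overcommit ratio: total RAM allocated to guests divided by host physical RAM. The sketch below is a hypothetical helper (`overcommit_pct` is not part of any hypervisor tool); it uses plain integer shell arithmetic and ignores hypervisor and dom0 overhead.

```shell
#!/bin/sh
# Hypothetical helper: memory overcommit in percent,
# i.e. RAM allocated to all guests divided by host physical RAM.
# Integer arithmetic, so the result is truncated.
overcommit_pct() {
    vms=$1      # number of running VMs
    vm_mb=$2    # RAM allocated to each VM, in MB
    host_mb=$3  # host physical RAM, in MB
    echo $(( vms * vm_mb * 100 / host_mb ))
}

overcommit_pct 10 1024 6144   # 10 x 1GB VMs on the 6GB host (ESXi, Proxmox VE) -> 166
overcommit_pct 4 1024 6144    # 4 x 1GB VMs (XenServer) -> 66
```

In other words, ESXi and Proxmox VE ran with roughly 1.66x more guest RAM than physically installed, while XenServer refused to start VMs well below 100%.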

However, the total number of running VMs strongly depends on the workload, namely on how large a portion of guest memory is actively used. In a real production environment, the actively used portion is usually smaller than in this test.
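The dependence on active memory can be illustrated with a deliberately simplified model: assume only a given percentage of each guest's RAM is actively touched and the rest can be reclaimed by the hypervisor. The helper `max_vms` and the 50% figure below are illustrative assumptions, not measured values; page sharing (KSM, TPS) and hypervisor overhead are ignored.

```shell
#!/bin/sh
# Hypothetical model: how many VMs fit into host RAM if only
# active_pct percent of each guest's memory must stay resident.
# Ignores page sharing and hypervisor overhead; active_pct must be 1..100.
max_vms() {
    host_mb=$1     # host physical RAM, in MB
    vm_mb=$2       # RAM allocated to each VM, in MB
    active_pct=$3  # percentage of guest RAM actively used
    echo $(( host_mb * 100 / (vm_mb * active_pct) ))
}

max_vms 6144 1024 100   # every guest page active -> 6
max_vms 6144 1024 50    # half of guest memory active -> 12
```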

The lack of a hard limit is the reason why VMware laughs at the Dynamic Memory feature of other hypervisors: https://blogs.vmware.com/virtualreality/2011/02/hypervisor-memory-management-done-right.html. What good is Dynamic Memory when you simply cannot start a VM because a few megabytes of free physical memory are missing, and you have no control over how much memory the hypervisor allocates there? As you can see, this never happens in ESXi.

It was a bit of a surprise, but even KVM/QEMU can overcommit memory very well. Combined with KSM, it outperformed even ESXi (and a single VM's CPU ran 2 times faster than on ESXi). However, XFS barriers had to be disabled (otherwise nearly all VMs in the test would spend most of their time stuck inserting data).
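To check how much KSM is saving on a KVM host such as Proxmox VE, the Linux kernel exposes counters under `/sys/kernel/mm/ksm`. According to the kernel's KSM documentation, `pages_sharing` counts how many additional pages are backed by already shared pages, so `pages_sharing` times the page size approximates the saved RAM. The sketch below assumes that sysfs interface is present; `ksm_saved_mb` is a hypothetical helper name.

```shell
#!/bin/sh
# Estimate RAM saved by KSM (Kernel Samepage Merging) on a Linux/KVM host.
# pages_sharing * page size approximates the memory saved by merging.
ksm_saved_mb() {
    pages_sharing=$1
    page_size=$2   # bytes, typically 4096 on x86_64
    echo $(( pages_sharing * page_size / 1024 / 1024 ))
}

KSM=/sys/kernel/mm/ksm
if [ -r "$KSM/pages_sharing" ]; then
    echo "KSM run state: $(cat "$KSM/run")"   # 1 means merging is active
    echo "Saved: $(ksm_saved_mb "$(cat "$KSM/pages_sharing")" "$(getconf PAGESIZE)") MB"
else
    echo "KSM sysfs interface not found" >&2
fi
```

For scale, the 2.5GB saving reported for Proxmox VE corresponds to about 655360 shared 4 KiB pages (`ksm_saved_mb 655360 4096` prints 2560).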

My only (slightly) witty remark is about the Web UI's similarity to the XenCenter client, but that is probably OK (I did not understand why the tab was called "Search" until I found the same tab in XenCenter, which additionally offers Export/Import of search queries).

Copyright © Henryk Paluch. All rights reserved.